Engadget has been testing and reviewing consumer tech since 2004. Our stories may include affiliate links; if you buy something through a link, we may earn a commission.

We're not getting Luke Skywalker's prosthetics any time soon

You've got about as good a chance of inventing a lightsaber.

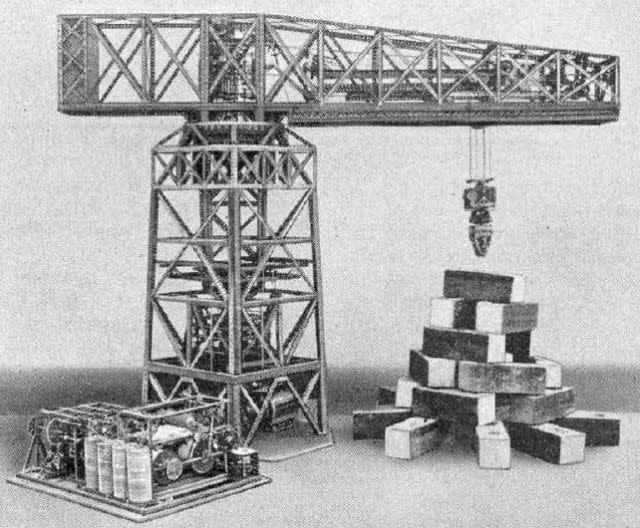

In 1937, robot hobbyist "Bill" Griffith P. Taylor of Toronto invented the world's first industrial robot. It was a crude machine, dubbed the Robot Gargantua (PDF, Pg 172) by its creator. The crane-like device was powered by a single electric motor and controlled via punched paper tape, which threw a series of switches controlling each of the machine's five axes of movement. Still, it could stack wooden blocks in preprogrammed patterns, an accomplishment that Meccano Magazine, an English monthly hobby magazine from the era, hailed as "a Wells-ian vision of 'Things to Come' in which human labor will not be necessary in building up the creations of architects and engineers."

The robot Gargantua - credit: Meccano Magazine

In the 80 years since, Gargantua's progeny have revolutionized how we work. They're now staples in agriculture, automotive, construction, mining, transportation and material handling. According to the International Federation of Robotics, the United States employs 152 robots for every 10,000 manufacturing employees -- though that lags behind South Korea's 437, Japan's 323 and Germany's 282.

Over the past two decades, this push toward automation helped boost US manufacturing output by almost 40 percent, an added value worth $2.4 trillion annually. Despite losing roughly 5.6 million manufacturing jobs between 2000 and 2010 -- only 15 percent of which were the result of international trade -- America's manufacturing base still produces more products (and more valuable products) than ever.

But as efficient as these machines are, they still can't hold a candle (literally) to the dexterity, sensitivity and gripability of the human hand. Take the industrial robots that work on automotive-manufacturing lines, for example. Their jobs consist almost exclusively of coarse assembly work -- lifting body panels into place, spray painting and welding -- while final assembly tasks, like wiring and installing interior panels, fall to more dextrous humans.

But what if these industrious automatons were able to perform the tasks that only humans can currently do? What if we could design a robotic hand that's just as good as a biological one? A number of researchers around the world are looking to do just that.

The primary challenges hampering the creation of a truly human-like robotic hand can be broken down into three distinct parts: the dexterity and strength of the physical hand itself; its sensitivity; and the system that controls it. Dexterity, when it comes to robot hands, determines how readily the hand can manipulate the objects around it.

Sensation, meanwhile, refers to how responsive the hand is to stimuli, and grip strength determines whether the robot can hold onto whatever it's trying to grab without crushing it. All three elements must operate in unison if the overall system is to work efficiently.

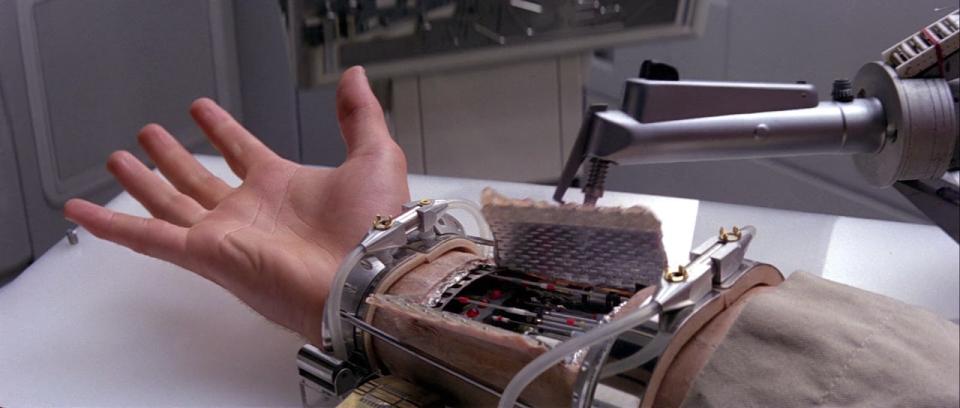

Like this, but with robots.

The human hand's dexterity -- specifically, the opposable thumb -- has proved nearly as important to our evolution as our cognitive abilities, according to researchers from Yale University, and could potentially impart the same benefits to robot-kind. However, giving mechanical grippers the flexibility and adaptability of the human hand has been incredibly difficult and expensive.

That's why the vast majority of current robotic manipulators still use the two-pronged pincer system or are highly specialized for a single task. "You can get a relatively simple gripper that's inexpensive, or you could get a very dextrous hand for two orders of magnitude more," said Jason Wheeler, a roboticist at Sandia Labs who helped develop the hand used by Robonaut 2 aboard the International Space Station. "And there aren't a whole lot of options in between."

There are a number of engineering limitations slowing the development of truly dextrous robot hands. First, modern actuators -- the components that activate the fingers to open and close -- are still pricey and often prohibitively heavy. This limits the degrees of freedom (DoF) a robo-hand can have. The human hand has 27 DoF: four in each finger, five in the thumb and another six in the wrist. As Wheeler points out, if you want 27 DoF in a robot hand, you're going to need 27 individual actuators, as well as somewhere to put them.
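The 27 DoF figure is simply the sum of the per-joint counts above, and Wheeler's actuator math follows directly from it. Here's a back-of-the-envelope sketch that assumes a fully actuated design with exactly one actuator per DoF; the function names are illustrative, and real hands may route tendons, linkages or paired actuators differently.

```python
# Tally of the human hand's degrees of freedom (DoF), using the breakdown
# quoted in the article: four per finger, five in the thumb, six in the wrist.
FINGERS = 4            # index, middle, ring and little fingers
DOF_PER_FINGER = 4
DOF_THUMB = 5
DOF_WRIST = 6


def total_dof() -> int:
    """Sum the DoF of the four fingers, the thumb and the wrist."""
    return FINGERS * DOF_PER_FINGER + DOF_THUMB + DOF_WRIST


def actuators_needed(dof: int, actuators_per_dof: int = 1) -> int:
    """Assumption: a fully actuated hand dedicates actuators_per_dof motors to each DoF."""
    return dof * actuators_per_dof


if __name__ == "__main__":
    dof = total_dof()
    print(f"Hand DoF: {dof}")                                     # Hand DoF: 27
    print(f"Actuators, fully actuated: {actuators_needed(dof)}")  # Actuators, fully actuated: 27
```

Setting `actuators_per_dof=2` models designs that pair two opposing actuators per joint, the way muscles work in antagonistic pairs, which doubles the count to 54 and makes the packaging problem even worse.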

"Most of the muscles for the human hand are up in the forearm, so if you're designing a forearm to go with it, you can put most of those actuators up there," he explained.

What's more, Wheeler continued, "the force capability and the force density of human muscle is still outpacing what we can do with electromagnetic actuators, so the size and weight of the hand and the force and torque are limited because we don't have actuators that can match human muscles."

Simply put, the more flexible the robo-hand, the weaker it is going to be, because we can't pack as much strength into the same amount of space as human muscles can. Plus, the more components you pack into a robotic hand, the more often things will wear down, fail and require service.

Despite these challenges, a number of public and private research groups are working to improve the strength and dexterity of robot hands. The Sandia Hand from Sandia National Labs, which was born out of DARPA's Revolutionizing Prosthetics program, is one such example. It was initially designed as a stand-in for IED (improvised explosive device) disposal technicians so they wouldn't have to don advanced bomb suits and put themselves in harm's way.

In this case, anthropomorphic function was a key design point because the robot hand had to operate in a chaotic real-world environment rather than on an automotive assembly line or in a warehouse. "Because of the number and diversity of those tasks that were required and they were all things that humans would normally do, having an anthropomorphic hand made sense," Wheeler explained.

But even with a high degree of dexterity, robotic hands can't achieve the same level of performance as biological ones if they don't possess a sense of touch. The human hand is jam-packed with roughly 17,000 touch receptors that allow it to recognize not only that it is physically touching an object, but also to sense that object's weight, firmness, shape, temperature and texture nearly instantly.

"What your hand can sense is a combination of the vibrations when you stroke the object, the deformation of your fingertip, which informed shear and roll forces, as well as points of contact, like the curvature of an object," said Gerald Loeb, CEO and co-founder of robotics startup Syntouch. "You can also tell how heat is exchanged between your hand and the object."

Our sense of touch is what enables us to feel when a glass is slipping from our grasp and tighten our grip without squeezing hard enough to shatter it. Not so with robotic hands, as Dr. Ravi Balasubramanian of Oregon State University explained in a recent interview. "Even if there are 10 or 20 sensors on a robot hand, the designer will be extremely nervous when it is interacting with the outside world."

Part of this concern with operating outside of lab conditions grew out of DARPA's 2006 attempt to develop more functional prosthetics. Dubbed "Revolutionizing Prosthetics," this program effectively sought to build a prosthetic arm as capable as Luke Skywalker's from The Empire Strikes Back. DARPA highlighted the gap between the current state of robotics and what science fiction imagines is possible "and said 'these should do everything that that does,' essentially, everything that a human hand should do," Loeb explained.

But the problem is that a decade ago, the state of the art was still quite crude. "They weren't just providing crude information, they're providing the wrong information," Loeb said. The early touch sensors employed in the DARPA program were measuring force and profilometry, which, as the name implies, physically maps a surface's profile. It's the same premise as pin art impression boards. "That's interesting," Loeb quipped, "but not actually what human hands do."

As such, Loeb and his team at Syntouch have set about tackling the challenge of getting all that sensory information from a single device that's the same size as a fingertip and robust enough to be used in the real world. The result is a proprietary system called the BioTac Toccare. The BioTac's hardware is an anthropomorphic mechanical finger.

All of the tactile sensors are embedded where the bone would be and are surrounded by an elastic skin. The beauty of this setup is that the external skin can easily and inexpensively be replaced when it wears down without having to swap out the sensors, which makes it far more durable than other similar systems.

The raw data generated by the BioTac are then interpreted by Syntouch's software, called the Syntouch Standard. It's a lot like the conventional RGB standard, but for tactile data. "Developing a number of quantifiable dimensions [of touch] ... allows us to quantify each of the critical elements that make up what something feels like," Loeb explained. The standard measures 15 metrics in all, from coarseness and resistance to thermal cooling and adhesiveness.

And while this may sound like science fiction, the company has already integrated its tactile sensors into prosthetic arms. "We at Syntouch have been focusing on reflex loops," Loeb said. These loops cover actions like picking up a glass or grabbing your phone without crushing it: all the stuff you do without actively thinking about it.

With funding from the Congressionally Directed Medical Research Programs, Syntouch is developing those reflexive subroutines, putting them in arms and giving the prosthetics to military veterans, who take them home and try them out in real-world situations before keeping the arm long-term (basically a try-before-you-buy situation). "Our preliminary work at our facilities has shown that amputees respond very well to having a reliable grasp," Loeb said. "It's convenient, it doesn't distract them, and they've been really happy with it."
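At its simplest, a grip reflex is a feedback rule: sense the object starting to slip, squeeze a little harder, but never hard enough to crush it. Syntouch hasn't detailed its own approach here, so what follows is only a generic textbook-style sketch, not the company's method. It assumes the fingertip can report a normal (squeezing) force and a shear (sliding) force, and it uses a basic friction model in which an object stays put while shear stays below a friction coefficient times the grip force. The function name, friction coefficient, safety margin and force cap are all hypothetical, illustrative values.

```python
def reflex_grip_force(normal_n: float, shear_n: float,
                      mu: float = 0.5, margin: float = 1.3,
                      max_force_n: float = 20.0) -> float:
    """Return an updated grip (normal) force in newtons.

    Friction-cone heuristic (an illustrative assumption, not Syntouch's method):
    at a contact, an object stays put while shear <= mu * normal. Keep the grip
    at least `margin` times above that slip threshold, but cap it at
    max_force_n so a fragile object like a glass isn't crushed.
    """
    required = margin * shear_n / mu      # smallest "safe" grip force for this load
    if normal_n >= required:
        return normal_n                   # already secure: don't squeeze harder
    return min(required, max_force_n)     # tighten, but never past the cap


if __name__ == "__main__":
    # A glass being filled: the sliding (shear) load rises step by step.
    grip = 2.0
    for shear in (0.5, 1.0, 2.0, 10.0):
        grip = reflex_grip_force(grip, shear)
        print(f"shear={shear:4.1f} N -> grip={grip:5.2f} N")
```

The cap is the crux of the trade-off: once the hand hits it, the rule can no longer add a safety margin, and a heavy enough load will slip anyway. Human hands also detect slip from fingertip vibrations before forces change much, which a force-only rule like this one doesn't capture.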

Training artificial hands to react reflexively has proved surprisingly difficult. "If you are holding a glass and it begins slipping, we don't know how to mimic that in a robot hand," Balasubramanian said. This is a symptom of a larger roadblock in the development of human-like robotic hands: our general inability to control them, especially when they're used as prosthetics.

For pure robotic applications, command and control is fairly straightforward. If you want the hand to have a certain number of degrees of freedom, perform a dextrous task or incorporate sensory-feedback data, you simply increase the processing power of the computer controlling it. Even in applications where physical space, high-bandwidth feedback or computational power is limited, there are technical workarounds.

For example, Robonaut 2, which is serving aboard the ISS, is "under-actuated," meaning it has more joints than it can actively control. By coupling these extra joints to actively controlled ones in preprogrammed ways, its hands gain relative dexterity without needing more processing power. Still, as Wheeler points out, "there's not a lot of value in adding more than three or four degrees of freedom per finger unless you have a really good, smart way to control them. So for most applications, some of those more subtle degrees of freedom used for very fine dextrous tasks, we don't have the capability."

For tasks that do require a high level of dextrous control, teleoperation systems like Shadow Robot's "cyberglove" can control the hand remotely. The glove uses motion capture to track the user's hand motions precisely and relays those commands to the robot hand, which mimics them.
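Under-actuation is easier to picture with a concrete example. The sketch below is a hypothetical finger, not Robonaut 2's actual design: a single actuator drives three joints through fixed, preprogrammed coupling ratios, the way a tendon or linkage might. The class name, ratios and joint limits are made-up illustrative values.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CoupledFinger:
    """Hypothetical under-actuated finger: three joints, one actuator.

    Each joint angle is a fixed, preprogrammed fraction of the actuator's
    travel, clamped to that joint's mechanical range. All numbers are
    illustrative assumptions, not Robonaut 2 specifications.
    """
    ratios: tuple = (1.0, 0.8, 0.6)          # degrees of joint motion per degree of actuator travel
    limits_deg: tuple = (90.0, 100.0, 80.0)  # upper mechanical limit for each joint

    def joint_angles(self, actuator_deg: float) -> list:
        # One command in, three joint angles out: the coupling, not extra
        # motors or extra control channels, spreads the motion across joints.
        return [
            max(0.0, min(limit, ratio * actuator_deg))
            for ratio, limit in zip(self.ratios, self.limits_deg)
        ]


if __name__ == "__main__":
    finger = CoupledFinger()
    for cmd in (0.0, 50.0, 200.0):
        print(cmd, finger.joint_angles(cmd))
```

The payoff is that the controller only has to manage one channel per finger instead of three. The cost is that the joints can't move independently: a fixed ratio like this can't, for instance, let the fingertip keep curling around an object after the base joint is blocked, which is why many real under-actuated hands add springs or differential mechanisms to the coupling.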

Unfortunately, when it comes to using robotic hands as prosthetics, neither of these solutions is really feasible right now. "The single biggest issue with a prosthetic hand is control," Wheeler explained. "Right now, there's almost no sensory feedback. And there's only two to three control channels at the most." The limiting factor, as he put it, boils down to one question: "How [do] you get the command signal and sensory feedback information back and forth?" That's where neural interfaces come in.

Elon Musk, for one, has been making headlines in recent weeks touting a partial brain-machine interface (BMI) that, when it is released in the next four to five years, could be used to treat Parkinson's, depression and epilepsy. However, he is far from the first to work on bridging the divide between robotic hardware and the squishy bio-computers that reside in our skulls.

In fact, there have been a number of significant developments over the past 20 years for connecting neural interfaces to prosthetics. In 2015, researchers from the EPFL created the e-Dura, an implantable "bridge" that physically crosses the severed point of a patient's spinal cord. Last November, the EPFL improved upon that design with a wireless version that "uses signals recorded from the motor cortex of the brain to trigger coordinated electrical stimulation of nerves in the spine that are responsible for locomotion," David Borton, assistant professor of engineering at Brown University, said in a press statement.

This is very similar to the system that a University of California, Irvine, team used to partially restore a paralyzed man's ability to walk. And just last week, researchers at Radboud UMC in the Netherlands wired up a Bluetooth connection to an amputee's arm stump to create the world's first "click-on" prosthetic.

But don't get too excited for our Ghost in the Shell future, at least not yet. While robotic hand fabrication techniques and cutting-edge sensory capabilities are beginning to put these devices on equal footing with their human counterparts, the ability to integrate them into biological systems and seamlessly control them remains in its infancy. Even though Luke Skywalker's robo-hand has been a cornerstone of the science-fiction universe since The Empire Strikes Back, don't expect it to become part of scientific reality for at least another couple of decades.

Welcome to Tomorrow, Engadget's new home for stuff that hasn't happened yet. You can read more about the future of, well, everything, at Tomorrow's permanent home and check out all of our launch week stories here.