I tried, failed and finally managed to set up HDR on Windows 10

If you want to buy an HDR monitor, beware of the pitfalls.

You've been hearing a lot lately about high dynamic range (HDR) arriving on Windows 10 for gaming, movies and graphics. You may have also heard the rumors that there's not a lot you can do with it, that it's underwhelming and that it can be incredibly difficult to set up.

As someone who edits video and photos and enjoys both Netflix and gaming in HDR, I decided to see how much of that was actually true. After many weeks of setup and troubleshooting, I'm here to tell you that the rumors are accurate: HDR on Windows 10 still isn't ready for prime time, and if you want to give it a try, prepare for some pain and disappointment in exchange for minimal benefits.

What is HDR, exactly?

HDR offers a richer, more colorful viewing experience. It increases the detail in both the shadows and highlights of a scene, letting you see more in dimly lit indoor and very bright outdoor scenes. It also offers more colors, contrast and much more brightness. A lot of cinephiles feel that HDR improves the viewing experience more than the extra resolution of 4K.

We've explained HDR in detail before, but here's what it's supposed to deliver. When watching a movie in SDR, the bright light in a window behind an actor might appear as just a white blob. In HDR, you'll see details like clouds, mountains, and more. Colors will appear brighter and more saturated, and gradients between colors will look smooth instead of blocky. On top of that, HDR runs at a maximum 60 fps, giving you smoother images, especially when gaming.

In the video world, there are different flavors of HDR, most notably Dolby Vision, HDR10+ and the most basic version, HDR10. For now, PC games are strictly limited to HDR10, which still offers more punch, contrast and color, albeit at a slight cost to performance. Whether you care about this might depend on whether prettier visuals or pure gaming speed is more important to you.

As a reminder, VESA recently introduced DisplayHDR to certify HDR displays that meet minimum standards with true 8-bit color at 95 percent of the BT.709 gamut, and a minimum of 400 nits of brightness. Monitors that don't meet that certification, however, might still be fine for many users.

Equipment

Watching TV in HDR is pretty simple: All you need is an HDR-compatible TV (many modern 4K sets comply, and some 55-inch models cost as little as $570), plus an HDR-compatible streaming stick or box or a 4K HDR Blu-ray player, and Amazon Prime Video or the HDR tier of Netflix. For streaming, a reasonably fast internet connection is obviously a must -- 25 Mbps is the minimum, according to Netflix. If you own a Sony PlayStation 4 Pro or Xbox One X, you'll have a lot of those features and HDR games, to boot.

To get HDR on a Windows 10 PC requires more thought.

All the components on your desktop or laptop need to be HDR-ready, including the CPU, GPU and display or TV. You must have the correct version of Windows 10, and it must be set up correctly.

To put this into perspective, here's an alphabet soup of all the things you need to make the minimum HDR standard work. Note that things change fast in the Windows 10 HDR world, so this list may be different by the time you read it. For instance, HDR Netflix support came to Windows 10 just over a year ago.

- Windows 10 Fall Creators Update or later
- Windows 10 HEVC Media Extension
- Netflix multi-screen plan that supports 4K and HDR streaming
- Microsoft Edge (yep) or Windows 10 Netflix application
- Chrome or Edge browser for HDR YouTube streaming
- Internet connection speed of at least 25 Mbps
- 4K display with HDCP 2.2 capability
- HDCP 2.2-certified cable
- HDCP 2.2 and 4K capable port (HDMI 2.0, DisplayPort 1.4 or USB-C)
- Supported discrete or integrated GPU (PlayReady 3.0 and HDCP 2.2 output)
- Compatible graphics driver

The best flavor of HDR on Windows 10 is 4K (3,840 x 2,160), 60 fps RGB (4:4:4) video with 10-bit (1 billion) color. To get that to work requires a more expensive PC and definitely a more expensive 10-bit monitor. For the latter, you can cheat a bit by using an 8-bit monitor that dithers colors to approximate 10-bit, a technique known as frame-rate control (FRC). For my testing, I had both a true 10-bit and an 8-bit + FRC display.
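To make the "1 billion colors" figure and the frame-rate control trick concrete, here is a small Python sketch. The color counts are plain arithmetic. The dithering function is only a simplified illustration of the idea, assuming a four-frame cycle in which the panel flips between two adjacent 8-bit levels; real panels use their own (usually spatial and temporal) patterns, and the function names are made up for this example.

```python
from typing import List


def color_count(bits_per_channel: int) -> int:
    """Number of distinct colors with the given bit depth on each of R, G and B."""
    return (2 ** bits_per_channel) ** 3


def frc_frames(level_10bit: int) -> List[int]:
    """Approximate one 10-bit level (0-1023) on an 8-bit panel over four frames.

    Illustrative assumption: the panel shows the next-higher 8-bit level on
    some frames and the lower one on the rest, so the average over the cycle
    lands close to the requested 10-bit value.
    """
    base, remainder = divmod(level_10bit, 4)
    # Clamp at 255 so the very top 10-bit levels do not overflow the 8-bit range.
    return [min(base + 1, 255) if i < remainder else base for i in range(4)]


print(f"8-bit:  {color_count(8):,} colors")
print(f"10-bit: {color_count(10):,} colors")
print("10-bit level 514 shown as 8-bit frames:", frc_frames(514))
```

An 8-bit panel has about 16.7 million colors to work with, while 10-bit has just over a billion. In the sketch, the 10-bit level 514 sits halfway between the 8-bit levels 128 and 129, so the panel alternates between them and the eye averages the result.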

Intel notes that 7th-generation Kaby Lake CPUs with integrated HD graphics are its first to support HDR. Any CPUs from that generation or later should allow for playback of HDR content, including YouTube videos, Netflix and Blu-ray movies. However, your laptop or desktop PC also needs the appropriate BIOS and other hardware.

To do any kind of gaming in HDR, you'll need discrete graphics. NVIDIA started supporting HDR with its 900-series Maxwell GPUs, with the least costly option being the GeForce GTX 960. Considering how HDR can tax your system, however, you'd be best off with a more modern 10- or 20-series GPU. AMD brought full HDR support to its Vega-based Ryzen APUs and most recent Polaris and Vega GPUs. Rock Paper Shotgun has a good list of supported graphics hardware and games.

Many modern laptops have the necessary internal hardware, but here's the catch: Almost no laptop displays are HDR ready (other than a few of the very latest laptops we saw at CES 2019). As such, you'll need to connect an external HDR monitor or TV.

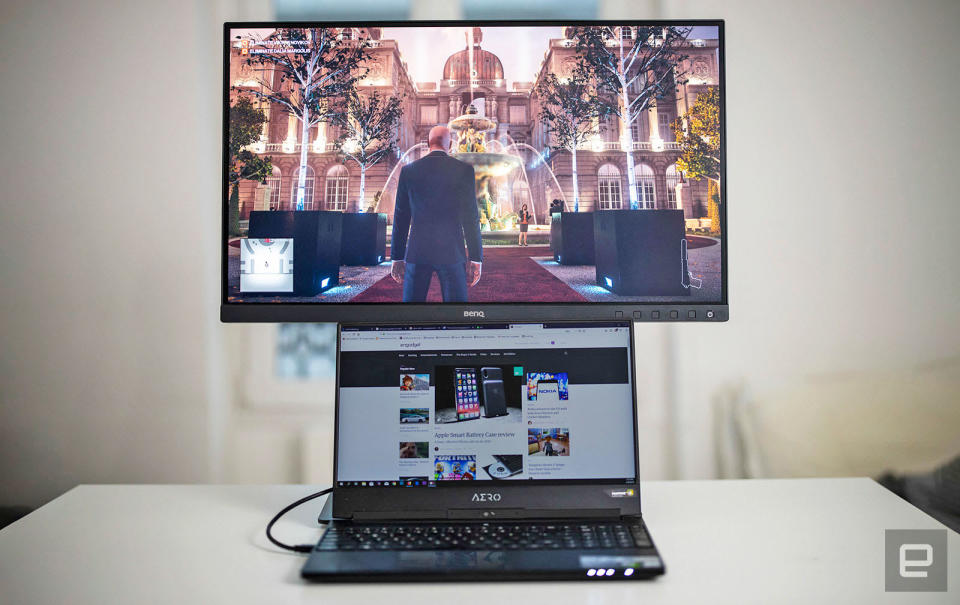

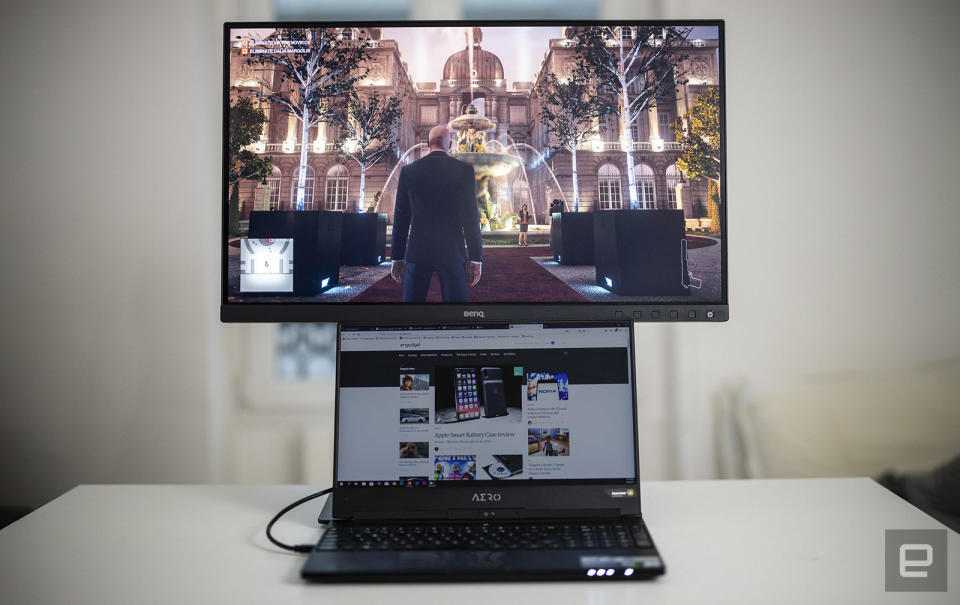

For my own testing purposes, I used a Gigabyte Aero 15X laptop with an 8th-generation Intel Core i7 CPU, 32GB of RAM and NVIDIA GTX 1070 Max-Q graphics. That's a pretty stout setup that should take any 4K HDR I'd throw at it (narrator: not quite). BenQ loaned me a pair of monitors, the gaming- and consumer-oriented EW3270U and the stellar professional graphics-oriented true 10-bit SW271.

These monitors give me two different HDR experiences. Neither is particularly bright, as the EW3270U delivers up to 300 nits, and the SW271, 350 nits (the minimum for DisplayHDR certification is 400 nits peak brightness). However, the $1,100 SW271 is one of the brighter models out there aimed at professionals and delivers very accurate colors. The EW3270U is more for gaming and entertainment, and at just $520 (street), is the kind that a typical user can buy and afford. Brightness levels aside, they're both excellent ways to get into HDR.

Setup

Microsoft introduced full HDR support with the Fall Creators Update in 2017. At first, it wasn't very useful, as any non-HDR content (i.e., browsers and pretty much everything else) would be too bright or dim and washed out. With subsequent updates, it introduced a brightness control for SDR content, making it more practical for everyday use.

I actually tried to make the switch to HDR Windows last year but essentially failed due to various problems with the hardware and software. Although gaming mostly worked, I wasn't able to play YouTube 4K HDR videos or Netflix in HDR. Unlike most users, I had an arsenal of experts from Google, Gigabyte, BenQ and the DisplayHDR group, but I still couldn't resolve the issue. I decided to wait a few months for Windows 10 and hardware updates, and now everything (almost!) works.

Most consumer-oriented gaming laptops have both discrete NVIDIA or AMD GPUs and Intel HD integrated graphics. My Gigabyte laptop uses NVIDIA's Optimus technology, which switches between them to provide either maximum performance or minimum power draw from the system's graphics hardware. That sounds fine, but according to Gigabyte's engineers, it can cause flaky behavior with HDR.

My first step with the installation was connecting BenQ's SW271 monitor. To complicate things, there are at least four kinds of ports that work for HDR, three of which are on my Gigabyte Aero 15X: HDMI 2.0, DisplayPort 1.4, USB Type-C with DisplayPort support and USB Type-C with Thunderbolt support. To say the least, the standards are kind of a mess.

Personally, though, I found I could get 10-bit (1 billion) colors only over the DisplayPort (DP) 1.4 connection on my Aero 15X. When I connected my machine over HDMI 2.0 or USB-C, I managed 4K 60 fps video, but my bit depth was limited to 8-bit. This is a connection speed issue: 4K, 10-bit, 60 fps full RGB video requires speeds of around 22 Gbps, which is beyond the capability of 18 Gbps HDMI 2.0. NVIDIA's latest RTX cards support HDMI 2.0b, but that standard has the same maximum speeds.

| HDR video standard | Speed needed | HDMI 2.0 | DisplayPort 1.4 | USB Type-C alt DP |
| --- | --- | --- | --- | --- |
| 4K 30 fps RGB 10-bit | 11.14 Gbps | ✔ | ✔ | ✔ |
| 4K 60 fps 4:2:2 10-bit | 17.82 Gbps | ✔ | ✔ | ✔ |
| 4K 60 fps RGB 8-bit | 17.82 Gbps | ✔ | ✔ | ✔ |
| 4K 60 fps RGB 10-bit | 22.28 Gbps | X | ✔ | ✔ |
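The speeds in the table can be roughly reproduced from standard 4K video timings. The Python sketch below is a back-of-the-envelope check rather than an official calculation. It assumes the usual 297 MHz and 594 MHz pixel clocks for 4K at 30 and 60 fps (which include blanking), three color channels, and HDMI-style encoding that puts 10 bits on the wire for every 8 bits of picture data. It also assumes 4:2:2 video travels in the same 24-bit-per-pixel container as 8-bit RGB. The function and constant names are invented for illustration.

```python
# Pixel clocks for standard 4K timings, blanking included
# (4400 x 2250 total pixels per frame for a 3840 x 2160 picture).
PIXEL_CLOCK_HZ = {30: 297_000_000, 60: 594_000_000}

HDMI_2_0_MAX_GBPS = 18.0  # HDMI 2.0 ceiling quoted in the article


def link_rate_gbps(fps: int, bits_per_channel: int, chroma: str = "RGB") -> float:
    """Approximate on-the-wire rate in Gbps, encoding overhead included."""
    if chroma == "4:2:2":
        # Assumption: 4:2:2 rides in the same container as 8-bit RGB.
        payload_bits_per_pixel = 24
    else:
        payload_bits_per_pixel = 3 * bits_per_channel
    # Every 8 bits of picture data become 10 bits on the wire.
    wire_bits_per_pixel = payload_bits_per_pixel * 10 / 8
    return PIXEL_CLOCK_HZ[fps] * wire_bits_per_pixel / 1e9


MODES = [
    ("4K 30 fps RGB 10-bit", 30, 10, "RGB"),
    ("4K 60 fps 4:2:2 10-bit", 60, 10, "4:2:2"),
    ("4K 60 fps RGB 8-bit", 60, 8, "RGB"),
    ("4K 60 fps RGB 10-bit", 60, 10, "RGB"),
]

for name, fps, bits, chroma in MODES:
    rate = link_rate_gbps(fps, bits, chroma)
    verdict = "fits" if rate <= HDMI_2_0_MAX_GBPS else "exceeds"
    print(f"{name}: about {rate:.2f} Gbps ({verdict} 18 Gbps)")
```

Under those assumptions the results land within rounding of the table's figures, and 4K 60 fps RGB at 10-bit comes out above 22 Gbps, which is why it cannot squeeze through an 18 Gbps HDMI 2.0 link.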

I recently received an NVIDIA RTX laptop for testing that had no dedicated DisplayPort connector. It had two USB Type-C ports, one with DisplayPort 1.4 and another with Thunderbolt 3 support. I believe the best HDR cable here would be DisplayPort to USB Type-C. This type of display connector is likely to become standard on laptops to simplify manufacturing, but most users won't have the right cable lying around. I certainly didn't.

VESA told me in a statement that "DisplayPort is sent over USB Type-C in alternate mode, where the four high-speed lanes in the Type-C are used for DisplayPort. If the monitor supports DisplayPort 1.4, then it can send 8.1 Gbps over each lane for a maximum raw bandwidth 32 Gbps. Reduce that number to 25.6 Gbps for overhead."
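VESA's arithmetic is easy to check. The short Python sketch below multiplies out the four lanes and then applies the 8b/10b encoding used by DisplayPort 1.4, where only 8 of every 10 transmitted bits carry data. Using the exact 8.1 Gbps per lane gives slightly higher numbers than the rounded ones in the statement. The comparison against 22.28 Gbps uses this article's figure for 4K 60 fps RGB 10-bit as-is, so treat it as a rough sanity check; the constant names are made up for illustration.

```python
LANES = 4                    # high-speed lanes used in USB Type-C alternate mode
GBPS_PER_LANE = 8.1          # DisplayPort 1.4 per-lane rate quoted by VESA
ENCODING_EFFICIENCY = 8 / 10 # 8b/10b: 8 data bits per 10 bits on the wire

raw_gbps = LANES * GBPS_PER_LANE
usable_gbps = raw_gbps * ENCODING_EFFICIENCY

# Figure taken from this article for 4K 60 fps RGB 10-bit video.
NEEDED_GBPS = 22.28

print(f"Raw bandwidth:    {raw_gbps:.1f} Gbps")
print(f"After overhead:   {usable_gbps:.1f} Gbps")
print(f"Headroom for 4K 60 fps RGB 10-bit: {usable_gbps - NEEDED_GBPS:.1f} Gbps")
```

Either way you round it, the link has a few Gbps to spare for 10-bit 4K at 60 fps, which matches my experience that DisplayPort 1.4 was the only connection that delivered it.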

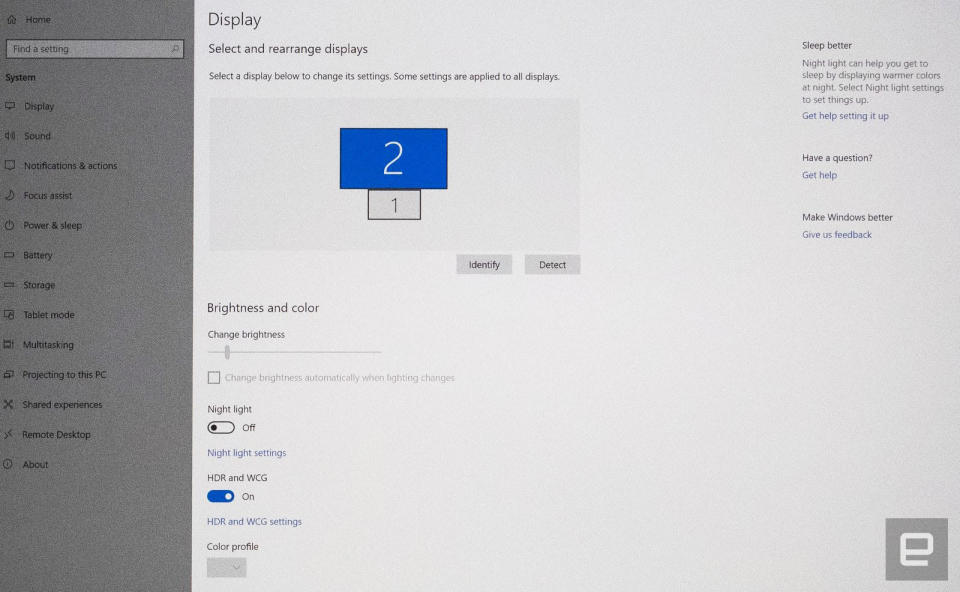

Once installed, I turned on the computer, which recognized the monitor but attempted to mirror my laptop screen. Once I set it to extend the two screens, it set the display to the right resolution (4K) automatically. The final step was to set the layout (HDR screen above the laptop screen) so I could mouse between them seamlessly.

So far, so good. Microsoft has come a long way with dual screens on Windows 10 in the last couple of years and now handles high-resolution screens pretty much seamlessly. That was not always the case.

The next step is to actually enable HDR in Windows. Since the Fall Creators Update of Windows 10 in late 2017, Microsoft has steadily improved it to the point where it works nearly automatically, assuming all your hardware is in order. In my case, after toggling "HDR and WCG" to "On," it instantly kicked into HDR mode, and the BenQ SW271 automatically switched into HDR mode, as well.

All going great, right?

Apps

My first try at making HDR work was decidedly unsuccessful because I couldn't really do anything with it. The second time around, I had a bit more success.

My first goal was to get YouTube HDR to work. First, know that such videos will only play on Chrome or Edge, so don't even try to get it to work on Firefox or other browsers. Yes, this lack of consistency is a glaring flaw for the fledgling standard.

I have a reasonably fast laptop computer and an extremely fast 1 Gbps internet connection, so YouTube HDR should easily be possible, right? Not so much. In the end, I was unable to play 60 fps HDR at 4K without stuttering or skipping. This is a task that a tiny 4K Chromecast device can pull off, so it's not clear why a $2,500 computer can't. The best I could manage with smooth playback was 1440p. I couldn't pin it down for sure, but the problem might either be with NVIDIA's hybrid graphics or YouTube's over-reliance on the system CPU.

That's not so bad, as most YouTube HDR content consists of beautiful scenery, wildlife and dancing, all set to insipid music. What counted more for me were Netflix shows like Stranger Things and Altered Carbon.

4K HDR Netflix doesn't work on Chrome and only works on Microsoft's little-used Edge browser or the Windows 10 Netflix app -- nearly the opposite situation to YouTube HDR. This is a pretty good example of how scattershot the standard still is.

At the start, I couldn't get Netflix HDR to work at all -- the best I could manage was 4K. Again, it's not clear at all why this happened, as I had done everything I was supposed to, including installing HEVC codecs, using the Edge browser and Microsoft's UWP Netflix app and enabling HDR. Without Netflix, HDR was frankly pretty useless for me.

The second time around, with the SW271 monitor, I was finally able to get HDR on the Windows 10 Netflix app. Suffice it to say, all this fiddling was frustrating. And I've done considerable troubleshooting on more complex PC issues in the past, so I can imagine that the average user would likely just throw up their hands. These compatibility issues have to be solved by the hardware makers, DisplayHDR consortium and, especially, Microsoft, and fast.

Still, I had Netflix solved, so the last step was HDR gaming. That presented some new thorny issues but, luckily, setup wasn't one of them.

I installed a few recent HDR titles via Steam, including Hitman 2, Shadow of the Tomb Raider and Assassin's Creed: Odyssey. That went very smoothly, and HDR was detected and enabled by default for all the titles.

At first, I cranked up all the settings to high detail and 4K, but it turns out you can't quite have it all when it comes to HDR. While pretty formidable for a laptop-sized GPU, the NVIDIA GTX 1070 Max-Q isn't quite enough to do all those things, so I needed to reduce the resolution to 1440p or 1080p and accept slightly less detail to get smooth playback. That issue, however, is more related to my hardware than any problems with HDR, as far as I can tell. With a desktop-level GTX 1080 or better, smooth 4K HDR should be possible for some games.

Results

The most dramatic HDR content I saw was on YouTube, but it didn't work at 4K resolution. To be sure, such videos are designed to be eye candy, with dramatically over-saturated colors and bright, shiny visuals. But comparing them with HDR turned off, then on, shows the true potential of the format, and why it has more to offer than 4K.

The running joke with Netflix is that it's practically impossible to see the difference between SDR and HDR. If you know where to look, however, you can't un-see it. The benefit comes in dark scenes -- and there are many of those on the streaming service -- where HDR reveals more detail. That makes it easier to appreciate the lighting and cinematography and, you know, see who's actually on the screen.

Meanwhile, bright, colorful sequences pop more, and contrast is improved. I found that I just generally enjoyed content slightly more with HDR enabled and felt that I was getting more for my money. However, I realize that I'm in the minority here, and some of my appreciation probably comes from the fact that I worked as an editor, colorist and special effects creator in a past life. Many other users and reviewers don't feel that HDR is worth all that effort.

When it comes to gaming, I'm more of an "enjoy the scenery" than "mow everything down in sight" type. As such, I really did enjoy the HDR visuals, especially in Hitman 2 and Shadow of the Tomb Raider. Bright, contrasty outdoor scenes were even more breathtaking, and I found it easier to see what was going on while skulking around in dim, nighttime scenes. However, much like NVIDIA's ray-tracing, many users will not appreciate HDR if it slows them down in any way, and it definitely does impact performance.

Wrap-up

HDR arrived on TV screens with few problems, and it was generally heralded as being worthwhile, even more so than 4K or 8K. The same cannot be said of HDR on PCs. The introduction has been clumsy, and unless you're luckier than I was, setup can still be an enormous hassle. At least I was being paid to handle these problems -- I wouldn't wish what I went through on any consumer or even my own worst enemy.

And after all that trouble, the results were sometimes very cool, but probably not worth it for most folks. Unless you're rocking a very powerful machine, the performance hit for gaming is also not worth it.

The problems with HDR are a microcosm of the PC industry as a whole. GPUs have fragmented into AMD and NVIDIA camps because manufacturers can't agree on common industry standards. With DisplayHDR, a standard has been established, but implementation is still spotty. Microsoft, in particular, has to take the lead and force all the players to fall in line. Once the experience becomes seamless and plug-and-play, it could become the game-changer that the PC industry had hoped it would be.