braincomputerinterface

Latest

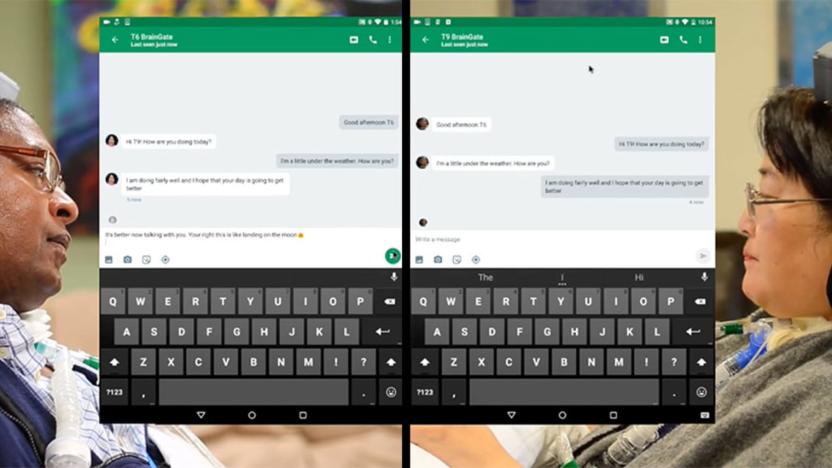

Brain implant lets paralyzed people turn thoughts into text

Three people paralyzed from the neck down have been able to use unmodified computer tablets to text friends, browse the internet and stream music, thanks to an electrode array system called BrainGate2. The findings could have a major impact on the lives of those affected by neurologic disease, injury, or limb loss.

Implants enable richer communication for people with paralysis

John Scalzi's science fiction novel Lock In predicts a near future where people with complete body paralysis can live meaningful, authentic lives thanks to (fictional) advances in brain-computer interfaces. A new study by researchers at Stanford University might be the first step towards such a reality.

University of Minnesota researchers demo AR.Drone controlled by thought (video)

Researchers from the University of Minnesota seem hellbent on turning us all into X-Men. Why's that, you ask? Well, back in 2011, the team devised a method, using non-invasive electroencephalogram (EEG), to allow test subjects to steer computer generated aircraft. Fast forward to today and that very same team has managed to translate their virtual work into real-world mind control over a quadrocopter. Using the same brain-computer interface technique, the team was able to successfully demonstrate full 3D control over an AR.Drone 1.0, using a video feed from its front-facing camera as a guide. But it's not quite as simple as it sounds. Before mind-handling the drone, subjects underwent a training period that lasted about three months on average and utilized a bevy of virtual simulators to let them get acquainted with the nuances of mental navigation. If you're wondering just how exactly these human guinea pigs were able to fly a drone using thought alone, just imagine clenching your fists. That particular mental image was responsible for upward acceleration. Now imagine your left hand clenched alone... that'd cause it to move to the left; the same goes for using only the right. Get it? Good. Now, while we wait for this U. of Minnesota team to perfect its project (and make it more commercial), perhaps this faux-telekinetic toy can occupy your fancy.
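For the curious, the imagery-to-motion mapping described above can be sketched as a simple lookup table. This is only an illustration, not the Minnesota team's software: it assumes an upstream EEG classifier has already labeled what the subject is imagining, and the names `Imagery`, `COMMANDS` and `to_command`, the `REST` state, and the unit velocities are all hypothetical.

```python
from enum import Enum


class Imagery(Enum):
    """Imagined movements a (hypothetical) EEG classifier might report."""
    BOTH_FISTS = "both_fists"
    LEFT_FIST = "left_fist"
    RIGHT_FIST = "right_fist"
    REST = "rest"  # assumption: no imagery means no movement


# (lateral, vertical) command per imagined movement, in arbitrary units.
# Both fists -> up, left fist -> left, right fist -> right, as in the article.
COMMANDS = {
    Imagery.BOTH_FISTS: (0.0, 1.0),
    Imagery.LEFT_FIST: (-1.0, 0.0),
    Imagery.RIGHT_FIST: (1.0, 0.0),
    Imagery.REST: (0.0, 0.0),
}


def to_command(imagery, gain=1.0):
    """Scale the table entry by a gain and return (lateral, vertical)."""
    lateral, vertical = COMMANDS[imagery]
    return (lateral * gain, vertical * gain)
```

Calling `to_command(Imagery.LEFT_FIST, gain=0.5)` returns a half-strength nudge to the left. A real system would presumably smooth noisy classifier output over time before sending anything to a drone.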

University of Minnesota researchers flex the mind's muscle, steer CG choppers

You've undoubtedly been told countless times by cheerleading elders that anything's possible if you put your mind to it. Turns out, those sagacious folks were spot on, although we're pretty sure this pioneering research isn't what they'd intended. A trio of biomedical engineers at the University of Minnesota have taken the realm of brain-computer interfaces a huge leap forward with a non-invasive control system -- so, no messy drills boring into skulls here. The group's innovative BCI meshes man's mental might with silicon whizzery to read and interpret sensorimotor rhythms (brain waves associated with motor control) via an electroencephalography measuring cap. By mapping these SMRs to a virtual helicopter's forward-backward and left-to-right movements, subjects were able to achieve "fast, accurate and continuous" three-dimensional control of the CG aircraft. The so-scifi-it-borders-on-psychic tech could one day help amputees control synthetic limbs, or less nobly, help us mentally manipulate 3D avatars. So, the future of gaming and locomotion looks to be secure, but we all know where this should really be headed -- defense tactics for the Robot Apocalypse.

SmartNav units control PCs with just your noggin'

It's not as if there has been any shortage of conceptual contraptions conjured up to control computers with just the brain, but it has been increasingly difficult to find units ready for the commercial market. Enter NaturalPoint, which is offering up a new pair of SmartNav 4 human-computer interface devices designed to let users control all basic tasks with just their head. The AT and EG models are designed to help physically handicapped and health-minded individuals (respectively) get control over their desktops by using their gourd to mouse around, select commands and peck out phrases on a virtual keyboard. The sweetest part? These things are only $499 and $399 in order of mention, so you should probably pick one up just to give your mousing hand a rest.

[Via EverythingUSB]

Read - SmartNav 4:EG
Read - SmartNav 4:AT

German scientists develop nerdiest brain-computer interface yet

Brain-computer interfaces have been popping up left and right lately, but the latest system from Germany's Technical University of Braunschweig might be the silliest one we've seen so far. While the system doesn't involve the careful placement of electrodes, it does require you to don a large metal helmet fitted with sensors, which can even detect brain activity through hair -- and makes you look like Magneto on a bad day. The system is solid enough to allow test subjects to control an RC car, and researchers say the tech is similarly applicable to wheelchairs and prosthetics. Yeah, that's great -- we'll stick with the dangerous neurosurgery implantation over this contraption, guys. Video after the break.

Researchers develop robotic brain-computer interface

Brain-computer interfaces have been kicking around for a few years now, but they're relatively slow and unwieldy, which kind of puts a damper on world-domination plans -- the guy with the keyboard would probably be well into the missile-launch sequence by the time you've strapped on your dork-helmet. That might be slowly changing, though, as Caltech researchers are working on a robotic brain-computer interface, which can currently be implanted directly into non-human primate brains and move itself around to optimize readings. Although the MEMS-based motor system that actually moves the electrodes is still being developed, the software to do the job is ready to go, and the whole system is being presented this week at the IEEE International Conference on Robotics and Automation in Pasadena. Robot-android chimps? Sure, that's just what we need.

g.tec launches ready-to-go brain computer interface kit

We know what you're thinking [1]: how come no one has made any of the various brain interface technologies out there into a commercial product? Well, your dream last night [2] took a step closer to becoming reality with the announcement of the "ready-to-go" g.BCIsys Brain-Computer interface kit by the Austrian company g.tec. Out of the box, the BCIsys can play simple games and comes with a P300 spelling device which, with a little training, can read your thoughts and place single letters on a screen. OK, so you're not exactly going to want to throw away your QWERTY just yet, as the P300 can take as many as 20 "flashes" to correctly read the word that you're thinking; nor should the weight-conscious be concerned that Wii Sports will be moving back to the sofa just yet, as the only included game is Pong. Also, this system isn't exactly what you'd call a commercial release (let's just say that g.tec's distribution partners aren't the "one click purchase" type). In fact, we can't find any information about how much the kit costs, or even whether simpletons like us would be allowed to get their hands on one. Little steps, little steps.

[1] No, we didn't place one of the brain interface kits onto your head whilst you were sleeping: it's just a turn of phrase ...

[2] ... honest!

[Via gizmag]

Thought-based biometrics system underway?

Seems kind of old school if your brain interface doesn't provide extra-sensory enhancement or integration with robotic limbs, but researchers at Carleton University in Ottawa, Canada are working on a system for thought-based biometrics. It scans and interprets the unique brain-wave signature each individual produces when thinking a certain thought or identifying a pattern -- kind of like that Peter Pan pixie dust thing, except in this case you get granted access to your box. For a variety of reasons the system isn't without its doubts and detractors, and will probably continue to have them so long as you have to wear an EEG cap on your scalp to get a reading -- though according to UCLA professor and BCI expert Jacques Vidal, rocking that headgear's the least of this system's problems. But if you expect us to shrug off any system that lets us interface with our gear via mind-link, you're sorely mistaken. So keep at it, Carleton U; let's see some thought scanners.