computerscience


Schools find ways to get more women into computer science courses

Technology giants like Apple and Google are frequently dominated by men, in part because relatively few women pursue computer science degrees; just 18 percent of American comp sci grads are female. However, at least a few schools have found ways to get more women into these programs. Carnegie Mellon University saw female enrollment jump to 40 percent after it both scrapped a programming experience requirement and created a tutoring system, giving women a support network they didn't have as a minority. Harvey Mudd College and the University of Washington, meanwhile, saw women's share of enrollment rise (to 40 and 30 percent, respectively) after they reworked courses to portray coding as a solution to real-world problems, rather than something to study out of personal interest. Harvey Mudd's recruiters also made an effort to be more inclusive in advertising and campus tours.

Google's Made with Code encourages girls to embrace computer science

Less than one percent of high school girls are interested in computer science, but Google wants to alter that script with a new initiative called Made with Code. Created in conjunction with heavy hitters like the MIT Media Lab, Chelsea Clinton and the Girl Scouts of the USA, the campaign connects girls with coding resources, inspirational videos and more. The effort sprang from Google's own research showing that kids are more likely to get excited about computer science if they try it at an early age and are shown how it can benefit their careers. Google hopes the effort will help girls not just consume technology, but also use it as a creation tool in whichever profession they choose.

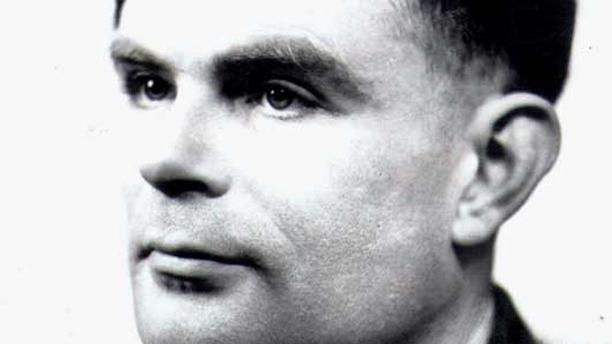

UK pardons computing pioneer Alan Turing

Campaigners have spent years demanding that the UK exonerate computing legend Alan Turing, and they're finally getting their wish. Queen Elizabeth II has just used her royal prerogative to pardon Turing, 61 years after an indecency conviction that many now see as unjust. The criminal charge shouldn't overshadow Turing's vital cryptanalysis work during World War II, Justice Secretary Chris Grayling said when explaining the move. The pardon is a purely symbolic gesture, but an important one all the same -- it acknowledges that the conviction cut short the career of a man who defended his country, broke ground in artificial intelligence and formalized computing concepts like algorithms.

Hour of Code campaign teaches programming in 30,000 US schools (video)

Code.org wants to make computer science a staple of the classroom, and it's taking a big step toward that goal today with the launch of its Hour of Code campaign. More than 30,000 US schools (35,000 worldwide) will devote at least one hour this week to teaching programming, with incentives in store for everyone involved. Students who take an online follow-up course can win gift cards and Skype credits, while schools and teachers can win everything from 10GB of Dropbox space to a class-sized computer set. The initiative has plenty of outside support, as well. Apple and Microsoft are holding Hour of Code workshops in their US retail stores, while politicians on all sides (including President Barack Obama and Republican House Majority Leader Eric Cantor) are endorsing the concept. You don't even need to be a student to participate -- Code.org is making its tutorials available to just about anyone with a modern web browser or smartphone. Whether you're curious about what kids are learning or want to write some code yourself, you'll find everything you need at the source links.

UK government receptive to bill that would pardon Alan Turing

Many in the UK recognize Alan Turing's contributions to computing as we know it, but attempts to obtain a pardon for the conviction that tragically cut short his career have thus far been unsuccessful. There's a new glimmer of hope, however: government whip Lord Ahmad of Wimbledon says that the current leadership has "great sympathy" for a bill that would pardon Turing. As long as no one calls for amendments, the legislation should clear Parliament's House of Lords by late October and reach the House of Commons soon afterward. While there's no guarantee that the measure will ultimately pass, the rare level of endorsement suggests that Turing's name could soon be cleared.

Programming is FUNdamental: A closer look at Code.org's star-studded computer science campaign

"All these people who've made it big have their own variation of the same story, where they felt lucky to be exposed to computer programming at the right age, and it bloomed into something that changed their life," explains the organization's co-founder, Ali Partovi, seated in the conference room of one of the many successful startups he's helped along the way. The Iranian-born serial entrepreneur has played a role in an impressive list of companies, including the likes of Indiegogo, Zappos and Dropbox. Along with his twin brother, Hadi, he also co-founded music-sharing service iLike. Unlike past offerings from the brothers, Code.org is a decidedly non-commercial entity, one aimed at making computer science and programming every bit as essential to early education as science or math. For the moment, the organization is assessing just how to go about changing the world. The site currently offers a number of resources for bootstrappers looking to get started in the world of coding. There are simple modules from Scratch, Codecademy, Khan Academy and others, which can help users tap into the buzz of coding their first rectangle, along with links to apps and online tutorials. The organization is also working to build a comprehensive database of schools offering computer science courses and soliciting coders interested in teaching.

Remembering Alan Turing at 100

Alan Turing would have turned 100 this week, an event that would have, no doubt, been greeted with all manner of pomp -- the centennial of a man whose mid-century concepts would set the stage for modern computing. Turing, of course, never made it that far; he was found dead at age 41 from cyanide poisoning, possibly self-inflicted. His story is that of a brilliant mind cut down in its prime for sad and ultimately baffling reasons, a man who accomplished so much in a short time and almost certainly would have had far more to give, if not for a society that couldn't accept him for who he was. The London-born computing pioneer's name is probably most immediately recognized in the form of the Turing Machine, the "automatic machine" he described in a 1936 paper and expanded on over the years. The concept helped lay the foundation for computer science by arguing that a simple machine, given enough tape (or, in modern terms, storage), could carry out any calculation that can be written down as a step-by-step procedure. All that was needed, as Turing laid it out, was a way of writing symbols, a set of rules for manipulating what's written and a really long strip of tape to write on. To take on a more complex problem, only the storage, not the machine, needs to grow.
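The tape-and-rules idea is easy to see in code. Below is a minimal Python sketch, assuming a rule table keyed by (state, symbol) and a hypothetical binary-increment machine chosen purely for illustration; neither comes from Turing's 1936 paper. The tape grows on demand, so the same fixed set of rules handles inputs of any length.

```python
from collections import defaultdict

BLANK = "_"

# Hypothetical example machine: add 1 to a binary number.
# Each rule maps (state, symbol read) -> (symbol to write, head move, next state).
INCREMENT = {
    ("right", "0"): ("0", "R", "right"),      # scan right to the end of the number
    ("right", "1"): ("1", "R", "right"),
    ("right", BLANK): (BLANK, "L", "carry"),  # past the last digit: start carrying
    ("carry", "1"): ("0", "L", "carry"),      # 1 + carry = 0, keep carrying left
    ("carry", "0"): ("1", "L", "halt"),       # 0 + carry = 1, done
    ("carry", BLANK): ("1", "L", "halt"),     # ran off the left edge: new leading 1
}


def run_turing_machine(rules, tape, state, halt="halt", max_steps=10_000):
    """Simulate a one-tape Turing machine and return the non-blank tape contents."""
    cells = defaultdict(lambda: BLANK, enumerate(tape))  # unbounded tape, blank by default
    head, steps = 0, 0
    while state != halt:
        if steps == max_steps:
            raise RuntimeError("step limit reached without halting")
        write, move, state = rules[(state, cells[head])]
        cells[head] = write
        head += 1 if move == "R" else -1
        steps += 1
    lo, hi = min(cells), max(cells)
    return "".join(cells[i] for i in range(lo, hi + 1)).strip(BLANK)


print(run_turing_machine(INCREMENT, "1011", "right"))  # 1100 (11 + 1 = 12)
print(run_turing_machine(INCREMENT, "111", "right"))   # 1000 (7 + 1 = 8)
```

Note that "111" needs one more cell than it started with; the simulator simply extends the tape, while the rule table stays the same size.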

Researchers get CPUs and GPUs talking, boost PC performance by 20 percent

How do you fancy a 20 percent boost to your processor's performance? Research from North Carolina State University claims to offer just that. Despite the emergence of fused-architecture SoCs, the CPU and GPU cores on these chips typically still work independently. The researchers hoped that by assigning tasks based on each processor's strengths, they could make the chip work more efficiently. Since the CPU and GPU can fetch data at comparable speeds, the researchers had the GPU cores execute the computational functions while the CPU cores handled the prefetching. With that data ready in advance, the graphics processing unit has more resources free, yielding an average performance boost of 21.4 percent, though it's unclear what metrics the researchers were using. Incidentally, the research was funded by AMD, so no prizes for guessing which chips we might see using the technique first.
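The division of labor described above resembles a classic producer-consumer pipeline. The Python sketch below is only an analogy, not the NC State technique: a hypothetical `prefetcher` thread stands in for the CPU, loading chunks ahead of time into a bounded queue, while `compute` stands in for the GPU and only ever works on data that is already waiting. Python threads say nothing about real CPU/GPU performance; the point is the structure.

```python
import queue
import threading

_DONE = object()  # sentinel marking the end of the data stream


def prefetcher(chunks, buffer):
    """CPU stand-in: fetch each chunk ahead of time and hand it over."""
    for chunk in chunks:
        buffer.put(list(chunk))  # "load" the chunk into the shared buffer
    buffer.put(_DONE)


def compute(buffer):
    """GPU stand-in: crunch whatever has already been prefetched."""
    total = 0
    while (chunk := buffer.get()) is not _DONE:
        total += sum(x * x for x in chunk)  # placeholder computational kernel
    return total


def run_pipeline(chunks, depth=4):
    # A bounded queue keeps the prefetcher at most `depth` chunks ahead.
    buffer = queue.Queue(maxsize=depth)
    worker = threading.Thread(target=prefetcher, args=(chunks, buffer))
    worker.start()
    result = compute(buffer)
    worker.join()
    return result


print(run_pipeline([range(0, 10), range(10, 20)]))  # 2470
```

The queue depth (an assumed value here) trades memory for how far ahead the fetching side is allowed to run.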

MESM Soviet computer project marks 60 years

Before you go complaining about your job, take a moment to remember the MESM project, which just marked the 60th anniversary of its formal recognition by the Soviet Academy of Sciences. The project, headed by Institute of Electrical Engineering director Sergey Lebedev, was born in a laboratory that a team of 20 people built from scratch amid the post-World War II ruins of the Ukrainian capital, Kyiv; many of the team members took up residence above the lab. Work on MESM -- that's from the Russian for Small Electronic Calculating Machine -- began toward the end of 1948. By November 1950, the computer was running its first program. The following year, it was up and running full-time. The machine has since come to be considered the first fully operational electronic computer in continental Europe, according to a Google retrospective. Check out a video interview with a MESM team member after the break -- and make sure you click on that handy caption button for some English subtitles.

New computer system can read your emotions, will probably be annoying about it (video)

It's bad enough listening to your therapist drone on about the hatred you harbor toward your father. Pretty soon, you may have to put up with a hyper-insightful computer, as well. That's what researchers from the Universidad Carlos III de Madrid have begun developing, with a new system capable of reading human emotions. As explained in their study, published in the Journal on Advances in Signal Processing, the computer has been designed to intelligently engage with people, and to adjust its dialogue according to a user's emotional state. To gauge this, researchers looked at a total of 60 acoustic parameters, including the tone of a user's voice, the speed at which one speaks, and the length of any pauses. They also implemented controls to account for any endogenous reactions (e.g., if a user gets frustrated with the computer's speech), and enabled the adaptable device to modify its speech accordingly, based on predictions of where the conversation may lead. In the end, they found that users responded more positively whenever the computer spoke in "objective terms" (i.e., with more succinct dialogue). The same could probably be said for most bloggers, as well. Teleport past the break for the full PR, along with a demo video (in Spanish).
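Pause length is one of the simpler acoustic cues to measure. As a rough illustration, and not the Carlos III team's actual method, the Python sketch below splits a signal into short frames, marks frames whose RMS energy falls under a threshold as silent, and reports runs of silence as pauses. The 20 ms frame, 0.01 threshold and 0.2-second minimum are illustrative assumptions.

```python
import math


def pause_lengths(samples, rate, frame_ms=20, threshold=0.01, min_pause_s=0.2):
    """Return the duration (seconds) of each pause in a mono signal.

    A frame counts as silent when its RMS energy falls below `threshold`;
    runs of silent frames at least `min_pause_s` long are reported as pauses.
    """
    frame = max(1, int(rate * frame_ms / 1000))  # samples per analysis frame
    silent = []
    for start in range(0, len(samples), frame):
        chunk = samples[start:start + frame]
        rms = math.sqrt(sum(s * s for s in chunk) / len(chunk))
        silent.append(rms < threshold)

    pauses, run = [], 0
    for is_silent in silent + [False]:  # trailing False flushes the final run
        if is_silent:
            run += 1
            continue
        duration = run * frame / rate
        if run and duration >= min_pause_s:
            pauses.append(duration)
        run = 0
    return pauses


# One-second toy signal at 1 kHz: "speech", 0.3 s of silence, "speech".
signal = [0.5] * 200 + [0.0] * 300 + [0.5] * 500
print(pause_lengths(signal, rate=1000))  # [0.3]
```

A real system would work on recorded audio and tune these values, but the same frame-then-threshold structure applies.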

Intel 4004, world's first commercial microprocessor, celebrates 40th birthday, ages gracefully

Pull out the candles and champagne, because the Intel 4004 is celebrating a major birthday today -- the big four-oh. That's right, it's been exactly four decades since Intel unveiled the world's first commercially available CPU, with an Electronic News ad that ran on November 15th, 1971. It all began in 1969, when Japan's Nippon Calculating Machine Corporation asked Intel to create 12 chips for its Busicom 141-PF calculator. With that assignment, engineers Federico Faggin, Ted Hoff and Stanley Mazor set about designing what would prove to be a groundbreaking innovation -- a 4-bit, 16-pin microprocessor with a full 2,300 MOS transistors and about 740kHz of horsepower. The 4004's 10-micron feature size may seem gargantuan by contemporary standards, but at the time, it was rather remarkable -- especially considering that the processor was constructed from a single piece of silicon. In fact, Faggin was so proud of his creation that he decided to initial its design with "FF," in appropriate recognition of a true work of art. Hit up the coverage links below for more background on the Intel 4004, including a graphic history of the microprocessor, from the Inquirer.

Wireless bike brake system has the highest GPA ever

Color us a yellow shade of mendacious, but if we designed something that works 99.9999999997 percent of the time, we'd probably round off and give ourselves a big ol' 100 percent A+. We'd probably throw in a smiley-faced sticker, too. Computer scientist Holger Hermanns, however, is a much more honest man, which is why he's willing to admit that his new wireless bike brake system is susceptible to outright failure on about three out of every trillion occasions. Hermanns' concept bike, pictured above, may look pretty standard at first glance, but take a closer look at the right handlebar. There, you'll find a rubber grip with a pressure sensor nestled inside. Whenever a rider squeezes this grip, that blue plastic box sitting next to it will send out a signal to a receiver, attached to the bike's fork. From there, the message will be sent on to an actuator that converts the signal into mechanical energy, and activates the brake. Best of all, this entire process takes just 250 milliseconds of your life. No wires, no brakes, no mind control. Hermanns and his colleagues at Saarland University are now working on improving their system's traction and are still looking for engineers to turn their concept into a commercial reality, but you can wheel past the break for more information, in the full PR.
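Converting a failure count into a run of nines is simple arithmetic, but it is easy to slip by a couple of decimal places. A small Python check, using exact fractions so no floating-point rounding creeps in:

```python
from fractions import Fraction


def reliability_percent(failures, attempts):
    """Exact share of attempts that succeed, expressed as a percentage."""
    return (1 - Fraction(failures, attempts)) * 100


# Three failures per trillion (10**12) braking attempts:
print(float(reliability_percent(3, 10**12)))  # 99.9999999997
```

Each hundredfold drop in failures adds two more nines: three failures per 100 trillion attempts, `reliability_percent(3, 10**14)`, works out to 99.999999999997 percent.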

Dennis Ritchie, pioneer of C programming language and Unix, reported dead at age 70

We're getting reports today that Dennis Ritchie, the man who created the C programming language and spearheaded the development of Unix, has died at the age of 70. The sad news was first reported by Rob Pike, a Google engineer and former colleague of Ritchie's, who confirmed via Google+ that the computer scientist passed away over the weekend, after a long battle with an unspecified illness. Ritchie's illustrious career began in 1967, when he joined Bell Labs just one year before defending his doctoral thesis at Harvard University. It didn't take long, however, for the Bronxville, NY native to have a major impact upon computer science. In 1969, he helped develop the Unix operating system alongside Ken Thompson, Brian Kernighan and other Bell colleagues. At around the same time, he began laying the groundwork for what would become the C programming language -- a language he and co-author Kernighan would later describe in their seminal 1978 book, The C Programming Language. Ritchie went on to earn several awards on the strength of these accomplishments, including the Turing Award in 1983, election to the National Academy of Engineering in 1988, and the National Medal of Technology in 1999. The precise circumstances surrounding his death are unclear at the moment, though news of his passing has already elicited an outpouring of tributes and remembrance for the man known to many as dmr (his e-mail address at Bell Labs). "He was a quiet and mostly private man," Pike wrote in his brief post, "but he was also my friend, colleague, and collaborator, and the world has lost a truly great mind."

NC State researchers team with IBM to keep cloud-stored data away from prying eyes

The man on your left is Dr. Peng Ning -- a computer science professor at NC State whose team, along with researchers from IBM, has developed an experimental new method for securing cloud-stored data. Their approach, known as a "Strongly Isolated Computing Environment" (SICE), would essentially allow engineers to isolate, store and process sensitive information away from a computing system's hypervisor -- the software layer that lets multiple operating systems run independently of one another on the same hardware, but that is also vulnerable to hackers. With a small Trusted Computing Base (TCB) as its software foundation, Ning's technique also allows programmers to devote specific CPU cores to handling sensitive data, thereby freeing up the other cores to execute normal functions. And, because that TCB consists of just 300 lines of code, it leaves a smaller "surface" for cybercriminals to attack. When put to the test, the SICE architecture added a performance overhead of only three percent for workloads that didn't require direct network access -- an amount that Ning describes as a "fairly modest price to pay for the enhanced security." He acknowledges, however, that he and his team still need to find a way to speed up processes for workloads that do depend on network access, and it remains to be seen whether or not their technique will make it to the mainstream anytime soon. For now, though, you can float past the break for more details in the full PR.

New program makes it easier to turn your computer into a conversational chatterbox

We've already seen how awkward computers can be when they try to speak like humans, but researchers from North Carolina State and Georgia Tech have now developed a program that could make it easier to show them how it's done. Their approach, outlined in a recently published paper, would allow developers to create natural language generation (NLG) systems twice as fast as currently possible. NLG technology is used in a wide array of applications (including video games and customer service centers), but producing these systems has traditionally required developers to enter massive amounts of data, vocabulary and templates -- rules that computers use to develop coherent sentences. Lead author Karthik Narayan and his team, however, have created a program capable of learning how to use these templates on its own, thereby requiring developers to input only basic information about any given topic of conversation. As it learns how to speak, the software can also make automatic suggestions about which information should be added to its database, based on the conversation at hand. Narayan and his colleagues will present their study at this year's Artificial Intelligence and Interactive Digital Entertainment conference in October, but you can dig through it for yourself, at the link below.
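To make the idea of templates concrete, here is a minimal Python sketch of template-based generation using the standard library's `string.Template`. The topics, slot names and sentences are hypothetical, and unlike the researchers' system this version does not learn anything: the templates are written by hand, and `realize` simply reports which facts are missing, a crude stand-in for the suggestion feature described above.

```python
import re
from string import Template

# Hypothetical hand-written templates; $name marks a slot to fill.
TEMPLATES = {
    "weather": "It is $condition in $city today, with a high of $high degrees.",
    "order": "Your order of $count $item ships on $day.",
}


def required_slots(topic):
    """List the slot names a topic's template needs."""
    return set(re.findall(r"\$(\w+)", TEMPLATES[topic]))


def realize(topic, facts):
    """Fill a template, or say which facts still need to be supplied."""
    missing = required_slots(topic) - set(facts)
    if missing:
        raise KeyError(f"add facts for: {sorted(missing)}")
    return Template(TEMPLATES[topic]).substitute(facts)


print(realize("weather", {"condition": "sunny", "city": "Raleigh", "high": 75}))
# It is sunny in Raleigh today, with a high of 75 degrees.
```

Every new topic here means writing another template by hand, which is exactly the labor a system that learns its own templates aims to cut.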

IBM developing largest data drive ever, with 120 petabytes of bliss

So, this is pretty... big. At this very moment, researchers at IBM are building the largest data drive ever -- a 120 petabyte beast made up of some 200,000 conventional HDDs working in concert. To put that into perspective, 120 petabytes is the equivalent of 120 million gigabytes (or enough space to hold about 24 billion average-sized MP3s), and significantly more than the 15 petabyte capacity found in the biggest arrays currently in use. To achieve this, IBM aligned individual drives in horizontal drawers, as in most data centers, but made these drawers even wider, in order to accommodate more disks within smaller confines. Engineers also implemented a new data backup mechanism, whereby information from dying disks is slowly reproduced on a replacement drive, allowing the system to continue running without any slowdown. A system called GPFS, meanwhile, spreads stored files over multiple disks, allowing the machine to read or write different parts of a given file at once, while indexing its entire collection at breakneck speeds. The company developed this particular system for an unnamed client looking to conduct complex simulations, but Bruce Hillsberg, IBM's director of storage research, says it may be only a matter of time before all cloud computing systems sport similar architectures. For the moment, however, he admits that his creation is still "on the lunatic fringe."
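The striping idea behind GPFS can be illustrated with a toy model. The Python sketch below is not GPFS's actual on-disk layout: it assumes fixed-size blocks dealt round-robin across a list of in-memory "disks", then reads all disks concurrently and reassembles the file. The block size and disk count are arbitrary.

```python
from concurrent.futures import ThreadPoolExecutor


def stripe(data: bytes, num_disks: int, block_size: int):
    """Split data into fixed-size blocks and deal them round-robin onto disks."""
    disks = [[] for _ in range(num_disks)]
    for offset in range(0, len(data), block_size):
        block_no = offset // block_size
        disks[block_no % num_disks].append((block_no, data[offset:offset + block_size]))
    return disks


def read_striped(disks):
    """Read every disk concurrently, then reassemble blocks in file order."""
    def read_disk(disk):
        return list(disk)  # stand-in for a real, slow disk read

    with ThreadPoolExecutor(max_workers=len(disks)) as pool:
        results = pool.map(read_disk, disks)
    blocks = sorted(block for disk_blocks in results for block in disk_blocks)
    return b"".join(chunk for _, chunk in blocks)


data = bytes(range(256)) * 4               # a 1 KiB "file"
disks = stripe(data, num_disks=4, block_size=64)
print([len(d) for d in disks])             # [4, 4, 4, 4]
print(read_striped(disks) == data)         # True
```

Because consecutive blocks live on different disks, a large read can keep every drive busy at once, which is the effect the article attributes to GPFS.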

AP, Google offer $20,000 scholarships to aspiring tech journalists, we go back to school

Love technology? Love journalism? Well, the AP-Google Journalism and Technology Scholarship program might be right up your alley. The initiative, announced earlier this week, will offer $20,000 scholarships to six graduate or undergraduate students working toward a degree in any field that combines journalism, new media and computer science. Geared toward aspiring journalists pursuing projects that "further the ideals of digital journalism," the program also aims to encompass a broad swath of students from diverse ethnic, gender, and geographic backgrounds. Applications for the 2012-2013 school year are now open for students who are currently enrolled as college sophomores or higher, with at least one year of full-time coursework remaining. Hit up the source link below to apply, or head past the break for more information, in the full presser.

Telex anti-censorship system promises to leap over firewalls without getting burned

Human rights activists and free speech advocates have every reason to worry about the future of an open and uncensored internet, but researchers from the University of Michigan and the University of Waterloo have come up with a new tool that may help put their fears to rest. Their system, called Telex, proposes to circumvent government censors by using some clever cryptographic techniques. Unlike similar schemes, which typically require users to deploy secret IP addresses and encryption keys, Telex would only ask that they download a piece of software. With the program onboard, users in firewalled countries would then be able to visit blacklisted sites by establishing a decoy connection to any unblocked address. The software would automatically recognize this connection as a Telex request and tag it with a secret code visible only to participating ISPs, which could then divert these requests to banned sites. By essentially creating a proxy server without an IP address, the concept could make verboten connections more difficult to trace, but it would still rely upon the cooperation of many ISPs stationed outside the country in question -- which could pose a significant obstacle to its realization. At this point, Telex is still in a proof-of-concept phase, but you can find out more in the full press release, after the break.

Scientists develop algorithm to solve Rubik's cubes of any size

A computer solving a Rubik's cube? P'shaw. Doing it in 10.69 seconds? Been there, record set. But to crack one of any size? Color us impressed. Erik Demaine of MIT claims to have done just that -- he and his team developed an algorithm that applies to cubes no matter how ambitious their dimensions. Pretty early on, he realized he needed to take a different angle than he would with a standard 3 x 3 x 3 puzzle, which other scientists have tackled by borrowing computers from Google to consider all 43 quintillion possible positions -- a strategy known simply as "brute force." As you can imagine, that's not exactly a viable solution when you're wrestling with an 11 x 11 x 11 cube. So Demaine and his fellow researchers settled on an approach that's actually a riff on one commonly used by Rubik's enthusiasts, who might attempt to move a single cubie into its desired position while leaving the rest of the cube as unchanged as possible. That's a tedious way to go, of course, so instead the team grouped several cubies that all needed to move in the same direction, a tactic that reduced the number of moves by a factor of log n, with n representing the length of any of the cube's sides. Since moving cubies into place one at a time takes a number of moves proportional to n², the final algorithm needs on the order of n²/log n moves. If we just lost you non-math majors with that formula, rest assured that the scientists don't expect folks to apply it directly, though they do say it could help cube-solvers sharpen their strategy. Other than that, all you overachievers out there, you're still on your own with that 20 x 20 x 20.
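To get a feel for the difference, the Python snippet below compares the two growth rates. It drops constant factors and assumes a base-2 logarithm (the base only changes a constant), so the numbers show how the savings grow with n rather than actual move counts for a real cube.

```python
import math


def move_counts(n):
    """Return (cubie-by-cubie, grouped) move-count growth for an n x n x n cube.

    Constant factors are dropped, so only the ratio between the two values
    is meaningful; that ratio equals log2(n).
    """
    one_at_a_time = n ** 2
    grouped = n ** 2 / math.log2(n)
    return one_at_a_time, grouped


for n in (3, 11, 20, 100):
    naive, grouped = move_counts(n)
    print(f"n={n:>3}: n^2={naive:>6}  n^2/log2(n)={grouped:>9.1f}  saving x{naive / grouped:.2f}")
```

The saving factor creeps up slowly, which is why grouping matters most for very large cubes.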

Computer learning and computational neuroscience icon Dr. Leslie Valiant wins Turing Award

We've seen recently that computers are more than capable of kicking humanoids to the curb when it comes to winning fame and fortune, but it's still we humans who dole out the prizes, and one very brainy humanoid just won the best prize in computer science. That person is Leslie Valiant, and the prize is the fabled A.M. Turing Award. Dr. Valiant currently teaches at Harvard and over the years has developed numerous algorithms and models for parsing and machine learning, including work to understand computational neuroscience. His achievements have helped make those machines smarter and better at thinking like us humans, but he has yet to succeed in teaching them the most important thing: how to love.