ImageRecognition

Latest

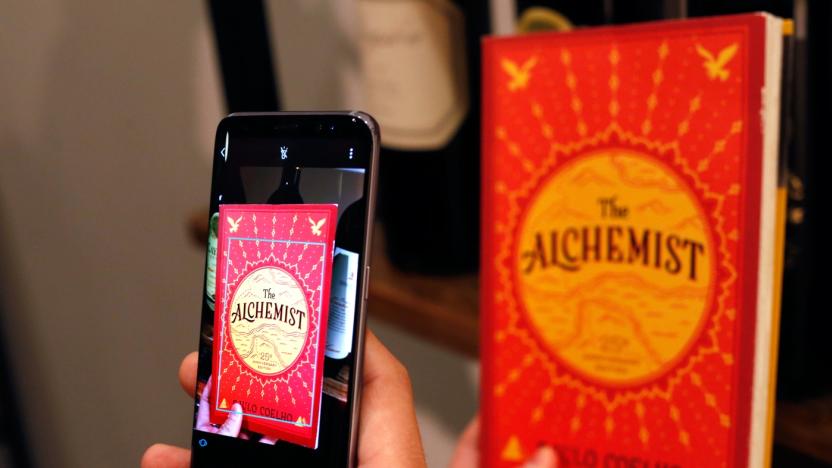

Verizon's Galaxy S8 won't help you shop on Amazon

Samsung already gave a heads-up that Bixby wouldn't be complete when the Galaxy S8 launched, but Verizon customers will have to make do with less than most... for a while, anyway. The American carrier has confirmed to CNET that its version of the S8 doesn't currently let you use Bixby Vision to find products on Amazon just by pointing your camera. You can find images of that book you're looking for, but you can't shop for it. A Verizon spokesperson didn't explain why the feature wasn't available right away (Amazon and Samsung didn't say either), but did promise that the network was "working with Amazon" to provide it in the future.

AI is learning to speed read

As clever as machine learning is, there's one common problem: you frequently have to train the AI on thousands or even millions of examples to make it effective. What if you don't have weeks to spare? If Gamalon has its way, you could put AI to work almost immediately. The startup has unveiled a new technique, Bayesian Program Synthesis, that promises AI you can train with just a few samples. The approach uses probabilistic code to fill in gaps in its knowledge. If you show it very short and tall chairs, for example, it should figure out that there are many chair sizes in between. And importantly, it can tweak its own models as it goes along -- you don't need constant human oversight in case circumstances change.

Google uses AI to sharpen low-res images

Deckard's photo-enhancing gear in Blade Runner is still the stuff of fantasy. However, Google might just have a close-enough approximation before long. The Google Brain team has developed a system that uses neural networks to fill in the details on very low-resolution images. One of the networks is a "conditioning" element that maps the lower-res shot to similar higher-res examples to get a basic idea of what the image should look like. The other, the "prior" network, models sharper details to make the final result more plausible.
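To make the two-network idea concrete, here is a minimal sketch of how a "conditioning" score and a "prior" score for one pixel might be merged. The blurb does not say how the two outputs are combined, so adding the per-value scores and normalizing with softmax is an assumption made for illustration. The function names and sample numbers are hypothetical.

```python
import math


def softmax(scores):
    """Turn raw scores into probabilities that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def combine(conditioning_scores, prior_scores):
    """Assumed merge rule: add the two networks' scores, then normalize."""
    if len(conditioning_scores) != len(prior_scores):
        raise ValueError("both networks must score the same candidate values")
    summed = [c + p for c, p in zip(conditioning_scores, prior_scores)]
    return softmax(summed)


# Hypothetical scores for three candidate values of a single pixel.
conditioning = [2.0, 1.0, 0.0]  # what the low-res input suggests
prior = [0.0, 1.0, 3.0]         # what plausible sharp detail suggests
probs = combine(conditioning, prior)
best = probs.index(max(probs))
print(best, probs)
```

In this toy case the prior's strong preference for the third value outweighs the conditioning network's mild preference for the first.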

AI is nearly as good as humans at identifying skin cancer

If you're worried about the possibility of skin cancer, you might not have to depend solely on the keen eye of a dermatologist to spot signs of trouble. Stanford researchers (including tech luminary Sebastian Thrun) have discovered that a deep learning algorithm is about as effective as humans at identifying skin cancer. By training an existing Google image recognition algorithm using over 130,000 photos of skin lesions representing 2,000 diseases, the team made an AI system that could detect both different cancers and benign lesions with uncanny accuracy. In early tests, its performance was "at least" 91 percent as good as a hypothetically flawless system.

Apple publishes its first AI research paper

When Apple said it would publish its artificial intelligence research, it raised at least a couple of big questions. When would we see the first paper? And would the public data be important, or would the company keep potential trade secrets close to the vest? At last, we have answers. Apple researchers have published their first AI paper, and the findings could clearly be useful for computer vision technology.

Google AI experiments help you appreciate neural networks

Sure, you may know that neural networks are spicing up your photos and translating languages, but what if you want a better appreciation of how they function? Google can help. It just launched an AI Experiments site that puts machine learning to work in a direct (and often entertaining) way. The highlight by far is Giorgio Cam -- put an object in front of your phone or PC camera and the AI will rattle off a quick rhyme based on what it thinks it's seeing. It's surprisingly accurate, fast and occasionally chuckle-worthy.

Disney Research's AI system knows what a car sounds like

A picture may be worth a thousand words, but sound is just as important to how we experience the world as how we see it -- that's why a team at Disney Research is working on a computer vision system that can not only recognize what an image is, but how it sounds, too. In an initial study presented at the European Conference on Computer Vision, the group's system successfully managed to pair appropriate audio with images of doors closing, glasses clinking and vehicles driving down the road.

Google machine learning can protect endangered sea cows

It's one thing to track endangered animals on land, but it's another to follow them when they're in the water. How do you spot individual critters when all you have are large-scale aerial photos? Google might just help. Queensland University researchers have used Google's TensorFlow machine learning to create a detector that automatically spots sea cows in ocean images. Instead of making people spend ages combing through tens of thousands of photos, the team just has to feed photos through an image recognition system that knows to look for the cows' telltale body shapes.

'Watch Dogs 2' web app tries to reveal secrets in your selfies

Ubisoft is no stranger to producing marketing for its hacker-centric Watch Dogs series that is poignant on the surface but ultimately vapid. The latest example is a selfie analyzer for the upcoming sequel. The pitch is that your self-portraiture reveals a lot of hidden info about you, and uploading a picture to the web app will reveal it: your age, and what your picture says about you to employers, financial institutions, pharmaceutical companies, political organizations and police databases -- info based on details in your photos. That's in theory, of course. In practice, the results don't pan out so well.

Artificial intelligence won't save the internet from porn

"I shall not today attempt further to define the kinds of material I understand to be embraced within that shorthand description ["hard-core pornography"], and perhaps I could never succeed in intelligibly doing so. But I know it when I see it, and the motion picture involved in this case is not that." -- United States Supreme Court Justice Potter Stewart

In 1964, the Supreme Court overturned an obscenity conviction against Nico Jacobellis, a Cleveland theater manager accused of distributing obscene material. The film in question was Louis Malle's "The Lovers," starring Jeanne Moreau as a French housewife who, bored with her media-mogul husband and her polo-playing sidepiece, packs up and leaves after a hot night with a younger man. And by "hot," I mean a lot of artful blocking, heavy breathing and one fleeting nipple -- basically, nothing you can't see on cable TV.

Google's AI is getting really good at captioning photos

It's great to be an AI developer right now, but maybe not a good time to have a job that can be done by a machine. Take image captioning -- Google has released its "Show and Tell" algorithm to developers, who can train it to recognize objects in photos with up to 93.9 percent accuracy. That's a significant improvement from just two years ago, when it could correctly classify 89.6 percent of images. Better photo descriptions can be used in numerous ways to help historians, visually impaired folks, and of course, other AI researchers, to name a few examples.

Google AI builds a better cucumber farm

Artificial intelligence technology doesn't just have to solve grand challenges. Sometimes, it can tackle decidedly everyday problems -- like, say, improving a cucumber farm. Makoto Koike has built a cucumber sorter that uses Google's TensorFlow machine learning technology to save his farmer parents a lot of work. The system uses a camera-equipped Raspberry Pi 3 to snap photos of the veggies and send the shots to a small TensorFlow neural network, where they're identified as cucumbers. After that, it sends images to a larger network on a Linux server to classify the cucumbers by attributes like color, shape and size. An Arduino Micro uses that info to control the actual sorting, while a Windows PC trains the neural network with images.
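The flow described above (a small check for "is this a cucumber?", then a larger classifier for grading, then a sorting command) can be sketched in a few lines. This is an illustrative sketch only: the neural networks are replaced with simple hand-written rules, and every name, feature, threshold and grade label below is hypothetical rather than taken from Koike's system.

```python
from dataclasses import dataclass


@dataclass
class Photo:
    """Stand-in for a camera shot; a real system would work on pixels."""
    is_green: bool
    length_cm: float
    curvature: float  # 0.0 means perfectly straight


def small_network(photo: Photo) -> bool:
    """Stage 1 stand-in: decide whether the photo shows a cucumber at all."""
    return photo.is_green and photo.length_cm > 5.0


def large_network(photo: Photo) -> str:
    """Stage 2 stand-in: grade the cucumber by shape and size."""
    if photo.curvature < 0.1 and photo.length_cm >= 20.0:
        return "top"
    if photo.curvature < 0.3:
        return "standard"
    return "irregular"


def sort_command(photo: Photo) -> str:
    """Produce the instruction a sorting controller would act on."""
    if not small_network(photo):
        return "reject"
    return f"bin:{large_network(photo)}"


print(sort_command(Photo(is_green=True, length_cm=22.0, curvature=0.05)))
print(sort_command(Photo(is_green=True, length_cm=15.0, curvature=0.5)))
print(sort_command(Photo(is_green=False, length_cm=22.0, curvature=0.05)))
```

Splitting the work this way means the cheap first stage can run on the low-powered camera device, and only shots that pass it go to the heavier classifier.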

Computers learn to predict high-fives and hugs

Deep learning systems can already detect objects in a given scene, including people, but they can't always make sense of what people are doing in that scene. Are they about to get friendly? MIT CSAIL's researchers might help. They've developed a machine learning algorithm that can predict when two people will high-five, hug, kiss or shake hands. The trick is to have multiple neural networks predict different visual representations of people in a scene and merge those guesses into a broader consensus. If the majority foresees a high-five based on arm motions, for example, that's the final call.
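The "majority foresees a high-five" step can be illustrated with a simple vote count. This is a simplified sketch, not the researchers' method: it assumes each network has already been reduced to a single action label, and the function name and sample votes are hypothetical.

```python
from collections import Counter


def consensus(predictions):
    """Return the action label that the most networks predicted.

    Ties go to whichever label appeared first in the list.
    """
    if not predictions:
        raise ValueError("need at least one prediction")
    label, _count = Counter(predictions).most_common(1)[0]
    return label


# Hypothetical outputs from five networks watching the same scene.
votes = ["high-five", "hug", "high-five", "handshake", "high-five"]
print(consensus(votes))
```

A plain vote ignores how confident each network is. Weighting votes by confidence would be a natural refinement, though the blurb does not say whether the MIT system does that.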

Apple iOS 10 uses AI to help you find photos and type faster

Apple is making artificial intelligence a big, big cornerstone of iOS 10. To start, the software uses on-device computer vision to detect both faces and objects in photos. It'll recognize a familiar friend, for instance, and can tell that there's a mountain in the background. While this is handy for tagging your shots, the feature really comes into its own when you let the AI do the hard work. There's a new Memories section in the Photos app that automatically organizes pictures based on events, people and places, complete with related memories (such as similar trips) and smart presentations. Think of it as Google Photos without having to go online.

FBI is building a tattoo tracking AI to identify criminals

AI-powered image recognition is all the rage these days, but it could have a sinister side too. Since 2014, the National Institute of Standards and Technology has been working with the FBI to develop better automated tattoo recognition tech, according to a study by the Electronic Frontier Foundation. The idea is to develop profiles of people based on their body art. The EFF says that because tattoos are a form of speech, "any attempt to identify, profile, sort or link people based on their ink raises significant First Amendment questions."

Microsoft's Translator app gets image recognition on Android

Microsoft Translator's image translation is simple: point your phone's camera at a sign or menu in any of the 21 supported languages and the app translates it in real time onscreen. The app's iOS version got the feature back in February, and now it comes to the Android one. A few other features and language additions come along with the update as well.

Scientists can identify terrorists by their victory signs

To no one's surprise, many terrorists aren't willing to divulge their identities -- they'd rather cover themselves head-to-toe than risk a drone strike or police bust. Researchers, however, may have made it that much harder for these extremists to hide. They've developed a biometric identification technique that can pinpoint people by the V-for-victory hand signs they make. By measuring finger points, the gap between them and two palm points, scientists can identify someone even when there are no other telltale cues. In some cases, it was more than 90 percent accurate.

Google neural network tells you where photos were taken

It's easy to identify where a photo was taken if there's an obvious landmark, but what about landscapes and street scenes where there are no dead giveaways? Google believes artificial intelligence could help. It just took the wraps off of PlaNet, a neural network that relies on image recognition technology to locate photos. The code looks for telltale visual cues such as building styles, languages and plant life, and matches those against a database of 126 million geotagged photos organized into 26,000 grids. It could tell that you took a photo in Brazil based on the lush vegetation and Portuguese signs, for instance. It can even guess the locations of indoor photos by using other, more recognizable images from the album as a starting point.
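Organizing geotagged photos into grid cells turns "where was this taken?" into a pick-the-cell problem. The sketch below shows one way to bucket photos by location. The blurb does not say how the cells are drawn, so a uniform latitude/longitude grid is assumed here for illustration. The cell size, function names and sample coordinates are all hypothetical.

```python
from collections import defaultdict


def grid_cell(lat, lon, cell_deg=5.0):
    """Map a coordinate to the index of a uniform lat/lon grid cell."""
    if not (-90.0 <= lat <= 90.0 and -180.0 <= lon <= 180.0):
        raise ValueError("coordinate out of range")
    rows = int(180 / cell_deg)
    cols = int(360 / cell_deg)
    # Clamp so the north pole and the +180 meridian fall in the last cell.
    row = min(int((lat + 90.0) / cell_deg), rows - 1)
    col = min(int((lon + 180.0) / cell_deg), cols - 1)
    return row * cols + col


def bucket_photos(photos):
    """Group (name, lat, lon) tuples by the grid cell they fall in."""
    cells = defaultdict(list)
    for name, lat, lon in photos:
        cells[grid_cell(lat, lon)].append(name)
    return dict(cells)


# Hypothetical geotagged photos: two in the same area, one elsewhere.
photos = [
    ("beach.jpg", -22.9, -43.2),
    ("street.jpg", -23.0, -43.4),
    ("tower.jpg", 48.9, 2.3),
]
buckets = bucket_photos(photos)
print(buckets)
```

With photos bucketed like this, a classifier can be trained to output one cell index per image instead of an exact coordinate.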

IBM's Watson can sense sadness in your writing

Artificial intelligence won't be truly convincing until it can understand emotions. What good is a robot that can't understand the nuances of what you're really saying? IBM thinks it can help, though. It just gave Watson an upgrade that includes a much-improved Tone Analyzer. The AI now detects a wide range of emotions in your writing, including joy or sadness. If you tell everyone that you're fine when you're really down in the dumps, Watson should pick up on that subtle melancholy. Watson is also better at spotting social tendencies like extroversion, and studies whole sentences (important for context) rather than looking at individual words.

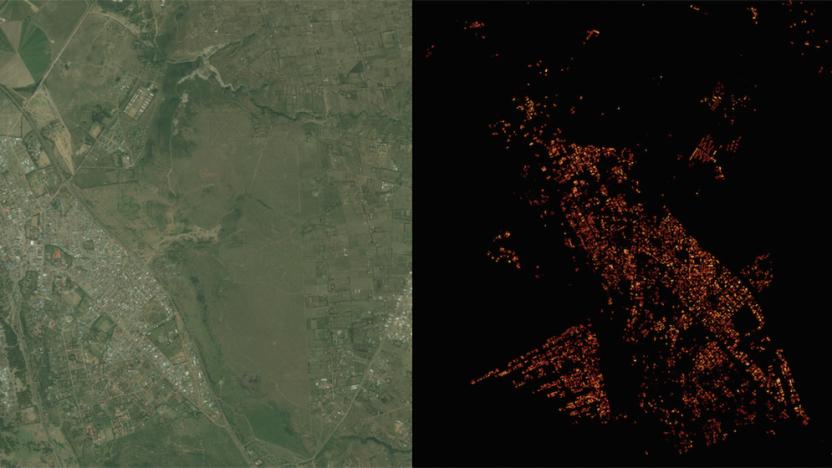

Facebook created a super-detailed population density map

Facebook's quest to get the world online is paying some unexpected dividends. Its Connectivity Lab is using image recognition technology to create population density maps that are much more accurate (to within 10m) than previous data sets -- where earlier examples are little more than blobs, Facebook shows even the finer aspects of individual neighborhoods. The trick was to modify the internet giant's existing neural network so that it could quickly determine whether or not buildings are present in satellite images. Instead of spending ages mapping every last corner of the globe, Facebook only had to train its network on 8,000 images and set it loose.