Adobe is using AI to catch Photoshopped images

The Photoshop creator is trying to snuff out fake photos.

While picture editors have tweaked images for decades, modern tools like Adobe Photoshop let them alter photos to the point of complete fabrication. Think of sharks swimming in the streets of New Jersey after Hurricane Sandy, or someone flying a "where's my damn dinner?" banner over a women's march. Those images were fake, but clever manipulation can trick news outlets and social media users into thinking they're real. Bombastic pictures can go viral long before anyone figures out they're phony, and by then it's nearly impossible to let everyone who shared one know it's a sham.

Adobe, certainly aware of how complicit its software is in the creation of fake news images, is working on artificial intelligence that can spot the markers of phony photos. In other words, the maker of Photoshop is tapping into machine learning to find out if someone has Photoshopped an image.

Using AI to find fake images is a way for Adobe to help "increase trust and authenticity in digital media," the company says. That brings it in line with the likes of Facebook and Google, which have stepped up their efforts to fight fake news.

Whenever someone alters an image, unless their work is pixel-perfect, they leave behind indicators that the photo has been modified. Metadata and watermarks can help determine a source image, and forensics can probe factors like lighting, noise distribution and edges on the pixel level to find inconsistencies. If a color is slightly off, for instance, forensic tools can flag it. But Adobe wagers that it could employ AI to find telltale signs of manipulation faster and more reliably.

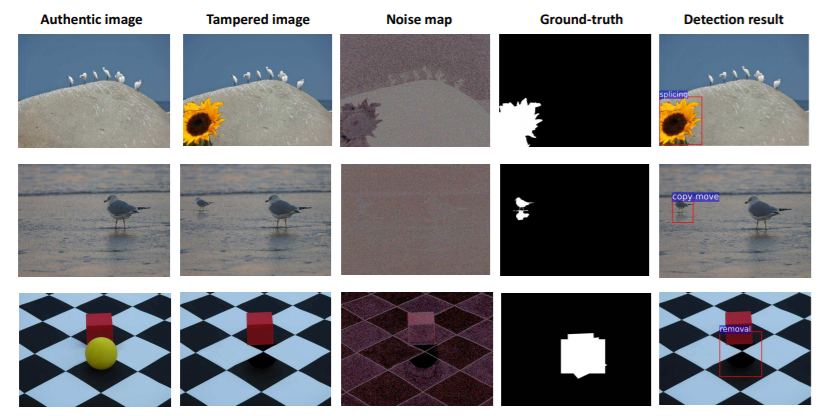

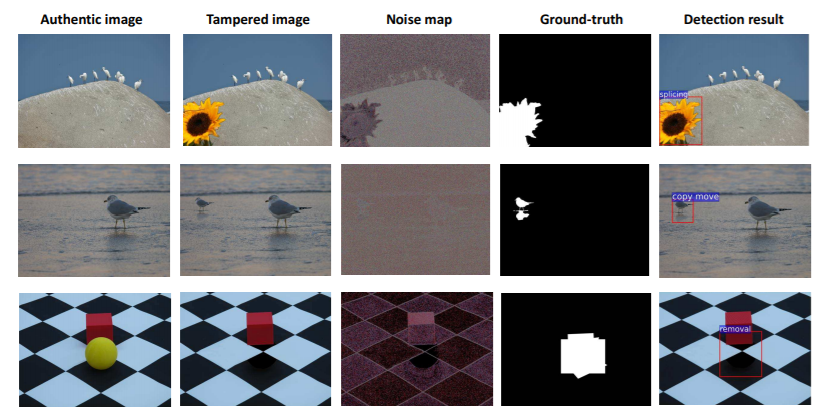

The AI looks for three types of manipulation: cloning, splicing and removal. Cloning (or copy-move) is when objects are copied or moved within an image, such as parts of a crowd duplicated to make it seem like there are more people in a scene. Splicing is where someone smushes together aspects of two different images, like the aforementioned sharks, which were grabbed from one photo and blended into another showing flooded streets. Removal is when someone erases an object from a photo and fills in the gap.
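
To make the cloning case concrete, here is a minimal Python sketch of the oldest trick in the copy-move playbook: look for patches of pixels that appear more than once in the same image. This is not Adobe's detector, which is a trained model. It is a brute-force illustration that assumes a grayscale image stored as a NumPy array, and the names `find_duplicate_blocks`, `block` and `min_offset` are made up for this example.

```python
import numpy as np
from collections import defaultdict


def find_duplicate_blocks(gray, block=8, min_offset=8):
    """Return pairs of (row, col) corners whose block x block patches are identical.

    Illustrative only: exact matching breaks as soon as the copied region is
    rescaled, rotated, blurred or recompressed.
    """
    h, w = gray.shape
    seen = defaultdict(list)
    for y in range(h - block + 1):
        for x in range(w - block + 1):
            patch = gray[y:y + block, x:x + block]
            # Skip perfectly flat patches (clear sky, blown-out highlights):
            # they repeat everywhere without anyone having cloned anything.
            if patch.std() == 0:
                continue
            seen[patch.tobytes()].append((y, x))

    pairs = []
    for corners in seen.values():
        for i in range(len(corners)):
            for j in range(i + 1, len(corners)):
                (y1, x1), (y2, x2) = corners[i], corners[j]
                # Require some distance so neighboring patches are not reported.
                if abs(y1 - y2) >= min_offset or abs(x1 - x2) >= min_offset:
                    pairs.append((corners[i], corners[j]))
    return pairs
```

Calling `find_duplicate_blocks(image)` on an image where a region has been pasted elsewhere untouched returns the matching corner pairs. A real forger will usually resize or feather the clone, which is exactly the kind of case where a learned model is expected to do better than exact matching.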

As is typical with machine learning methods, the Adobe team, along with University of Maryland researchers, fed the AI tens of thousands of phony images to teach it what to look for. The team trained the AI to figure out the type of manipulation used on an image and to flag the area of a photo that someone changed. The AI can do this in seconds, Adobe says.

The AI uses a pair of techniques to hunt for artifacts. It looks for changes to the red, green and blue color values of pixels. It also examines noise, the random variations of color and brightness caused by a camera's sensor or software manipulations. Those noise patterns are often unique to cameras or photos, so the AI can pick up on inconsistencies, especially in spliced images.
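
The noise idea can be sketched by hand, too. The Python below is a rough statistical stand-in, not the trained model Adobe describes: it strips away image content with a simple high-pass filter, measures how noisy each tile of the picture is, and flags tiles that don't match the rest. It assumes a grayscale NumPy array, and the function names, the 16-pixel tile size and the threshold of 3 are all arbitrary choices made for illustration.

```python
import numpy as np


def noise_residual(gray):
    """Each pixel minus the mean of its 3x3 neighborhood (a crude high-pass filter)."""
    g = gray.astype(np.float64)
    h, w = g.shape
    padded = np.pad(g, 1, mode="edge")
    local_mean = sum(
        padded[dy:dy + h, dx:dx + w] for dy in range(3) for dx in range(3)
    ) / 9.0
    return g - local_mean


def block_noise_map(gray, block=16):
    """Standard deviation of the noise residual inside each block x block tile."""
    res = noise_residual(gray)
    rows, cols = res.shape[0] // block, res.shape[1] // block
    # Drop any partial tiles at the right and bottom edges.
    tiles = res[:rows * block, :cols * block].reshape(rows, block, cols, block)
    return tiles.std(axis=(1, 3))


def flag_outlier_blocks(noise_map, z_thresh=3.0):
    """Mark tiles whose noise level sits far from the image-wide median."""
    med = np.median(noise_map)
    mad = np.median(np.abs(noise_map - med))
    scale = 1.4826 * mad + 1e-9  # robust stand-in for a standard deviation
    return np.abs(noise_map - med) / scale > z_thresh
```

Running `flag_outlier_blocks(block_noise_map(image))` gives a grid of true/false values, one per tile. A region pasted in from a noisier or cleaner camera tends to light up, though so do busy textures like foliage or gravel, which is one reason a fixed threshold like this is far cruder than a model trained on tens of thousands of examples.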

Adobe notes these techniques are not perfect, though they "provide more possibility and more options for managing the impact of digital manipulation, and they potentially answer questions of authenticity more effectively." The research team says it might harness the AI to examine other types of artifacts, like those caused by compression when a file is saved repeatedly.

Still, there could be a long way to go before this AI becomes a viable product. Photoshop is unquestionably a great tool for touching up images and creating memorable art, but people have used it to poison the well of legitimate, newsworthy photos, and it seems Adobe wants to offer a stronger antidote.