Bumble will use AI to detect unwanted nudes

A new “private detector” will warn users about graphic images.

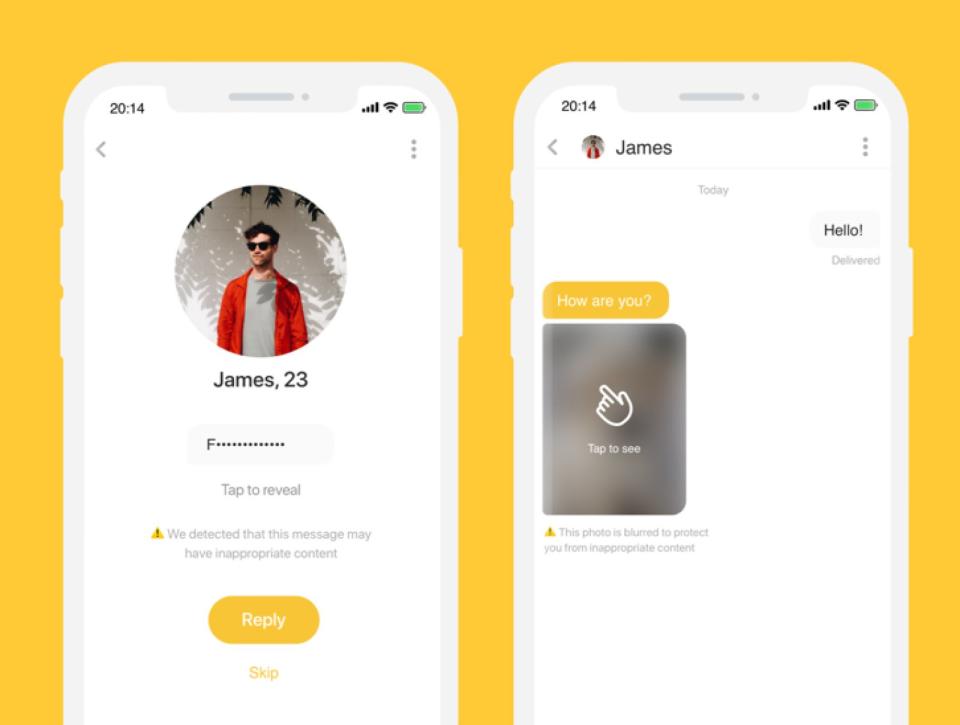

Artificial intelligence will soon weed out any NSFW photos a match sends to you on Bumble. The dating app, which requires women to make the first contact, said it is launching a "private detector" to warn users about lewd images. Bumble CEO Whitney Wolfe Herd and Andrey Andreev, CEO of the parent company whose dating apps include Bumble, Badoo, Chappy and Lumen, made the announcement Wednesday in a press release.

Beginning in June, all images sent on Bumble and the other apps will be screened by the AI-assisted "private detector." If a photo is suspected to be lewd or inappropriate, recipients will be warned before they open it and can choose to view the image, block it or report it to moderators.

Unwanted sexual advances are a daily reality for many women on the internet, and the problem is only magnified on dating apps. A 2016 Consumers' Research study found that 57 percent of women report feeling harassed on dating apps, compared to 21 percent of men. Unlike Tinder or Hinge, Bumble allows its users to send photos to people they match with on the dating app. All images sent on Bumble are automatically blurred out; recipients must long-press an image in order to view it. While these safeguards keep users from being bombarded with sexual images on Bumble, the company doesn't feel they are enough.

"The sharing of lewd images is a global issue of critical importance and it falls upon all of us in the social media and social networking worlds to lead by example and to refuse to tolerate inappropriate behavior on our platforms," said Andreev in a press release.

Bumble claims that its private detector feature is 98 percent "effective." A Bumble spokesperson told Engadget that the AI tool will also be able to detect pictures of guns and shirtless mirror selfies, both of which are banned on the app.

Wolfe Herd is currently working with Texas state lawmakers to make the sharing of lewd images a punishable crime. The bill championed by Wolfe Herd would make it a Class C misdemeanor, punishable by a fine of $500, to send a lewd photo without a recipient's consent.

A Bumble spokesperson told Engadget that an AI tool to detect inappropriate messages and harmful language is also under development. Bumble won't be the first to use AI to filter profiles and messages. While Tinder does not allow photo sharing on its app, it has been using an AI tool to automatically scan profiles for "red-flag language and images."