What do made-for-AI processors really do?

Will a dedicated “neural processing unit” really affect you?

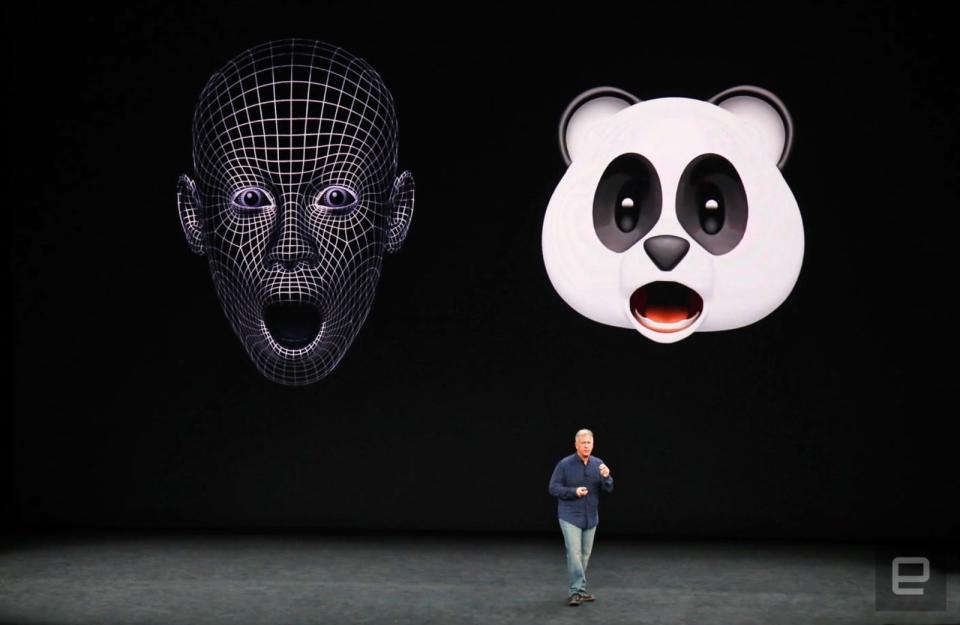

Tech's biggest players have fully embraced the AI revolution. Apple, Qualcomm and Huawei have made mobile chipsets that are designed to better tackle machine-learning tasks, each with a slightly different approach. Huawei launched its Kirin 970 at IFA this year, calling it the first chipset with a dedicated neural processing unit (NPU). Then, Apple unveiled the A11 Bionic chip, which powers the iPhone 8, 8 Plus and X. The A11 Bionic features a neural engine that the company says is "purpose-built for machine-learning," among other things.

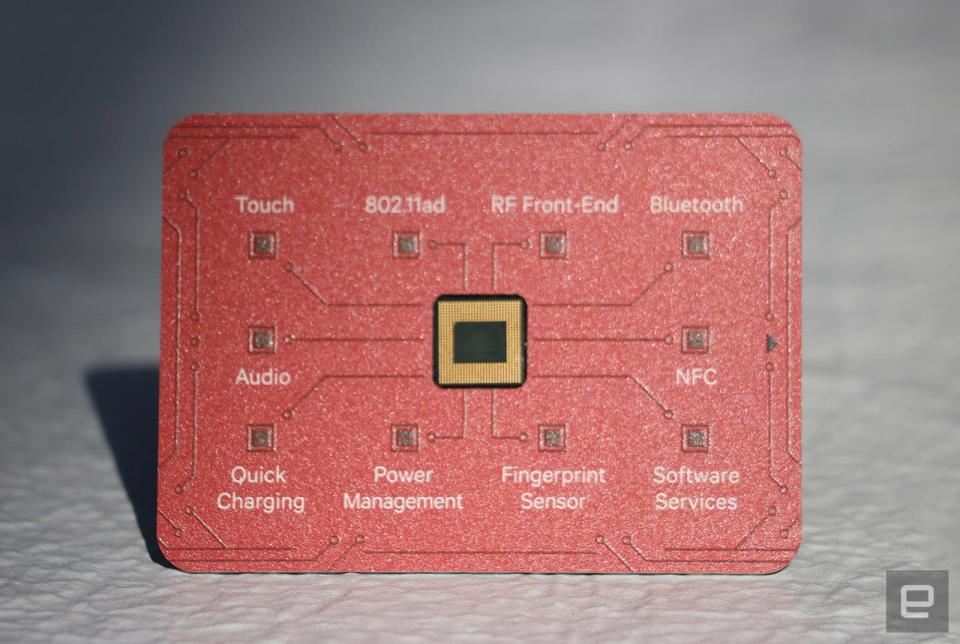

Last week, Qualcomm announced the Snapdragon 845, which sends AI tasks to the most suitable cores. There's not a lot of difference between the three companies' approaches -- it ultimately boils down to the level of access each company offers to developers and how much power each setup consumes.

Before we get into that, though, let's figure out if an AI chip is really all that different from existing CPUs. A term you'll hear a lot in the industry with reference to AI lately is "heterogeneous computing." It refers to systems that use multiple types of processors, each with specialized functions, to gain performance or save energy. The idea isn't new -- plenty of existing chipsets use it -- the three new offerings in question just employ the concept to varying degrees.

Smartphone CPUs from the last three years or so have used ARM's big.LITTLE architecture, which pairs relatively slower, energy-saving cores with faster, power-draining ones. The main goal is to use as little power as possible, to get better battery life. Some of the first phones using such architecture include the Samsung Galaxy S4 with the company's own Exynos 5 chip, as well as Huawei's Mate 8 and Honor 6.

This year's "AI chips" take this concept a step further by either adding a dedicated component to execute machine-learning tasks or, in the case of the Snapdragon 845, using other low-power cores to do so. For instance, the Snapdragon 845 can tap its digital signal processor (DSP) to tackle long-running tasks that require a lot of repetitive math, like listening out for a hotword. Activities like image recognition, on the other hand, are better managed by the GPU, Qualcomm Director of Product Management Gary Brotman told Engadget. Brotman heads up AI and machine learning for the Snapdragon platform.
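To make that division of labor concrete, here is a minimal Python sketch of a routing table built only from Brotman's two examples. The task names, the `ROUTES` table and the `route` function are hypothetical illustrations, not part of any Qualcomm software.

```python
from enum import Enum


class Processor(Enum):
    CPU = "cpu"
    GPU = "gpu"
    DSP = "dsp"


# Hypothetical routing table; the two entries mirror the examples in the text.
ROUTES = {
    "hotword_detection": Processor.DSP,  # long-running, repetitive math
    "image_recognition": Processor.GPU,  # Brotman's example of a GPU-friendly task
}


def route(task: str) -> Processor:
    """Return the processor assigned to a task, defaulting to the CPU."""
    return ROUTES.get(task, Processor.CPU)


if __name__ == "__main__":
    for task in ("hotword_detection", "image_recognition", "text_prediction"):
        print(f"{task} -> {route(task).value}")
```

Anything not listed in the table simply falls back to the CPU, which is an assumption made here to keep the sketch short.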

Meanwhile, Apple's A11 Bionic uses its dedicated neural engine to speed up Face ID, Animoji and some third-party apps. That means when you fire up those processes on your iPhone X, the A11 turns on the neural engine to carry out the calculations needed to either verify who you are or map your facial expressions onto talking poop.

On the Kirin 970, the NPU takes over tasks like scanning and translating words in pictures taken with Microsoft's Translator, which is the only third-party app so far to have been optimized for this chipset. Huawei said its "HiAI" heterogeneous computing structure maximizes the performance of most of the components on its chipset, so it may be assigning AI tasks to more than just the NPU.

Differences aside, this new architecture means that machine-learning computations, which used to be processed in the cloud, can now be carried out more efficiently on a device. By using parts other than the CPU to run AI tasks, your phone can do more things simultaneously, so you are less likely to encounter lag when waiting for a translation or finding a picture of your dog.

Plus, running these processes on your phone instead of sending them to the cloud is better for your privacy, because it reduces the opportunities for hackers to get at your data.

Another big advantage of these AI chips is energy savings. Power is a precious resource that needs to be allocated judiciously because some of these actions can be repeated all day. The GPU tends to suck more juice, so if a task is something the more energy-efficient DSP can perform with similar results, it's better to tap the latter.
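That trade-off can be sketched in a few lines of Python: among the processors whose results are close enough to the best one, pick the one that draws the least power. The `Option` type, the `pick_processor` function and every number below are invented for illustration; they are not measurements from any of these chips.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Option:
    processor: str
    accuracy: float    # quality of the result, 0.0 to 1.0 (made-up values)
    milliwatts: float  # average power draw for the task (made-up values)


def pick_processor(options: List[Option], tolerance: float = 0.02) -> Option:
    """Pick the lowest-power option whose accuracy is within `tolerance` of the best."""
    best_accuracy = max(o.accuracy for o in options)
    good_enough = [o for o in options if best_accuracy - o.accuracy <= tolerance]
    return min(good_enough, key=lambda o: o.milliwatts)


# Illustrative figures only.
OPTIONS = [
    Option("cpu", accuracy=0.95, milliwatts=1400.0),
    Option("gpu", accuracy=0.95, milliwatts=900.0),
    Option("dsp", accuracy=0.94, milliwatts=250.0),
]

if __name__ == "__main__":
    print(pick_processor(OPTIONS).processor)
```

With these sample figures the DSP wins because its result is within the tolerance; setting `tolerance=0.0` would force the choice back to the GPU.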

To be clear, it's not the chipsets themselves that decide which cores to use when executing certain tasks. "Today, it's up to developers or OEMs where they want to run it," Brotman said. Programmers can use supported libraries like Google's TensorFlow (or more specifically its Lite mobile version) to dictate which cores run their models. Qualcomm, Huawei and Apple all work with the most popular options like TensorFlow Lite and Facebook's Caffe2. Qualcomm also supports the newer Open Neural Network Exchange (ONNX), while Apple adds compatibility for even more machine-learning models via its Core ML framework.
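In practice, "up to developers" tends to look like a preference list with a fallback. The Python sketch below is a hypothetical illustration of that idea; `choose_target` and the target names are assumptions made for this example and are not calls from TensorFlow Lite, Caffe2, ONNX or Core ML.

```python
from typing import Iterable, Sequence


def choose_target(preferred: Sequence[str], available: Iterable[str]) -> str:
    """Return the first preferred target the device offers, else fall back to the CPU."""
    available_set = set(available)
    for target in preferred:
        if target in available_set:
            return target
    return "cpu"


if __name__ == "__main__":
    # A developer asks for an NPU first, then a GPU; this device has no NPU.
    print(choose_target(["npu", "gpu"], {"cpu", "gpu", "dsp"}))
```

The same app could then ship to phones with and without a dedicated AI block, running on whatever suitable hardware each one has.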

So far, none of these chips have delivered very noticeable real-world benefits. Chip makers will tout their own test results and benchmarks, which are ultimately meaningless until AI processes become a more significant part of our daily lives. We're in the early stages of on-device machine learning being implemented, and developers who have made use of the new hardware are few and far between.

Right now, though, it's clear that the race is on to make carrying out machine learning-related tasks on your device much faster and more power-efficient. We'll just have to wait a while longer to see the real benefits of this pivot to AI.