How Instagram’s anti-vaxxers fuel coronavirus conspiracy theories

The coronavirus pandemic has given rise to a new wave of viral disinformation.

Instagram’s efforts to curb health misinformation have done little to stem the flow of conspiracy theories and misinformation about vaccines. The app continues to be a hotbed of anti-vaccine conspiracy theories, which often spread without the promised fact-checks and are further fueled by Instagram’s search and recommendation algorithms.

The problem has only escalated during the COVID-19 pandemic, which has given rise to a new surge of viral disinformation and conspiracy theories, many of them widely promoted by the anti-vaccination movement. At the same time, many of Facebook’s content moderators have been unable to work and review reports of potentially rule-breaking content.

Instagram’s ‘rabbit hole’ problem

Like Facebook, Instagram doesn’t ban anti-vaccine content, though the company claims it has attempted to make it less visible to users. The company blocks some hashtags and says it tries to make anti-vaccine content harder to find in public areas of the app, like Explore. Yet accounts promoting conspiracy theories and inaccurate information about vaccines dominate the app’s search results.

When you search the word “vaccine” on Instagram, the app recommends dozens of anti-vaccine accounts in its top results. Accounts with names such as “Vaccines_revealed,” “Vaccinesuncovered,” “vaccines_kill_,” “vaccinesaregenocide_” and “say_no_to_bill_gates_vaccine” are front and center. While some of these accounts are popular, with nearly 100,000 followers, others have only a few hundred. Yet Instagram’s algorithm consistently recommends these accounts, and not a single verified health organization, as the most relevant accounts for the search term “vaccine.”

Some of these accounts, many of them aimed at parents, are meant to sow fear and post clearly spurious claims like “vaccines are causing autism rates to skyrocket.” Many have since pivoted to posting conspiracy theories about Bill Gates and the coronavirus pandemic.

Instagram’s recommendation algorithm also pushes users toward accounts spreading conspiracy theories, including those about vaccines and COVID-19.

I made a new Instagram account, searched “vaccine,” and then followed a few of the top results mentioned above. Within seconds, the app began suggesting I follow more anti-vaccine pages and other accounts peddling conspiracy theories, including QAnon. This isn’t a new phenomenon, either. Vice noted last year that Instagram’s follow suggestions could easily lead users down an anti-vax rabbit hole. The company said at the time it would look into it, but it doesn’t appear much has changed.

Not only do the suggestions still appear, but they now push users toward other fringe conspiracy theories. I only had to follow four anti-vaccine accounts before Instagram began recommending popular QAnon pages, one of which prominently linked to Plandemic, the documentary that Facebook and other platforms have struggled to banish. A couple of days later, the app sent push notifications recommending I follow two more QAnon pages.

An Instagram spokesperson reiterated that the company aims to make misinformation about vaccines harder to find in public areas of the app.

Searching for specific hashtags can also lead users into a rabbit hole of conspiracy theories.

A search for #vaccine first prompts you to visit the CDC’s website and returns relatively sanitized results, but Instagram’s hashtag search also recommends other, less filtered “related” search terms, including #vaccineinjuryadvocate and #vaççineskillandinjure. (Swapping in the cedilla character “ç” for a plain “c” is a common tactic anti-vaccine advocates use to evade detection, as Coda reported last year.)

And when you look at the results for these recommended hashtags, like #vaccineinjuryadvocate, Instagram suggests still more hashtags tied to other conspiracy theories, including ones about the coronavirus: #plandemic, #governmentconspiracy, #populationcontrol and #scamdemic. (Instagram has since blocked search results for #plandemic, which had more than 26,000 posts, according to the app.)

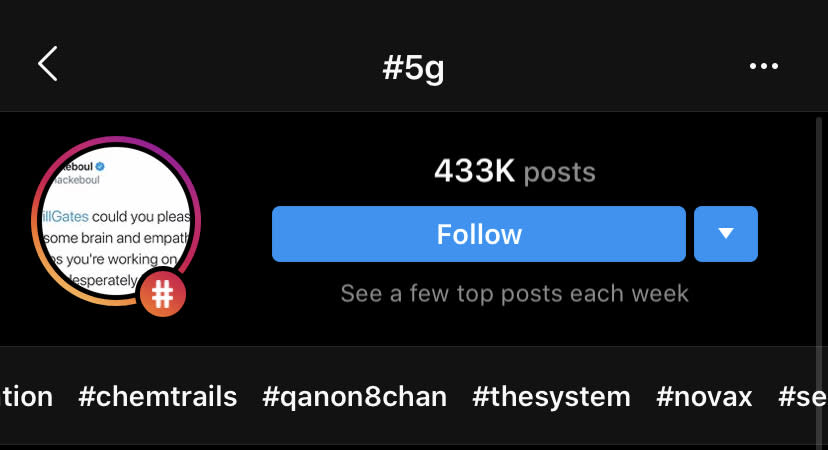

Instagram’s habit of recommending hashtags associated with conspiracy theories isn’t limited to vaccines, either. Search #5G and the app surfaces “related hashtags” like #fuckbillgates, #billgatesisevil, #chemtrails and #coronahoax. Other seemingly innocuous suggestions, like #5Gtowers, also lead to conspiracy theories such as #projectbluebeam, #markofthebeast and #epsteindidntkillhimself.

Misinformation on Instagram

None of these are new issues for Instagram, but the photo-sharing app’s misinformation problem has often escaped the scrutiny applied to Facebook. When company officials testified in front of Congress, they downplayed Instagram’s role in spreading Russian disinformation. The Senate Intelligence Committee’s subsequent report found that Instagram “was the most effective tool used by the IRA,” Russia’s Internet Research Agency.

The problem, according to those who study it, is that misinformation on Instagram often takes the form of memes and other images that are harder for the company’s automated systems to detect and for its human reviewers to parse. And while Instagram is building out new systems to address this, images can be a much more effective conduit for bad actors, says Paul Barrett, the deputy director of NYU’s Stern Center for Business and Human Rights.

“Disinformation, which would include anti-vaxxer material, is increasingly a visual game. This is not something that's done exclusively or even primarily anymore via big blasts of text,” Barrett said. “Visual material makes it easy to digest, and something that's not going to seem threatening or overbearing. And I think as a result that makes Instagram appealing.”

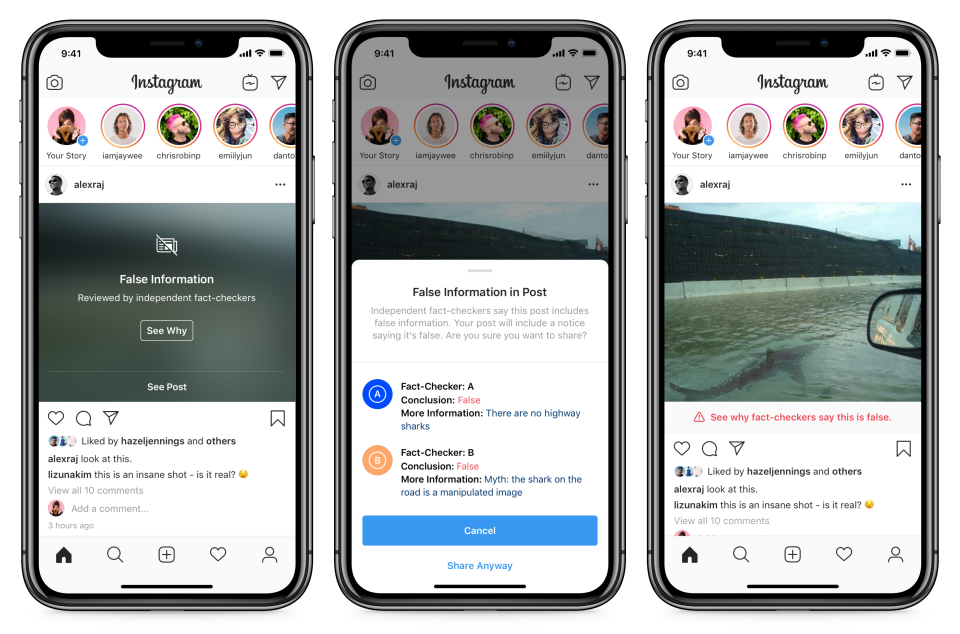

Yet Instagram has been much slower to deal with its misinformation problem than Facebook. The photo-sharing app didn’t implement any fact-checking efforts until last May — nearly three years after Facebook began debunking posts with outside fact-checkers. And the app has only recently moved to make debunked posts less visible in users’ feeds.

And though Instagram, like Facebook, has prioritized coronavirus misinformation it considers “harmful,” the company apparently doesn’t consider anti-vaccine content, which researchers have linked to measles outbreaks and other instances of actual harm, to be as urgent a problem as some coronavirus conspiracies.

“We're prioritizing reviewing certain types of content, like child safety, suicide and self injury, terrorism and harmful misinformation related to COVID, to make sure that we're handling the most dangerous issues,” Mark Zuckerberg said during a call with reporters to discuss the company’s content moderation efforts this week.

When asked whether the company was prioritizing anti-vaccine content given its links to coronavirus misinformation, Facebook’s VP of integrity, Guy Rosen, said, “Health-related harm is something that’s very much top of mind and very much something that we want to prioritize.”

An Instagram spokesperson told Engadget the company doesn’t bar anti-vaccination content, but noted it has removed some posts with misinformation in response to a deadly measles outbreak in Samoa and a polio resurgence in Pakistan. Officials in both countries have blamed misinformation for rising anti-vaccination sentiment.

In most cases, though, the company doesn’t remove such content outright, instead trying to make it less visible or adding “false information” labels when fact-checkers have debunked it.

But fact-checking might not be enough, according to Barrett. “Facebook is so outmatched by the scale of the problem, it's almost a little naive to assume that fact-checking is — even if it's done vigorously — that you're going to be able to catch a substantial majority of false information that's being posted on a continuous basis,” Barrett says. “When you're talking about billions of posts a day, even if you have Facebook's 60 fact-checking organizations around the world, a lot of stuff is going to slip by them.”