Computer Science and Artificial Intelligence Laboratory

Latest

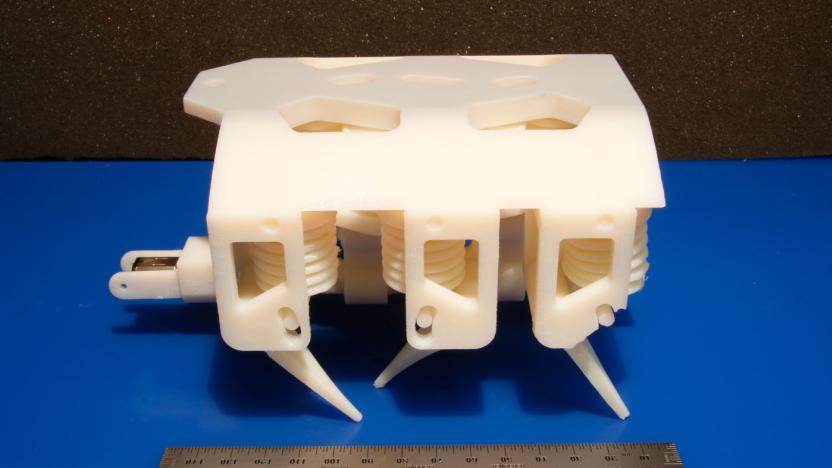

MIT 3D prints a complete walking robot

A team from MIT's Computer Science and Artificial Intelligence Lab believes it has created a new way to 3D print whole robots. The breakthrough centers on what's being called "printable hydraulics," a way to create liquid-filled pumps during the manufacturing process itself. According to CSAIL director Daniela Rus, the technique "is a step towards the rapid fabrication of functional machines." She adds that "all you have to do is stick in a battery and motor and you have a robot that can practically walk right out of the printer."

Inefficient? MIT's new chip software doesn't know the meaning of the word

Would you rather have a power-hungry cellphone that could software-decode hundreds of video codecs, or a hyper-efficient system-on-chip that only processes H.264? These are the tough decisions mobile designers have to make, but perhaps not for much longer. MIT's Computer Science and Artificial Intelligence Laboratory has developed a solution that could spell the end for inefficient devices. Myron King and Nirav Dave have expanded Arvind's Bluespec software so engineers can tell it what outcomes they need and it'll decide on the most efficient design -- printing out hardware schematics in Verilog and software in C++. If this outcome-oriented system becomes widely adopted, we may never need to worry about daily recharging again, which is good, because we'll need that extra power to juice our sporty EV. [Image courtesy of MIT / Melanie Gonick]

MIT researchers develop the most fabulous gesture control technique yet

When looking for a cheap, reliable way to track gestures, Robert Wang and Jovan Popovic of MIT's Computer Science and Artificial Intelligence Laboratory came upon this notion: why not paint the operator's hands (or better yet, his Lycra gloves) in a manner that lets the computer distinguish the parts of the hand from one another, and the hand from the background? Starting with something that Howie Mandel might have worn in the 80s, the researchers are able to use a simple webcam to track the hands' locations and gestures -- with relatively little lag. The glove itself is split into twenty patches made up of ten different colors, and while there's no telling when this technology will be available to consumers, something tells us that when it does arrive it'll be very hard not to notice. Update: Just received a nice letter from Rob Wang, who points out that his website is the place to see more videos, get more info, and -- if you're lucky -- one day download the APIs so you can try it yourself. What are you waiting for?