neuralnetwork

Google DeepMind AI can imagine a 3D model from a 2D image

One of the hardest parts of building visual recognition systems for AI is programming what the human brain does effortlessly. When a person enters an unfamiliar area, for instance, it's easy to recognize and categorize what's there. Our brains are designed to take it in automatically at a glance, make inferences based on prior knowledge, and picture it from a different angle or recreate it in our heads. Google's DeepMind team is working on a neural network that can do similar things.

AI detects movement through walls using wireless signals

You don't need exotic radar, infrared or elaborate mesh networks to spot people through walls -- all you need are some easily detectable wireless signals and a dash of AI. Researchers at MIT CSAIL have developed a system (RF-Pose) that uses a neural network to teach RF-equipped devices to sense people's movement and postures behind obstacles. The team trained their AI to recognize human motion in RF by showing it examples of both on-camera movement and signals reflected from people's bodies, helping it understand how the reflections correlate to a given posture. From there, the AI could use wireless alone to estimate someone's movements and represent them using stick figures.

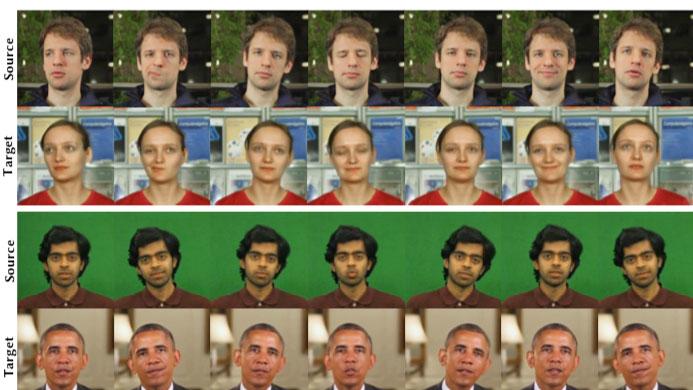

AI can transfer human facial movements from one video to another

Researchers have taken another step towards realistic, synthesized video. The team, made up of scientists in Germany, France, the UK and the US, used AI to transfer the head poses, facial expressions, eye motions and blinks of a person in one video onto an entirely different person in a separate video. The researchers say it's the first time a method has transferred these types of movements between videos, and the result is a series of clips that look incredibly realistic.

Facial recognition may help save endangered primates

Facial recognition isn't limited to humans. Researchers have developed a facial recognition system, PrimNet, that should help save endangered primates by tracking them in a non-invasive way. The neural network-based approach lets field workers keep tabs on chimpanzees, golden monkeys and lemurs just by snapping a photo of them with an Android app -- it'll either produce an exact match or turn up five close candidates. That's much gentler than tracking devices, which can stress or even hurt animals.
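The "exact match or five close candidates" behavior can be illustrated with a generic nearest-neighbor lookup. This is a minimal sketch, not PrimNet's actual pipeline. It assumes each face has already been turned into a numeric embedding vector by some network. The `identify` helper, the cosine-similarity measure and the 0.95 match threshold are all hypothetical choices.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    if na == 0 or nb == 0:
        return 0.0
    return dot / (na * nb)


def identify(query, gallery, match_threshold=0.95, k=5):
    """Return ("match", [name]) when the best score clears the (hypothetical)
    threshold, otherwise ("candidates", up to k best-scoring names)."""
    scored = sorted(
        ((cosine(query, emb), name) for name, emb in gallery.items()),
        reverse=True,  # highest similarity first
    )
    best_score, best_name = scored[0]
    if best_score >= match_threshold:
        return "match", [best_name]
    return "candidates", [name for _, name in scored[:k]]


if __name__ == "__main__":
    # Toy 2-D "embeddings" standing in for real face features.
    known = {"a": [1.0, 0.0], "b": [0.0, 1.0], "c": [1.0, -1.0]}
    print(identify([1.0, 0.0], known))
```

A query that closely matches one stored face returns a single name. Anything weaker falls back to the five highest-scoring individuals, which a field worker would then review by eye.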

Facebook AI turns whistling into musical masterpieces

Ever wish you could whistle a tune and have a computer build a whole song out of that idea? It might just happen. Facebook AI Research has developed an AI that can convert music in one style or genre into virtually any other. Instead of simply trying to repeat notes or style-specific traits, the approach uses unsupervised training to teach a neural network how to create similar noises all on its own. Facebook's system even prevents the AI from simply memorizing the audio signal by purposefully distorting the input.
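This article doesn't describe the exact distortion Facebook applied. The sketch below shows only one plausible kind, under stated assumptions. It picks a random stretch of raw audio, nudges its pitch by up to half a semitone through simple resampling, and adds a little noise, so reproducing the input sample-for-sample stops being a useful shortcut. The function names, segment length, shift range and noise level are all hypothetical.

```python
import math
import random


def resample(segment, factor):
    """Linear-interpolation resampling. factor > 1 shortens the segment,
    which raises its pitch (and speeds it up) when played at the same rate."""
    if not segment:
        return []
    n_out = max(1, int(len(segment) / factor))
    out = []
    for i in range(n_out):
        pos = i * factor
        lo = int(pos)
        hi = min(lo + 1, len(segment) - 1)
        frac = pos - lo
        out.append(segment[lo] * (1 - frac) + segment[hi] * frac)
    return out


def distort(signal, rng, seg_len=400, max_semitones=0.5, noise_std=0.01):
    """Hypothetical augmentation: shift one random segment by a small random
    number of semitones, then add light Gaussian noise to every sample."""
    start = 0 if len(signal) <= seg_len else rng.randrange(len(signal) - seg_len)
    segment = signal[start:start + seg_len]
    semitones = rng.uniform(-max_semitones, max_semitones)
    factor = 2 ** (semitones / 12)  # frequency ratio for that many semitones
    shifted = resample(segment, factor)
    out = signal[:start] + shifted + signal[start + seg_len:]
    return [x + rng.gauss(0.0, noise_std) for x in out]


if __name__ == "__main__":
    rng = random.Random(0)
    # One-eighth of a second of a 440 Hz tone sampled at 16 kHz.
    tone = [math.sin(2 * math.pi * 440 * t / 16000) for t in range(2000)]
    distorted = distort(tone, rng)
    print(len(tone), len(distorted))
```

Because plain resampling changes pitch and duration together, the output can be a few samples longer or shorter than the input. A production system would more likely use a proper pitch-shifting algorithm that preserves length.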

NVIDIA's AI fixes photos by recognizing what's missing

Most image editing tools aren't terribly bright when you ask them to fix a photo. They'll borrow content from adjacent pixels (such as Adobe's recently demonstrated context-aware AI fill), but they can't determine what should have been there -- and that's no good if you're trying to restore a decades-old photo where you know what's absent. NVIDIA might have a solution. It developed a deep learning system that restores photos by determining what should be present in blank or corrupted spaces. If there's a missing eye in a portrait, for instance, it knows to insert one even if the eye area is largely obscured.

Microsoft's AI-powered offline translation now runs on any phone

Like many translation apps, Microsoft Translator has only used AI to decipher phrases while you have an internet connection. That's not much help if you're on vacation in a place where mobile data is just a distant memory. Well, you won't have to sacrifice quality for much longer -- Microsoft has released offline language packs for Translator (currently on Android, iOS and Amazon Fire devices) that use AI for translation when you're offline, regardless of your hardware. The move not only provides higher-quality translations but also cuts the size of the language packs in half. If you're a jetsetter, you might not have to shuffle language packs whenever you visit a new country.

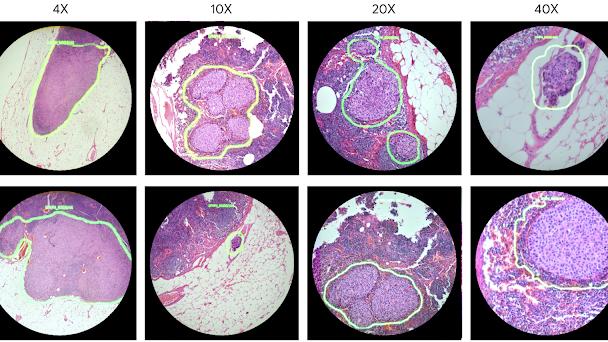

Google made an AR microscope that can help detect cancer

In a talk given today at the American Association for Cancer Research's annual meeting, Google researchers described a prototype of an augmented reality microscope that could be used to help physicians diagnose patients. When pathologists analyze biological tissue for signs of cancer -- and if so, how much and what kind -- the process can be quite time-consuming. It's a practice that Google thinks could benefit from deep learning tools. But in many places, adopting AI technology isn't feasible. The company, however, believes this microscope could allow groups with limited funds, such as small labs and clinics or facilities in developing countries, to benefit from these tools in a simple, easy-to-use manner. Google says the microscope could "possibly help accelerate and democratize the adoption of deep learning tools for pathologists around the world."

Microsoft says its AI can translate Chinese as well as a human

Run Chinese text through a translation website and the results tend to be messy, to put it mildly. You might get the gist of what's being said, but the sheer differences between languages usually lead to mangled sentences without any trace of fluency or subtlety. Microsoft might have just conquered that problem, however: it has developed an AI said to translate Chinese to English with the same quality as a human. You can even try it yourself. The trick, Microsoft said, was to change how it trained AI.

Robots that pick up and sort objects may improve warehouse efficiency

Sorting and organizing may not always be the most difficult tasks, but they can certainly get tedious. And while they may seem like prime examples of something we'd like robots to do for us, picking up, recognizing and sorting objects is actually a pretty difficult thing to teach a machine. But researchers at MIT and Princeton have developed a robot that can do just that, and in the future it could be used for tasks like warehouse sorting or cleaning up a disaster area.

ARM's latest processors are designed for mobile AI

ARM isn't content to offer processor designs that are kinda-sorta ready for AI. The company has unveiled Project Trillium, a combination of hardware and software ingredients designed explicitly to speed up AI-related technologies like machine learning and neural networks. The highlights, as usual, are the chips: ARM ML promises to be far more efficient for machine learning than a regular CPU or graphics chip, with two to four times the real-world throughput. ARM OD, meanwhile, is all about object detection. It can spot "virtually unlimited" subjects in real time at 1080p and 60 frames per second, and it focuses on people in particular; on top of recognizing faces, it can detect which way people are facing, as well as their poses and gestures.

DroNet's neural network teaches UAVs to navigate city streets

Scientists from ETH Zurich are training drones to navigate city streets by having them study things that already know how: cars and bicycles. The software, called DroNet, is a convolutional neural network that learns to fly and navigate from footage of those vehicles doing the same. The scientists collected their own training data by strapping GoPros to cars and bikes and riding around Zurich, and they also tapped publicly available videos on GitHub. So far, the drones have learned enough not to cross into oncoming traffic and to avoid obstacles like traffic pylons and pedestrians.

Facebook tries giving chatbots a consistent personality

Dig into the personalities of chatbots and you'll find that they're about as shallow as they were in the days of Eliza or Dr. Sbaitso. They respond with canned phrases and tend to be blithely unaware of what you've said. Facebook wants to fix that. Its research team has tested a new approach that gives bots more consistent personalities and more natural responses. Facebook taught its AI to look for patterns in a special 164,000-utterance data set, Persona-Chat, that included a handful of facts about a given bot's persona. An AI trying to mimic a real person would have five biographical statements to work with, such as details about its family and hobbies, each of them revised to say the same thing in a different way. Train existing chatbots on that data and you get AI that 'knows' what it likes, yet still maintains the context of a conversation and speaks relatively fluently.

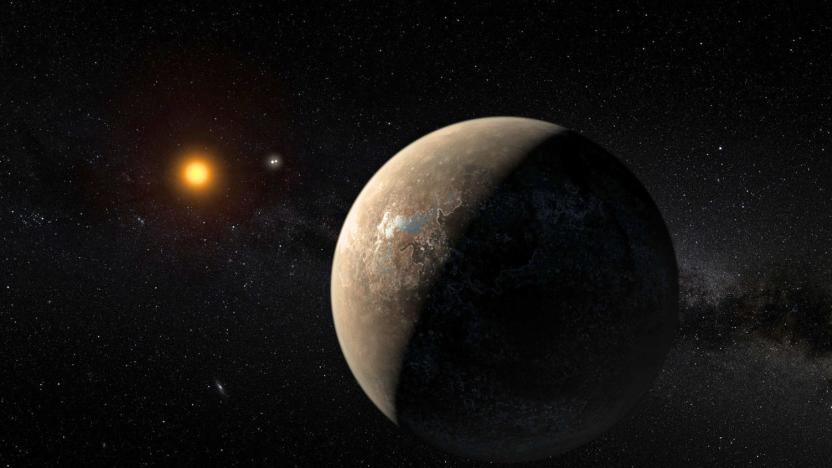

Google researchers use AI to spot distant exoplanets

Hunting for exoplanets is a very data-intensive and time-consuming task. Sifting through piles of data to find subtle signs of distant planets takes quite a lot of work, but researchers at Google have been developing a way to use AI to make the process faster and more effective.

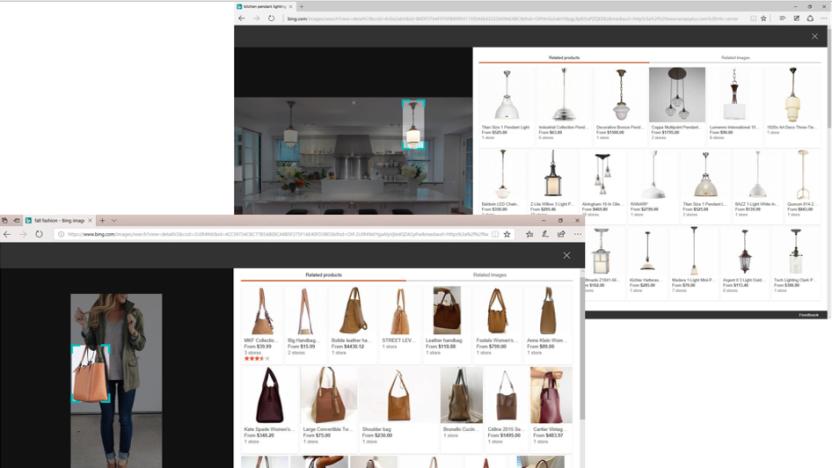

Microsoft unveils improved AI-powered search features for Bing

Microsoft unveiled a handful of new intelligent search features for Bing at an event held in San Francisco today. Powered by AI, the search updates are meant to provide more thorough answers and allow for more conversational or general search queries.

Neural network creates photo-realistic images of fake celebs

While Facebook and Prisma tap AI to transform everyday images and video into flowing artworks, NVIDIA is aiming for all-out realism. The graphics card maker just released a paper detailing its use of a generative adversarial network (GAN) to create high-definition photos of fake humans. The results, as illustrated in an accompanying video, are impressive and creepy in equal measure.

Android 8.1 preview unlocks your Pixel 2 camera's AI potential

Remember how Google said the Pixel 2 and Pixel 2 XL both have a custom imaging chip that's just lying idle? Well, you can finally use it... in a manner of speaking. Google has released its first Developer Preview for Android 8.1, and the highlight is arguably Pixel Visual Core support for third-party apps. Companies will have to write support into their apps before you notice the difference, but this should bring the Pixel 2 line's HDR+ photography to any app, not just Google's own camera software. You might not have to jump between apps just to get the best possible picture quality when you're sharing photos through your favorite social service.

Researchers use AI to banish choppy streaming videos

Nobody likes it when their binge-watching is disrupted by a buffering video. While streaming sites like Netflix have offered workarounds for connectivity problems (including offline viewing and quality controls), researchers are tackling the issue head-on. In August, a team from MIT CSAIL unveiled its solution: a neural network that can pick the ideal algorithms to ensure a smooth stream at the best possible quality. But they're not alone in their quest to banish video stutters. The folks at Switzerland's EPFL are also tapping into machine learning as part of their own method. The researchers claim their program can boost the user experience by 37 percent while also reducing power loads by almost 20 percent.

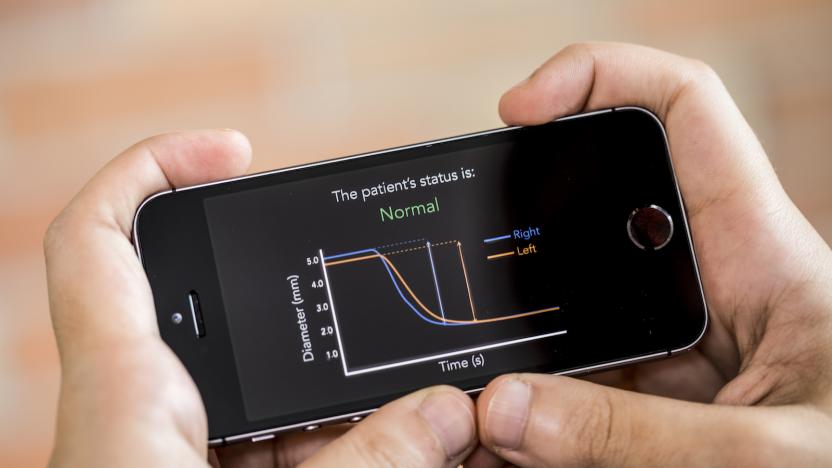

Smartphones could someday assess brain injuries

Researchers at the University of Washington are developing a simple way to assess potential concussions and other brain injuries with just a smartphone. The team has developed an app called PupilScreen that uses video and a smartphone's camera flash to record the pupils and measure how they respond to light.

AI writes Yelp reviews that pass for the real thing

On any given day, hordes of people consult online reviews to help them pick out where to eat, what to watch and what to buy. We trust that these reviews are reliable because they come from everyday folk just like us. But what if the feedback blurbs on sites ranging from Amazon to iTunes could be faked -- not just by nefarious humans, but by AI? That's what researchers from the University of Chicago tried to do, with surprising results. Not only did the Yelp restaurant reviews written by their neural network pass for the real thing, but people even found the posts useful.