Neural Networks

Latest

Facebook AI turns real people into controllable game characters

Facebook's AI Research team has created an AI called Vid2Play that can extract playable characters from videos of real people, creating a much higher-tech version of '90s full-motion video (FMV) games like Night Trap. The neural networks can analyze arbitrary videos of people performing specific actions, then recreate that character and action in any environment and let you control them with a joystick.

EA is teaching AI troops to play 'Battlefield 1'

It's been a couple of years since AI-controlled bots fragged each other in an epic Doom deathmatch. Now, EA's Search for Extraordinary Experiences Division, or SEED, has taught self-learning AI agents to play Battlefield 1. Each character in the basic match uses a neural-network model that learns to play the game through trial and error. The AI-controlled troops first learned by watching human players, then by training in parallel against other bots. The AI soldiers even learned to pick up ammo or health when they're running low, much like you or I would.

MIT has a new chip to make AI faster and more efficient on smartphones

Just one day after MIT revealed that some of its researchers had created a super-low-power chip to handle encryption, the institute is back with a neural network chip that cuts power consumption by 95 percent. That makes chips like it well suited to battery-powered gadgets such as phones and tablets, letting them take advantage of more complex neural networks.

Twitter uses smart cropping to make image previews more interesting

Twitter's recent character-limit extension means we're spending more time reading tweets, but now the site wants us to spend less time looking at pictures. Or, more specifically, less time looking for the important bit of a picture. Thanks to neural networks, Twitter will now automatically crop picture previews to their most interesting part.

This neural network generates weird and adorable pickup lines

Training a neural network involves feeding it enough raw data for it to start recognizing and replicating patterns. It can be a long, tedious process just to approximate complex things -- like writing articles for Engadget, for example. Research scientist Janelle Shane has experimented with her own neural network to create recipes, lists of new Pokémon and weird superhero names, with varying results. Now she's turned her training attention to pickup lines, and, surprisingly, her neural network has generated some pretty adorable ones.

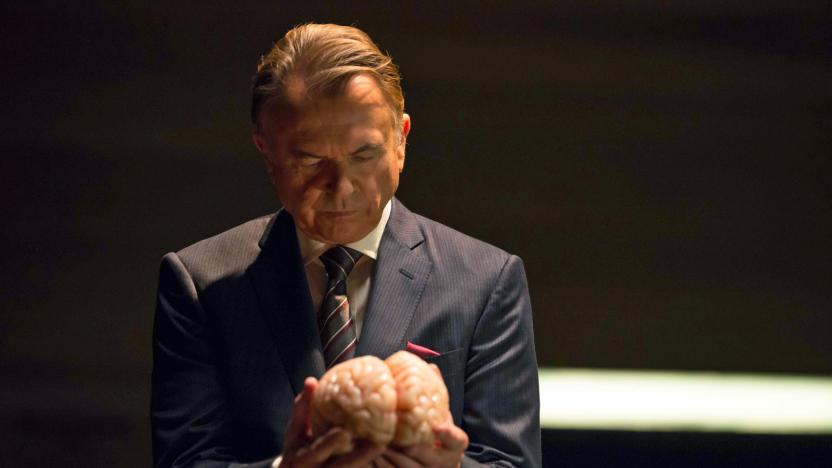

'MindGamers' clip shows off the dangers of wireless neural networks

The upcoming science fiction film MindGamers has an intriguing hook: 1,000 audience members are going to wear cognitive headbands that monitor their brain activity during a screening. When it's over, researchers will analyze the "mass mind state" data they gathered. It's apparently the first time such a large-scale collection of mind activity has been attempted, and it could lead to new insights into human cognition. If you're in Los Angeles or New York City on March 28th, you can sign up to wear a headband on the film's site. You can also grab a ticket to watch the experience live in other locations via Fathom Events.

Google's using neural networks to make image files smaller

Somewhere at Google, researchers are blurring the line between reality and fiction. Tell me if you've heard this one, Silicon Valley fans -- a small team builds a neural network for the sole purpose of making media files teeny-tiny. Google's latest experiment isn't exactly the HBO hit's Pied Piper come to life, but it's a step in that direction: using trained computer intelligence to make images smaller than current JPEG compression allows.

Japan's latest humanoid robot makes its own moves

Japan's National Science Museum is no stranger to eerily human androids: It employs two in its exhibition hall already. But for a week, they're getting a new colleague. Called "Alter," it has a very human face like Professor Ishiguro's Geminoids, but goes one step further with an embedded neural network that lets it move on its own. The technology powering this involves 42 pneumatic actuators and, most importantly, a "central pattern generator." That CPG has a neural network that replicates neurons, allowing the robot to create its own movement patterns, influenced by sensors that detect proximity, temperature and, for some reason, humidity. The setup doesn't make for human-like movement, but it gives the viewer the very strange sensation that this particular robot is somehow alive. And that's precisely the point.

ICYMI: AI in a USB stick, electric bike wheel and more

Today on In Case You Missed It: Chip maker Movidius created an advanced neural network USB stick to put AI into any device; the GeoOrbital wheel turns any dumb bike into a 20-mph powerhouse; and Samsung has a pilot program to put a mother's heartbeat into her premature baby's incubator. An open-source robot used for research is also really good at yoga. As always, please share any great tech or science videos you find by using the #ICYMI hashtag on Twitter for @mskerryd.

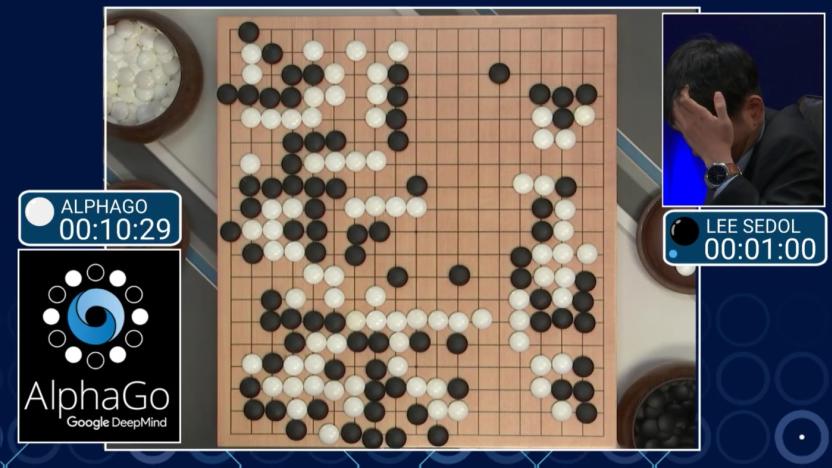

Watch AlphaGo vs. Lee Sedol (update: AlphaGo won)

Human Go player Lee Sedol is currently down 0-2 in a five-game series against AlphaGo, the program developed by Google's DeepMind. The third match is under way at the Four Seasons hotel in Seoul, and, like the others, you can watch a live stream (with commentary explaining things for us Go novices) on YouTube. If humanity is going to pull out an overall victory, Sedol needs to make his move tonight. Tune in and see how close we are to facing retribution for our crimes against robots.

The curious sext lives of chatbots

ELIZA is old enough to be my mother, but that didn't stop me from trying to have sex with her. NSFW Warning: This story may contain links to and descriptions or images of explicit sexual acts.

ICYMI: Ripples in Spacetime, brain jolts to learn and more

Today on In Case You Missed It: Researchers at the Laser Interferometer Gravitational-Wave Observatory (LIGO) confirmed Einstein's prediction that ripples in the fabric of spacetime do, in fact, exist. They detected the gravitational waves produced when two black holes collided.

I let Google's Autoreply feature answer my emails for a week

Google's Inbox is like an experimental Gmail, offering a more active (or laborious) way of tackling inbox bloat by letting you put off emails and reminding you to respond later. Its latest trick harnesses deep neural networks to offer a trio of (short!) auto-responses to your emails -- no typing necessary. Does it do the trick? Can a robot express what I need it to, or at least a close enough approximation that I'm satisfied? I tried it this week to find out.

Google acquires neural network startup that may help it hone speech recognition and more

Mountain View has just picked up some experts on deep neural networks with its acquisition of DNNresearch, which was founded last year by University of Toronto professor Geoffrey Hinton and graduate students Alex Krizhevsky and Ilya Sutskever. The group is being brought into the fold after developing a system that vastly improves object recognition. More broadly, advances in neural nets could lead to better computer vision, language understanding and speech recognition systems. We reckon Page and Co. have a few projects in mind that would benefit from such things. Both students will move to Google, while Hinton will split his time between teaching and working with the search giant.

DNA-based artificial neural network is a primitive brain in a test tube (video)

Many simpler forms of life on this planet, including some of our earliest ancestors, don't have proper brains. Instead, they have networks of neurons that fire in response to stimuli, triggering reactions. Scientists from Caltech have figured out how to create such a primitive pre-brain using strands of DNA. Researchers led by Lulu Qian strung together DNA molecules to create biochemical circuits. By arranging the four bases of our genetic code in particular sequences, they were able to program the circuits to respond differently to various inputs. To prove it worked, the team quizzed the circuit in a game of 20 questions, feeding it clues to the identity of a particular scientist in the form of more DNA strands. The artificial neural network nailed the answer every time. Check out the press release and a pair of videos that dig a little deeper into the experiment after the break.

British researchers design a million-chip neural network 1/100 as complex as your brain

If you want some idea of the complexity of the human brain, consider this: a group of British universities plans to link as many as a million ARM processors in order to simulate just a small fraction of it. The resulting model, called SpiNNaker (Spiking Neural Network architecture), will represent less than one percent of a human's gray matter, which contains 100 billion neurons. (Take that, mouse brains!) Yet even this small-scale representation, researchers believe, will yield insight into how the brain functions, perhaps enabling new treatments for cognitive disorders, much as previous models increased our understanding of schizophrenia. As these neural networks grow in complexity, they come closer to mimicking human brains -- perhaps even developing the ability to make their own Skynet references.

Schizophrenic computer may help us understand similarly afflicted humans

Although we usually prefer our computers to be perfect, logical and psychologically fit, sometimes there's more to be learned from a schizophrenic one. A University of Texas experiment has saddled a computer with dementia praecox (an old name for schizophrenia), giving the silicon soul symptoms that normally afflict only humans. By telling the machine's neural network to treat everything it learned as extremely important, the team hopes to aid clinical research into the schizophrenic brain. The approach follows a popular theory that afflicted patients lose the ability to forget or ignore frivolous information, causing them to make illogical connections and paranoid leaps in reasoning. Sure enough, the machine lost it and started spinning wild, delusional stories, eventually claiming responsibility for a terrorist attack. Yikes. We aren't hastening the robot apocalypse by programming machines to go mad intentionally, right?