depthsensor


Huawei says it can do better than Apple's Face ID

Huawei has a history of trying to beat Apple at its own game (it unveiled a "Force Touch" phone days before the iPhone 6s launch), and that's truer than ever now that the iPhone X is in town. At the end of a presentation for the Honor V10, the company teased a depth-sensing camera system that's clearly meant to take on Apple's TrueDepth face detection technology. It too combines infrared sensing with a projector to create a 3D map of your face, but it can capture 300,000 points -- 10 times as many as the iPhone X captures, though Huawei's version currently takes 10 seconds to build this more precise 3D model.

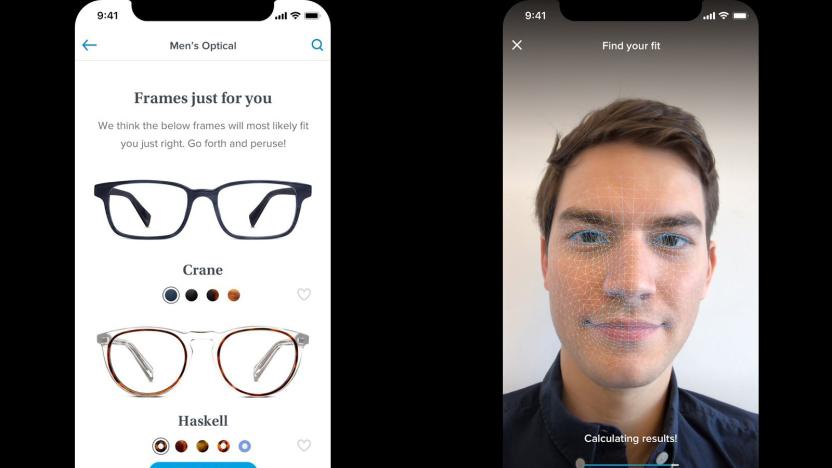

Warby Parker recommends glasses using your iPhone X's depth camera

The depth-sensing front camera on the iPhone X isn't just useful for unlocking your phone or making silly emoji clips. Eyewear maker Warby Parker has updated its Glasses app for iOS to include an iPhone X-only recommendation feature. Let the app scan your face and it'll recommend the frames that are most likely to fit your measurements. This isn't the same as modeling the frames on your face (wouldn't the iPhone X be ideal for that?), but it could save you a lot of hemming and hawing as you wonder which styles are a good match.

The next iPhone reportedly scans your face instead of your finger

Rumormongers have long claimed that Apple might include face recognition in the next iPhone, but it's apparently much more than a nice-to-have feature... to the point where it might overshadow the Touch ID fingerprint reader. Bloomberg's sources say the new smartphone will include a depth sensor that can scan your face with uncanny accuracy and speed. It reportedly unlocks your device within "a few hundred milliseconds," even if the phone is lying flat on a table. Unlike with the iris scanner in the Galaxy S8, you wouldn't need to hold the phone close to your face. The 3D scanning is said to improve security, too, by collecting more biometric data than Touch ID and reducing the chances that the scanner could be fooled by a photo.

Intel buys Movidius to build the future of computer vision

Intel is making it extra-clear that computer vision hardware will play a big role in its beyond-the-PC strategy. The computing behemoth has just acquired Movidius, a specialist in AI and computer vision processors. The Intel team isn't shy about its goals. It sees Movidius as a way to get high-speed, low-power chips that can power RealSense cameras in devices that need to see and understand the world around them. Movidius has already provided the brains behind gadgets like drones and thermal cameras, many of which are a logical fit for Intel's depth-sensing tech -- and its deals with Google and Lenovo are nothing to sneeze at, either.

Intel scientist teases depth-sensing 'horn' for HTC Vive

Intel scientist Dimitri Diakopoulos offered a sneak peek at an interesting depth-sensing camera that attaches to HTC's Vive headset. "I felt that VR needed a solid unicorn peripheral with six additional cameras," he tweeted. The device, presumably based on Intel's RealSense tech, may be designed to track your hands or keep you from bumping into things. He mentioned that each camera weighs 10 grams (0.35 ounces) and said the design maintains the headset's original balance point.

Yuneec's 3D-sensing drone is available for pre-order

Have you been curious to see how a drone fares when it has Intel's depth-aware RealSense tech onboard? You now have a chance to find out first-hand. Yuneec has started taking pre-orders for the Typhoon H, its first drone with RealSense built-in. Plunk down $1,899 ($100 more than mentioned in January) and you'll get a hexacopter that uses Intel's camera system to map its environment and avoid obstacles while it records your adventures. You'll also get 4K video, 12-megapixel still shots and a 7-inch Android-based controller. It's a lot to pay, and you'll have to endure a 4-week wait if you're in the first wave, but look at it this way: the money you spend now might save you from a nasty tree collision in the future.

Amazon wants augmented reality to be headset-free

Augmented reality (AR) isn't all headsets and funny glasses. Amazon wants to turn it into something that you can interact with in your living room, judging by a couple of the company's recently approved patents. The "object tracking" patent shows how a system of projectors and cameras could beam virtual images onto real objects, and track your hand while you interact with them. The other, called "reflector-based depth mapping," would use a projector to transform your room into a kind of holodeck, mapping the depth of objects and bodies in a room.

Kinect sensors could lead to safer X-rays

You don't want to stand in front of an X-ray machine for any longer than necessary, and scientists have found a clever way to make that happen: the Kinect sensor you might have picked up with your Xbox. Their technique has the depth-sensing camera measuring both motion and the thickness of your body to make sure that doctors get a high-quality shot using as little radiation as possible. That's particularly important for kids, who can be sensitive to strong X-ray blasts.
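Why body thickness matters for dose can be sketched with the textbook Beer-Lambert attenuation law, I = I0 * exp(-mu * t): the X-ray intensity that reaches the detector falls off exponentially with the thickness t of tissue it crosses, so a thicker patient needs a stronger exposure for the same image. This is a deliberately simplified single-coefficient model, not the researchers' actual method, and the function name and the 0.2-per-centimeter coefficient are hypothetical illustrative values.

```python
import math


def entrance_intensity(target_at_detector: float, mu_per_cm: float,
                       thickness_cm: float) -> float:
    """Intensity the tube must deliver so that `target_at_detector` survives
    a body of the given thickness.

    Beer-Lambert: I_detector = I_entrance * exp(-mu * t), rearranged for
    I_entrance. `mu_per_cm` is one effective attenuation coefficient, a
    simplification (real tissue and X-ray spectra are not uniform).
    """
    if thickness_cm < 0:
        raise ValueError("thickness must be non-negative")
    return target_at_detector * math.exp(mu_per_cm * thickness_cm)


MU_PER_CM = 0.2  # hypothetical effective coefficient, for illustration only

# A depth camera could supply these thicknesses; here they are just numbers.
child = entrance_intensity(1.0, MU_PER_CM, thickness_cm=12.0)
adult = entrance_intensity(1.0, MU_PER_CM, thickness_cm=25.0)
print(f"adult needs {adult / child:.1f}x the exposure of the child")
```

Under this toy model, an error in the estimated thickness becomes a multiplicative error in the dose, which is the kind of guesswork a direct depth measurement can remove.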

Acer's upgraded laptops include one with a motion-sensing 3D camera

Acer is showing up at CES with a lot of laptop upgrades in store, and its Windows PC revamps have a few tricks up their sleeve. By far the highlight of the mix is a new version of the high-end V 17 Nitro that includes an Intel RealSense 3D camera. The depth sensor lets you control games and other supported apps with hand motions instead of reaching for the trackpad and keyboard. If you're the creative sort, it'll also let you scan your face and other 3D objects for use in games or 3D printing projects. There aren't any major upgrades under the hood, although you're still getting a beefy 17-inch desktop replacement with a quad-core 2.5GHz Core i7 chip, GeForce GTX 860M graphics and a choice of solid-state (128GB or 256GB) or spinning hard drive (1TB) storage. You won't have to wait long to give this system a try, as it's shipping in January. However, it's not yet clear what the trick camera adds to the price, if anything.

Project Tango teardown reveals the wonders of the phone's 3D sensing tech

Want to get a better understanding of Google's 3D-sensing Project Tango smartphone beyond the usual promo videos? iFixit is more than happy to show you now that it has torn down the device for itself. The close-up identifies many of the depth mapping components in the experimental handset, including the infrared and fisheye cameras (both made by OmniVision), motion tracking (from InvenSense) and dual vision processors (from Movidius).

Insert Coin: Meta 1 marries 3D glasses and motion sensor for gesture-controlled AR

In Insert Coin, we look at an exciting new tech project that requires funding before it can hit production. If you'd like to pitch a project, please send us a tip with "Insert Coin" as the subject line.

Now that Google Glass and Oculus Rift have entered the zeitgeist, might we start to see VR and AR products popping up on every street corner? Perhaps, but Meta has just launched an interesting take on the concept by marrying see-through stereoscopic display glasses with a Kinect-style depth sensor. That opens up the possibility of putting virtual objects into the real world, letting you "pick up" a computer-generated 3D architectural model and spin it around in your hand, for instance, or gesture to control a virtual display appearing on an actual wall. To make it work, you connect a Windows PC to the device, which consists of a pair of 960 x 540 Epson displays embedded in the transparent glasses (the prototype has a detachable shade) and a depth sensor attached to the top. That lets the Meta 1 track your gestures, individual fingers and walls or other physical surfaces, all of which are processed on the PC with motion tracking tech to give the illusion of virtual objects anchored to the real world.

Apps can be created with Unity3D and an included SDK on Windows computers (other platforms will arrive later, according to the team), and developers will be able to publish their apps on the upcoming Meta Store. The group has launched the project on Kickstarter with the goal of raising $100,000 to get developer kits into the hands of app coders. It's no Google, but Meta is a Y Combinator startup with several high-profile researchers on the team, and it's asking for exactly half of the $1,500 Google Glass Explorer Edition price as the minimum pledge to get in on the ground floor: $750.
Once developers have had their turn, the company will turn its attention toward consumers and more sophisticated designs -- so if you like the ideas peddled in the video, hit the source to give them your money.

Kinect for Windows SDK gets accelerometer and infrared input, reaches China and Windows 8 desktops

Microsoft had hinted that there were big things in store for its October 8th update to the Kinect for Windows SDK. It wasn't bluffing; developers can now tap a much wider range of input than the usual frantic arm-waving. Gadgets that move the Kinect itself can use the accelerometer to register every tilt and jolt, while low-light fans can access the raw infrared sensor stream. The Redmond crew will even let coders go beyond the usual boundaries, letting them read depth information beyond 13 feet, fine-tune the camera settings and track skeletal data from multiple sensors inside one app. Where you can use the SDK has expanded as well -- in addition to the promised Chinese support, Kinect input is now an option for Windows 8 desktop apps. Programmers who find regular hand control just too limiting can hit the source for the download link and check Microsoft's blog for grittier detail.
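The accelerometer is what makes "every tilt and jolt" measurable: at rest the reading is just gravity, so its direction gives the tilt, and any deviation of its magnitude from 1 g signals movement. Below is a minimal sketch of that math, assuming a plain (x, y, z) reading in units of g. It is generic trigonometry, not the Kinect SDK's own API, and the function names, axis convention and 0.3 g threshold are hypothetical.

```python
import math

# Hypothetical axis convention (not taken from the Kinect SDK): readings are
# in units of g, and a level, motionless device reads (0, 1, 0).


def tilt_degrees(x: float, y: float, z: float) -> tuple[float, float]:
    """Return (pitch, roll) in degrees from a resting accelerometer reading.

    Pitch is how far gravity leans toward the z axis (forward/back tilt);
    roll is how far it leans toward the x axis (side-to-side tilt).
    """
    pitch = math.degrees(math.atan2(z, math.hypot(x, y)))
    roll = math.degrees(math.atan2(x, math.hypot(y, z)))
    return pitch, roll


def is_jolt(x: float, y: float, z: float, threshold_g: float = 0.3) -> bool:
    """Flag a jolt when total acceleration strays from 1 g by more than
    `threshold_g` (an arbitrary illustrative threshold)."""
    magnitude = math.sqrt(x * x + y * y + z * z)
    return abs(magnitude - 1.0) > threshold_g


print(tilt_degrees(0.0, 0.866, 0.5))  # tilted forward roughly 30 degrees
print(is_jolt(0.0, 1.0, 0.0))         # level and still: no jolt
```

Tilt estimates from gravity are only trustworthy while the device is still, which is why a sketch like this would check for a jolt before trusting the angle.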

SoftKinetic's motion sensor tracks your hands and fingers, fits in them too (video)

Coming out of its shell as a possible Kinect foe, SoftKinetic has launched a new range sensor at Computex hot on the heels of its last model. Upping the accuracy while shrinking the size, the DepthSense 325 now sees your fingers and hand gestures in crisp HD from as close as 10cm (4 inches), an improvement on the 15cm (6 inches) of its DS311 predecessor. Two microphones are also tucked in, making the device suitable for video conferencing, gaming and whatever else OEMs and developers might have in mind. We haven't tried it yet, but judging from the video, it seems to track finger and hand movements quite competently. Hit the break to see for yourself.

SoftKinetic Announces World's Smallest HD Gesture Recognition Camera and Releases Far and Close Interaction Middleware

Professional Kit Available For Developers To Start Building a New Generation of Gesture-Based Experiences

TAIPEI & BRUSSELS – June 5, 2012 – SoftKinetic, the pioneering provider of 3D vision and gesture recognition technology, today announced a device that will revolutionize the way people interact with their PCs. The DepthSense 325 (DS325), a pocket-sized camera that sees both in 3D (depth) and high-definition 2D (color), delivered as a professional kit, will enable developers to incorporate high-quality finger and hand tracking for PC video games, introduce new video conferencing experiences and many other immersive PC applications. The DS325 can operate from as close as 10cm and includes a high-resolution depth sensor with a wide field of view, combined with HD video and dual microphones.

In addition, the company announced the general availability of iisu™ 3.5, its acclaimed gesture-recognition middleware compatible with most 3D sensors available on the market. In addition to its robust full-body tracking features, iisu 3.5 can now accurately track users' individual fingers at 60 frames per second, opening up a new world of close-range applications.

"SoftKinetic is proud to release these revolutionary products to developers and OEMs," said Michel Tombroff, CEO of SoftKinetic. "The availability of iisu 3.5 and the DS325 clearly marks a milestone for the 3D vision and gesture recognition markets. These technologies will enable new generations of video games, edutainment applications, video conference, virtual shopping, media browsing, social media connectivity and more."

SoftKinetic will demonstrate the new PC and SmartTV experiences at its booth at Computex, June 5-9, 2012, in the NanGang Expo Hall, Upper Level, booth N1214. For business appointments, send a meeting request to events@softkinetic.com.

The DS325 Professional Kit is available for pre-order now at SoftKinetic's online store (http://www.softkinetic.com/Store.aspx) and is expected to begin shipping in the coming weeks. The iisu 3.5 Software Development Kit is available free for non-commercial use at SoftKinetic's online store (http://www.softkinetic.com/Store.aspx) and at iisu.com.

About SoftKinetic S.A.

SoftKinetic's vision is to transform the way people interact with the digital world. SoftKinetic is the leading provider of gesture-based platforms for the consumer electronics and professional markets. The company offers a complete family of 3D imaging and gesture recognition solutions, including patented 3D CMOS time-of-flight sensors and cameras (DepthSense™ family of products, formerly known as Optrima product family), multi-platform and multi-camera 3D gesture recognition middleware and tools (iisu™ family of products) as well as games and applications from SoftKinetic Studios. With over 8 years of R&D on both hardware and software, SoftKinetic solutions have already been successfully used in the fields of interactive digital entertainment, consumer electronics, health care and other professional markets (such as digital signage and medical systems).
SoftKinetic, iisu, DepthSense and The Interface Is You are trade names or registered trademarks of SoftKinetic. For more information on SoftKinetic please visit www.softkinetic.com. For videos of SoftKinetic-related products visit SoftKinetic's YouTube channel: www.youtube.com/SoftKinetic.

SoftKinetic brings DepthSense range sensor to GDC, hopes to put it in your next TV

Microsoft's Kinect may have put depth sensors in front of everyday consumers, but Microsoft isn't the only outfit in the game -- Belgian startup SoftKinetic has its own twist on distance sensing. The aptly named "DepthSense" range sensor uses infrared time-of-flight technology, which, according to company representatives, not only lets it accurately calculate depth in dark, cramped spaces but, more importantly, gives it a shallower operating distance than its competition. We dropped by SoftKinetic's GDC booth to see exactly how cramped we could get. It turns out the sensor can accurately read individual fingers at distances between four and fourteen feet (roughly 1.5 to 4.5 meters); we had no trouble using it to pinch our way through a few levels of a mouse-emulated session of Angry Birds. The developer hardware we saw on the show floor was admittedly on the bulky side, but if all goes to plan, SoftKinetic says OEMs will stuff the tech into laptops and ARM-powered TVs in the near future. In the meantime, gesture-crazy consumers can look forward to a slimmer version of this rig in stores sometime this holiday season. Hit the break for a quick demo of the friendly sensor in action. Dante Cesa contributed to this post.
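Time-of-flight sensing boils down to timing light. Two common variants are sketched below: pulsed ToF halves the measured round-trip time of a light pulse, while continuous-wave ToF infers distance from the phase shift of a modulated infrared signal, which also caps the distance it can measure unambiguously. Which variant DepthSense uses isn't stated here, and the function names and the 30 MHz modulation frequency in the usage lines are hypothetical.

```python
import math

SPEED_OF_LIGHT_M_S = 299_792_458.0  # exact, by definition of the metre


def depth_from_round_trip(round_trip_s: float) -> float:
    """Pulsed ToF: light goes out and back, so distance is half the path."""
    return SPEED_OF_LIGHT_M_S * round_trip_s / 2.0


def depth_from_phase(phase_rad: float, mod_freq_hz: float) -> float:
    """Continuous-wave ToF: a phase shift phi of a signal modulated at f
    corresponds to distance d = c * phi / (4 * pi * f)."""
    return SPEED_OF_LIGHT_M_S * phase_rad / (4.0 * math.pi * mod_freq_hz)


def unambiguous_range(mod_freq_hz: float) -> float:
    """Maximum distance before the phase wraps past 2*pi: c / (2 * f).
    Objects farther away alias to a shorter apparent distance."""
    return SPEED_OF_LIGHT_M_S / (2.0 * mod_freq_hz)


print(depth_from_round_trip(20e-9))  # a 20-nanosecond round trip
print(unambiguous_range(30e6))       # hypothetical 30 MHz modulation
```

A 20-nanosecond round trip works out to about 3 meters, and a 30 MHz modulation wraps around at about 5 meters, which is why a continuous-wave sensor's modulation frequency has to be matched to its intended working range.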

Prototype glasses use video cameras, face recognition to help people with limited vision

We won't lie: we love us a heartwarming story about scientists using run-of-the-mill tech to help people with disabilities, especially when the results are decidedly bionic. Today's tale centers on a team of Oxford researchers developing sensor-laden glasses capable of displaying key information to people with poor (read: nearly eroded) vision. The frames, on display at the Royal Society Summer Science Exhibition, have cameras mounted on the edges, while the lenses are studded with lights -- a setup that allows people with macular degeneration and other conditions to see a simplified version of their surroundings, up close. And the best part, really, is that the glasses gather that data using garden-variety technology such as face detection, tracking software, position detectors and depth sensors -- precisely the kind of tech you'd expect to find in handsets and gaming systems. Meanwhile, all of the processing required to recognize objects happens in a smartphone-esque computer that could easily fit inside a pocket. And while those frames won't exactly look like normal glasses, they'd still be see-through, allowing for eye contact. Team leader Stephen Hicks admits that vision-impaired people will have to get used to receiving all these flashes of information, but once they do, they might be able to assign different colors to people and objects, and read barcodes and newspaper headlines. It'll be a while before scientists cross that bridge, though -- while the researchers estimate the glasses could one day cost £500 ($800), they're only beginning to build prototypes.

Microsoft seeking to quadruple Kinect accuracy?

Hacked your Kinect recently? Then you probably know something most regular Xbox 360 gamers don't -- namely, that the Kinect's cameras are actually capable of higher resolution than the game console itself supports. Though Microsoft originally told us the sensor ran at 320 x 240, you'll find both the color and depth cameras deliver 640 x 480 images if you hook the peripheral up to a PC, and now an anonymous source tells Eurogamer that Microsoft wants to do the very same on the console. Reportedly, Redmond artificially limited the Kinect on the Xbox 360 to leave USB bandwidth for other peripherals running at the same time, but if the company can find a way around that limitation, it could issue a firmware update that would make the Kinect sensitive enough to detect individual finger motions, paving the way for finer-grained gesture control. One of multiple ways Microsoft intends to make the world of Minority Report a reality, we're sure.
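The "quadruple" in the headline is pixel arithmetic: moving from 320 x 240 to 640 x 480 multiplies the pixel count by four, while the resolution along each axis only doubles.

$$
\frac{640 \times 480}{320 \times 240} = \frac{307{,}200}{76{,}800} = 4,
\qquad
\frac{640}{320} = \frac{480}{240} = 2
$$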

Kinect sensor bolted to an iRobot Create, starts looking for trouble

While there have already been a lot of great proofs of concept for the Kinect, what we're really excited about are the actual applications that will come from it. At the top of our list? Robots. The Personal Robots Group at MIT has put a battery-powered Kinect sensor on top of the iRobot Create platform, and is beaming the camera and depth sensor data to a remote computer for processing into a 3D map -- which in turn can be used for navigation by the bot. They're also using the data for human recognition, which allows for controlling the bot using natural gestures. Looking to do something similar with your own robot? Well, the ROS folks have a Kinect driver in the works that will presumably allow you to feed all that great Kinect data into ROS's already impressive libraries for machine vision. Tie in the Kinect's microphone array, accelerometer and tilt motor and you've got a highly aware, semi-anthropomorphic "three-eyed" robot just waiting to happen. We hope it will be friends with us. Video of the ROS experimentation is after the break.
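Turning depth data into a 3D map usually starts with back-projecting each depth pixel through a pinhole camera model. Below is a minimal, dependency-free sketch of that step plus a crude floor-plan occupancy grid of the kind a bot could navigate with. It is not MIT's or ROS's code; the function names, the intrinsics (fx, fy, cx, cy), the cell size and the height band are hypothetical parameters you would get from calibration and your robot's geometry.

```python
from typing import List, Tuple

Point3D = Tuple[float, float, float]


def depth_to_points(depth_m: List[List[float]], fx: float, fy: float,
                    cx: float, cy: float) -> List[Point3D]:
    """Back-project a depth image (meters) into camera-frame 3D points using
    the pinhole model: X = (u - cx) * Z / fx, Y = (v - cy) * Z / fy.
    Pixels with depth <= 0 (no reading) are skipped."""
    points: List[Point3D] = []
    for v, row in enumerate(depth_m):
        for u, z in enumerate(row):
            if z <= 0.0:
                continue
            x = (u - cx) * z / fx
            y = (v - cy) * z / fy
            points.append((x, y, z))
    return points


def occupancy_grid(points: List[Point3D], cell_m: float, width_cells: int,
                   depth_cells: int, min_h: float,
                   max_h: float) -> List[List[bool]]:
    """Mark floor-plan cells containing a point within an obstacle height band.

    Assumed camera convention: x right, y down, z forward. Heights are
    measured relative to the camera's optical axis (negative = below it).
    Rows index distance ahead (z); columns index lateral offset (x), with
    the camera centered at column width_cells // 2.
    """
    grid = [[False] * width_cells for _ in range(depth_cells)]
    half = width_cells // 2
    for x, y, z in points:
        height = -y  # image y points down, so flip it to get "up"
        if not (min_h <= height <= max_h):
            continue
        col = int(x // cell_m) + half
        row = int(z // cell_m)
        if 0 <= col < width_cells and 0 <= row < depth_cells:
            grid[row][col] = True
    return grid
```

Keeping back-projection separate from the grid step mirrors how depth pipelines are usually layered: the same point cloud can feed navigation, mapping or the kind of human recognition described above.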