UncannyValley

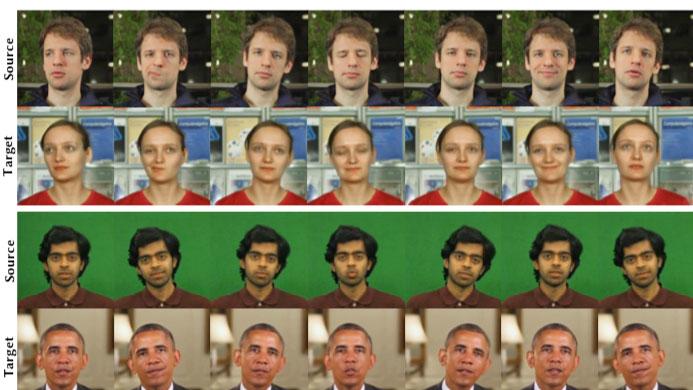

AI can transfer human facial movements from one video to another

Researchers have taken another step towards realistic, synthesized video. The team, made up of scientists in Germany, France, the UK and the US, used AI to transfer the head poses, facial expressions, eye motions and blinks of a person in one video onto another entirely different person in a separate video. The researchers say it's the first time a method has transferred these types of movements between videos and the result is a series of clips that look incredibly realistic.

Researchers make a surprisingly smooth artificial video of Obama

Translating audio into realistic-looking video of a person speaking is quite a challenge. Often, the resulting video just looks off -- a problem known as the uncanny valley, in which human replicas that appear almost but not quite real come off as eerie or creepy. However, researchers at the University of Washington have made some serious headway in overcoming this issue, and they did it using audio and video of Barack Obama.

Disney can digitally recreate your teeth

Digital models of humans can be uncannily accurate these days, but there's at least one area where they fall short: teeth. Unless you're willing to scan the inside of someone's mouth, you aren't going to get a very faithful representation of someone's pearly whites. Disney Research and ETH Zurich, however, have a far easier solution. They've just developed a technique to digitally recreate teeth beyond the gum line using little more than source data and everyday imagery. The team used 86 3D scans to create a model for an "average" set of teeth, and wrote an algorithm that adapts that model based on what it sees in the contours of teeth in photos and videos.

China's realistic robot Jia Jia can chat with real humans

The University of Science and Technology of China has recently unveiled an eerily realistic robot named Jia Jia. While she looks more human-like than that creepy ScarJo robot, you'll probably still find yourself plunging headfirst into the uncanny valley while looking at her. Jia Jia can talk and interact with real humans, as well as make some facial expressions -- she can even tell you off if she senses you're taking an unflattering picture of her. "Don't come too close to me when you are taking a picture. It will make my face look fat," she told someone trying to capture her photo during the press conference.

Celebrities get digital puppets made from paparazzi photos

Typically, recreating a celebrity as an animated 3D character requires painstaking modeling based on motion capture and laser scans. In the future, though, all you'll need is a few limo-chasing photographers. University of Washington researchers have developed a system that creates digital face "puppets" by running a collection of photos -- in this case, paparazzi shots -- through special face tracking software. The digital doppelgangers (such as Kevin Spacey and Arnold Schwarzenegger) bear an uncanny resemblance to their real-world counterparts, but are sophisticated enough to mimic the expressions of virtually anyone else. Want the Japanese Prime Minister saying Daniel Craig's lines? You can make it happen.

The new Unreal Engine will bring eerily realistic skin to your games

It hasn't been hard to produce realistic-looking skin in computer-generated movies, but it's much harder to do that in the context of a game running live on your console or PC. That trip to the uncanny valley is going to be much easier in the near future, though, thanks to the impending arrival of Unreal Engine 4.5. The gaming framework adds subsurface light scattering effects that give digital skin a more natural look. Instead of the harsh visuals you normally get (see the pale, excessively-shadowed face at left), you'll see softer, decidedly fleshier surfaces (middle and right). The scattering should also help out with leaves, candle wax and other materials that are rarely drawn well in your favorite action games.

UK's Ministry of Defence has its own creepy hazmat testing robot (video)

Boston Dynamics' Petman now has serious competition in the "eerie human-like robots" category, courtesy of the UK's Ministry of Defence. This new contender is a £1.1 million ($1.8 million) masterpiece called Porton Man, named after the Defence Science and Technology Laboratory in Porton Down, Wiltshire. If you're expecting a killing machine designed for the battlefield, though, you'll be sorely disappointed. The Porton Man will actually be used for tests in the development of new and lighter protective equipment for the military. Remember how Petman donned and tested a hazmat suit at one point that made it even creepier than usual? It'll be doing something like that.

Researchers' robotic face expresses the needs of yellow slime mold (video)

Apparently, slime mold has feelings too. Researchers at the University of the West of England have a bit of a history with Physarum polycephalum -- a light-shy yellow mold known for its ability to seek out the shortest route to food. Now, they're on a quest to find out why the organism's so darn smart, and the first in their series of experiments equates the yellow goo's movements to human emotions. The team measured electrical signals the mold produced when moving across micro-electrodes, converting the collected data into sounds. This audio data was weighted against a psychological model and translated into a corresponding emotion. Data collected when the mold was moving across food, for instance, corresponded to joy, while anger was derived from the colony's reaction to light. Unfortunately, mold isn't the most expressive form of life, so when the team demonstrated the study's results at the Living Machines conference in London, they enlisted the help of a robotic head. Taking cues from a soundtrack based on the mold's movements, the dismembered automaton reenacts the recorded emotions with stiff smiles and frowns. Yes, it's as creepy as you might imagine, but those brave enough can watch it go through a cycle of emotions in the video after the break. [Image credit: Jerry Kirkhart / Flickr]

XYZbot's Fritz offers a cheaper robot head, free trips to the uncanny valley (video)

It's been relatively easy to get your hands on an expressive robot face... if you're rich or a scientist, that is. XYZbot would like to give the rest of us a shot by crowdfunding Fritz, an Arduino-powered robot head. The build-it-yourself (and eerily human-proportioned) construction can react to pre-programmed actions, text-to-speech conversion or live control, ranging from basics like the eyes and jaw to the eyelids, eyebrows, lips and neck of an Advanced Fritz. Windows users should have relatively simple control through an app if they just want to play, but where Fritz may shine is its open source nature: the code and hardware schematics will be available for extending support, changing the look or building a larger robot where Fritz is just one part. The $125 minimum pledge required to set aside a Fritz ($199 for an Advanced Fritz) isn't trivial, but it could be a relative bargain if XYZbot makes its $25,000 goal -- and one of the quickest routes to not-quite-lifelike robotics outside of a research grant.

Sony takes SOEmote live for EverQuest II, lets gamers show their true CG selves (video)

We had a fun time trying Sony's SOEmote expression capture tech at E3; now everyone can try it. As of today, most EverQuest II players with a webcam can map their facial behavior to their virtual personas while they play, whether it's to catch the nuances of conversation or drive home an exaggerated game face. Voice masking also lets RPG fans stay as much in (or out of) character as they'd like. About the only question left for those willing to brave the uncanny valley is when other games will get the SOEmote treatment. Catch our video look after the break if you need a refresher.

Baby robot Affetto gets a torso, still gives us the creeps (video)

It's taken a year to get the sinister tics and motions of Osaka University's Affetto baby head out of our nightmares -- and now it's grown a torso. Walking that still-precarious line between robots and humans, the animated robot baby now has a pair of arms to call its own. The prototype upper body has a babyish looseness to it -- accidentally hitting itself in the face during the demo video -- with around 20 pneumatic actuators providing the movement. The face remains curiously paused, although we'd assume that the body prototype hasn't been paired with facial motions just yet, which just about puts it on the right side of adorable. However, the demonstration does include some sinister faceless dance motions. It's right after the break -- you've been warned.

Samsung files patents for robot that mimics human walking and breathing, ratchets up the creepy factor

As much as Samsung is big on robots, it hasn't gone all-out on the idea until a just-published quartet of patent applications. The filings have a robot more directly mimicking a human walk and adjusting the scale to get the appropriate speed without the unnatural, perpetually bent gait of certain peers. To safely get from point A to point B, any path is chopped up into a series of walking motions, and the robot constantly checks against its center of gravity to stay upright as it walks uphill or down. All very clever, but we'd say Samsung is almost too fond of the uncanny valley: one patent has rotating joints coordinate to simulate the chest heaves of human breathing. We don't know if the company will ever put the patents to use; these could be just feverish dreams of one-upping Honda's ASIMO at its own game. But if it does, we could be looking at Samsung-made androids designed like humans rather than for them.

MIT unveils computer chip that thinks like the human brain, Skynet just around the corner

It may be a bit on the Uncanny Valley side of things to have a computer chip that can mimic the human brain's activity, but it's still undeniably cool. Over at MIT, researchers have unveiled a chip that mimics how the brain's neurons adapt to new information (a process known as plasticity) which could help in understanding assorted brain functions, including learning and memory. The silicon chip contains about 400 transistors and can simulate the activity of a single brain synapse -- the space between two neurons that allows information to flow from one to the other. Researchers anticipate this chip will help neuroscientists learn much more about how the brain works, and could also be used in neural prosthetic devices such as artificial retinas. Moving into the realm of "super cool things we could do with the chip," MIT's researchers have outlined plans to model specific neural functions, such as the visual processing system. Such systems could be much faster than digital computers: where a computer might take hours or days to simulate a simple brain circuit, the chip -- which functions on an analog method -- could be even faster than the biological system itself. In other news, the chip will gladly handle next week's grocery run, since it knows which foods are better for you than you ever could.

PIGORASS quadruped robot baby steps past AIBO's grave (video)

Does the Uncanny Valley extend to re-creations of our four-legged friends? We'll find out soon enough if Yasunori Yamada and his University of Tokyo engineering team manage to get their PIGORASS quadruped bot beyond its first unsteady hops, and into a full-on gallop. Developed as a means of analyzing animals' musculoskeletal system for use in biologically-inspired robots, the team's cyborg critter gets its locomotion on via a combo of CPU-controlled pressure sensors and potentiometers. It may move like a bunny (for now), but each limb's been designed to function independently in an attempt to simulate a simplified neural system. Given a bit more time and tweaking (not to mention a fine, faux fur coating), we're pretty sure this wee bitty beastie'll scamper its way into the homes of tomorrow. Check out the lil' fella in the video after the break.

Japan creates Frankenstein pop idol, sells candy

Sure, Japan's had its fair share of holographic and robotic pop idols, but they always seem to wander a bit too far into the uncanny valley. Might a composite pop star fare better? Nope, still creepy -- but at least it's a new kind of creepy. Eguchi Aimi, a fictional idol girl created for a Glico candy ad, is composed of the eyes, ears, nose, and other facial elements of girls from AKB48, a massive (over 50 members) all-female pop group from Tokyo. Aimi herself looks pretty convincing, but the way she never looks away from the camera makes our skin crawl ever so slightly. Check out the Telegraph link below to see her pitch Japanese sweets while staring through your soul.

Emoti-bots turn household objects into mopey machines (video)

Some emotional robots dip deep into the dark recesses of the uncanny valley, where our threshold for human mimicry resides. Emoti-bots, on the other hand, manage to skip the creepy human-like pitfalls of other emo-machines, instead employing household objects to ape the most pathetic of human emotions -- specifically dejection and insecurity. Sure, it sounds sad, but the mechanized furniture designed by a pair of MFA students is actually quite clever. Using a hacked Roomba and an Arduino, the duo created a chair that reacts to your touch, and wanders aimlessly once your rump has disembarked. They've also employed Nitinol wires, a DC motor, and a proximity sensor to make a lamp that seems to tire with use. We prefer our lamps to look on the sunny side of life, but for those of you who like your fixtures forlorn, the Emoti-bots are now on display at Parsons in New York and can be found moping about in the video after the break.

Fake robot baby provokes real screams (video)

Uncanny valley, heard of it? No worries, you're knee-deep in it right now. It's the revulsion you feel toward robots, prostheses, or zombies that try to duplicate their human models but don't quite succeed. As the robot becomes more humanlike, however, our emotional response becomes increasingly positive and empathetic. Unfortunately, Osaka University's AFFETTO doesn't get that far. Its goal was to create a robot modeled after a young child that could produce realistic facial expressions in order to endear it to a human caregiver in a more natural way. Impressive, sure, but we're not ready to let it suckle from our teat just yet.

Cambridge developing 'mind reading' computer interface with the countenance of Charles Babbage (video)

For years now, researchers have been exploring ways to create devices that understand the nonverbal cues that we take for granted in human-human interaction. One of the more interesting projects we've seen of late is led by Professor Peter Robinson at the Computer Laboratory at the University of Cambridge, who is working on what he calls "mind-reading machines," which can infer mental states of people from their body language. By analyzing faces, gestures, and tone of voice, it is hoped that machines could be made to be more helpful (hell, we'd settle for "less frustrating"). Peep the video after the break to see Robinson using a traditional (and annoying) satnav device, versus one that features both the Cambridge "mind-reading" interface and a humanoid head modeled on that of Charles Babbage. "The way that Charles and I can communicate," Robinson says, "shows us the future of how people will interact with machines." Next stop: uncanny valley!

President Obama takes a minute to chat with our future robot overlords (video)

President Obama recently took some time out of the APEC Summit in Yokohama to meet with a few of Japan's finest automatons, and as always he was one cool cat. Our man didn't even blink when confronted with this happy-go-lucky HRP-4C fashion robot, was somewhat charmed by the Paro robotic seal, and more than eager to take a seat in one of Yamaha's personal transport robots. But who wouldn't be, right? See him in action after the break.

Flobi robot head realistic enough to convey emotions, not realistic enough to give children nightmares (hopefully)

We've seen our fair share of robots meant to convey emotions, and they somehow never fail to creep us out on some level. At least Flobi, the handiwork of engineers at Bielefeld University in Germany, eschews "realism" for cartoon cuteness. But don't let it fool you, this is a complicated device: about the size of a human head, it features a number of actuators, microphones, gyroscopes, and cameras, and has the ability to exhibit a wide range of facial expressions by moving its eyes, eyebrows and mouth. The thing can even blush via its cheek-mounted LEDs, and it can take on the appearance of either a male or a female with swappable hair and facial features. And the cartoonish quality of the visage is deliberate. According to a paper submitted by the group to the ICRA 2010 conference, the head is "far enough from realistic not to trigger unwanted reactions, but close enough that we can take advantage of familiarity with human faces." Works for us! Video after the break. [Thanks, Simon]