Uber’s transparency is key to making self-driving cars safer

As autonomous cars claim their first pedestrian death, sharing information is vital.

Yesterday evening, a self-driving Uber vehicle in Tempe, Arizona, struck a woman at a crosswalk. She later died in the hospital from her injuries. Although a human safety driver was behind the wheel, the car is said to have been in autonomous mode at the time. The incident is widely described as the first known pedestrian death caused by an autonomous vehicle.

In response, Uber has halted all self-driving vehicle tests in San Francisco, Pittsburgh, Toronto and the greater Phoenix area. "Our hearts go out to the victim's family. We are fully cooperating with local authorities in their investigation of this incident," Uber said in a statement. CEO Dara Khosrowshahi echoed the sentiment on Twitter, saying that the company was working with local law enforcement to understand what happened.

Some incredibly sad news out of Arizona. We're thinking of the victim's family as we work with local law enforcement to understand what happened. https://t.co/cwTCVJjEuz

— dara khosrowshahi (@dkhos) March 19th, 2018

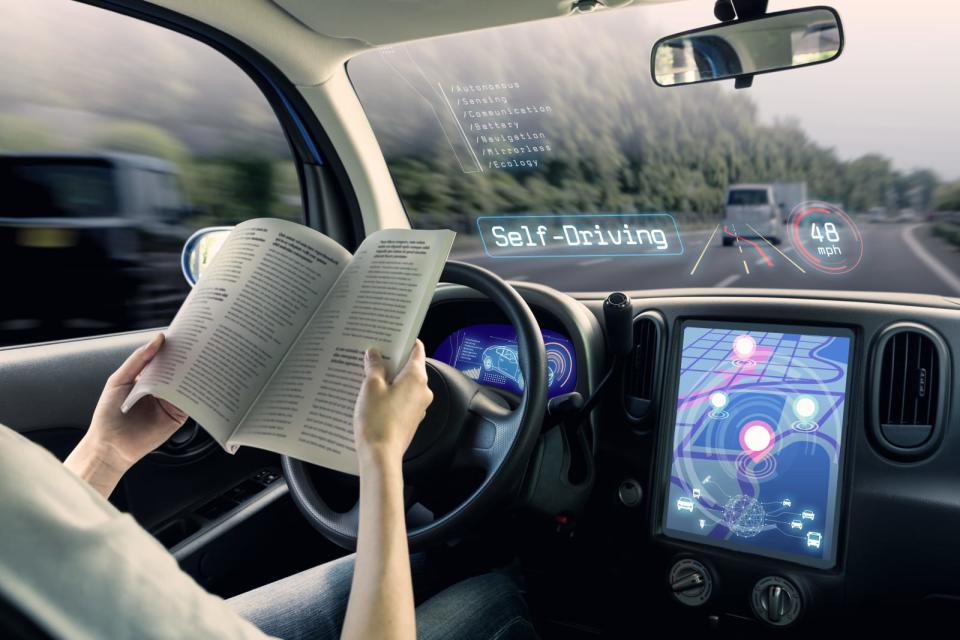

The trend toward self-driving cars seems inescapable. While most states still require a human driver behind the wheel, not all do. Arizona, for example, allows truly driverless cars. California has also agreed to let companies test self-driving vehicles without anyone behind the wheel starting in April.

This incident is likely to increase public scrutiny of self-driving cars. A recent survey by the American Automobile Association (AAA) found that 63 percent of Americans are afraid to ride in one (down from 78 percent last year), while only 13 percent said they would feel safer sharing the road with autonomous vehicles.

Yet it's far too early to say that self-driving cars are inherently more dangerous than cars with human drivers. In 2016, there were 193 pedestrian fatalities in Arizona, 135 of which took place in Maricopa County, home to both Tempe and Phoenix. According to the Bureau of Transportation Statistics, there were 5,987 pedestrian fatalities nationwide in 2016. And yes, all of those involved vehicles with human drivers.

"On average there's a fatality about once every 100 million miles in the US, so while this incident is not statistically determinative, it is uncomfortably soon in the history of automated driving," Bryant Walker Smith, an assistant professor at the University of South Carolina, told Engadget. In other words, there are still so few self-driving cars on the road, and they have covered so few miles, that it's hard to judge how dangerous they are compared with human drivers.

"This would have happened sooner or later," Edmond Awad, a postdoctoral associate at MIT Media Lab, told Engadget. "It could certainly deter customers, and it could provoke politicians to enact restrictions. And it could slow down the process of self-driving car research."

The problem, Awad said, is that there seems to be a general misconception that self-driving cars can't make mistakes. "What should be done, first of all, is for manufacturers to communicate that their cars are not perfect. They're being perfected. If everyone keeps saying they'll never make a mistake, they're going to lose the public's trust."

This isn't the first fatal accident involving a self-driving car, though. In 2016, a Tesla Model S in Autopilot mode collided with a tractor-trailer, killing its driver. The driver apparently ignored safety warnings, and the car seems to have misidentified the truck. In the end, the fault lay with the truck driver, who was charged with a right-of-way traffic violation.

This incident adds new fuel to the ongoing debate over the so-called trolley dilemma: Would a self-driving car have the ethics necessary to choose between two potentially fatal outcomes? Germany recently adopted a set of guidelines for self-driving cars that would require manufacturers to design vehicles to hit whichever person they would "hurt less."

Two years ago, Awad created the Moral Machine, a website that generates random moral dilemmas and asks users to decide what a self-driving car should do when both possible outcomes result in death.

While Awad wouldn't reveal the details of his findings yet, he did say that answers from Eastern countries differ wildly from those from Western countries, suggesting that car manufacturers might want to consider cultural differences when implementing these guidelines.

Recently, Awad and his MIT colleagues ran another study on semiautonomous vehicles, asking participants who was to blame in two scenarios: one where the car was on autopilot and the human could override it, and another where the human was driving and the car could override the human when necessary. Would people blame the human behind the wheel or the car's manufacturer? Awad's results showed that most people blamed the human behind the wheel.

In the end, what's important is learning exactly what happened in the Uber accident. "We have no understanding of how this car is acting," said Awad. "An explanation would be very important. Was it a problem with the car itself? Was it something not part of the car, that's beyond the machine's capability? We need to help people understand what happened."

Smith agreed, saying that Uber needs to be completely transparent here. "This incident will test whether Uber has become a trustworthy company," he said. "They need to be scrupulously honest and welcome outside supervision of this investigation immediately. They shouldn't touch their systems without credible observers."

The future of autonomous cars, and of pedestrian safety around them, will largely depend on how the government responds. We already know that revised federal guidelines are coming this summer, but this tragedy could require a more immediate response. In a news release, the National Transportation Safety Board stated that it was sending a team of four investigators to Tempe, where it hopes to "address the vehicle's interaction with the environment, other vehicles and vulnerable road users such as pedestrians and bicyclists." We reached out to Arizona's Department of Transportation (the body that oversees self-driving cars in Arizona) but have not yet heard back.

For now, the actual cause of the accident remains unclear. "At this time we don't know enough about the incident to identify what part of the self-driving technology failed, but quite likely the pedestrian was in a very unexpected location and the sensor technology did not adapt the model of its environment quickly enough," Bart Selman, a computer science professor at Cornell University, said in a statement to the press.

"In fact, self-driving technology cannot completely eliminate all accidents, and the goal remains to show that the technology will greatly reduce the overall number of driving fatalities," he added. "I firmly believe that this goal remains achievable in part because the automatic-sensing system of the car can track many more events more accurately and reliably than a human driver. Still, an accident like this calls for a reevaluation of how to introduce and further develop the self-driving technology so that people will come to recognize and accept it as feasible and very safe."

Yet regardless of the statistics, this accident will certainly damage public faith in self-driving cars in the immediate aftermath. And no amount of legislative change will help the family of the person who died. "We should be concerned about automated driving," said Smith. "But we should be terrified about conventional driving."