Robots learn to understand the context of what you say

You don't have to explicitly identify what you're talking about.

Telling robots what to do can be frustrating, especially if you aren't a programmer. Robots don't really understand context: when you ask them to "pick it up," they don't usually know what "it" is. MIT's CSAIL team is fixing that. They've developed a system, ComText, that helps robots understand contextual commands. Effectively, the researchers are giving robots a form of episodic memory, in which they remember details about objects, including their position, type and who owns them. If you tell a robot "the box I'm putting down is my snack," it'll know to grab that box if you ask it to fetch your food.
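To make the idea concrete, here is a toy sketch of what remembering facts about objects could look like in code. It is not ComText's implementation, whose internals aren't described here; the names `ObjectFact`, `EpisodicMemory`, `remember` and `resolve`, along with the object IDs, coordinates and the owner "alice", are all invented for illustration.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Set, Tuple


@dataclass
class ObjectFact:
    """One remembered detail about an object (hypothetical structure)."""
    object_id: str
    kind: str                          # type of object, e.g. "box"
    position: Tuple[float, float]      # where the object was last seen
    owner: Optional[str] = None        # who the object belongs to, if known
    roles: Set[str] = field(default_factory=set)  # labels taught by a person, e.g. "snack"


class EpisodicMemory:
    """Toy store of facts the robot has been told (an assumption, not ComText's design)."""

    def __init__(self) -> None:
        self._facts: List[ObjectFact] = []

    def remember(self, fact: ObjectFact) -> None:
        self._facts.append(fact)

    def resolve(self, role: str, owner: str) -> Optional[ObjectFact]:
        # Search newest-first so the most recent statement wins.
        for fact in reversed(self._facts):
            if role in fact.roles and fact.owner == owner:
                return fact
        return None


memory = EpisodicMemory()

# "The box I'm putting down is my snack."
memory.remember(ObjectFact("box-1", "box", (0.4, 0.2), owner="alice", roles={"snack"}))
# A second box nobody has said anything about.
memory.remember(ObjectFact("box-2", "box", (0.9, 0.1)))

# "Fetch my snack."
target = memory.resolve("snack", owner="alice")
print(target.object_id if target else "unknown object")
```

The point of the sketch is only that a later, vague request ("my snack") can be matched against something said earlier. A real system also has to parse the spoken sentence and perceive the objects, which this example skips entirely.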

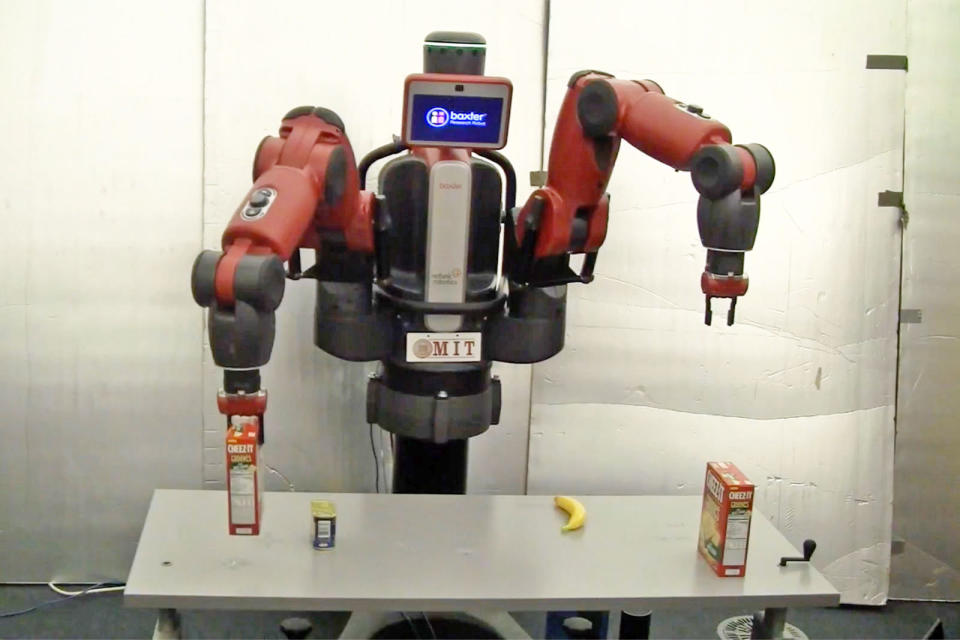

In testing with a Baxter robot, ComText correctly understood commands 90 percent of the time. That's not reliable enough for use in the field, but it shows that the underlying concept is sound.

Of course, robots are still a long way from understanding all the vagaries of human language. They won't know what you see as a snack unless you teach them first, for instance, and CSAIL wants to address this with future work. However, it's already evident that systems like ComText will be crucial to making autonomous robots useful in the real world, where people generally don't want (or expect) to issue explicit commands every time they need something done. You could speak to robot helpers almost as if they were human, rather than carefully choosing your words.