tay


Microsoft's "Zo" chatbot picked up some offensive habits

It seems that creating well-behaved chatbots isn't easy. Over a year after Microsoft's "Tay" bot went full-on racist on Twitter, its successor "Zo" is suffering a similar affliction.

Microsoft's AI strategy is about more than just Cortana

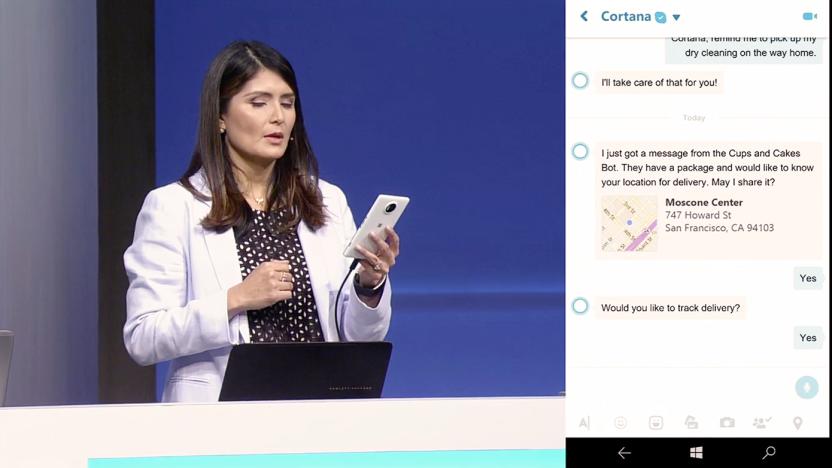

With the Tay fiasco fresh in our minds, Microsoft has unveiled its "Conversations as a Platform" strategy at its annual Build developer conference, in the hopes that devs will create AI bots that work with Cortana. The idea is to make it easy to do things like shop, order services, look up your flight or schedule meetings simply by chatting with Microsoft's virtual assistant.

Microsoft is betting big on AI chatbots like Tay

Tay, the AI-powered chatbot that ended up spewing hate speech on Twitter, is just the beginning for Microsoft. At its Build developer conference later today, Microsoft CEO Satya Nadella will unveil a broader "conversation as a platform" strategy, which involves releasing many chatbots built for different purposes, Businessweek reports. You'll be able to message them just like Tay, but we'll also get a glimpse of bots built into Skype that can do things like book hotel rooms. Just as it did with Windows 10 apps last year, Microsoft is hoping to get developers excited about building bots at Build.

Microsoft's Tay AI makes brief, baffling return to Twitter

Microsoft's Twitter AI experiment -- Tay -- briefly came back online this morning. Tay was initially switched off after learning the hard way that, basically, we're all terrible people and cannot be trusted to guide even a virtual teenage mind. This morning, however, it looks like Microsoft temporarily flipped the switch, activating the account again. It didn't take long before the artificial intelligence got itself into a bit of bother by tweeting at itself and instantly replying. The result? An infinite loop of telling itself "You are too fast, please take a rest..." Within minutes, Tay was offline again. Or rather, the account had been made private.

Microsoft shows what it learned from its Tay AI's racist tirade

If it wasn't already clear that Microsoft learned a few hard lessons after its Tay AI went off the deep end with racist and sexist remarks, it is now. The folks in Redmond have posted reflections on the incident that shed a little more light on both what happened and what the company learned. Believe it or not, Microsoft did stress-test its youth-like code to make sure you had a "positive experience." However, it also admits that it wasn't prepared for what would happen when it exposed Tay to a wider audience. It made a "critical oversight": it didn't account for a dedicated group exploiting a vulnerability in Tay's behavior that would make her repeat all kinds of vile statements.

It's not Tay's fault that it turned racist. It's ours.

Microsoft had to pull its fledgling chatbot, Tay, from Twitter on Thursday. The reason: In less than 24 hours, the AI had been morally corrupted to the point that it was freely responding to questions with religious, sexist and ethnic slurs. It spouted white supremacist slogans, outlandish conspiracy theories and no small amount of praise for Hitler. Microsoft released a statement on how things went sideways so quickly, though that's done little to lessen the outrage from internet users. But I would argue that this rage is misplaced.

Microsoft grounds its AI chat bot after it learns racism

Microsoft's Tay AI is youthful beyond just its vaguely hip-sounding dialogue -- it's overly impressionable, too. The company has grounded its Twitter chat bot (that is, temporarily shut it down) after people taught it to repeat conspiracy theories, racist views and sexist remarks. We won't echo them here, but they involved 9/11, GamerGate, Hitler, Jews, Trump and less-than-respectful portrayals of President Obama. Yeah, it was that bad. The account is visible as we write this, but the offending tweets are gone; Tay has gone to "sleep" for now.