moderation

Latest

YouTube reportedly considered screening all YouTube Kids videos

YouTube paid the FTC a $170 million fine this year, which was pocket change for Google. However, the charge of violating the Children's Online Privacy Protection Act will remain a very costly stain on its reputation. In fact, things got so bad for YouTube when it came to kids last year that the site reportedly considered individual screening for every YouTube Kids video, according to Bloomberg.

YouTube AI thought robot fighting was animal cruelty

In the latest example of the need for further human moderation, YouTube's automated system took down several videos after mistaking robot fighting matches for animal cruelty. Those affected, including some BattleBots contestants, received a message stating, "Content that displays the deliberate infliction of animal suffering or the forcing of animals to fight is not allowed on YouTube." Was this a mere glitch, or are the robots gaining empathy for their brethren?

An Amazon employee might have listened to your Alexa recording

Yes, someone might listen to your Alexa conversations someday. A Bloomberg report has detailed how Amazon employs thousands of full-timers and contractors from around the world to review audio clips from Echo devices. (Update: Amazon has clarified to us that the recordings are captured after the wake word is detected.) Apparently, these workers transcribe and annotate recordings, which they then feed back into the software to make Alexa smarter than before. The process helps beef up the voice AI's understanding of human speech, especially for non-English-speaking countries or for places with distinctive regional colloquialisms. In French, for instance, an Echo speaker could hear avec sa ("with his" or "with her") as "Alexa" and treat it as a wake word.

Steam mods will filter 'off-topic review bombs' from ratings

One of the issues with relying on user reviews to rate content is the possibility that some of those reviews may not be written in entirely good faith. Recently Rotten Tomatoes took new steps to manage the impact of fake reviews submitted for Captain Marvel, while Netflix responded to several instances of "review bombing" by removing written reviews from its service entirely. Over the years Steam has taken a few different steps to deal with the issue, and its latest response is a combination of automated scanning and human moderation teams. In a blog post it explained the plan: "we're going to identify off-topic review bombs, and remove them from the Review Score." In practice, what it has is a tool that monitors reviews in real time to detect "anomalous" activity that suggests something is happening. The tool alerts a team of moderators, who then investigate the reviews. If they find a spate of "off-topic reviews," they'll alert the developer and exclude those reviews from the calculation of the game's score, although the reviews themselves will stay up.

Facebook defends its moderation policies, again

Now that the latest New York Times article about Facebook has hit -- following earlier stories on its moderation missteps from Motherboard and ProPublica -- the social network is once again defending itself. In a blog post it denied charges that moderators operate under "quotas," saying that "Reviewers' compensation is not based on the amount of content they review, and our reviewers aren't expected to rely on Google Translate as they are supplied with training and supporting resources."

Facebook's leaked moderation 'rulebook' is as confused as you'd think

Nearly a year ago, ProPublica tested Facebook's moderation with multiple items of hate speech, and the company apologized after it failed to treat many of them properly based on its policies. In May, documents leaked to Motherboard showed its quickly shifting content policies -- and now another New York Times report cites leaked moderation guidelines showing how it is, and in many cases is not, handling hate and propaganda messages. Issues include failures to keep up-to-date information on shifting political situations in countries like Sri Lanka and Bosnia, while a paperwork error allowed an extremist group in Myanmar to keep using Facebook for months longer than it should have. There's evidence Facebook is misinterpreting laws restricting speech in countries like India, and the focus seems heavily weighted toward protecting Facebook's reputation more than anything else.

Facebook will cooperate with French hate speech investigation

Facebook plans to cooperate with the French government as it investigates the company's content moderation policies and systems, according to TechCrunch. Facebook will reportedly grant the government significant access to its internal processes for the informal investigation, which will primarily focus on hate speech on the platform.

Facebook accused of shielding far-right activists who broke its rules

An upcoming documentary reportedly reveals that Facebook has been protecting far-right activists, even though they would normally have been banned over rule violations. Dispatches, a documentary series from the UK's Channel 4, sent a reporter undercover and found toxic content, including graphic violence, child abuse and hate speech, that moderators from Facebook contractor CPL refused to remove. Facebook admitted that it made mistakes with regard to content moderation, but denied that it sought to profit from the extreme content.

Netflix will remove user reviews from its website next month

Aside from a looming 'Ultra' tier that could raise prices while restricting features like 4K, HDR or simultaneous streams, Netflix has recently notified users of one feature that's definitely going away: written user reviews. While Netflix dropped the five-star rating system from its apps early last year, on the website users can still write down and share their thoughts.

Leaked Facebook documents show its shifting hate speech policies

Over the last year or so, Facebook's public statements have reflected the ongoing evolution of its moderation policies, both when it comes to election fraud and the even pricklier issue of hate speech. Now, beyond its publicly available Community Standards and various apologies, Motherboard has published internal documents showing what it's actually policing, and how that has changed over time.

Zuckerberg apologizes for Facebook's response to Myanmar conflict

Mark Zuckerberg has been accused of keeping too quiet on the many issues affecting Facebook recently, so Myanmar activists were surprised when they received a personal response from the chief exec following their open letter criticizing his approach to hate speech in their conflict-stricken country.

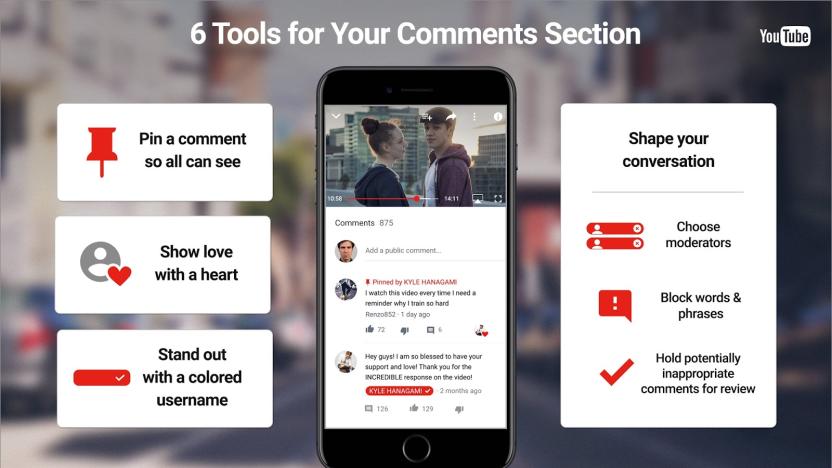

YouTube gives creators more control over the comment section

YouTube comments have historically been a toxic cesspool, but Google's video platform is finally making some changes to give creators and money-makers even more control over the conversations that take place below their videos. Today, YouTube announced a new set of commenting tools meant to help creators engage with and build their communities.

SOE relies on players to report inappropriate posts

If you see something, SOE definitely wants you to say something. EverQuest II CM Linda Carlson responded to a post on EQ2Wire to say that the studio depends on its players to help police the "meeelions" of comments in its forums. "Unless we are actually perusing the forums when something comes up, we do indeed rely on players helping to keep the forum community a reasonable and comfortable place to post by reporting disruptive or inappropriate posts," Carlson said. Carlson went on to give advice on how to constructively post on forums and avoid unnecessary conflict with other posters, including "Never post angry. Never post drunk." and "Two trolls do not make a right."

Ask Massively: Nick Burns edition

Today's Ask Massively letters come from David and Abionie, both of whom wrote us over the summer with comments about, well, our comments. This first one's from David: I'm probably not the only one who is generally displeased with the state of trolls on the internet today. You know them: doomsayers, hatespreaders, the "HAHA company X is failing, finally!" types, the kind of people for whom the hatred of a video game or company has become more addictive than playing the game that company made. Unfortunately, the internet gives soapboxes to people who probably don't have much of a relationship with soap. I would like to see more moderation towards keeping an articles comments on the topic of the article. It's becoming tiring to open up an article talking about something I am interested in, only to fear scrolling down too far and inadvertently opening up the comments to see "FAIL GAME IS FAIL COPYING X GAME IS LAZY" and the many hundreds of comments like that. We hear you, David, and not just about the soap. But also that.

Ask Massively: Everything in moderation

Happy Fourth of July, Americans, and happy new columnist day to Massively! Yes, it's official: Anatoli Ingram will be joining the Massively staff this month as our new Guild Wars 2 columnist. Those of you who dwell in the land of comments and trolls might recognize him from his erudite posts as Ring Bonefield. We're thrilled to have him on board to lend a pen and a critical eye to such a popular game, and we were equally thrilled at the impressive pool of applicants. Thank you all for applying! Speaking of commenters, we have comments on the brain today in Ask Massively. Let's review how -- or more specifically, when -- we moderate the comment section.

Ask Massively: The mobile site and trolly trollersons

Welcome back to Ask Massively, that corner of the site where we take a stab at answering random questions you deposit in our inboxes and comments. Hey guys, we have a cave troll, checkit. soundersfc.tid wrote: I have a question or two about the commenting system. Do you think there will ever be a way to flag offensive comments through the mobile version of the site? And speaking of offensive comments, what metric does Massively use when considering permanent bans on commenters? Long-time mobile viewers will know that our mobile site is... well, it's a thing. A thing that doesn't get a whole lot of love from the technical staff, unfortunately. We were thrilled that the new comment system works so well on mobile, but you're right: It has some deficiencies, which is a bummer because according to our site analytics, a lot of you surf from your favorite hand-held gadgets.

Microsoft's Toulouse explains why swastikas are banned on Xbox Live

An Xbox Live user recently asked professional banhammer-wielder Stephen Toulouse whether he could use a swastika as his Call of Duty: Black Ops emblem, to which Toulouse responded, "No, of course you can't, we'll ban you." Apparently, this sparked quite a reaction from gamers who witnessed the exchange and defended the swastika as a symbol widely used in several Eastern religions. In a post on his personal blog, Toulouse explained why this argument doesn't hold much water with the Xbox Live moderation crew. "Let's be clear: no educated human on the planet looks at the swastika symbol ... and says 'oh, that symbol has nothing at all in any way to do with global genocide of an entire race,'" Toulouse stated, "'and, even if it did, one should totally and reasonably ignore that because it's a symbol that was stolen or co-opted from religions.'" He succinctly adds, "If you think the swastika symbol should be re-evaluated by societies all over the Earth, I think that's great. Your Xbox Live profile or in game logo, which doesn't have the context to explain your goal, is not the right place to do that." We certainly know that every time we see someone use the swastika as their identifier on an online game, our first thought is, "Oh, how nice, they're pushing for a global re-evaluation of the meaning of the swastika." Like, that's just common sense, isn't it?

Internet content filters are human too, funnily enough

Algorithms can only take you so far when you want to minimize obscene content on your social networking site. As the amount of user-uploaded content has exploded in recent times, so has the need for web content screeners, whose job it is to peruse the millions of images we throw up to online hubs like Facebook and MySpace every day, and filter out the illicit and undesirable muck. Is it censorship or just keeping the internet from being overrun with distasteful content? Probably a little bit of both, but apparently what we haven't appreciated until now is just how taxing a job this is. One outsourcing company already offers counseling as a standard part of its benefits package, and an industry group set up by Congress has advised that all should be providing therapy to their image moderators. You heard that right, people, mods need love too! Hit the source for more.

The new Massively.com Code of Conduct

Well hey there readers! Today I come to you with a brief but important announcement -- we have a new code of conduct! What does that mean for you? Well, in short, we want to take care of our comment section a little more closely than we have in the past. You're going to see us more frequently in the comments, discussing the news with you guys, and you're also going to see us moderating the comment section from time to time. What does this not mean for you? Well, we're certainly not going to squelch your opinions. We're doing this to make our comments section a great place to discuss games, news, and MMOs at large, no matter your opinion. We just want to put a few ground rules into place, and let you all know what is and what is not acceptable to write down in our comments section. For most of you, you're already abiding by the new code of conduct, so don't go worrying yourself. However, we certainly invite you all to go check out the new home of the Code of Conduct and familiarize yourself with the five basic rules of commenting. Plus, if you have any comments, questions, or concerns about any of this, you can always contact me personally at seraphina AT massively DOT com. So no worries! Comment away, dear readers! We can't wait to see what opinions you have to share with us!

The sad fate of the PlayOnline Viewer

Those of you who are currently playing Final Fantasy XI or who have played it in the past will remember the PlayOnline Viewer. Those of you who haven't will have no idea what it is, which might be for the best. Square-Enix originally included the program with the launch of Final Fantasy XI with the expectation that it would serve as a hub for a variety of different online games, acting as a chat program, a launcher, a social network hub, and a support site. Of course, when you think of all those things, what you probably think of is Steam, which does all of those things quite well. The PlayOnline Viewer, on the other hand, has proved excellent at doing... well, it mostly means more clicks before you get into FFXI. And as Pet Food Alpha has recently noted, it also seems to be hosting wholly unmoderated explicit chat rooms. Square-Enix has stated that Final Fantasy XIV will not use the viewer, which suggests the company has abandoned it as a poor idea. Steam works, in part, because it sells a variety of both online and offline games, most of which don't come from its parent company. Given the viewer's clunky interface and strange functional restrictions, it's hardly a surprise that the service never took off... but in light of recent events, the company's policy of ignoring it completely might not be the best plan.