If hacking back becomes law, what could possibly go wrong?

Because escalation always ends well.

Representative Tom Graves, R-Ga., thinks that when anyone gets hacked -- individuals or companies -- they should be able to "fight back" and go "hunt for hackers outside of their own networks." The Active Cyber Defense Certainty ("ACDC") Act is getting closer to being put before lawmakers, and the congressman trying to make "hacking back" easy-breezy-legal believes it would've stopped the WannaCry ransomware.

Despite the bill's endlessly lulzy acronym, Graves says he "looks forward to formally introducing ACDC" to the House of Representatives in the next few weeks.

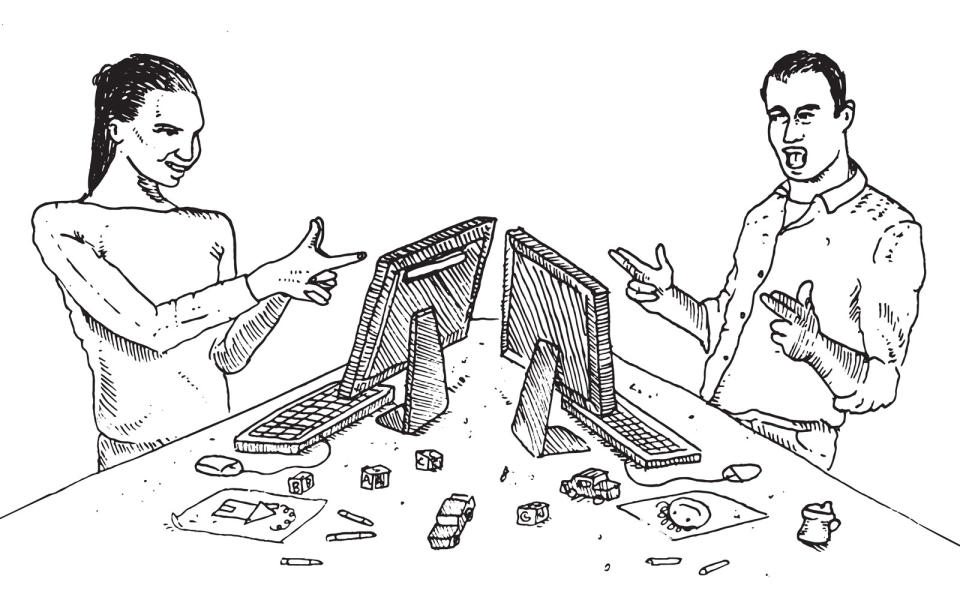

Hacking back sounds really awesome at first glance, especially (and obviously) to lawmakers. The hacking back of "ACDC" in action would most likely happen just like on Mr. Robot, or in that movie about black hats. Evil hackers invade a company's computer system and plant a cyberbomb. Except the company's IT department is actually a crack team of formerly-evil hackers who now work for the good guys (you can tell they're the good guys because they have jobs). Because it's now legal, the good guys dive into The Matrix and defuse the cyberbomb just in time! Then they fly through the wires to get into the bad guy's computer, instantly downloading his name and address and Tinder profile and sending it all to the FBI, who bust through the guy's door right as he's trying to wipe the files!

Yeah, that's probably how lawmakers see it happening, too.

Attacks that go both ways

The bipartisan ACDC bill would let companies that believe they are under ongoing attack break into the computer of whoever they think is attacking them, for the purposes of stopping the attack or gathering info for law enforcement. According to early press, the bill "includes caveats such as you cannot destroy data on another person's computer, cause physical injury to someone or create a threat to public safety."

This is Rep. Graves' second attempt at making ACDC work out with all his friends. His May 25th revision of the legislation included attempts at limiting collateral damage, which, as you may have suspected, is a particular criticism from people who work in infosec.

According to Politico, which had the early scoop on the new version:

Key changes include: a mandatory reporting requirement for entities that use active-defense technique to help federal law enforcement ensure such tools are used responsibly; a two-year sunset clause that would make Congress revisit the law in order to make changes; and an exemption allowing people or companies to recover their lost data if it's found using defensive techniques and can be grabbed back without destroying other data.

Setting aside the naive idea that digital secrets can somehow be "stolen back," that information alone illustrates why most people don't consider hacking back a great idea.

Brian Bartholomew, senior security researcher at Kaspersky Lab, told Engadget, "While the proposal's intent is to make it more difficult for an attack to be successful, it also raises major concern within the community." He explained that for starters, it's impossible to contain what data the victim touches when they're hacking back, destroying the bill's rule that victims only mess with their own stolen property.

"Another concern is for chain-of-custody preservation," Bartholomew told Engadget, even if victims tell law enforcement what they're about to do. "Providing a 'plan of action' is a far cry from possessing the proper training or legal expertise on how to preserve evidence that will be upheld in a court of law," he explained. "It is only a matter of time until the first criminal is prosecuted and evidence [is] thrown out due to improper chain of custody or documentation."

Attribution is hard and usually wrong when not done by teams of professional analysts. And even then they get it wrong thanks to the incredible skills attackers have at what's called "false flagging" -- making attacks look like they're from someone else.

Bartholomew, who co-authored the leading research on "false-flag operations," agreed. As has been demonstrated many times, attackers are becoming more and more aware of techniques to throw defenders off their scent and lead them down a wrong path.

"Pointing the proverbial finger at another attacker is becoming more commonplace and with the introduction of this bill, the possible outcome of such an act can potentially be devastating. Performing proper attribution of an attack is an already difficult and sometimes impossible task, especially for an untrained person."

There is also the serious concern that a hack-back attack might spark a bigger issue between nations than anyone's considering.

Last September, the Ethics + Emerging Sciences Group at Cal Poly released a report called "Ethics of Hacking Back," a neutral look at the issues and laws surrounding the infosec version of "an eye for an eye."

The report comes from the stance that reasonable arguments exist to support hacking back. Still, in a summary from Patrick Lin, Ph.D., the group's director, Cal Poly found that the concept of hacking back fails across a number of categories. "If meant as a deterrent," Lin wrote, "hacking back would likely not deter malicious and ideological attackers."

Importantly, the paper cuts to the heart of all hacking-back discussions by raising a critical issue: We don't know if it would actually work or not because there is no data. "Currently," Lin said, "there is no self-reporting of hacking back because the practice is presumed to be 'likely illegal,' according to the U.S. Department of Justice."

We cannot track what we do not measure. Without that data—a way for individuals and organizations to safely report countermeasures, without fear of being made into criminals—it is difficult to answer the question of whether hacking back has deterrent value, which is an empirical question.

More to the ideological point of Graves' bill, Lin points out that hacking back also won't do anything to restore security.

By peddling the idea that hacking back could've stopped WannaCry -- ransomware -- ACDC is security snake oil. It's reminiscent of the "anonymity box" gold rush that hit Kickstarter after the Snowden snatch-and-dump, where charlatans sold "Tor in a box," promising that their gadgets would've prevented the Sony hack. Just take a recent, dimly-understood hacking disaster and graft on an infantile solution, don't bother to consult people in the trenches, and off we go.

When you try to make laws about hacking based on a child's concept of "getting someone back," you're getting very far away from making yourself secure. It's like trying to make gang warfare productive. Or trying to make it legal to break into someone's house to steal your stuff back and take photos, because they broke into yours, except with nothing in your favor to make it go right. Unless you're really, really good at different kinds of hacking. Spoiler: Most everyone in hacking and security isn't, no matter how hard they try to make us all think all hackers are good at everything.

It's a smarmy and conceited bill to propose. But that seems to be going around D.C. lately, so good luck to us.

Images: Yuri_Arcurs via Getty Images (Guys at computer); EFE/EPA/RITCHIE B. TONGO (WannaCry ransomware)