In 2017, society started taking AI bias seriously

Our algorithms have the same race and gender prejudices as us.

A crime-predicting algorithm in Florida falsely labeled black defendants as likely re-offenders at nearly twice the rate of white defendants. Google Translate converted the gender-neutral Turkish terms for certain professions into "he is a doctor" and "she is a nurse" in English. A Nikon camera asked its Asian user whether someone had blinked in the photo; no one had.

From the ridiculous to the chilling, algorithmic bias -- social prejudices embedded in the AIs that play an increasingly large role in society -- has been exposed for years. But it seems in 2017 we reached a tipping point in public awareness.

Perhaps it was the way machine learning now decides everything from our playlists to our commutes, culminating in the flawed social media algorithms that influenced the 2016 presidential election through fake news. Meanwhile, increasing attention from the media and even the art world both confirms and recirculates awareness of AI bias outside the realms of technology and academia.

Now, we're seeing concrete pushback. The New York City Council recently passed what may be the first AI transparency bill in the US, requiring government bodies to make public the algorithms behind their decision-making. Researchers, along with the ACLU, have launched new institutes to study AI prejudice, while Cathy O'Neil, author of Weapons of Math Destruction, launched an algorithmic auditing consultancy called ORCAA. Courts in Wisconsin and Texas have started to limit algorithms: in Wisconsin, by mandating a "warning label" about their accuracy in crime prediction, and in Texas, by allowing teachers to challenge their calculated performance rankings.

"2017, perhaps, was a watershed year, and I predict that in the next year or two the issue is only going to continue to increase in importance," said Arvind Narayanan, an assistant professor of computer science at Princeton and a data privacy expert. "What has changed is the realization that these aren't specific exceptions of racial and gender bias. It's almost definitional that machine learning is going to pick up and perhaps amplify existing human biases. The issues are inescapable."

Narayanan co-authored a paper, published in Science in April, that analyzed how an AI interprets the meaning of words. Beyond their dictionary definitions, words carry a host of socially constructed connotations. Studies of humans have shown that people more quickly associate male names with words like "executive" and female names with words like "marriage," and the study's AI did the same. The software also perceived European American names (Paul, Ellen) as more pleasant than African American ones (Malik, Shereen).

The AI learned these associations from human writing: the Common Crawl corpus of online text, as well as Google News. This is the basic problem with AI: Its algorithms are not neutral, and the reason they're biased is that society is biased. "Bias" is simply cultural meaning, and a machine cannot separate unacceptable social meanings (men with science; women with arts) from acceptable ones (flowers are pleasant; weapons are unpleasant). A prejudiced AI is an AI replicating the world accurately.
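The kind of association the researchers measured can be sketched in a few lines. This is a simplified illustration under stated assumptions, not the paper's code: it assumes each word is represented as a list of numbers (a word "embedding") and scores how much closer a name sits to one attribute word than to another using cosine similarity. The three-number vectors and the `association` helper below are invented for illustration; real embeddings trained on corpora like Common Crawl have hundreds of dimensions, and the study's full test compares whole sets of words with a statistical significance check.

```python
import math

# Toy word vectors, invented purely for illustration.
# Real embeddings learned from large text corpora have hundreds of dimensions.
TOY_VECTORS = {
    "paul": [0.9, 0.1, 0.3],
    "ellen": [0.2, 0.9, 0.3],
    "executive": [0.8, 0.2, 0.1],
    "marriage": [0.1, 0.8, 0.2],
}


def cosine(a, b):
    """Cosine similarity: 1.0 means the two vectors point the same way."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def association(word, attr_a, attr_b, vectors=TOY_VECTORS):
    """Hypothetical helper: how much closer `word` is to attr_a than to attr_b.

    A positive score means the word leans toward attr_a; negative, toward attr_b.
    """
    v = vectors[word]
    return cosine(v, vectors[attr_a]) - cosine(v, vectors[attr_b])


for name in ("paul", "ellen"):
    print(name, round(association(name, "executive", "marriage"), 3))
```

With these made-up vectors, "paul" leans toward "executive" and "ellen" toward "marriage." The point is only that such a lean becomes a measurable number once words are turned into vectors, which is what allows researchers to show that a model has absorbed a pattern from its training text.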

"Algorithms force us to look into a mirror on society as it is," said Sandra Wachter, a lawyer and researcher in data ethics at London's Alan Turing Institute and the University of Oxford.

For an AI to be fair, then, it must not simply reflect the world but create a utopia, a perfect model of fairness. This requires the kind of value judgments that philosophers and lawmakers have debated for centuries, and it means rejecting the common but flawed Silicon Valley rhetoric that AI is "objective." Narayanan calls this an "accuracy fetish": the way big data has allowed everything to be broken down into numbers that seem trustworthy but conceal discrimination.

The datafication of society and the Moore's Law-driven explosion of AI have essentially lowered the bar for testing any kind of correlation, no matter how spurious. For example, recent AIs have tried to determine from a headshot alone whether a person is gay, in one case, or criminal, in another.

Then there was the AI that sought to measure beauty. Last year, the company Beauty.AI held an online pageant judged by algorithms. Out of about 6,000 entrants, the AI chose 44 winners, the majority of whom were white; only one appeared to have dark skin. Human beauty is a concept that has been debated since the days of the ancient Greeks. The idea that it could be number-crunched by six algorithms that measured factors like pimples and wrinkles and compared contestants to models and actors is naïve at best. Deeply human questions were at play (What is beauty? Is every race beautiful in the same way?), and the scientists alone were ill-equipped to wrestle with them. So instead, perhaps unwittingly, they replicated the Western-centric standards of beauty and colorism that already exist.

The major question for the coming year is how to remove these biases.

First, an AI is only as good as the training data fed into it. Data that is already riddled with bias, like texts that associate women with nurses and men with doctors, will create bias in the software. Availability often dictates what data gets used. The 200,000 Enron emails that authorities made public while the company was prosecuted for fraud, for example, have reportedly since been used in fraud detection software and in studies of workplace behavior.

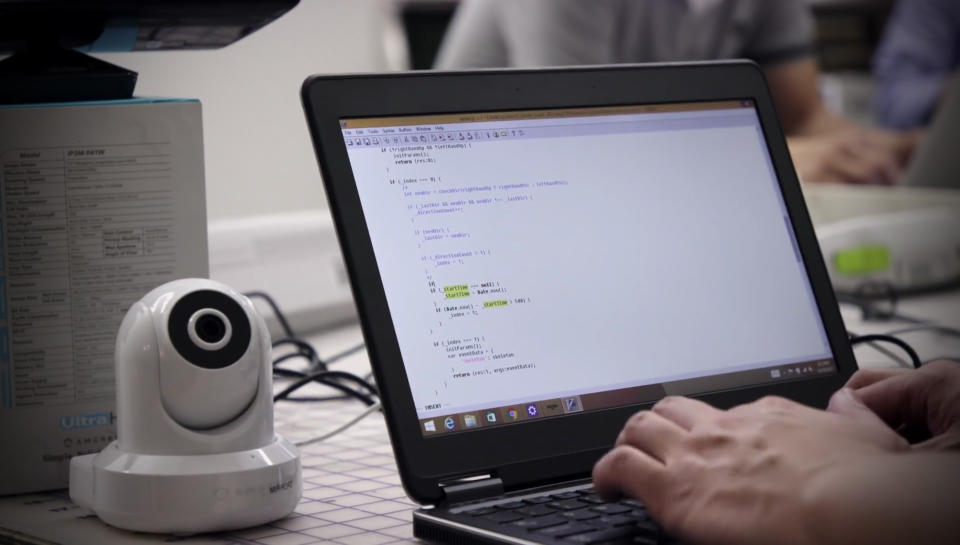

Second, programmers must be more conscious of biases while composing algorithms. Like lawyers and doctors, coders are increasingly taking on ethical responsibilities, but with little oversight. "They're diagnosing people, they're preparing treatment plans, they're deciding if somebody should go to prison," said Wachter. "So the people developing those systems should be guided by the same ethical standards that their human counterparts have to be."

This guidance involves dialogue between technologists and ethicists, said Wachter. For instance, the degree of accuracy required before a judge can rely on crime prediction is a moral question, not a technological one.

"All algorithms work on correlations -- they find patterns and calculate the probability of something happening," said Wachter. "If the system tells me this person is likely to re-offend with a competence rate of 60 percent, is that enough to keep them in prison, or is 70 percent or 80 percent enough?"

"You should find the social scientists, you should find the humanities people who have been dealing with these complicated questions for centuries."
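Wachter's question about 60, 70 or 80 percent can be made concrete with a toy example. The sketch below assumes a hypothetical risk tool that outputs a re-offense probability for each person; the people, the scores and the `flagged` helper are all invented for illustration. It shows only that the cutoff is a parameter someone has to choose: the same model output leads to different numbers of people being held depending on where the bar is set.

```python
# Hypothetical re-offense probabilities from an imaginary risk tool.
# These numbers are invented for illustration only.
risk_scores = {"A": 0.55, "B": 0.62, "C": 0.68, "D": 0.74, "E": 0.81}


def flagged(scores, threshold):
    """Return, alphabetically, the people whose score meets or exceeds the threshold."""
    return sorted(name for name, p in scores.items() if p >= threshold)


# The model's scores never change here; only the human-chosen cutoff does.
for threshold in (0.6, 0.7, 0.8):
    print(f"{threshold:.0%} cutoff:", flagged(risk_scores, threshold))
```

Nothing in the code says which cutoff is right. Moving it from 60 to 80 percent shrinks the flagged group from four people to one, and deciding which of those outcomes is acceptable is the moral judgment Wachter describes.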

A crucial issue is that many algorithms are "black boxes," and the public doesn't know how they make decisions. Tech companies have pushed back against greater transparency, saying it would reveal trade secrets and leave them susceptible to hacking. When it's Netflix deciding what you should watch next, the inner workings are not a matter of immense public importance. But for public agencies dealing with criminal justice, healthcare or education, the nonprofit AI Now argues that if a body can't explain its algorithm, it shouldn't use it, because the stakes are too high.

In May 2018, the General Data Protection Regulation will come into effect in the European Union, aiming to give citizens a "right to explanation" for any automated decision and the right to contest those decisions. Fines for noncompliance can reach 4 percent of global annual revenue, which would mean billions of dollars for behemoths like Google. Critics including Wachter say the law is vague in places (it's unclear how much of the algorithm must be explained) and that its implementation may be defined by local courts, but it still sets a significant precedent.

Transparency without better algorithmic processes is also insufficient. For one, explanations may be unintelligible to regular consumers. "I'm not a great believer in looking into the code, because it's very complex, and most people can't do anything with it," said Matthias Spielkamp, founder of the Berlin-based nonprofit AlgorithmWatch. "Look at terms and services -- there's a lot of transparency in that. They'll tell you what they do on 100 pages, and then what's the alternative?" Transparency may not solve the deep prejudices in AI, but in the short run it creates accountability and allows citizens to know when they're being discriminated against.

The near future will also bring fresh challenges for any kind of regulation. Simple AI is basically a mathematical formula full of "if this then that" decision trees, and humans set the criteria for what the software "knows." Increasingly, though, AI relies on deep neural networks, where the software is fed reams of data and finds its own correlations. In these cases, the AI is teaching itself. The hope is that it can transcend human understanding, spotting patterns we can't see; the fear is that we have no idea how it reaches decisions.

"Right now, in machine learning, you take a lot of data, you see if it works, if it doesn't work you tweak some parameters, you try again, and eventually, the network works great," said Loris D'Antoni, an assistant professor at the University of Wisconsin-Madison, who is co-developing FairSquare, a tool for measuring and fixing bias. "Now even if there was a magic way to find that these programs were biased, how do you even fix it?"

An area of research called "explainable AI" aims to teach machines how to articulate what they know. An open question is whether AI's inscrutability will outpace our ability to keep up with it and hold it accountable.

"It is simply a matter of our research priorities," said Narayanan. "Are people spending more time advancing the state of the art AI models or are they also spending a significant fraction of that time building technologies to make AI more interpretable?"

Which is why it matters that, in 2017, society at large increasingly grappled with the flaws in machine learning. The more that prejudiced AI is in the public discourse, the more of a priority it becomes. When an institution like the EU adopts uniform laws on algorithmic transparency, the conversation reverberates through all 28 member states and around the world: to universities, nonprofits, artists, journalists, lawmakers and citizens. These are the people -- alongside technologists -- who are going to teach AI how to be ethical.