It's too easy to trick your Echo into spying on you

"Alexa, define privacy."

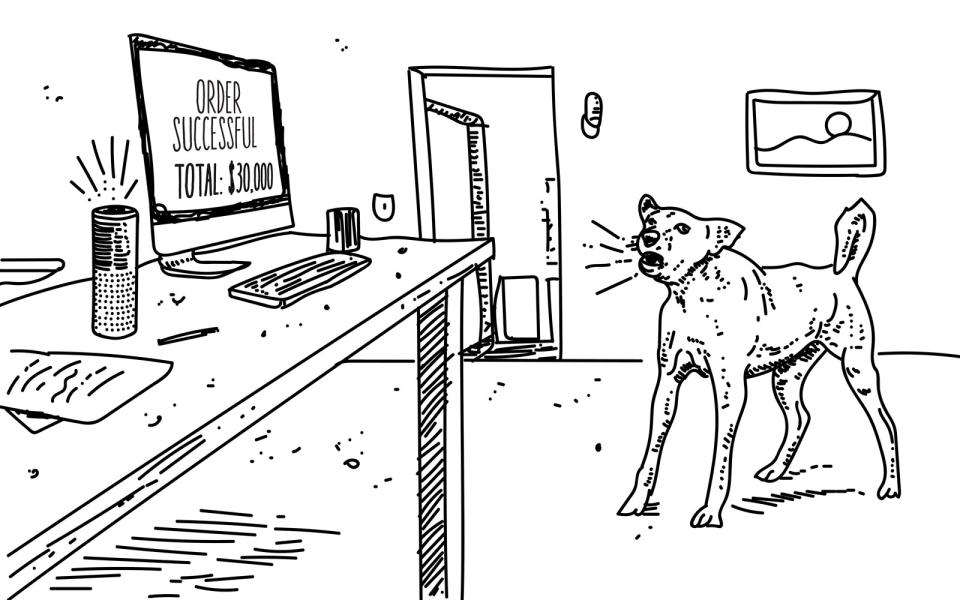

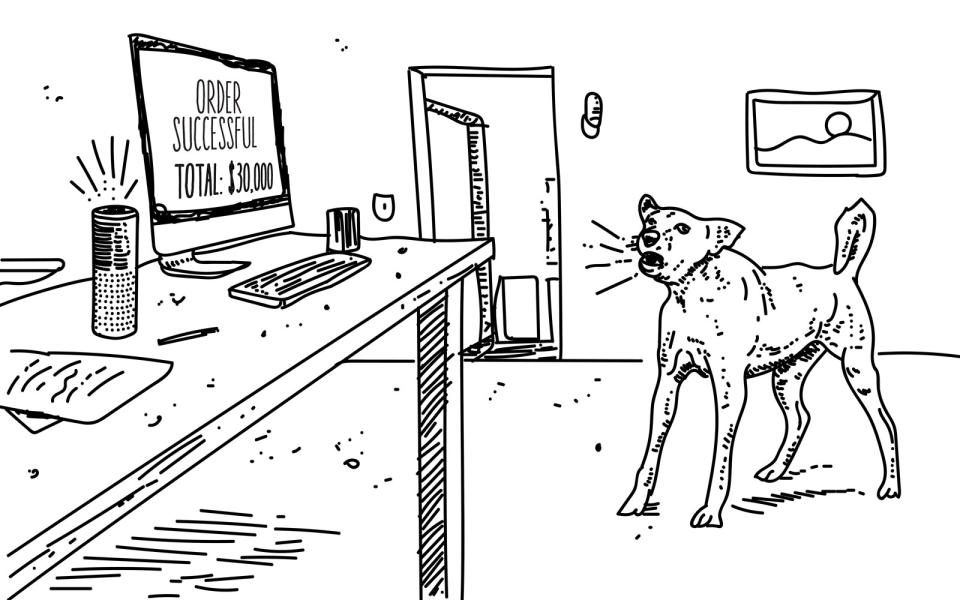

The main reason most people get an Amazon Echo, with its onboard AI servant, Alexa, is convenience. But after a family in Oregon found out Alexa recorded at least one private conversation and sent it to a contact in their address book, you might want to sacrifice convenience for privacy and personal security. Or maybe you should at least keep the microphone turned off when not in use. Not very convenient, I know.

Danielle (who identified herself only by her first name) and her family were going about their normal lives when her husband's employee unexpectedly called them and urged them to turn off all the Amazon devices in their home. Alexa had apparently sent him recordings of conversations in their house, and the family had been unaware of both the recording and the sending of the audio. The device's AI seemed to have selected someone in their address book at random.

It was more than chilling. The couple hurried to turn off all their devices and called Amazon.

"They said 'our engineers went through your logs, and they saw exactly what you told us, they saw exactly what you said happened and we're sorry.' He apologized like 15 times in a matter of 30 minutes and he said we really appreciate you bringing this to our attention, this is something we need to fix!" Danielle told KIRO 7.

When their harrowing story made big headlines, Amazon responded to press with a statement, quoted in full at the end of this piece.

Amazon's explanation had a lot of people going, wow, there's a lot that needs to line up in order for something this crazy to actually happen. Voice activation. Confirmation. Instructions, and more confirmation. At least Amazon isn't blaming the user like Facebook loves to do.

There's just one hitch. "For the record," wrote Computerworld, "the family says they didn't hear the Echo saying anything."
Danielle told press in a follow-up that the device volume was set at seven (out of 10), that it was next to her the entire time, and that she was never asked anything by the device (let alone for permission to record and send her conversation).

As some readers may remember, just one month before this happened, researchers at security firm Checkmarx discovered that the Echo could be turned into a wiretap. Their post, "Eavesdropping With Amazon Alexa," described how they made a malicious "skill" that could silently record users on startup, transcribe the recording, and eavesdrop on them indefinitely.

The Checkmarx research concluded with specific suggestions on how the issue could be fixed. Amazon told press it was fixed, saying: "We have put mitigations in place for detecting this type of skill behavior and reject or suppress those skills when we do."

The good news is, Amazon seems to be pretty fast at fixing bugs when they come up. Back in November of last year, security firm Armis disclosed that a Bluetooth vulnerability called BlueBorne, previously thought to affect only smartphones and tablets, also extended to Amazon Echo and Google Home.

"Armis notified the companies in question long enough for them to patch out the vulnerabilities, so updated devices should be safe," wrote Engadget. "But the firm noted in its release that each of the 15 million Amazon Echoes and 5 million Google Homes sold were potentially at risk from BlueBorne."

But wait, there's more. Last September, hackers from Zhejiang University in China found out that voice assistants Siri, Alexa and others could be manipulated by sending them commands in ultrasonic frequencies, high above what humans can hear. They called it DolphinAttack and said it could be leveraged to do all kinds of malicious things, like opening the smart lock on your front door or navigating to web pages with malware.
As we reported, the attack was as scary as it was surreal because it was effective on "Siri, Google Assistant, Samsung S Voice and Alexa, on devices like smartphones, iPads, MacBooks, Amazon Echo and even an Audi Q3: 16 devices and seven systems in total." The researchers even claimed they'd used it to successfully change the navigation on an Audi Q3.

DolphinAttack is still a concern, though it's effective only within 6 feet of the target. The Zhejiang researchers said it could be mitigated if device makers did one of two things: change their microphones to refuse or ignore signals above 20 kHz, or nix all audio commands at inaudible frequencies.

Could this be what happened with Danielle and her family? We may never find out. If there were a show called Unsolved Tech Mysteries, this would be a spooky episode. It could air right after the episode about that time Alexa started creepily and randomly laughing, as well as occasionally refusing to do as asked.

Amazon fixed the issue, telling press: "In rare circumstances, Alexa can mistakenly hear the phrase 'Alexa, laugh.' We are changing that phrase to be 'Alexa, can you laugh?' which is less likely to have false positives," and the company added that Alexa now confirms the request before cackling like a digital Babadook.

Until this gets sorted out (or not), maybe postpone the multidevice exorcism you've scheduled this weekend and check out Amazon's help page, where you can review settings and listen to (and delete) any recorded audio you find there.
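
For the curious, here is roughly what the second of those two DolphinAttack fixes (nixing commands that arrive at inaudible frequencies) might look like in software. This is only a sketch under loud assumptions, not the researchers' or any vendor's implementation: it assumes the device samples audio at 96 kHz, fast enough to see ultrasonic content at all, and the names `ultrasonic_share`, `should_reject` and the 10 percent threshold are made up for illustration. The researchers' other suggestion, changing the microphone, would have to happen in hardware.

```python
import cmath
import math

SAMPLE_RATE_HZ = 96_000      # assumption: fast enough to capture ultrasonic content
CUTOFF_HZ = 20_000           # the 20 kHz limit cited by the researchers
MAX_ULTRASONIC_SHARE = 0.1   # hypothetical threshold, chosen only for this sketch


def ultrasonic_share(samples, sample_rate=SAMPLE_RATE_HZ, cutoff=CUTOFF_HZ):
    """Return the fraction of signal energy at or above `cutoff` Hz.

    Uses a naive discrete Fourier transform, which is slow but needs
    nothing beyond the standard library.
    """
    n = len(samples)
    total = 0.0
    high = 0.0
    for k in range(1, n // 2 + 1):  # skip the DC bin at k = 0
        coeff = sum(
            s * cmath.exp(-2j * math.pi * k * i / n)
            for i, s in enumerate(samples)
        )
        energy = abs(coeff) ** 2
        total += energy
        if k * sample_rate / n >= cutoff:
            high += energy
    return high / total if total else 0.0


def should_reject(samples):
    """Flag audio whose energy sits mostly above the range of human hearing."""
    return ultrasonic_share(samples) > MAX_ULTRASONIC_SHARE


def tone(freq_hz, n=480, sample_rate=SAMPLE_RATE_HZ):
    """Generate a pure sine tone, used here only as test input."""
    return [math.sin(2 * math.pi * freq_hz * i / sample_rate) for i in range(n)]


if __name__ == "__main__":
    print("1 kHz tone rejected:", should_reject(tone(1_000)))
    print("25 kHz tone rejected:", should_reject(tone(25_000)))
```

A check like this only makes sense if the ultrasonic signal survives long enough to be measured, so treat it as an illustration of the idea rather than a defense you could bolt onto an Echo.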

"Echo woke up due to a word in background conversation sounding like "Alexa." Then, the subsequent conversation was heard as a "send message" request. At which point, Alexa said out loud "To whom?" At which point, the background conversation was interpreted as a name in the customers contact list. Alexa then asked out loud, "[contact name], right?" Alexa then interpreted background conversation as "right". As unlikely as this string of events is, we are evaluating options to make this case even less likely."