Not even IBM is sure where its quantum computer experiments will lead

But it will be fun to find out.

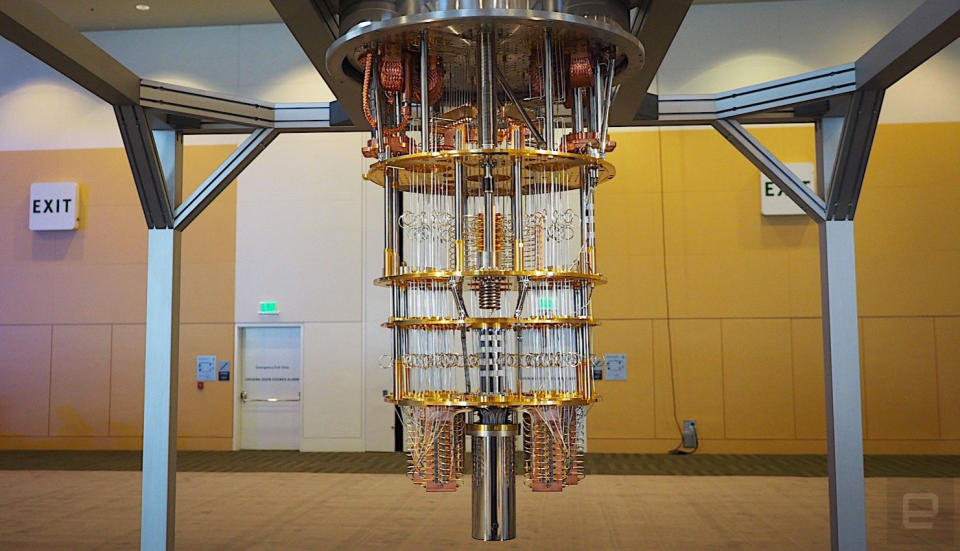

Despite the hype and hoopla surrounding the burgeoning field of quantum computing, the technology is still in its infancy. Just a few years ago, researchers were making headlines with rudimentary machines that housed fewer than a dozen qubits -- the quantum version of a classical computer's binary bit. At IBM's inaugural Index Developer Conference held in San Francisco this week, the company showed off its latest prototype: a quantum computing rig housing 50 qubits, one of the most advanced machines currently in existence.

Quantum computing -- with its ability to tackle certain calculations in parallel, at speeds far beyond those of conventional computers -- promises to revolutionize fields from chemistry and logistics to finance and physics. The thing is, while quantum computing is a technology for the world of tomorrow, it hasn't yet advanced far enough for anyone to know what that world will actually look like.

"People aren't going to just wake up in three or four years, and say, 'Oh okay, now I'm ready to use quantum, what do I have to learn,'" Bob Sutor, VP of IBM Q Strategy and Ecosystem at IBM Research, told Engadget.

These systems rely on the "spooky" properties of quantum physics, as Einstein put it, and their operation is radically different from how today's computers work. "What you're basically doing is you're replacing the notion of bits with something called qubits," Sutor said. "Ultimately when you measure a qubit it's zero or one, but before that there's a realm of freedom of what that can actually be. It's not zero and one at the same time or anything like this, it just takes on values from a much, much larger mathematical space."
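Sutor's "much, much larger mathematical space" can be made a little more concrete with a toy classical simulation. The sketch below assumes the standard textbook model, in which a single qubit's state is a pair of complex amplitudes for 0 and 1; it is an illustration of the physics, not IBM's software. Measuring the qubit always returns a plain 0 or 1, with probabilities given by the squared magnitudes of those amplitudes.

```python
import cmath
import math
import random

# Toy classical simulation of one qubit (textbook model, for illustration only).
# The state is a pair of complex amplitudes (alpha, beta) for 0 and 1,
# normalized so that |alpha|^2 + |beta|^2 == 1.

def normalize(alpha: complex, beta: complex) -> tuple[complex, complex]:
    norm = math.sqrt(abs(alpha) ** 2 + abs(beta) ** 2)
    return alpha / norm, beta / norm

def measurement_probabilities(alpha: complex, beta: complex) -> tuple[float, float]:
    """Born rule: probability of reading 0 or 1 when the qubit is measured."""
    return abs(alpha) ** 2, abs(beta) ** 2

def measure(alpha: complex, beta: complex, rng: random.Random) -> int:
    """Sample one measurement; the outcome is always a plain 0 or 1."""
    p0, _ = measurement_probabilities(alpha, beta)
    return 0 if rng.random() < p0 else 1

# Example state: unequal weights plus a relative phase between 0 and 1.
# The phase does not change the probabilities, but it is part of the state.
alpha, beta = normalize(1.0, 2.0 * cmath.exp(1j * math.pi / 3))
p0, p1 = measurement_probabilities(alpha, beta)
print(f"P(0) = {p0:.2f}, P(1) = {p1:.2f}")  # P(0) = 0.20, P(1) = 0.80

rng = random.Random(0)
samples = [measure(alpha, beta, rng) for _ in range(10_000)]
print(sum(samples) / len(samples))  # should be roughly 0.8
```

Until it is measured, the qubit carries two complex numbers, including a phase that a classical bit has no room for; the measurement itself only ever hands back one bit.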

"The basic logic gates [AND, OR, NOT, NOR, etc.], those gates are different for quantum," he continued. "The way the different qubits work together to get to a solution is completely different from the way the bits within your general memory works." Rather than tackling problems in sequence, as classical computers do, quantum rigs attempt to solve them in parallel. This enables quantum computers to solve certain problems, such as modeling complex molecules, far more efficiently.
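One way to see how quantum gates differ is to write them down the way physicists do: as small matrices that act on a qubit's pair of amplitudes, rather than as truth tables on 0s and 1s. The sketch below uses two standard textbook single-qubit gates, the quantum NOT (often called X) and the Hadamard gate (H); the choice of gates and the plain-Python representation are illustrative assumptions, not a description of IBM's own gate set or tools.

```python
import math

# Single-qubit gates as 2x2 matrices acting on the amplitude pair (alpha, beta),
# instead of Boolean truth tables acting on 0/1 values.
State = tuple[complex, complex]
Gate = tuple[tuple[complex, complex], tuple[complex, complex]]

X: Gate = ((0, 1), (1, 0))      # quantum NOT: swaps the amplitudes of 0 and 1
s = 1 / math.sqrt(2)
H: Gate = ((s, s), (s, -s))     # Hadamard: has no classical logic-gate counterpart

def apply(gate: Gate, state: State) -> State:
    """Multiply the 2x2 gate matrix by the state vector."""
    (a, b), (c, d) = gate
    alpha, beta = state
    return (a * alpha + b * beta, c * alpha + d * beta)

ZERO: State = (1, 0)

print(apply(X, ZERO))   # (0, 1): on a definite 0, NOT behaves like its classical cousin
plus = apply(H, ZERO)   # equal amplitudes, so a measurement would be a 50/50 coin flip
print(plus)
back = apply(H, plus)   # the two contributions to 1 cancel; the qubit returns to 0
print(back)             # approximately (1.0, 0.0), up to floating-point rounding
```

The last step is the interesting one: applying a "coin flip" twice does not leave a random bit, as it would classically, because amplitudes can cancel each other out. That kind of interference between qubit states is part of what makes the logic "completely different," in Sutor's words.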

This efficiency, however, is tempered by the system's frailty. Currently, a qubit's coherence time tops out at about 90 microseconds before its state decays. That is, if a qubit is designated as a 1, it'll only remain a 1 for roughly 0.00009 seconds. "After that all bets are off. You've got a certain amount of time in which to actually use this thing reliably," Sutor said. "Any computations you're going to do with a qubit have to come within that period."
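The arithmetic behind that 90-microsecond window, and what it means for a program, fits in a few lines of Python. Two things here are assumptions for illustration rather than figures from IBM: the 100-nanosecond time per operation is hypothetical, and the exponential-decay curve is a common simplified model of how a qubit loses its state, not a description of IBM's hardware.

```python
import math

# Figure quoted in the article: coherence tops out at about 90 microseconds.
COHERENCE_TIME_NS = 90_000  # 90 microseconds, expressed in nanoseconds

# Hypothetical value for illustration only, not an IBM specification.
ASSUMED_GATE_TIME_NS = 100

def ops_within_window(coherence_ns: int, gate_time_ns: int) -> int:
    """Back-to-back operations that fit before the coherence window closes."""
    return coherence_ns // gate_time_ns

def still_one_probability(elapsed_ns: float, coherence_ns: float) -> float:
    """Simplified exponential-decay model: chance a qubit set to 1 still reads 1."""
    return math.exp(-elapsed_ns / coherence_ns)

print(f"{COHERENCE_TIME_NS * 1e-9:.5f} s")  # 0.00009 s
print(ops_within_window(COHERENCE_TIME_NS, ASSUMED_GATE_TIME_NS))  # 900
print(round(still_one_probability(COHERENCE_TIME_NS, COHERENCE_TIME_NS), 3))  # 0.368
```

Under these assumptions, a program gets on the order of a few hundred operations before "all bets are off," which is why Sutor stresses that every computation has to fit inside that period.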

As such, quantum computers are highly sensitive to interference from temperature, microwaves, photons, even the electricity running the machine itself. Sutor said, "With heat you've got lots of electrons moving around, bumping into each other," which can lead to the qubit's decoherence. That's why these rigs have to be cooled to near absolute zero in order to operate.

"Outer space in the shade is between two and three degrees Kelvin," Sutor explained. "Outer space is much too warm to do these types of calculations." Instead, the lowest levels of a quantum computer rig, where the calculations themselves take place, are kept at a frosty 10 millikelvin -- a hundredth of a degree above absolute zero. So no, Sutor assured Engadget, we probably shouldn't expect desktop quantum computers running at room temperature to exist within the next few decades -- perhaps not even within our lifetimes.

Surprisingly, these systems are fairly energy efficient. Aside from the energy needed to cool the system sufficiently for operation (a process that takes around 36 hours), IBM's 50-qubit rig draws only 10 to 15 kilowatts of power -- roughly equivalent to 10 standard microwave ovens.

So now that IBM has developed a number of quantum computer systems ranging from 5 to 50 qubits, the next challenge is figuring out what to do with them. And that's where the company's Q network comes in. Last December, IBM announced that it's partnering with a number of Fortune 500 companies and research institutes -- including JPMorgan Chase, Samsung, Honda, Japan's Keio University, Oak Ridge National Lab and Oxford University -- to suss out potential practical applications for the technology.

Learning centers like Keio University also act as localized hubs. "We in IBM research, while we have a large team on this, we can't work with everybody in the world who wants to work on quantum computing," Sutor explained. These hubs, however, "can work with local companies, local colleges, whomever to do whatever. They would get their quantum computer power from us, but they would be at the front lines." The same is true for Oak Ridge National Lab, Oxford University and the University of Melbourne.

What's more, the company has also launched the IBM Q Experience, which allows anyone -- businesses, universities, even private citizens -- to write and submit their own quantum application or experiment to be run on the company's publicly available quantum computing rig. It's essentially a cloud service for quantum computations. So far, more than 75,000 people have taken advantage of the service, running more than 2.5 million calculations, which have resulted in more than two dozen published research papers on subjects ranging from quantum phase space measurement to homomorphic encryption.

But while the public's interest in this technology is piqued, there is a significant knowledge gap that must be overcome before we start to see quantum applications proliferate the way classical programs did in the 1970s and '80s. "Let's say in the future you're running investment house types of calculations [similar to the financial risk applications that JPMorgan is currently developing]," Sutor points out, "there are big questions as to what those would be, and what the algorithms would be. We're way too early to have anything determined like that, even to the extent of knowing how well [quantum computing] will be applicable in some of these other areas."

Writing the programs themselves is a challenge too. On a classical computer, turning source code into efficient machine instructions is as simple as running an optimizing compiler. Quantum computers don't yet have an equivalent. "What does it mean to optimize a quantum program knowing that this [is] completely different from the model that's in your phone?" he asked.

Another challenge that must be overcome is how to scale these machines. As Sutor points out, it's a simple enough task to add qubits to silicon chips, but every component added increases the amount of heat generated and the amount of energy needed to keep the system within its operational temperature boundaries.

So rather than simply packing in more and more qubits and setting off a quantum version of Moore's Law, Sutor believes that the next major step forward for this technology is quality over quantity. "Having 50 great qubits is much more powerful than having 2,000 lousy ones," he quipped. "You don't want something very noisy that you're going to have to fix." Instead, he argued, research should focus on improving the system's fidelity rather than increasing the qubit count.

But even as quantum technologies continue to improve, there will still be a place in the world of tomorrow for classical computers. "Don't think of quantum as a wholesale replacement for anything you do," Sutor warned. "The theory says that you could run any classical algorithm on a quantum rig but it would be so glacially slow because it's not designed to run those types of products."

Instead, Sutor prefers to think of the current crop of quantum technologies as an accelerator. "It does certain things very quickly, it does some things we don't know how to do well classically... and so it'll work hand in hand that way."

And if you're waiting for today's quantum computers to be able to compete with modern supercomputers anytime soon, you shouldn't hold your breath. "We need to get several orders of magnitude better than we are now to probably move into that period where we're solving the really super hard problems," he said.

"Just to be very clear," Sutor concluded, "this is a play for the 21st century... this is I think going to be one of the most critical computing technologies for the remainder of the century, and major breakthroughs will occur all along the line. Many of which we can't even imagine right now."