Microsoft's Cortana will eventually sound more like a real assistant

It's all thanks to new natural language technology.

Virtual assistants like Microsoft's Cortana, Amazon's Alexa and Google Assistant have finally made voice-controlled computing a reality. But talking to them still feels basic: shouting commands isn't exactly how you'd interact with another human being. At its Build developer conference today, Microsoft gave us a glimpse at how Cortana could improve on that.

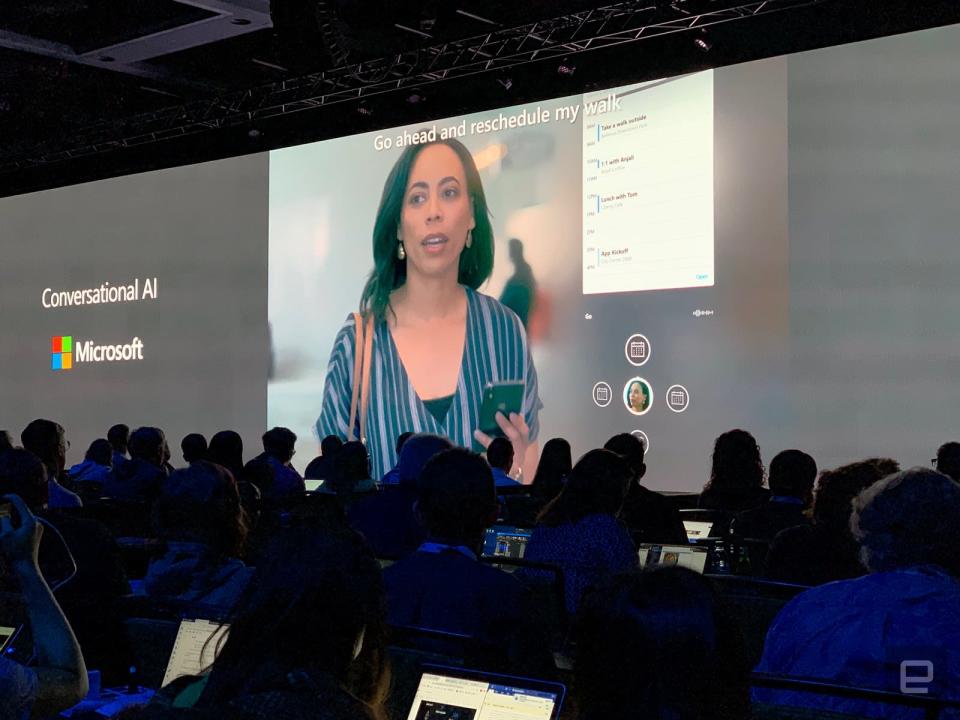

Using technology from Semantic Machines, a natural language startup Microsoft acquired last year, the company plans to let Cortana understand you in a more organic way. In the demonstration, an executive strolled into her office while holding an ongoing conversation with Cortana. She was able to get a rundown of her schedule, reschedule meetings while booking rooms, and find time to meet with colleagues, all without breaking the natural flow of her conversation with the virtual assistant. Cortana's responses sounded like an actual human's, complete with "umms" and other realistic verbal tics, and the executive never had to phrase things like a command.

According to Microsoft CEO Satya Nadella, Cortana's smarter conversations are a way to move beyond the brittle, command-based interactions we have with voice assistants today. He likens the shift to the open web, where any browser can view most experiences.

The whole demo interaction basically sounded like a phone conversation between two people. It's the sort of thing I've dreamed of since my childhood introduction to Knight Rider, but it remains to be seen if Microsoft can actually deliver on the potential of this demo.