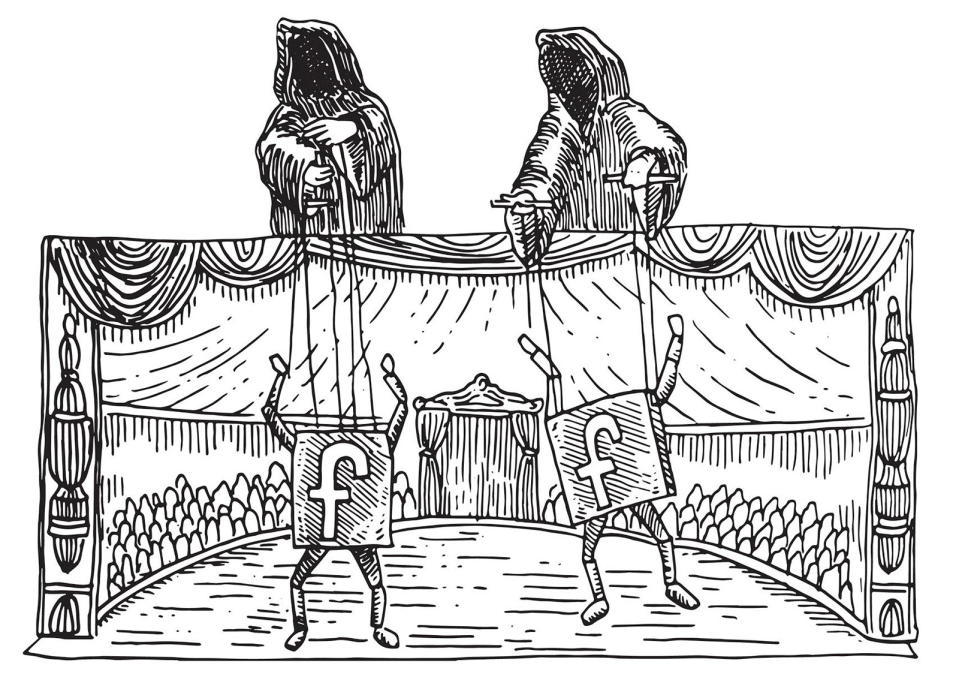

Facebook's widening role in electing Trump

Blaming fake accounts isn’t Facebook’s get-out-of-jail-free card, apparently.

Facebook admitted this week that a Russian propaganda mill used the social-media giant's ad service for a political operation around the 2016 campaign. This came out when sources told The Washington Post that congressional investigators probing Russia-Trump ties in the 2016 election had grilled Facebook behind closed doors on Wednesday. US lawmakers are furious.

Putin's propaganda farm bought around $150,000 in political ads from at least June 2015 through May 2017; Facebook was compelled to share the information and will be cooperating with ongoing investigations into Russian interference in the 2016 election. The troll farm in question is the Internet Research Agency, a well-funded, well-established, nimble, English-speaking, pro-Putin propaganda unit, and the ads are in all likelihood illegal.

What this week's revelations about Facebook mean is that Facebook ads are now undeniably a form of political campaigning, one with no checks and balances. And people have been taking advantage of this, big time.

The money spent (at least, the amount Facebook would admit to) allegedly paid for around 3,000 ads, with the potential to reach millions of people. Facebook isn't saying how many people actually saw them.

Facebook said it suspected that an additional 2,200 ads were also Russia-backed, but the company has avoided saying so definitively. It's arguable that the world's biggest surveillance platform has the data to connect the dots; it simply isn't doing so for this problem.

Facebook maintains that it is not culpable and that the buyers merely violated Facebook's "inauthentic accounts" rule. The Washington Post wrote:

Facebook discovered the Russian connection as part of an investigation that began this spring looking at purchasers of politically motivated ads, according to people familiar with the inquiry. It found that 3,300 ads had digital footprints that led to the Russian company.

Facebook teams then discovered 470 suspicious and likely fraudulent Facebook accounts and pages that it believes operated out of Russia, had links to the company and were involved in promoting the ads.

The claim that Facebook "discovered" this is disingenuous, as if it had no way of monitoring its own ad program, and a Russian troll farm blasting propaganda were akin to finding a coin purse someone left under a cushion. Whoa! Who knew, or had any way of knowing? Well, Facebook did.

Pretending otherwise is a fool's errand; no one could be that incompetent at running advertising and metrics while simultaneously holding the entire industry in a chokehold.

Blaming fake accounts

Facebook is working hard and fast to minimize everything about this.

Facebook's minimizing of the problem and pretending it's now fixed -- by deleting a few fake accounts -- is like minimizing gangrene. As if the accounts belonging to Putin's Internet Research Agency were just a tiny speck of bad actors, and now they're gone, so phew, rest easy, everyone.

The primary talking point is that the accounts have been removed because, by gosh, they violated Facebook's rules. They "misused the platform" by making fake accounts. Not by actively working against the company's alleged values around diversity. Not by making racists more racist, or by making fascists feel so validated that stabbing immigrants to death or mowing down anti-racism protesters with a car seems not just a good idea, but the right thing to do.

Facebook said, "We are exploring several new improvements to our systems for keeping inauthentic accounts and activity off our platform. For example, we are looking at how we can apply the techniques we developed for detecting fake accounts to better detect inauthentic Pages and the ads they may run."

Cool, so as long as the real accounts of people at Russian or any other propaganda factories are the ones running ads, it's all good?

In a way, you have to wonder why Facebook can't just admit how toxic, monstrous and effective a tool for manipulating people its advertising really is. Or that, thanks to all its talented engineers and skill at navigating big-picture trends to rake in billions, the company has a hell of a lot to do with why we're now a nation at war against itself -- neo-Nazis murdering people in the streets, immigrant children set for deportation by the hundreds of thousands, our country on the brink of nuclear war and more, so much more.

Let's be honest: The trouble is that Facebook's ads are really effective. Those Facebook "emotional contagion" experiments to make people happy or sad and then to tell advertisers how to use it to make you do stuff, well, that was real -- and it worked.

Foreign and domestic Trump campaigns

Who else, besides Russian state operatives, was running ads about the same topics, at the exact same time? The Trump campaign.

Russian efforts to influence the election were tied to Facebook ad buys, and so were the Trump campaign's, at the same time, with ad content covering the same issues in parallel -- race, immigration, LGBT rights and more. Facebook told The Washington Post that the Russian ads "were directed at people on Facebook who had expressed interest in subjects explored on those pages, such as LGBT community, black social issues, the Second Amendment and immigration."

The Trump campaign's Facebook-ad strategy -- divisive race-fueled messaging on the social network -- was so highly successful that The New York Review of Books concluded, "Donald Trump is our first Facebook president."

Domestic Trump operatives started by purchasing $2 million in Facebook ads, eventually ramping up to $70 million a month, most of it spent on Facebook. The New York Review of Books quotes Trump digital-team member Gary Coby telling Wired that "on any given day ... the campaign was running 40,000 to 50,000 variants of its ads. ... On the day of the third presidential debate in October, the team ran 175,000 variations."

NYRB detailed:

He then uploaded all known Trump supporters into the Facebook advertising platform and, using a Facebook tool called Custom Audiences from Customer Lists, matched actual supporters with their virtual doppelgangers and then, using another Facebook tool, parsed them by race, ethnicity, gender, location, and other identities and affinities.

From there he used Facebook's Lookalike Audiences tool to find people with interests and qualities similar to those of his original cohort and developed ads based on those characteristics, which he tested using Facebook's Brand Lift surveys.

Trump's team no doubt saw in Facebook's ad platform the same things that Putin's propaganda mill had already learned to love. It used Facebook's ad tools, honed for targeting those most vulnerable to suggestion, to influence people ripening under Facebook's own rules, which coddle Holocaust denial and anti-immigrant and anti-Muslim sentiment. "They understood that some numbers matter more than others," NYRB explained. "In this case, the number of angry, largely rural, disenfranchised potential Trump voters -- and that Facebook, especially, offered effective methods for pursuing and capturing them."

In light of this week's revelations, Trump's Facebook ads deserve a closer look. Take the one meant to stir anger about the mistreatment of US veterans, which depicted Russian veterans instead.

You have to wonder how a mistake like that gets made. Was it an ad produced by a Russian shop drawing on its own pool of files, with stock images filed by topic and labeled by campaign? And did even more of the misapplied images in Trump's Facebook ads come from an organization that used the same images for the same topics?

It's even more urgent to know whether the same ad was run by both the foreign and the domestic Trump Facebook ad campaigns, because that would surely be something.

Facebook could tell us more about what was in those ads and when, such as whether the domestic Trump campaign and the Russian Trump campaign coordinated their messaging, but it won't. It could also tell us who was targeted with what, where and when, but it hasn't.

The "tip of the iceberg"

On Thursday, Virginia Democratic Sen. Mark Warner told reporters that Facebook's disclosure was just the "tip of the iceberg." Warner reminded everyone that when Facebook was first called out on all this in late 2016 during the election, the social-media giant told us a very different story. Speaking Thursday at the Intelligence & National Security Summit in Washington, Warner said: "The first reaction from Facebook was: 'Well you're crazy, there's nothing going on.' Well, we find yesterday there actually was something going on."

In this week's PR spin coming from Facebook, we're hearing a lot about fake accounts and false personas, and things that happen (parenthetically) outside Facebook. We're given the impression that Facebook has no accountability or role in this other than racing to the rescue to protect its users. The same users it sells out to its real customers: its advertisers. Or, more specifically, anyone with enough money to rank high in its class system of ad buyers.

"It's not my fault" -- aka hiding behind "we got rid of the fake accounts" -- doesn't cut it anymore, and it actually never did. That's what we keep hearing neo-Nazis say to the press when they march in American streets. They just showed up; we can't control who joins us; he acted alone; they were fake accounts; our ads don't really swing opinion into action.

These are things America's neo-Nazis say when members of their groups attack and kill innocent people in the name of racism, in the name of what we know was in those foreign and domestic Facebook ads served up to the very people who would be most receptive to them. People who, thanks to Facebook's fragmenting and dangerous "filter bubbles" and coddling of Holocaust denial, are retreating from meaningful debate and hunkering down into ideological bunkers.

Facebook has a role in this, and it's ugly, even as the company dances around it and pretends there's no connection, or that what little it will admit to is somehow not a big deal. It's a huge deal.

How can Facebook truly combat this? Auditing and legitimate transparency would be a starting point. So, you know, don't hold your breath.

In an "information operations update" Thursday, Facebook Chief Security Officer Alex Stamos wrote at length about fake accounts, trying harder to tie its advertising-propaganda problem to an abuse problem that is only peripherally related.

Stamos wrote: "We have shared our findings with US authorities investigating these issues, and we will continue to work with them as necessary."

As necessary, indeed.

We have contacted Facebook for comment and will update this article in the event of a response.