neuralnetwork

Latest

AI learns to solve a Rubik's Cube in 1.2 seconds

Researchers at the University of California, Irvine have created an artificial intelligence system that can solve a Rubik's Cube in an average of 1.2 seconds and about 20 moves. That's about two seconds faster than the current human world record of 3.47 seconds, and people who can finish the puzzle quickly usually need about 50 moves.

AI-guided material changes could lead to diamond CPUs

Scientists know that you can dramatically alter a crystalline material's properties by applying a bit of strain to it, but finding the right strain is another matter when there are virtually limitless possibilities. There may be a straightforward solution, though: let AI do the heavy lifting. An international team of researchers has devised a way for machine learning to find strains that will achieve the best results. Their neural network algorithm predicts how the direction and degree of strain will affect a key property governing the efficiency of semiconductors, which could make the materials far more efficient without requiring educated guesses from humans.

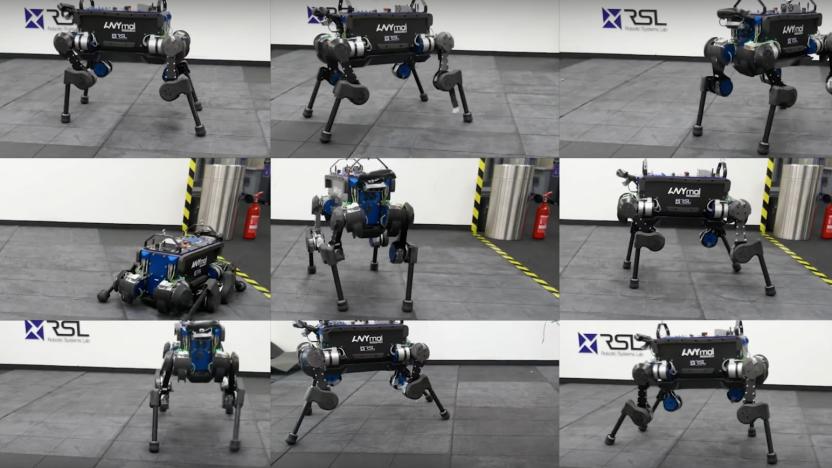

Researchers train robot dog to pick itself back up after a fall

Researchers have taught a robot dog to overcome one of the toughest challenges for a four-legged droid: how to get back up after a fall. In a paper published in the Science Robotics journal, its Swiss creators describe how they trained a neural network in a computer simulation to make the ultimate guard bot. Then they went and kicked the canine around in real life (because that's what researchers do) to see if their technique worked. It did: the robot's digital training regimen made it 25 percent faster, more resilient, and able to adapt to any given environment, according to the team.

AI reveals hidden objects in the dark

You might not see most objects in near-total darkness, but AI can. MIT scientists have developed a technique that uses a deep neural network to spot objects in extremely low light. The team trained the network to look for transparent patterns in dark images by feeding it 10,000 purposefully dark, grainy and out-of-focus pictures as well as the patterns those pictures are supposed to represent. The strategy not only gave the neural network an idea of what to expect, but highlighted hidden transparent objects by producing ripples in what little light was present.

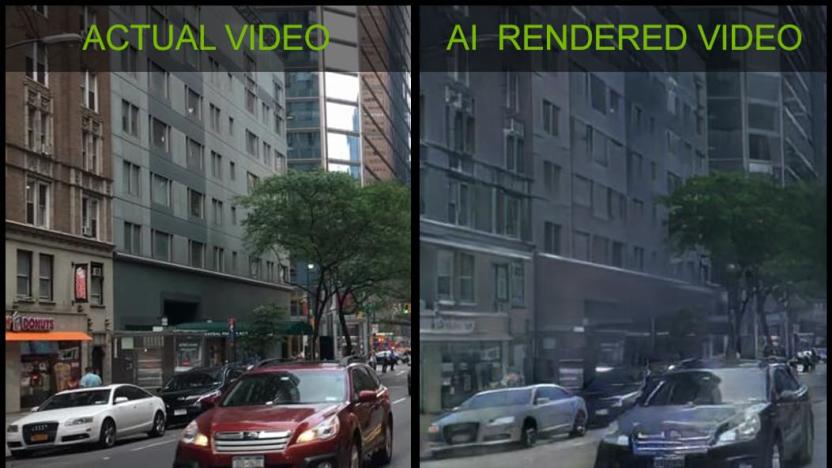

NVIDIA's new AI turns videos of the real world into virtual landscapes

Attendees of this year's NeurIPS AI conference in Montreal can spend a few moments driving through a virtual city, courtesy of NVIDIA. While that normally wouldn't be much to get worked up over, the simulation is fascinating because of what made it possible. With the help of some clever machine learning techniques and a handy supercomputer, NVIDIA has cooked up a way for AI to chew on existing videos and use the objects and scenery found within them to build interactive environments.

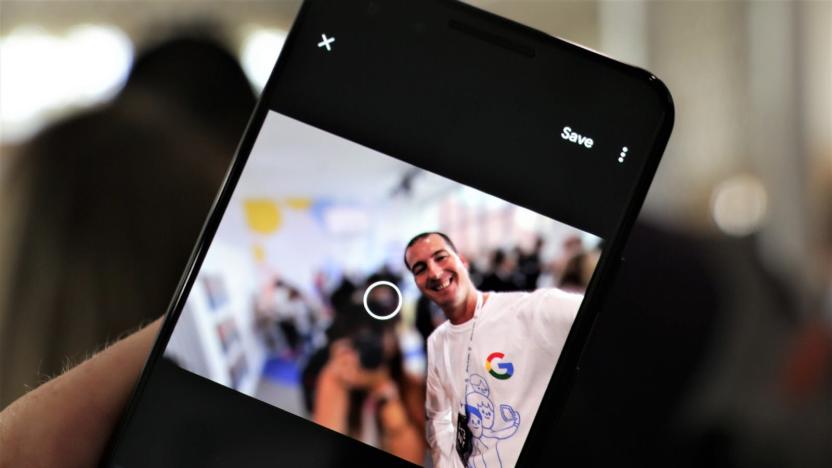

Google explains the Pixel 3's improved AI portraits

Google's Pixel 3 takes more accurate portrait photos than its predecessor did when new, which is no mean feat when you realize that the upgrade comes solely through software. But just what is Google doing, exactly? The company is happy to explain. It just posted a look into the Pixel 3's (or really, the Google Camera app's) Portrait Mode that illustrates how its AI changes produce portraits with fewer visual glitches.

Microsoft’s new experimental app is all about imitating emojis

Microsoft has released a new app that aims to demonstrate how its Windows Machine Learning APIs can be used to build apps and "make machine learning fun and approachable." Emoji8 is a UWP app that uses machine learning to determine how well you can imitate emojis. As you make your best efforts to imitate a random selection of emojis in front of your webcam, Emoji8 will evaluate your attempts locally using the FER+ Emotion Recognition model, a neural network for recognizing emotion in faces. You'll then be able to tweet a GIF of your top-scoring images.

Intel's 'neural network on a stick' brings AI training to you

Ahead of its first AI developers conference in Beijing, Intel has announced it's making the process of imparting intelligence into smart home gadgets and other network edge devices faster and easier thanks to the company's latest invention: the Neural Compute Stick 2.

Researchers train AI to spot Alzheimer’s disease ahead of diagnosis

While Alzheimer's disease affects tens of millions of people worldwide, it remains difficult to detect early on. But researchers exploring whether AI can play a role in detecting Alzheimer's in patients are finding that it may be a valuable tool for helping spot the disease. Researchers in California recently published a study in the journal Radiology, and they demonstrated that, once trained, a neural network was able to accurately diagnose Alzheimer's disease in a small number of patients, and it did so based on brain scans taken years before those patients were actually diagnosed by physicians.

Google’s Piano Genie lets anyone improvise classical music

Google has taken the idea of Rock Band and Guitar Hero and pushed it one step further, creating an intelligent controller that lets you improvise on the piano and makes it sound like you actually know what you're doing, no matter how unskilled you are. The controller is called Piano Genie, and it comes from Google's Magenta research project. Powered by a neural network trained on classical piano music, Piano Genie translates what you tap out on eight buttons into music that uses all 88 piano keys.

Deezer's AI mood detection could lead to smarter song playlists

Astute listeners know that you can't gauge a song's mood solely through the instrumentation or the lyrics, but that's often what AI has been asked to do -- and that's not much help if you're trying to sift through millions of songs to find something melancholic or upbeat. Thankfully, Deezer's researchers have found a way to make that AI consider the totality of a song before passing judgment. They've developed a deep learning system that gauges the emotion and intensity of a track by relying on a wide variety of data, not just a single factor like the lyrics.

AI can identify objects based on verbal descriptions

Modern speech recognition is clunky and often requires massive amounts of annotations and transcriptions to help understand what you're referencing. There might, however, be a more natural way: teaching the algorithms to recognize things much like you would a child. Scientists have devised a machine learning system that can identify objects in a scene based on their description. Point out a blue shirt in an image, for example, and it can highlight the clothing without any transcriptions involved.

Google's Trafalgar Square lion uses AI to generate crowdsourced poem

In London's Trafalgar Square, four lions sit at the base of Nelson's Column. But starting today, there will be a fifth. Google Arts & Culture and designer Es Devlin have created a public sculpture for the London Design Festival. It's a lion that over the course of the festival will generate a collective poem by using input from the public and artificial intelligence.

Google brings its AI song recognition to Sound Search

Google's Now Playing song recognition was clever when it premiered late in 2017, but it had its limits. When it launched on the Pixel 2, for instance, its on-device database could only recognize a relatively small number of songs. Now, however, that same technology is available in the cloud through Sound Search -- and it's considerably more useful if you're tracking down an obscure title. The system still uses a neural network to develop "fingerprints" identifying each song, and uses a combination of algorithms to both whittle down the list of candidates and study those results for a match. However, the scale and quality of that song matching are now much stronger.

Google offers AI toolkit to report child sex abuse images

Numerous organizations have taken on the noble task of reporting pedophilic images, but it's both technically difficult and emotionally challenging to review vast amounts of the horrific content. Google is promising to make this process easier. It's launching an AI toolkit that helps organizations review vast amounts of child sex abuse material quickly while minimizing the need for human inspections. Deep neural networks scan images for abusive content and prioritize the most likely candidates for review. This promises to both dramatically increase the number of responses (700 percent more than before) and reduce the number of people who have to look at the imagery.

MIT's AI can tell if you're depressed from the way you talk

When it comes to identifying depression, doctors will traditionally ask patients specific questions about mood, mental illness, lifestyle and personal history, and use these answers to make a diagnosis. Now, researchers at MIT have created a model that can detect depression in people without needing responses to these specific questions, based instead on their natural conversational and writing style.

Google and Harvard use AI to predict earthquake aftershocks

Scientists from Harvard and Google have devised a method to predict where earthquake aftershocks may occur, using a trained neural network. The researchers fed the network historical seismological data, some 131,000 mainshock-aftershock pairs all told, and it predicted where "more than 30,000 mainshock-aftershock pairs" from an independent dataset occurred more accurately than previous approaches such as the Coulomb forecast method. That's because the AI method takes multiple aspects of stress shifts into account versus Coulomb's singular approach.

Facebook and NYU researchers aim to use AI to speed up MRI scans

Facebook is teaming up with researchers at the NYU School of Medicine's Department of Radiology in order to make MRIs more accessible. Scientists with the Facebook Artificial Intelligence Research (FAIR) group and NYU note that getting an MRI scan can take up a fair amount of time, sometimes over an hour, and for people who have a hard time lying still for that long -- including children, those who are claustrophobic or individuals with conditions that make it painful to do so -- the length of a typical MRI scan poses a problem. So the researchers are turning to AI.

MIT can secure cloud-based AI without slowing it down

It's rather important to secure cloud-based AI systems, especially when they use sensitive data like photos or medical records. To date, though, that hasn't been very practical -- encrypting the data can render machine learning systems so slow as to be virtually unusable. MIT thankfully has a solution in the form of GAZELLE, a technology that promises to encrypt convolutional neural networks without a dramatic slowdown. The key was to meld two existing techniques in a way that avoids the usual bottlenecks those methods create.

AI-powered instant camera turns photos into crude cartoons

Most cameras are designed to capture scenes as faithfully as possible, but don't tell that to Dan Macnish. He recently built an instant camera, Draw This, that uses a neural network to translate photos into the sort of crude cartoons you would put on your school notebooks. Macnish mapped the millions of doodles from Google's Quick, Draw! game data set to the categories the image processor can recognize. After that, it was largely a matter of assembling a Raspberry Pi-powered camera that used this know-how to produce its 'hand-drawn' pictures with a thermal printer.