Google researchers trained AI with your Mannequin Challenge videos

They used 2,000 YouTube clips to improve AI's depth perception.

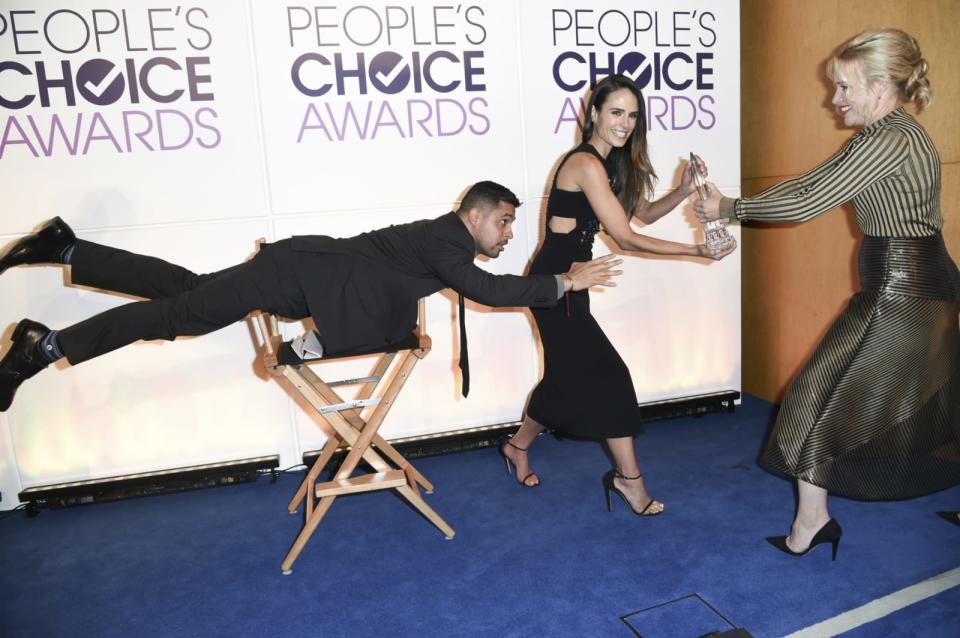

Way back in 2016, thousands of people participated in the Mannequin Challenge. As you might remember, it was an internet phenomenon in which people held random poses while someone with a camera walked around them. Those videos were shared on YouTube and many earned millions of views. Now, a team from Google AI is using the videos to train neural networks. The goal is to help AI better predict depth in videos where the camera is moving.

Humans can judge depth even when both the camera and the objects in a scene are moving, but AI isn't quite there yet. Generating enough clips to train AI would be difficult, and gathering enough people of different ages and genders creates an added challenge. That's why the team turned to YouTube. Because the people in Mannequin Challenge videos stand frozen while the camera moves around them, each scene is effectively static, which makes it possible to work out depth from the changing viewpoint. If you participated in the Mannequin Challenge, there's a chance your video is one of the 2,000 the researchers scraped together to build their dataset. They plan to share that data with the larger scientific community.

To train the neural network, the researchers converted the clips into 2D images, estimated the camera pose and created depth maps. The AI was then able to predict the depth of moving objects in videos with higher accuracy than previously possible. With this enhanced ability, neural networks could help self-driving cars and robots better navigate unfamiliar areas.
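
For a rough sense of why a moving camera and a motionless scene can yield a depth map, here is a minimal Python sketch of the textbook parallax relationship. It is not the researchers' pipeline. It assumes the camera simply shifts sideways by a known distance between two frames and that the focal length is known in pixels. The function name `depth_from_disparity` and all of the numbers are hypothetical, chosen only for illustration.

```python
import math

def depth_from_disparity(disparity_px, focal_px, baseline_m):
    """Estimate per-pixel depth (meters) from how far each pixel shifts
    between two frames of a static scene.

    Assumption: the camera moved sideways by baseline_m between the frames,
    so depth = focal_px * baseline_m / disparity (the standard stereo relation).
    Pixels with no measurable shift are treated as infinitely far away.
    """
    return [
        [focal_px * baseline_m / d if d > 0 else math.inf for d in row]
        for row in disparity_px
    ]

# Hypothetical 2x2 "image": how many pixels each point shifted between frames.
disparity_map = [
    [50.0, 25.0],
    [10.0, 0.0],
]

# Hypothetical camera: 1000-pixel focal length, 10 cm of sideways movement.
depth_map = depth_from_disparity(disparity_map, focal_px=1000.0, baseline_m=0.1)

for row in depth_map:
    print(row)
```

Points that shift a lot between frames come out as close to the camera, and points that barely shift come out as far away. The relationship only holds when nothing in the scene moves on its own, which is why footage of people holding still is useful as training data.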

The fact that the researchers used videos without notifying the people in them could raise some privacy concerns. As Technology Review points out, it's not uncommon for researchers to glean publicly available data from sources like Twitter and Flickr, and as neural networks grow dependent on larger datasets, the practice will likely continue. That might make you think twice about which YouTube fads you join.