computervision


Microsoft's AI app for the blind helps you explore photos with touch

Microsoft's computer vision app for blind and low-vision users, Seeing AI, just became more useful for those moments when you're less interested in navigating the world than in learning about what's on your phone. The company has updated the iOS app with an option to explore photos by touching them. Tap your finger on an image and you'll hear a description of both the objects in that scene and their spatial relationships. You can get descriptions for photos taken through Seeing AI's Scene channel, and they're also available for pictures in your camera roll and in other apps (through options menus).
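Microsoft hasn't detailed how the touch feature works internally, but the basic idea can be sketched as a hit test. This assumes an object detector has already returned labeled bounding boxes for the photo; the code finds the box under your finger and describes where the other objects sit relative to it. `DetectedObject` and `describe_touch` are hypothetical names, and left/right is the only spatial relation handled.

```python
from dataclasses import dataclass


@dataclass
class DetectedObject:
    """Hypothetical detector output: a label plus a box in normalized (0-1) coordinates."""
    label: str
    left: float
    top: float
    right: float
    bottom: float

    def contains(self, x: float, y: float) -> bool:
        return self.left <= x <= self.right and self.top <= y <= self.bottom

    def area(self) -> float:
        return (self.right - self.left) * (self.bottom - self.top)

    def center_x(self) -> float:
        return (self.left + self.right) / 2


def describe_touch(objects: list[DetectedObject], x: float, y: float) -> str:
    """Describe the object under the finger and where the others are relative to it."""
    hits = [o for o in objects if o.contains(x, y)]
    if not hits:
        return "Nothing here"
    # Prefer the smallest box under the finger, e.g. a cup rather than the table beneath it.
    touched = min(hits, key=lambda o: o.area())
    parts = [touched.label]
    for other in objects:
        if other is touched:
            continue
        side = "to the right of" if other.center_x() > touched.center_x() else "to the left of"
        parts.append(f"{other.label} {side} it")
    return "; ".join(parts)
```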

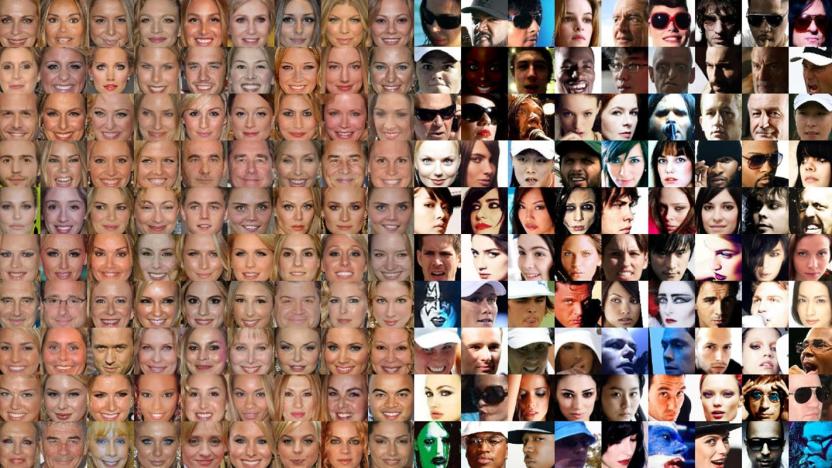

MIT hopes to automatically 'de-bias' face detection AI

There have been efforts to fight racist biases in face detection systems through better training data, but that usually involves a human manually supplying the new material. MIT's CSAIL might have a better approach. It's developing an algorithm that automatically 'de-biases' the training material for face detection AI, ensuring that it accommodates a wider range of humans. The code can scan a data set, understand the set's biases, and promptly resample it to ensure better representation for people regardless of skin color.
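CSAIL's system learns the hidden structure of the training data on its own; the sketch below swaps that for something much simpler to show the resampling idea. It assumes each face image already has one numeric feature (a hypothetical stand-in for a learned attribute), buckets the images by that feature, and samples each image with probability inversely proportional to how crowded its bucket is, so rare kinds of faces turn up more often. The function names are hypothetical.

```python
import random
from collections import Counter


def debias_weights(features: list[float], num_bins: int = 10) -> list[float]:
    """Hypothetical helper: sampling weights inversely proportional to bin frequency."""
    lo, hi = min(features), max(features)
    width = (hi - lo) / num_bins or 1.0  # avoid zero width when all features are equal
    # Assign each sample to a histogram bin; the maximum value goes in the last bin.
    bins = [min(int((f - lo) / width), num_bins - 1) for f in features]
    counts = Counter(bins)
    n = len(features)
    # A sample in a bin holding 90% of the data gets 1/0.9; one in a 10% bin gets 1/0.1.
    raw = [1.0 / (counts[b] / n) for b in bins]
    total = sum(raw)
    return [w / total for w in raw]


def resample(samples: list, features: list[float], k: int, seed: int = 0) -> list:
    """Draw k samples with replacement using the de-biasing weights."""
    rng = random.Random(seed)
    return rng.choices(samples, weights=debias_weights(features), k=k)
```

With nine common samples and one rare one, the rare sample receives half of the total sampling weight, so every occupied bucket ends up equally represented. A real system would use many features and smooth the weights so a handful of outliers don't dominate.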

Intel RealSense tracking camera helps robots navigate without GPS

Intel is back with another RealSense camera, but this one has a slight twist: it's meant to give machines a sense of place. The lengthily titled RealSense Tracking Camera T265 uses inside-out tracking (that is, it doesn't need outside sensors) to help localize robots and other autonomous machines, particularly in situations where GPS is unreliable or non-existent. A farming robot, for instance, could both map a field and adapt on the fly to obstacles like buildings and rocks.

Intel-powered camera uses AI to protect endangered African wildlife

Technology is already in use to help stop poachers. However, it's frequently limited to monitoring poachers once they're already in shooting range, or after the fact. The non-profit group Resolve vows to do better -- it recently developed a new version of its TrailGuard camera that uses AI to spot poachers in Africa before they can threaten an endangered species. It uses an Intel-made computer vision processor (the Movidius Myriad 2) that can detect animals, humans and vehicles in real time, giving park rangers a chance to intercept poachers before it's too late.
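Resolve hasn't published TrailGuard's software, but the on-camera decision described above can be sketched as a filter over detector output. This assumes the vision model has already labeled each frame's detections as "animal", "human" or "vehicle" with a confidence score; the 0.6 threshold, the alert policy and the function names are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Detection:
    label: str         # "animal", "human" or "vehicle"
    confidence: float  # detector score between 0 and 1


# Hypothetical policy: animals are expected in a park; people and vehicles may be poachers.
ALERT_LABELS = {"human", "vehicle"}


def should_alert(detections: list[Detection], threshold: float = 0.6) -> bool:
    """True if any confident detection in a frame belongs to an alert class."""
    return any(d.label in ALERT_LABELS and d.confidence >= threshold for d in detections)


def frames_to_send(frames: list[list[Detection]], threshold: float = 0.6) -> list[int]:
    """Indices of frames worth forwarding to rangers; the rest stay on the camera."""
    return [i for i, dets in enumerate(frames) if should_alert(dets, threshold)]
```

Filtering on the device means only frames that might show poachers need to be transmitted, which would matter for a remote, battery-powered camera; that rationale is an assumption here, not a detail from Resolve.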

Court tosses lawsuit over Google Photos' facial recognition

Google Photos users nervous about facial recognition on the service aren't going to be very happy. A Chicago judge has granted Google's motion to dismiss a lawsuit accusing the company of violating Illinois' Biometric Information Privacy Act by gathering biometric data from photos without permission. According to the judge, the plaintiffs couldn't demonstrate that they'd suffered "concrete injuries" from the facial recognition system.

Stanford AI found nearly every solar panel in the US

It would be impractical to count the number of solar panels in the US by hand, and that makes it difficult to gauge just how far the technology has really spread. Stanford researchers have a solution: make AI do the heavy lifting. They've crafted a deep learning system, DeepSolar, that mapped every visible solar panel in the US -- about 1.47 million of them, if you're wondering. The neural network-based approach turns satellite imagery into tiles, classifies every pixel within those tiles, and combines those pixels to determine if there are solar panels in a given area, whether they're large solar farms or individual rooftop installations.
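The tile-classify-combine pipeline can be sketched on a small grid. DeepSolar itself runs a trained neural network on satellite imagery; here that network is replaced by a hypothetical stand-in in which each "pixel" already holds a panel probability, so classification is just a threshold. The tile size, threshold and `min_panel_pixels` cutoff are illustrative assumptions.

```python
def split_into_tiles(image: list[list[float]], tile_size: int):
    """Yield (tile_row, tile_col, tile) for non-overlapping square tiles of a 2D grid."""
    height, width = len(image), len(image[0])
    for r in range(0, height, tile_size):
        for c in range(0, width, tile_size):
            tile = [row[c:c + tile_size] for row in image[r:r + tile_size]]
            yield r // tile_size, c // tile_size, tile


def classify_pixels(tile: list[list[float]], threshold: float = 0.5) -> list[list[bool]]:
    # Stand-in for the neural network: each value is treated as a panel probability.
    return [[p >= threshold for p in row] for row in tile]


def find_solar_tiles(image: list[list[float]], tile_size: int = 2,
                     min_panel_pixels: int = 2) -> dict[tuple[int, int], int]:
    """Map (tile_row, tile_col) to panel-pixel count for tiles that likely contain panels."""
    found = {}
    for tr, tc, tile in split_into_tiles(image, tile_size):
        mask = classify_pixels(tile)
        count = sum(sum(row) for row in mask)  # True counts as 1
        if count >= min_panel_pixels:
            found[(tr, tc)] = count
    return found
```

On a 4x4 grid whose top-left and bottom-right corners hold high scores, `find_solar_tiles` flags exactly those two tiles; summing the counts gives a rough panel area for the region.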

AI reveals hidden objects in the dark

You might not see most objects in near-total darkness, but AI can. MIT scientists have developed a technique that uses a deep neural network to spot objects in extremely low light. The team trained the network to look for transparent patterns in dark images by feeding it 10,000 purposefully dark, grainy and out-of-focus pictures as well as the patterns those pictures are supposed to represent. The strategy not only gave the neural network an idea of what to expect, but also let it highlight hidden transparent objects through the ripples they produced in what little light was present.

Amazon tests checkout-free shopping for larger stores

Amazon's checkout-free Go stores might hint at the future of retail, but they're small locations that aren't much good if you need more than lunch or a bag of chips. You might see bigger ones soon, though. Wall Street Journal sources say Amazon is testing a version of its computer vision-based shopping technology for larger stores. It's not certain how close the company might be to trying this in the real world (it's currently running in a Seattle space "formatted like a big store"). It won't shock you to hear where the tech might go if it's successful, however.

AR technology helps the blind navigate by making objects 'talk'

If you're blind, finding your way through a new area can sometimes be challenging. In the future, though, you might just need to wear a headset. Caltech researchers have developed a Cognitive Augmented Reality Assistant (CARA) that uses Microsoft's HoloLens to make objects "talk" to you. CARA uses computer vision to identify objects in a given space and say their names -- thanks to spatialized sound, you'll know if there's a chair in front of you or a door to your right. The closer you are, the higher the pitch of an object's voice.
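The distance-to-pitch rule is simple enough to sketch. CARA itself relies on HoloLens spatial audio; this stand-in uses plain constant-power stereo panning for direction and a linear mapping from distance to pitch. The frequency range, the 5-meter cutoff and the function names are hypothetical choices, not CARA's.

```python
import math


def voice_pitch_hz(distance_m: float, near_hz: float = 880.0, far_hz: float = 220.0,
                   max_distance_m: float = 5.0) -> float:
    """Map distance to pitch: the closer the object, the higher its voice."""
    d = min(max(distance_m, 0.0), max_distance_m)  # clamp to [0, max_distance_m]
    # Linear interpolation from near_hz at 0 m down to far_hz at max_distance_m.
    return near_hz + (far_hz - near_hz) * d / max_distance_m


def stereo_gains(azimuth_deg: float) -> tuple[float, float]:
    """Constant-power pan: -90 is hard left, 0 is straight ahead, +90 is hard right."""
    a = min(max(azimuth_deg, -90.0), 90.0)
    angle = (a + 90.0) / 180.0 * (math.pi / 2)  # map [-90, 90] degrees to [0, pi/2]
    return math.cos(angle), math.sin(angle)      # (left gain, right gain)
```

Constant-power panning keeps left² + right² equal to 1, so a door to your right sounds as loud as a chair in front of you at the same distance; only the balance between ears changes.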

Mercedes’ AI research could mean faster package delivery

It looked and worked like a Ferris wheel and it seemed like a good idea. Packages would sit in the basket and rotate for easy access when about to be delivered. Then a gallon of milk got caught in a crossbeam and it exploded all over the back of the van. That system was scrapped.

Arlo cameras will soon detect animals, vehicles and packages

Arlo's cameras are getting more AI-powered features through the Arlo Smart subscription service, which will soon detect packages, animals and vehicles. The features arrive later this year and will work with all Arlo cameras.

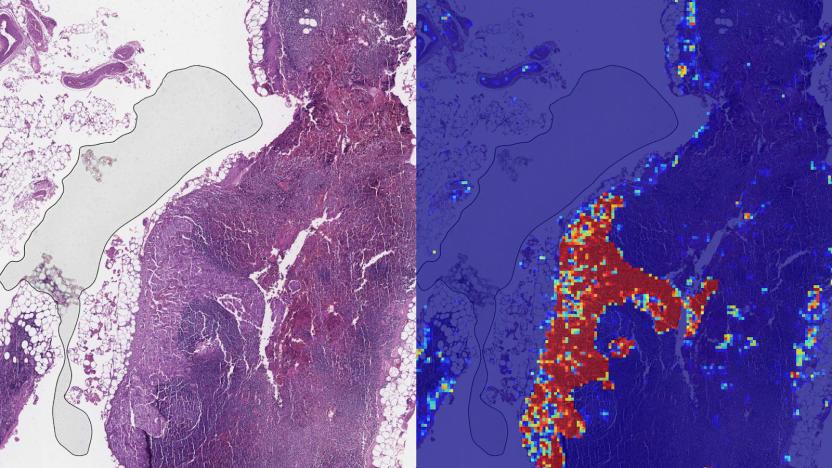

Google AI can spot advanced breast cancer more effectively than humans

Google has delivered further evidence that AI could become a valuable ally in detecting cancer. The company's researchers have developed a deep learning tool that can spot metastatic (advanced) breast cancer with greater accuracy than pathologists when looking at slides. The team trained its algorithm (Lymph Node Assistant, aka LYNA) to recognize the characteristics of tumors using two sets of pathological slides, giving it the ability to spot metastasis in a wide variety of conditions. The result was an AI system that could tell the difference between cancer and non-cancer slides 99 percent of the time, even when looking for extremely small metastases that humans might miss.

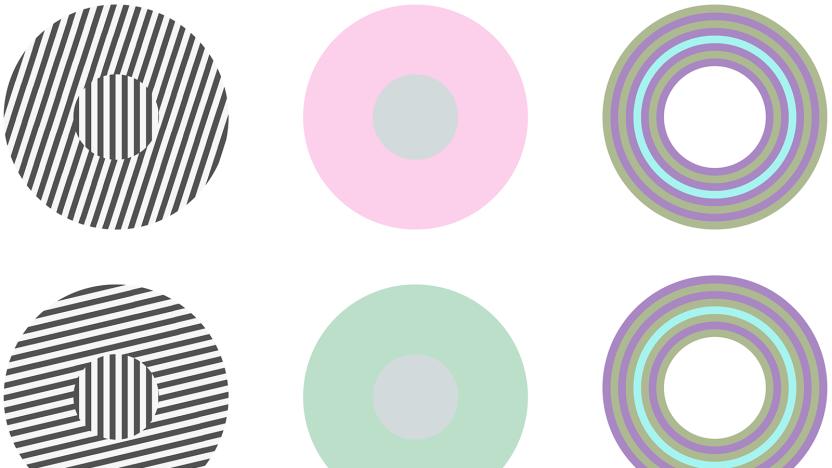

Researchers develop a computer that's fooled by optical illusions

Say you're staring at the image of a small circle in the center of a larger circle: the larger one looks green, but the smaller one appears gray. Your friend, however, looks at the same image and sees the smaller circle as green, too. So is it green or gray? It can be maddening and fun to try to decipher what is real and what is not. In this instance, your brain is processing a type of optical illusion, a phenomenon where your visual perception is shaped by the surrounding context of what you are looking at.

AI can identify objects based on verbal descriptions

Modern speech recognition is clunky and often requires massive amounts of annotations and transcriptions to help understand what you're referencing. There might, however, be a more natural way: teaching the algorithms to recognize things much as you would teach a child. Scientists have devised a machine learning system that can identify objects in a scene based on their spoken description. Mention a blue shirt while describing an image, for example, and it can highlight the clothing without any transcriptions involved.
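Systems like this are commonly built by training an image network and an audio network so that matching pictures and spoken captions land close together in a shared embedding space; no transcript is needed because the pairing itself is the supervision. Assuming those embeddings already exist, highlighting the object a spoken phrase refers to comes down to scoring each image region against the phrase. The three-dimensional vectors in the example are toy values, and `ground_phrase` is a hypothetical name.

```python
def dot(a: list[float], b: list[float]) -> float:
    return sum(x * y for x, y in zip(a, b))


def ground_phrase(speech_embedding: list[float],
                  region_embeddings: dict[str, list[float]]) -> tuple[str, dict[str, float]]:
    """Return the region that best matches the spoken phrase, plus every region's score."""
    # Higher dot product = the region and the audio sit closer in the shared space.
    scores = {name: dot(speech_embedding, emb) for name, emb in region_embeddings.items()}
    best = max(scores, key=scores.get)
    return best, scores
```

A real system scores every spatial location of the image rather than a few named regions, which is what lets it draw a highlight around the shirt.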

Google offers AI toolkit to report child sex abuse images

Numerous organizations have taken on the noble task of reporting pedophilic images, but it's both technically difficult and emotionally challenging to review vast amounts of the horrific content. Google is promising to make this process easier. It's launching an AI toolkit that helps organizations review child sex abuse material quickly while minimizing the need for human inspection. Deep neural networks scan images for abusive content and prioritize the most likely candidates for review. This promises to both dramatically increase the number of responses (700 percent more than before) and reduce the number of people who have to look at the imagery.
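The prioritization step amounts to a ranked queue. Assuming a classifier (not shown) has already given each image a score for how likely it is to need review, the reviewers' next batch is simply the highest-scoring items. The IDs, scores and `review_queue` name are hypothetical; Google's actual toolkit isn't reproduced here.

```python
import heapq


def review_queue(scores: dict[str, float], batch_size: int) -> list[str]:
    """Return the IDs of the batch_size highest-scoring images, most urgent first."""
    # nlargest avoids sorting the whole backlog when only a small batch is needed.
    top = heapq.nlargest(batch_size, scores.items(), key=lambda item: item[1])
    return [image_id for image_id, _ in top]
```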

Spear-toting robot can guard coral reefs against invasive lionfish

Lionfish threaten not only fragile coral reef ecosystems but also the divers who keep them in check. They prey on unsuspecting fish populations and carry poisonous spines that make them challenging to catch. Student researchers at Worcester Polytechnic Institute may have a solution: robotic guardians. They've crafted an autonomous robot that can hunt lionfish without requiring a tethered operator that could harm the reefs.

Autonomous drones will help stop illegal fishing in Africa

Drones aren't just cracking down on land-based poaching in Africa -- ATLAN Space is launching a pilot that will use autonomous drones to report illegal fishing in the Seychelles islands. The fliers will use computer vision to identify both the type of boats in protected waters and whether they're authorized to be there. If they detect illegal fishing boats, the drones will note vessel locations, numbering and visible crews, passing the information along to officials.
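The reporting logic can be sketched as a check against a license list. This assumes the vision model has already classified each vessel and read its hull number where visible; `VesselSighting`, the license set and the report fields are hypothetical, not ATLAN Space's system.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class VesselSighting:
    vessel_type: str             # e.g. "fishing", "cargo"
    registration: Optional[str]  # hull number read from the image, None if unreadable
    lat: float
    lon: float
    crew_visible: int


def flag_illegal_fishing(sightings: list[VesselSighting], licensed: set[str]) -> list[dict]:
    """Build reports for fishing boats whose registration isn't on the license list."""
    reports = []
    for s in sightings:
        if s.vessel_type != "fishing":
            continue  # only fishing vessels need a fishing license
        if s.registration in licensed:
            continue  # authorized boat, nothing to report
        reports.append({
            "registration": s.registration or "unreadable",
            "lat": s.lat,
            "lon": s.lon,
            "crew_visible": s.crew_visible,
        })
    return reports
```

Treating an unreadable hull number as unauthorized is a deliberate design choice here: it errs toward letting officials take a look rather than silently dropping the sighting.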

Fox AI predicts a movie's audience based on its trailer

Modern movie trailers are already cynical exercises in attention grabbing (such as the social media-friendly burst of imagery at the start of many clips), but they might be even more calculated in the future. Researchers at 20th Century Fox have produced a deep learning system that can predict who will be most likely to watch a movie based on its trailer. Thanks to training that linked hundreds of trailers to movie attendance records, the AI can draw a connection between visual elements in trailers (such as colors, faces, landscapes and lighting) and the performance of a film for certain demographics. A trailer with plenty of talking heads and warm colors may appeal to a different group than one with lots of bold colors and sweeping vistas.

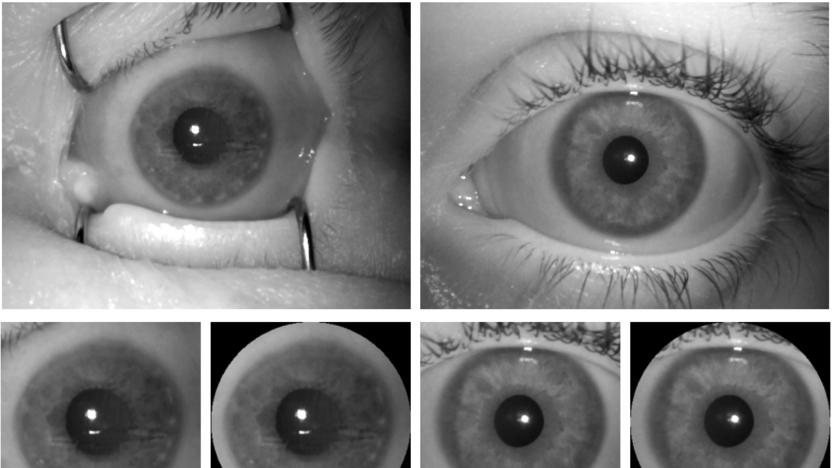

Iris scanner AI can tell the difference between the living and the dead

It's possible to use a dead person's fingerprints to unlock a device, but could you get away with exploiting the dead using an iris scanner? Not if a team of Polish researchers has its way. They've developed a machine learning algorithm that can distinguish between the irises of dead and living people with 99 percent accuracy. The scientists trained their AI on a database of iris scans from various times after death (yes, that data exists) as well as samples of hundreds of living irises, and then pitted the system against eyes that hadn't been included in the training process.
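Testing on eyes the model never saw matters because scans of the same eye taken at different times look alike; if one eye had scans in both the training and test sets, the accuracy figure would be inflated. A minimal sketch of that kind of split, assuming each sample is an `(eye_id, features, label)` tuple; the classifier itself isn't shown, and `predict`, `split_by_eye` and the 25 percent test fraction are hypothetical.

```python
import random


def split_by_eye(samples: list[tuple], test_fraction: float = 0.25, seed: int = 0):
    """Split (eye_id, features, label) samples so no eye appears in both sets."""
    eye_ids = sorted({s[0] for s in samples})
    rng = random.Random(seed)
    rng.shuffle(eye_ids)
    n_test = max(1, int(len(eye_ids) * test_fraction))
    test_eyes = set(eye_ids[:n_test])
    train = [s for s in samples if s[0] not in test_eyes]
    test = [s for s in samples if s[0] in test_eyes]
    return train, test


def accuracy(predict, samples: list[tuple]) -> float:
    """Fraction of samples where predict(features) matches the label."""
    correct = sum(1 for _, features, label in samples if predict(features) == label)
    return correct / len(samples)
```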

Google AI experiment compares poses to 80,000 images as you move

Google released a fun AI experiment today called Move Mirror that matches whatever pose you make to hundreds of images of others making that same pose. When you visit the Move Mirror website and allow it to access your computer's camera, it uses a computer vision model called PoseNet to detect your body and identify what positions your joints are in. It then compares your pose to more than 80,000 images and finds which ones best mirror your position. Move Mirror then shows you those images next to your own in real time and as you move around, the images you're matched to change. You can even make a GIF of your poses and your Move Mirror matches.
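A pose comparison like this can be sketched in a few steps, assuming PoseNet (or any pose estimator) has already returned the same ordered list of joint coordinates for every image: shift and scale each pose so position and body size in the frame don't matter, turn it into a unit vector, and rank the library by cosine similarity. This ignores PoseNet's per-joint confidence scores and uses a brute-force search; the names and the tiny two-pose library are hypothetical, and an index of 80,000 images would likely call for a faster nearest-neighbor structure.

```python
import math

Keypoints = list[tuple[float, float]]  # (x, y) per joint, same joint order for every pose


def normalize_pose(keypoints: Keypoints) -> list[float]:
    """Remove translation and scale, then return a unit-length flat vector."""
    xs = [x for x, _ in keypoints]
    ys = [y for _, y in keypoints]
    min_x, min_y = min(xs), min(ys)
    scale = max(max(xs) - min_x, max(ys) - min_y) or 1.0
    flat = []
    for x, y in keypoints:
        flat.extend([(x - min_x) / scale, (y - min_y) / scale])
    norm = math.sqrt(sum(v * v for v in flat)) or 1.0
    return [v / norm for v in flat]


def cosine_similarity(a: list[float], b: list[float]) -> float:
    # Both vectors are already unit length, so the dot product is the cosine.
    return sum(x * y for x, y in zip(a, b))


def best_matches(query: Keypoints, library: dict[str, Keypoints], k: int = 3) -> list[str]:
    """Names of the k library poses most similar to the query, best first."""
    q = normalize_pose(query)
    scored = [(cosine_similarity(q, normalize_pose(kp)), name) for name, kp in library.items()]
    scored.sort(reverse=True)
    return [name for _, name in scored[:k]]
```

Because translation and scale are removed first, a pose struck far from the camera or off to one side still matches the same pose photographed up close.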