computervision

AI is learning to speed read

As clever as machine learning is, there's one common problem: you frequently have to train the AI on thousands or even millions of examples to make it effective. What if you don't have weeks to spare? If Gamalon has its way, you could put AI to work almost immediately. The startup has unveiled a new technique, Bayesian Program Synthesis, that promises AI you can train with just a few samples. The approach uses probabilistic code to fill in gaps in its knowledge. If you show it a very short chair and a very tall one, for example, it should figure out that there are many chair sizes in between. And importantly, it can tweak its own models as it goes along -- you don't need constant human oversight in case circumstances change.
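
Gamalon hasn't published how Bayesian Program Synthesis works under the hood, so here's only a toy illustration of the chair example, not the company's method. It assumes a handful of hypothetical candidate height ranges, a uniform prior over them, and that each example chair is drawn uniformly from the true range. After seeing just two chairs, every surviving range covers the heights in between, so the model happily accepts a mid-sized chair it has never seen.

```python
from typing import Dict, List, Tuple

Interval = Tuple[float, float]  # (shortest, tallest) plausible chair height in cm

def posterior(hypotheses: List[Interval], samples: List[float]) -> Dict[Interval, float]:
    """Posterior over candidate height ranges, assuming a uniform prior over
    the ranges and that each observed chair is drawn uniformly from the true range."""
    weights: Dict[Interval, float] = {}
    for lo, hi in hypotheses:
        if all(lo <= s <= hi for s in samples):
            # "Size principle": a narrow range that still covers every example
            # explains the data better than a very wide one.
            weights[(lo, hi)] = (1.0 / (hi - lo)) ** len(samples)
        else:
            weights[(lo, hi)] = 0.0  # this range can't have produced the examples
    total = sum(weights.values())
    if total == 0.0:
        raise ValueError("no candidate range covers the observed samples")
    return {h: w / total for h, w in weights.items()}

def prob_plausible(height: float, post: Dict[Interval, float]) -> float:
    """Chance that an unseen height is a valid chair height, averaged over ranges."""
    return sum(p for (lo, hi), p in post.items() if lo <= height <= hi)

# Hypothetical candidate ranges (cm) and just two examples: one very short chair, one very tall.
HYPOTHESES: List[Interval] = [(30, 120), (40, 110), (0, 200), (50, 100)]
post = posterior(HYPOTHESES, [40, 110])
print(round(prob_plausible(75, post), 3))   # 1.0: every surviving range covers 75 cm
print(round(prob_plausible(150, post), 3))  # 0.071: only the widest range covers 150 cm
```

Two examples are enough to rule out the (50, 100) range entirely and to favor the tighter ranges, which is the flavor of few-shot generalization the blurb describes.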

AI learns to recognize exotic states of matter

It's difficult for humans to identify phase transitions, or the exotic states of matter that come about through unusual transitions (say, a material becoming a superconductor). They might not have to do all the hard work going forward, however. Two teams of researchers have shown that you can teach neural networks to recognize those states and the nature of the transitions themselves. As with other AI-based recognition systems, the networks were trained on images -- in this case, particle collections -- until they could detect phase transitions on their own. Both are very accurate (within 0.3 percent for the temperature of one transition) and need to see only a few hundred atoms to identify what they're looking at.
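
The researchers used neural networks on raw particle configurations. As a deliberately tiny stand-in, the sketch below trains a single logistic unit on one hand-picked feature (the absolute magnetization) of synthetic 16 x 16 spin lattices, i.e. 256 "atoms" per sample. The lattice generator, the 90 percent alignment for the ordered phase, and the training settings are all assumptions for illustration, not real physics simulations.

```python
import math
import random

def make_config(ordered: bool, n: int, rng: random.Random) -> list:
    """Toy n x n spin lattice, flattened. Ordered: ~90% of spins share one
    direction. Disordered: each spin is +1 or -1 independently at random."""
    if ordered:
        sign = rng.choice([1, -1])
        return [sign if rng.random() < 0.9 else -sign for _ in range(n * n)]
    return [rng.choice([1, -1]) for _ in range(n * n)]

def feature(config: list) -> float:
    # |magnetization|: near 1 when spins align, near 0 when they don't.
    return abs(sum(config)) / len(config)

def sigmoid(z: float) -> float:
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def train(xs, ys, lr=1.0, steps=3000):
    """Full-batch gradient descent on the logistic (cross-entropy) loss."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(steps):
        gw = gb = 0.0
        for x, y in zip(xs, ys):
            err = sigmoid(w * x + b) - y  # gradient of the loss w.r.t. the logit
            gw += err * x
            gb += err
        w -= lr * gw / n
        b -= lr * gb / n
    return w, b

def dataset(count: int, n: int, rng: random.Random):
    xs, ys = [], []
    for i in range(count):
        ordered = i % 2 == 0  # alternate ordered (label 1) and disordered (label 0)
        xs.append(feature(make_config(ordered, n, rng)))
        ys.append(1 if ordered else 0)
    return xs, ys

rng = random.Random(0)
train_x, train_y = dataset(200, 16, rng)  # 16 x 16 = 256 spins per sample
test_x, test_y = dataset(200, 16, rng)
w, b = train(train_x, train_y)
accuracy = sum((sigmoid(w * x + b) > 0.5) == bool(y) for x, y in zip(test_x, test_y)) / len(test_x)
print(f"test accuracy: {accuracy:.2f}")
```

The absolute value matters: a lattice with all spins up and one with all spins down are the same phase, and a plain linear model on raw spins couldn't tell either of them apart from disorder.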

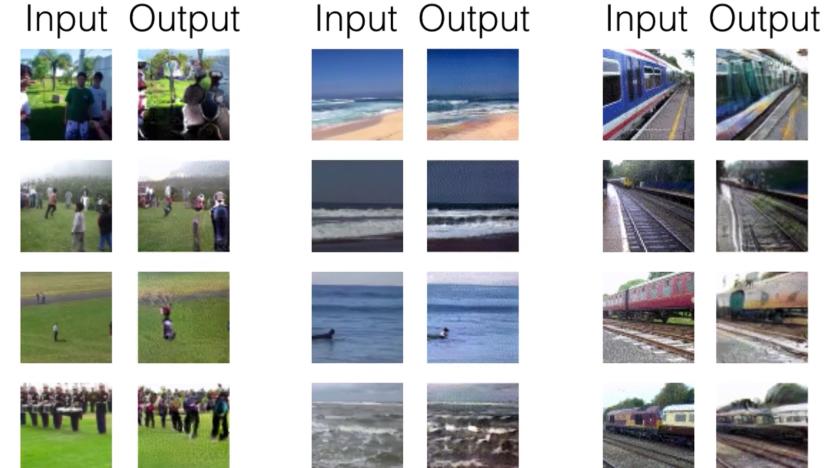

Google uses AI to sharpen low-res images

Deckard's photo-enhancing gear in Blade Runner is still the stuff of fantasy. However, Google might just have a close-enough approximation before long. The Google Brain team has developed a system that uses neural networks to fill in the details on very low-resolution images. One of the networks is a "conditioning" element that maps the lower-res shot to similar higher-res examples to get a basic idea of what the image should look like. The other, the "prior" network, models sharper details to make the final result more plausible.
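
How the two networks' opinions get merged isn't spelled out above. One simple way, assumed here for illustration, is for each network to score every candidate intensity for an output pixel, add the two sets of scores, and turn the sum into probabilities with a softmax. The four intensity levels and the scores below are made up.

```python
import math
from typing import List

def softmax(logits: List[float]) -> List[float]:
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]

def combine(conditioning_logits: List[float], prior_logits: List[float]) -> List[float]:
    """Distribution over candidate intensities for one output pixel:
    conditioning scores (from the low-res input) plus prior scores
    (from the sharp pixels generated so far), then normalized."""
    return softmax([c + p for c, p in zip(conditioning_logits, prior_logits)])

# Hypothetical scores for 4 intensity levels of a single pixel.
conditioning = [2.0, 1.9, 0.1, 0.0]  # the blurry input can't decide between levels 0 and 1
prior = [0.0, 1.5, 0.0, 0.0]         # already-generated neighbours favour level 1
probs = combine(conditioning, prior)
best = max(range(len(probs)), key=probs.__getitem__)
print(best)  # 1
```

On its own, the conditioning network would narrowly pick level 0; the prior's sense of what a sharp image looks like tips the pixel to level 1.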

AI is nearly as good as humans at identifying skin cancer

If you're worried about the possibility of skin cancer, you might not have to depend solely on the keen eye of a dermatologist to spot signs of trouble. Stanford researchers (including tech luminary Sebastian Thrun) have discovered that a deep learning algorithm is about as effective as humans at identifying skin cancer. By training an existing Google image recognition algorithm using over 130,000 photos of skin lesions representing 2,000 diseases, the team made an AI system that could detect both different cancers and benign lesions with uncanny accuracy. In early tests, its performance was "at least" 91 percent as good as a hypothetically flawless system.
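
Reading that 91 percent figure as area under the ROC curve (AUC) is an assumption here, but AUC is a common way to score diagnostic classifiers, and a flawless system scores exactly 1.0. The sketch computes AUC as the fraction of (malignant, benign) pairs the model ranks correctly; the scores and labels are made up.

```python
from typing import Sequence

def roc_auc(scores: Sequence[float], labels: Sequence[int]) -> float:
    """Probability that a randomly chosen positive (malignant) case gets a higher
    score than a randomly chosen negative (benign) one; ties count as half."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative example")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))

labels = [1, 1, 1, 0, 0, 0]
perfect = [0.9, 0.8, 0.7, 0.3, 0.2, 0.1]    # every malignant case outranks every benign one
imperfect = [0.9, 0.8, 0.2, 0.3, 0.7, 0.1]  # one malignant case scored too low
print(roc_auc(perfect, labels))    # 1.0
print(roc_auc(imperfect, labels))  # 0.777... (7 of 9 pairs ranked correctly)
```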

Apple publishes its first AI research paper

When Apple said it would publish its artificial intelligence research, it raised at least a couple of big questions. When would we see the first paper? And would the research be significant, or would the company keep potential trade secrets close to the vest? At last, we have answers. Apple researchers have published their first AI paper, and the findings could clearly be useful for computer vision technology.

KFC's latest weird tech suggests an order based on your face

KFC is no stranger to getting funky in order to sell its chicken, but this one is really out there. The company's Chinese division is partnering with Baidu to create a smart restaurant that will recommend meals based on the customer's looks. Specifically, the restaurant's ordering kiosks, which are powered by Baidu's computer vision systems, will look at the customer's age, gender and facial expressions to make educated guesses as to what they might be in the mood for. A guy in his 20s, for example, is far more likely to order a big meal with a large soda for lunch than, say, a 75-year-old granny who walks in at 8am. These are just suggestions, of course; it's not like you have to eat what it recommends (yet). But if you're a regular, the kiosks will remember your previous order and recommend that as well. Don't get weird about that last bit; it's no different than the bartender at your local pub remembering your drink order from last time. For now, the facial recognition kiosks are confined to the single smart restaurant, located in Beijing, but if it's a hit with the public, the technology will hopefully spread.

ICYMI: Amazon wants to revolutionize grocery shopping

Today on In Case You Missed It: Amazon created a smart store in Seattle that is currently open only to employees but will open to everyone next year. It lets people saunter in, grab whatever they need, then leave without formally checking out. The trick is in using the Amazon Go app and all the sensors within the store, which track the items shoppers place in their baskets so they can be charged accordingly. Meanwhile, Georgia Tech created a 'TuneTable,' an interactive table with moving coaster-sized tiles people use to both program and then play music. If you're interested, the Guinness Book of World Records video for candles is here, and the behind-the-scenes video from Rogue One is here. As always, please share any interesting tech or science videos you find by using the #ICYMI hashtag on Twitter for @mskerryd.

Computer vision may help the blind see the world

The world is getting better at combining machine learning and computer vision, but it's not just cars and drones that benefit from that. For instance, the same technology could be used to dramatically improve the lives of people with visual impairments, enabling them to be more independent. One of the startups looking to do just that is Eyra, which is showing off a wearable called Horus that could help the blind "see."

Microsoft expands its accessibility efforts on Windows 10

Microsoft is keen on making sure people with disabilities can use its products, and next year it's only going to expand upon that directive. It starts with some big additions to Windows 10 and Narrator for the Creators Update, like support for braille, some 10 new voices for text-to-speech and volume ducking (automatically lowering your music) when Narrator chimes in while you're listening to Spotify or another music program.

Facebook shuts off Prisma's live video support

If you're a Prisma fan, you were likely heartbroken when the AI-driven art app lost its Facebook Live streaming feature. Why did it go almost as soon as it arrived? Now we know. The Prisma team tells TechCrunch that Facebook shut off its access to the Live programming kit over claims that this wasn't the intended use for the framework. The platform is meant for live footage from "other sources," such as pro cameras or game feeds. It's an odd reason when Facebook's public developer guidelines don't explicitly forbid use with smartphones, but the social network does state that it's primarily for non-smartphone uses.

AI can create videos of the future

Loads of devices can preserve moments on camera, but what if you could capture situations that were about to happen? It's not as far-fetched as you might think. MIT CSAIL researchers have crafted a deep learning algorithm that can create videos showing what it expects to happen in the future. After extensive training (2 million videos), the AI system generates footage by pitting two neural networks against each other. One creates the scene by determining which objects are moving in still frames. The other, meanwhile, serves as a quality check -- it determines whether videos are real or simulated, and the artificial video is a success when the checker AI is fooled into thinking the footage is genuine.
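
The "two networks against each other" setup is a generative adversarial network (GAN). The MIT system's exact architecture and losses aren't described here, so this sketch shows only the two competing objectives in their standard textbook form, given the checker's probability that a clip is real.

```python
import math

def discriminator_loss(d_real: float, d_fake: float) -> float:
    """Binary cross-entropy for the checker: it wants real clips scored near 1
    and generated clips scored near 0."""
    return -(math.log(d_real) + math.log(1.0 - d_fake))

def generator_loss(d_fake: float) -> float:
    """Non-saturating generator loss: the generator wants its clips scored near 1,
    i.e. it succeeds when the checker is fooled."""
    return -math.log(d_fake)

# Checker spots the fake (0.1) vs. checker is fooled (0.9).
print(round(generator_loss(0.1), 3))  # 2.303: large loss, the generator must improve
print(round(generator_loss(0.9), 3))  # 0.105: small loss, the fake passed as genuine
# At the theoretical equilibrium the checker can only guess, scoring everything 0.5.
print(round(discriminator_loss(0.5, 0.5), 3))  # 1.386, i.e. 2 * ln 2
```

In training, the two networks take turns lowering their own loss, which is exactly what pushes the generated footage toward looking real.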

ICYMI: Basketball is about to get even more stats-heavy

Today on In Case You Missed It: The National Basketball Association signed a seven-year agreement to use a computer-vision, artificial intelligence system that analyzes on-court action in ways average viewers couldn't spot as they watch.

Google machine learning can protect endangered sea cows

It's one thing to track endangered animals on land, but it's another to follow them when they're in the water. How do you spot individual critters when all you have are large-scale aerial photos? Google might just help. Queensland University researchers have used Google's TensorFlow machine learning to create a detector that automatically spots sea cows in ocean images. Instead of making people spend ages combing through tens of thousands of photos, the team just has to feed photos through an image recognition system that knows to look for the cows' telltale body shapes.

Netgear security camera is wireless, ultra-wide and weatherproof

Look, we know -- it's hard to get excited about home security cameras. However, Netgear is determined to stand out with a camera that ticks virtually every checkbox on the list. Its new Arlo Pro is not only wireless (with the option of plugging in), but touts an ultra-wide 130-degree viewing angle and weatherproofing. Yes, you can stick this on a tree with the knowledge that it could easily spot an intruder in the pouring rain. That includes at night, too, thanks to night vision and an infrared motion sensor.
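
To put "ultra-wide" in perspective, a simple pinhole-camera model gives the width of the scene covered at a distance d as 2 · d · tan(θ/2). This assumes the 130-degree figure is a horizontal angle and ignores lens distortion; Netgear may quote it differently (for example, diagonally).

```python
import math

def coverage_width(distance_m: float, fov_deg: float = 130.0) -> float:
    """Width (m) of the scene a camera sees at a given distance under a simple
    pinhole model: 2 * d * tan(fov / 2). Ignores lens distortion."""
    return 2.0 * distance_m * math.tan(math.radians(fov_deg) / 2.0)

for d in (2, 5, 10):
    print(d, round(coverage_width(d), 1))  # roughly 8.6 m, 21.4 m and 42.9 m wide
print(round(coverage_width(5, 90.0), 1))  # a 90-degree lens covers about 10 m at 5 m
```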

Indiana Pacers use AI to help you get hot dogs faster

Among the hassles you deal with at sports events, waiting in line is one of the most annoying. What if you miss the start of play because you had to satisfy a hot dog craving? The Indiana Pacers want to alleviate that headache. They're partnering with tech startup WaitTime to shorten waits through artificial intelligence. The newly launched system photographs arena lines 10 times per second and interprets that data to gauge not just queuing times, but also order completion times and the number of people who've given up. The Pacers display the wait times on screens and in a mobile app to show you where lines are short: you'll know whether a given washroom is empty, or whether it'd be quicker to grab nachos instead of a burger.
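
WaitTime hasn't said how it turns head counts into wait estimates. A classic back-of-envelope approach, assumed here, is Little's law (L = λW): the expected wait W is the number of people in line L divided by the rate λ at which people get served. The function name and the numbers are hypothetical, and using recent order completions as the rate assumes the line is roughly steady.

```python
def estimated_wait_seconds(people_in_line: int, completions: int, window_seconds: float) -> float:
    """Little's law, W = L / lambda, with lambda estimated as completed orders per
    second over a recent window (assumes the queue is roughly in steady state)."""
    if completions == 0:
        return float("inf")  # nobody was served in the window, so no rate estimate
    # Same as people_in_line / (completions / window_seconds), rearranged.
    return people_in_line * window_seconds / completions

# Hypothetical: the latest frame shows 12 people; 18 orders finished in 5 minutes.
print(estimated_wait_seconds(12, 18, 300))  # 200.0 seconds, a bit over 3 minutes
```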

IBM and MIT team up to help AI see and hear like humans

Autonomous robots and other AI systems still don't do a great job of understanding the world around them, but IBM and MIT think they can do better. They've begun a "multi-year" partnership that aims to improve AI's ability to interpret sight and sound as well as humans. IBM will supply the expertise and technology from its Watson cognitive computing platform, while MIT will conduct research. It's still very early, but the two already have a sense of what they can accomplish.

Microsoft hopes AI will find better cancer treatments

Google isn't the only tech giant hoping that artificial intelligence can aid the fight against cancer. Microsoft has unveiled Project Hanover, an effort to use AI for both understanding and treating cancers. To begin with, the company is developing a system that would automatically process legions of biomedical papers, creating "genome-scale" databases that could predict which drug cocktails would be the most effective against a given cancer type. That way, an ideal treatment wouldn't go unnoticed by doctors who are already swamped with work.

Intel buys Movidius to build the future of computer vision

Intel is making it extra-clear that computer vision hardware will play a big role in its beyond-the-PC strategy. The computing behemoth has just acquired Movidius, a specialist in AI and computer vision processors. The Intel team isn't shy about its goals. It sees Movidius as a way to get high-speed, low-power chips that can power RealSense cameras in devices that need to see and understand the world around them. Movidius has already provided the brains behind gadgets like drones and thermal cameras, many of which are a logical fit for Intel's depth-sensing tech -- and its deals with Google and Lenovo are nothing to sneeze at, either.

Facebook opens its advanced AI vision tech to everyone

Over the past two years, Facebook's artificial intelligence research team (also known as FAIR) has been hard at work figuring out how to make computer vision as good as human vision. The crew has made a lot of progress so far (Facebook has already incorporated some of that tech for the benefit of its blind users), but there's still room for improvement. In a post published today, Facebook not only details its latest computer-vision findings but also announces that it's open-sourcing them to the public so that everyone can pitch in to develop the tech. And as FAIR tells us, improved computer vision will not only make image recognition easier but could also lead to applications in augmented reality.

Computers learn to predict high-fives and hugs

Deep learning systems can already detect objects in a given scene, including people, but they can't always make sense of what people are doing in that scene. Are they about to get friendly? MIT CSAIL's researchers might help. They've developed a machine learning algorithm that can predict when two people will high-five, hug, kiss or shake hands. The trick is to have multiple neural networks predict different visual representations of people in a scene and merge those guesses into a broader consensus. If the majority foresees a high-five based on arm motions, for example, that's the final call.
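
The final "majority rules" step can be sketched as a plain vote over each network's predicted action. This is a simplification: the MIT networks predict visual representations rather than bare labels, and the tie-breaking rule here (first-seen label wins) is an assumption.

```python
from collections import Counter
from typing import List

def consensus(predictions: List[str]) -> str:
    """Final call = the action predicted by the most networks.
    Counter.most_common orders equal counts by first appearance,
    so a tie goes to whichever tied label was predicted first."""
    if not predictions:
        raise ValueError("need at least one prediction")
    return Counter(predictions).most_common(1)[0][0]

votes = ["high-five", "hug", "high-five", "handshake", "high-five"]
print(consensus(votes))  # high-five
```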