AI hackers will make the world a safer place -- hopefully

A high-stakes game of capture the flag could be the spark that launches the artificial-intelligence computing revolution.

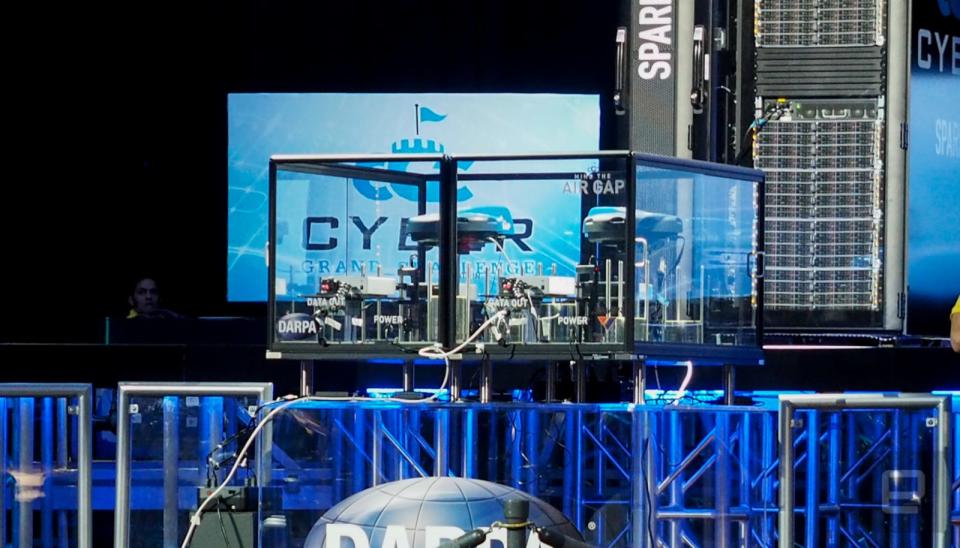

The spotlights whirl in circles and transition from blue to purple to red and back to blue again. Basking in the glow is a stage constructed to resemble something out of a prime-time singing competition. But instead of showcasing would-be pop stars, the backdrop is built to push 21kW of power while simultaneously piping 3,500 gallons of water to cool its contestants. Those seven competitors are actually server boxes autonomously scanning and patching vulnerabilities.

The DARPA Cyber Grand Challenge at the Def Con hacker conference last week pitted these AI systems -- housed in the same enclosures you'd find in an IT department -- against one another in a digital version of capture the flag. The government research agency is doing its best to add some pizazz to the event, with all the lights and play-by-play announcers. The reality is, though, that this is more than just an e-sports event: The outcome of this competition and the innovation it spurs could change the way the United States government and companies deal with software vulnerabilities and cyber attacks.

The seven teams, which consisted of universities, researchers and companies, built and programmed their AI "bots" to find, diagnose and fix software flaws in a highly competitive environment. The systems also have to defend themselves against other teams attacking the vulnerable code (or flag) on their own server while trying to launch counterattacks. Yeah, it's complicated. It's like being handed a series of puzzles with no directions on how to solve them while trying to keep your friends from figuring out the same brain teasers.

Except these brain teasers have the potential to bring down the internet or leave serious vulnerabilities in code that could be exploited by nefarious hackers and nation states. At this early stage, things are looking promising. One of the flaws handed to the bots was the SQL Slammer denial-of-service bug that brought the net to its knees in 2003 as it propagated to 75,000 servers in 10 minutes. Two of the bots recognized and patched the flaw, with one of them doing so in just five minutes. That's quicker than a security researcher sitting down at her desk and launching the tools needed to reverse-engineer a bug like that.

The whole thing is being projected to the audience on screens using visualizations that resemble TRON. The arena view is compelling if a bit confusing (the audience was subjected to a 10-minute explainer about each element in the artwork). It's a bit of a dog-and-pony show to enhance the importance of what's happening. While we're being wowed with bright lights and canned commentary, the machines have been hacking away at code for 12 hours at breakneck speeds. They are the rock stars of Def Con, the new Monoliths of 2001 with silkscreened names and flashing LEDs that will change the future of computing.

Behind all the Hollywood-style presentations, the actual story of the boxes is that the AI technology in these bots scans code, finds vulnerabilities and patches them so quickly that it has the potential to reduce cyber attacks and squash software flaws before they have a chance to do any harm. A company could throw a new application or OS into a bot like one of these and find flaws before it even ships. That reduces not only the number of patches being released but also the holes left in applications by bad code before a customer even installs them.

Just don't expect to see these systems out in the wild anytime soon, DARPA Director Arati Prabhakar told Engadget. "This is not a two-year journey," she said. "I don't think it's a 50-year journey, either. In a decade, I think you're going to see a huge change."

DARPA wants this event to help drive innovation in this area. "A few of the specific steps that are gonna happen will probably happen from these competitors," Prabhakar said. Indeed, this is how the agency operates. It did the same thing with its Urban Challenge for autonomous cars. Now we have semi-autonomous systems on the road while full autonomy is being researched by every major automaker and Google. But like those early cars that went a few yards and veered off course, these systems aren't ready for prime time. Instead, expect to see iterative progress as the technology matures.

But AI machines like those in the competition could also be used to plow through the code of operating systems, infrastructure and applications to find vulnerabilities for exploitation. It's a future of AI battling AI to see who can find a flaw the quickest. DARPA insists that its research for the Department of Defense will help it thwart attempts by bad actors to infiltrate both government and private systems in the US.

In the meantime, the top robot came from security company and Carnegie Mellon spin-off ForAllSecure, whose Mayhem AI bot bested the field and went home with a $2 million purse. Unfortunately, later in the weekend, it came in last when pitted against some of the world's best hackers in the professional CTF competition. David Brumley, CEO of ForAllSecure, is still happy about the bot's performance. At one point, it was even ahead of two human teams.

The computer has speed on its side, but humans still have critical thinking and years of experience in their corner. The company spent two years working on Mayhem and 13 years researching how to automatically find vulnerabilities. Brumley thinks it'll be 30 years before computers are as good as the best human security researchers.

But it's more than just trying to be better than people. "What computers are doing is really changing the way we're going to think about security," Brumley said. "Right now if you want to analyze something for security vulnerabilities, you have to think long and hard about whether it's worth the expense."

For now, the technology is being gamified. Competition breeds innovation. The Mayhem team will go home and start applying what it learns to tackling problems in actual software. There won't be any lights or commentary. Instead, this computer and others like it will work side-by-side with human counterparts to secure everything from the Pentagon to the IoT bulbs in your home.

The robot hackers are coming to make things more secure. At least that's the hope.