Facebook can’t decide when a page should be banned

Unlike YouTube, there's no three strikes and you're out.

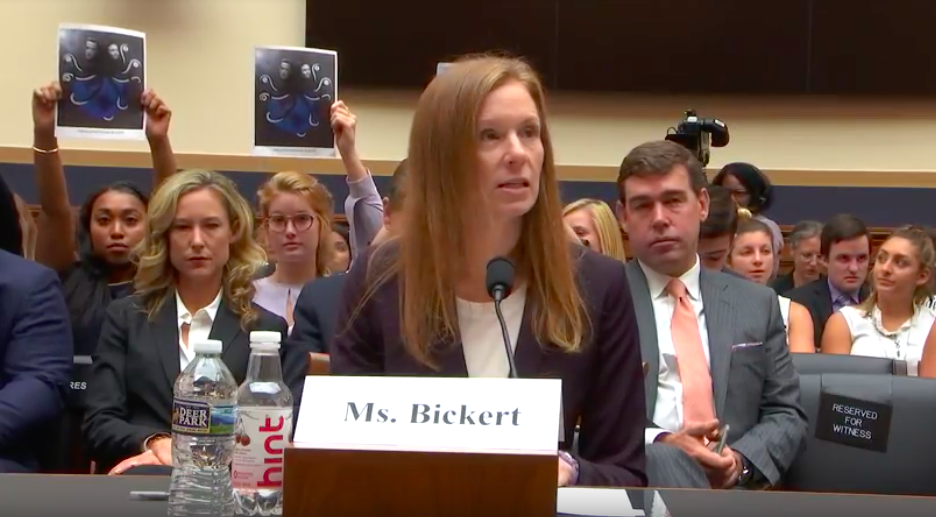

Another day, another congressional hearing on how tech companies are conducting themselves. This time it was Facebook, Twitter and YouTube that testified before the House Judiciary Committee today, in a hearing titled "Examining the Content Filtering Practices of Social Media Giants." While much of the three-hour session covered information we've heard before, like what they're all doing to fight fake news and propaganda-driven bots, there was an interesting discussion about Facebook's policies (or lack thereof). In particular, the company's head of global policy management, Monika Bickert, couldn't give members of the committee a firm answer on what exactly it takes to ban offensive pages from Facebook.

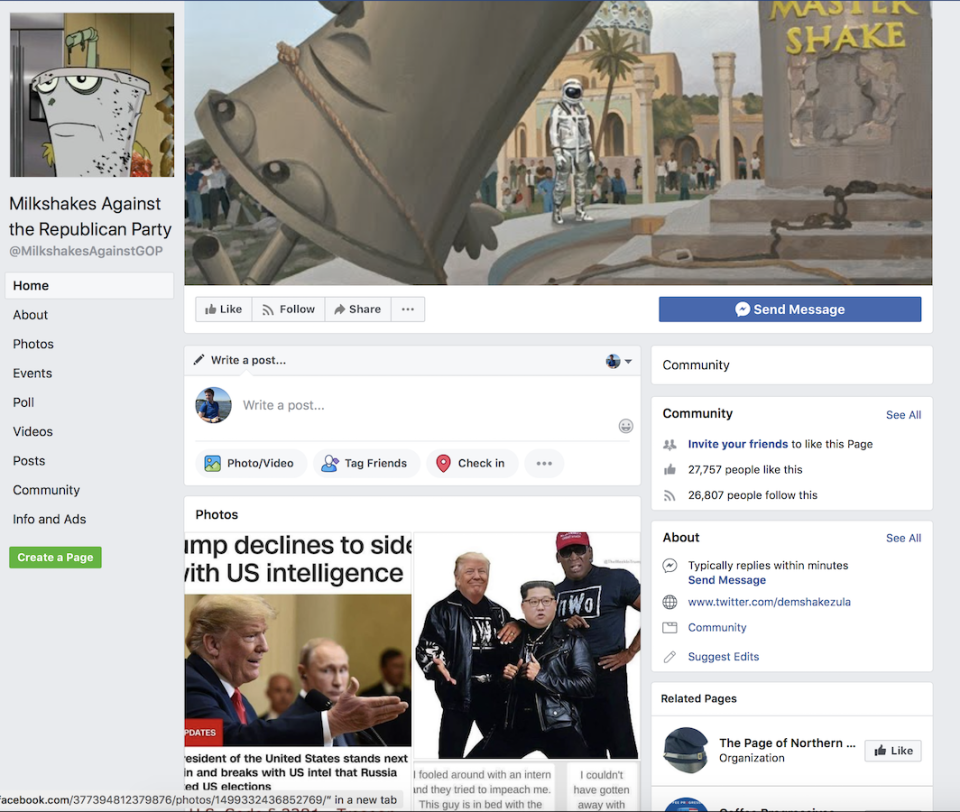

Bickert was given an example by Rep. Matt Gaetz (R-Florida) about a page on Facebook called "Milkshakes Against the Republican Party," which he said had multiple posts urging people to shoot members of his party. He said he reported the posts using the site's flagging tools, but that he received a response saying they didn't violate Facebook's Community Standards. Gaetz said the posts were removed eventually, though the page in question still exists. "Any calls for violence violates our terms of service," said Bickert. "So why is Milkshakes Against the Republican Party a live page on your platform?" asked Gaetz, adding that Facebook executives told him, "Well, we removed those specific posts, but we're not going to remove the entire page."

Gaetz then asked Bickert why Facebook wouldn't just remove pages repeatedly posting harmful content, particularly if they're threatening violence. She said the company removes pages, groups and profiles when "there's a certain threshold of violations that has been met," but that's on a case-by-case basis. For example, Bickert said, if someone posts an image of child sexual abuse, the account will come down immediately. "How many times does a page have to encourage violence against Republican members at baseball practice before you ban the page?" Gaetz asked. Bickert didn't seem to have an answer for the question and instead said she would be happy to look into the page.

Naturally, Facebook's Bickert was also asked about Alex Jones' InfoWars, a publication known for spreading conspiracy theories. "How many strikes does a conspiracy theorist who attacks grieving parents and student survivors of mass shootings get? How many strikes are they entitled to before they can no longer post those types of horrific attacks?" asked Rep. Ted Deutch (D-Florida). "Allegations that survivors of a tragedy like Parkland are crisis actors, that violates our policy and we remove that content," Bickert said, adding that Facebook will continue to remove any violations from the InfoWars page. "If they posted sufficient content that violated our threshold, that page would come down. That threshold varies, depending on the severity of different types of violations."

But because it's unclear what that threshold is, at least publicly, various members of the committee asked Bickert to follow up with them to clarify. By contrast, Juniper Downs, global policy lead for YouTube, told the committee that the company typically terminates channels after three strikes. That's a system Facebook would likely benefit from because it could keep offenders from making and promoting toxic content regularly. Bickert's testimony, of course, comes only days after Facebook said its long-term plan to fight fake news is to reduce and flag misinformation, rather than completely remove it. The company's argument is that it wants to remain a place for "a wide range of ideas," something Bickert echoed in her opening testimony today.

We reached out to Facebook for comment and will update this post if we hear back.