NVIDIA’s RTX speed claims fall short without game support

A lot has to happen before the RTX 2080 Ti is as fast as promised.

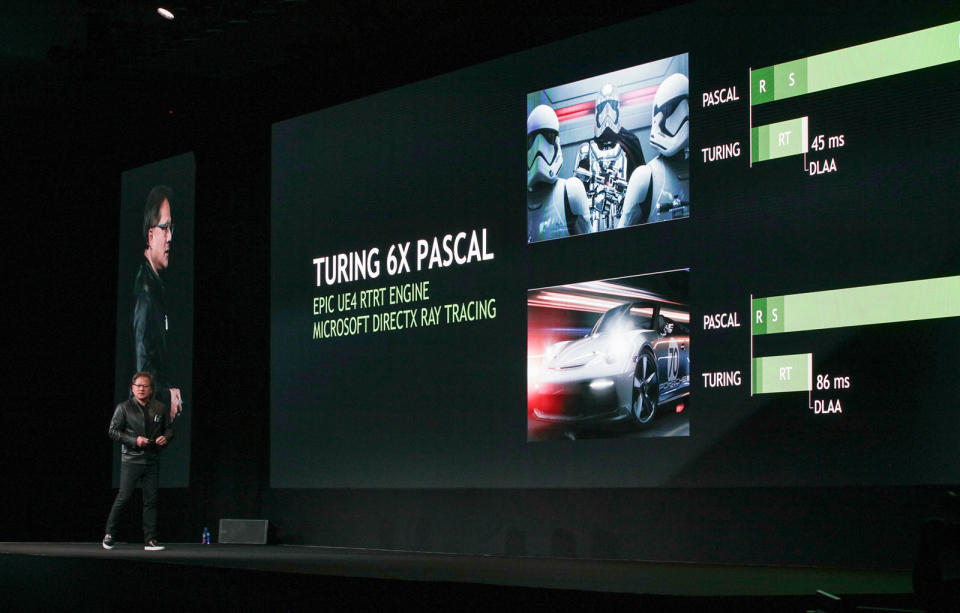

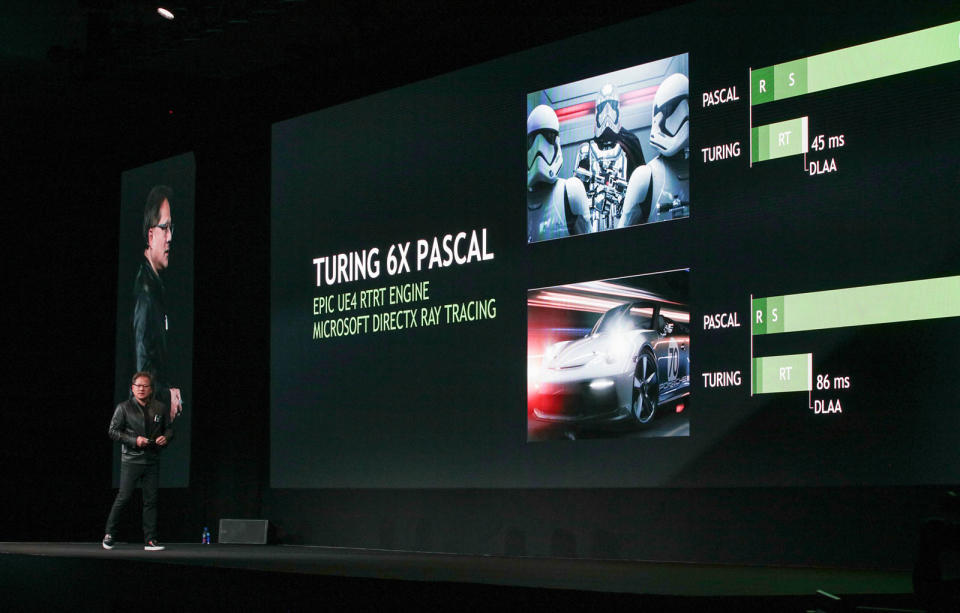

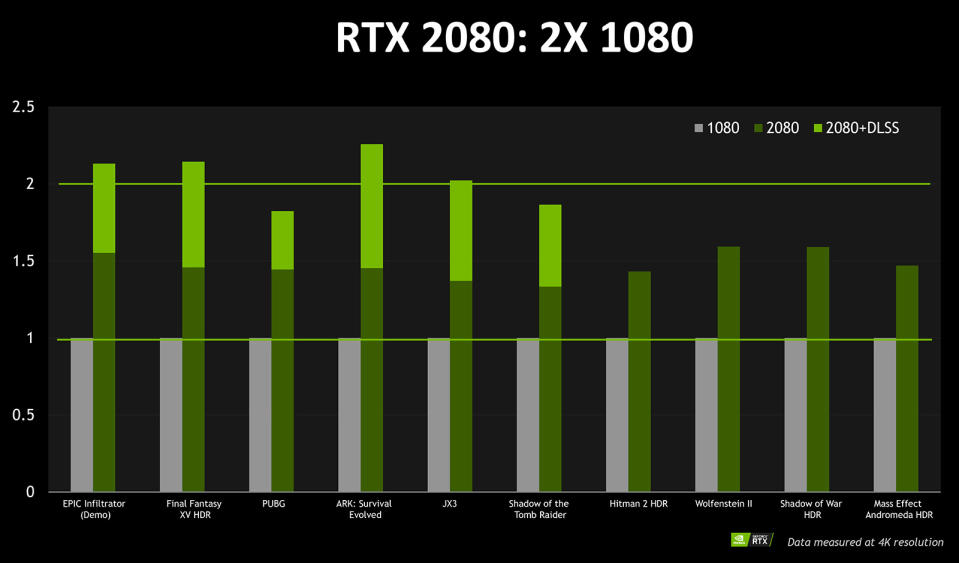

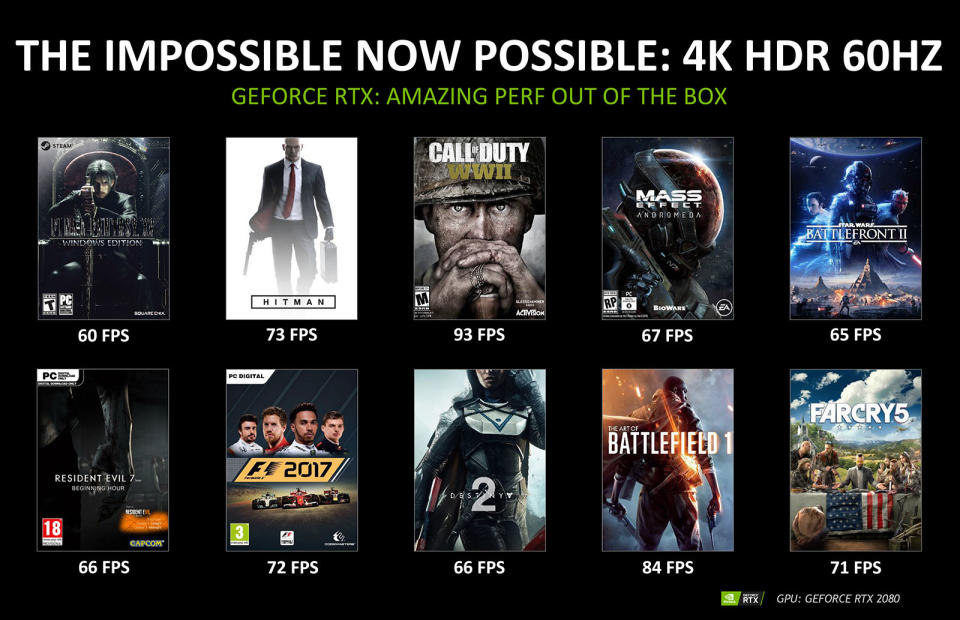

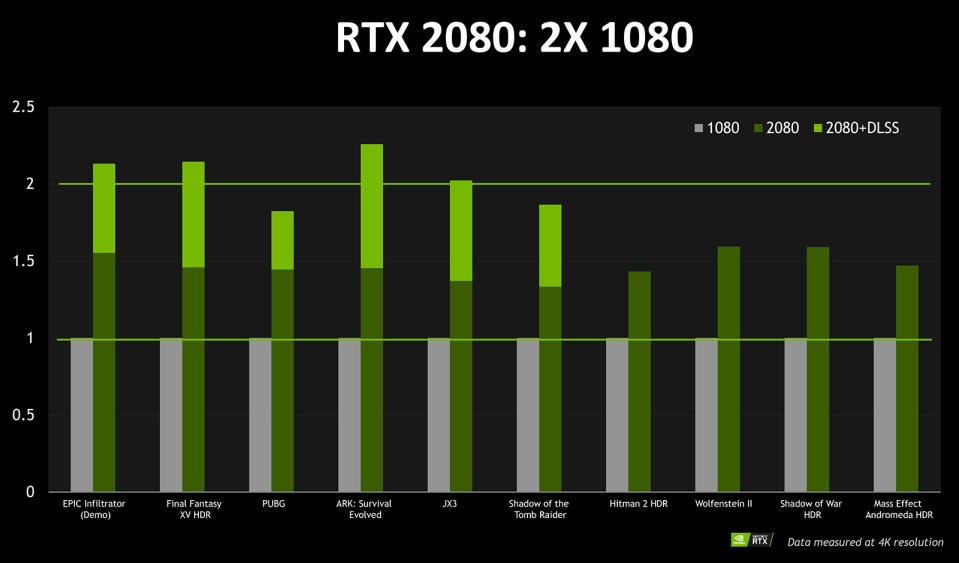

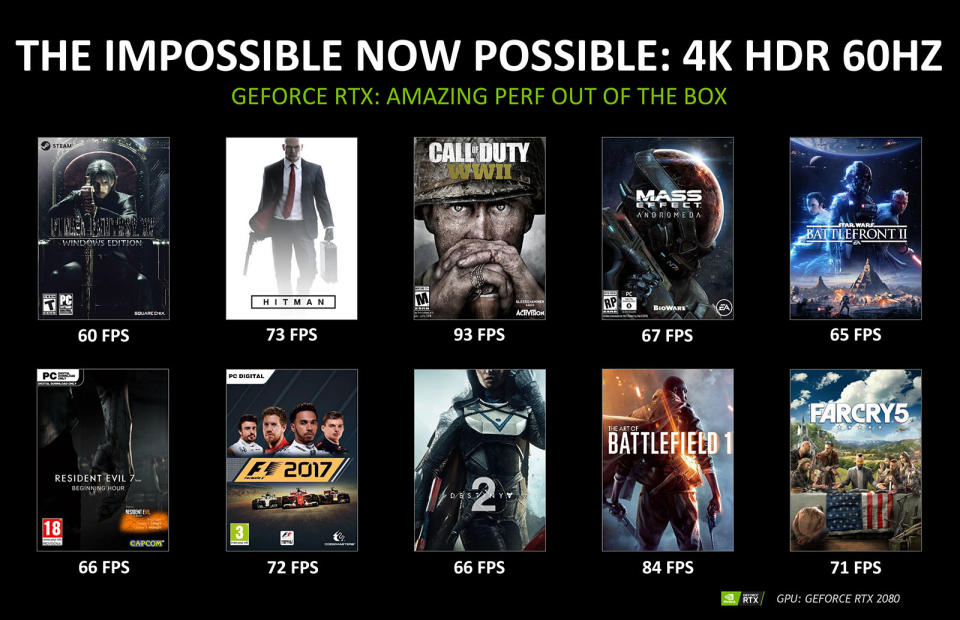

At its big RTX event at Gamescom, NVIDIA made some bold claims about its new Turing RTX cards. First and foremost was that the GeForce RTX 2080 offered performance "six times faster" than current 1000-series Pascal-based GTX cards. That's in large part because of new ray-tracing tech that helps the GPUs calculate complex game lighting much more quickly. "This is a new computing model, so there's a new way to think about performance," said CEO Jensen Huang.

The new GeForce RTX 2080 Ti, 2080 and 2070 cards are quicker than ever, but the increase is not nearly as dramatic as the "six times" claim. Based on what NVIDIA has told us so far, the boost is more like 30 to 40 percent, and higher at 4K. If those claims stand up to testing, that's still pretty good and probably reason enough for many gamers to jump on board, particularly if you have a 4K monitor. It's still a far cry from the double to six times the performance NVIDIA has been touting, however.

For NVIDIA's larger claims to come true, game and app developers must first adopt the RTX ray-tracing and deep learning tech. And as we saw before with GameWorks (remember that?), that's far from a foregone conclusion. Until then, you might want to wait for benchmarks and reviews to see how the cards handle current games.

NVIDIA gave us the main specs for the GeForce RTX 2080 Ti, but omitted a few key figures. We know that the $1,199 Founders Edition version has 11GB of GDDR6 memory running at 14Gbps, a boost clock of 1,635MHz and 4,352 CUDA cores. NVIDIA didn't give us the key teraflops (TFLOPs) figure, but we can calculate it: 4,352 cores x 2 floating-point operations per clock x 1,635MHz boost clock = 14,231,040 MFLOPs, or about 14.2 TFLOPs. That compares to 11.3 TFLOPs for the GTX 1080 Ti and 12.15 TFLOPs for the Titan Xp. So the RTX 2080 Ti offers roughly 17 percent more raw compute than the Titan Xp, currently the fastest gaming card in the world, and about 26 percent more than its contemporary, the GTX 1080 Ti.
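The same back-of-the-envelope math can be sketched in Python. This is a minimal sketch that assumes peak FP32 throughput is CUDA cores x 2 operations (one fused multiply-add) per clock x boost clock, which is a theoretical ceiling rather than a measure of game performance. The function names are our own, and the comparison TFLOPs and memory speeds are simply the figures quoted in this article.

```python
def fp32_tflops(cuda_cores: int, boost_clock_mhz: float) -> float:
    """Theoretical peak FP32 throughput in TFLOPs.

    Assumes each CUDA core retires one fused multiply-add (2 floating-point
    operations) per clock. cores x 2 x MHz gives MFLOPs; divide by 1e6 for TFLOPs.
    """
    return cuda_cores * 2 * boost_clock_mhz / 1e6


def pct_gain(new: float, old: float) -> float:
    """Percentage increase of `new` over `old`."""
    return (new / old - 1) * 100


# RTX 2080 Ti Founders Edition specs as given by NVIDIA.
rtx_2080_ti = fp32_tflops(4352, 1635)

# Comparison figures quoted in the article (TFLOPs).
titan_xp = 12.15
gtx_1080_ti = 11.3

print(f"RTX 2080 Ti:    {rtx_2080_ti:.1f} TFLOPs")                  # 14.2 TFLOPs
print(f"vs Titan Xp:    +{pct_gain(rtx_2080_ti, titan_xp):.0f}%")     # +17%
print(f"vs GTX 1080 Ti: +{pct_gain(rtx_2080_ti, gtx_1080_ti):.0f}%")  # +26%

# Memory speed: 14Gbps GDDR6 vs 11.2Gbps, as quoted in the article.
print(f"Memory speed:   +{pct_gain(14, 11.2):.0f}%")                  # +25%
```

Peak TFLOPs ignores memory bandwidth, fill rate and architectural differences, which is why real-world gains can land well away from these percentages.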

Keep in mind, though, that the RTX 2080 has lower base GPU clock speeds than the GTX 1080, and we have no information yet on its overclocking potential. On the other hand, raw TFLOPs don't account for the roughly 25 percent jump in memory speed from GDDR6 (14Gbps on the 2080 Ti compared to 11.2Gbps on the 1080 Ti), which will have a big impact on real-world performance. For instance, when the GTX 1070 arrived, it had slightly less raw power than the GTX 980, but edged it out with superior bandwidth, memory speeds and architecture. It's worrying, though, that fill rates on the RTX 2080 Ti might actually be lower than on the GTX 1080 Ti because of its lower base clock speed. At a Gamescom press briefing, NVIDIA claimed a 30 to 40 percent performance boost, but we've yet to see independent tests to back that up.

NVIDIA CEO Jensen Huang spent a long time (about an hour) during his Gamescom keynote talking about the ray-tracing and Tensor tech built into Turing-based RTX cards. These new ray-tracing and neural-network compute units have far more power for those specific tasks than previous GPUs, allowing for much faster processing and more realistic graphics.

Right now, your GPU renders games largely using rasterization. It's generally very efficient, as it only renders visible objects and ignores anything hidden. Tricks developed over the last couple of decades, like pixel shaders and shadow maps, further improve realism. However, rasterization isn't photorealistic, because it doesn't simulate how light actually behaves. It has trouble with ambient occlusion (how much ambient light reaches different parts of objects), caustics (light focused by reflecting off or passing through shiny or transparent objects) and shadows cast by multiple light sources and objects, among other problems.

That's where ray-tracing comes in. Rays are cast from the camera through each pixel, or from the light sources, depending on the algorithm, then bounce around the scene until they hit a light source or reach the camera.
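To make the idea concrete, here's a toy ray caster in Python. It's a minimal sketch under simplifying assumptions (spheres only, one camera ray, direct diffuse lighting, no reflections or further bounces), and the scene and function names are hypothetical. It finds the nearest surface a camera ray hits, then casts a shadow ray toward each light to check whether anything blocks it, the kind of per-light shadow test rasterization has to approximate with shadow maps. It is not how RTX or DXR are implemented; RTX's dedicated ray-tracing cores accelerate the equivalent intersection tests against triangle geometry in hardware.

```python
import math
from dataclasses import dataclass
from typing import List, Optional, Tuple

Vec = Tuple[float, float, float]


def sub(a: Vec, b: Vec) -> Vec:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def add(a: Vec, b: Vec) -> Vec:
    return (a[0] + b[0], a[1] + b[1], a[2] + b[2])


def scale(a: Vec, s: float) -> Vec:
    return (a[0] * s, a[1] * s, a[2] * s)


def dot(a: Vec, b: Vec) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def normalize(a: Vec) -> Vec:
    return scale(a, 1.0 / math.sqrt(dot(a, a)))


@dataclass
class Sphere:
    center: Vec
    radius: float


def hit_sphere(origin: Vec, direction: Vec, sphere: Sphere) -> Optional[float]:
    """Distance along a unit-length ray to the nearest hit in front of it, or None."""
    oc = sub(origin, sphere.center)
    b = dot(oc, direction)
    c = dot(oc, oc) - sphere.radius ** 2
    disc = b * b - c
    if disc < 0:
        return None  # the ray misses the sphere entirely
    root = math.sqrt(disc)
    for t in (-b - root, -b + root):  # nearer intersection first
        if t > 1e-6:
            return t
    return None  # the sphere is behind the ray origin


def in_shadow(point: Vec, light: Vec, spheres: List[Sphere]) -> bool:
    """Cast a shadow ray from `point` toward `light`; True if any sphere blocks it."""
    to_light = sub(light, point)
    dist = math.sqrt(dot(to_light, to_light))
    l_dir = scale(to_light, 1.0 / dist)
    for s in spheres:
        t = hit_sphere(point, l_dir, s)
        if t is not None and t < dist:
            return True
    return False


def shade(origin: Vec, direction: Vec, spheres: List[Sphere], lights: List[Vec]) -> float:
    """Brightness along one camera ray: nearest hit plus one shadow ray per light."""
    hits = [(hit_sphere(origin, direction, s), s) for s in spheres]
    hits = [(t, s) for t, s in hits if t is not None]
    if not hits:
        return 0.0  # the ray escaped to the (black) background
    t, sphere = min(hits, key=lambda h: h[0])
    point = add(origin, scale(direction, t))
    normal = normalize(sub(point, sphere.center))
    brightness = 0.0
    for light in lights:
        # Nudge the shadow ray's start off the surface to avoid self-intersection.
        if in_shadow(add(point, scale(normal, 1e-4)), light, spheres):
            continue
        l_dir = normalize(sub(light, point))
        brightness += max(0.0, dot(normal, l_dir))  # Lambertian (diffuse) term
    return brightness


camera, ray = (0.0, 0.0, 0.0), (0.0, 0.0, -1.0)  # looking straight down -z
ball = Sphere((0.0, 0.0, -5.0), 1.0)
blocker = Sphere((0.0, 2.0, -2.0), 0.5)  # sits between the ball and the light
light = (0.0, 4.0, 0.0)

print(shade(camera, ray, [ball], [light]))           # lit: about 0.707
print(shade(camera, ray, [ball, blocker], [light]))  # shadowed: 0.0
```

Adding a second light only means one more shadow ray per hit, which is why ray tracing handles multi-light shadows naturally, but also why the ray count (and GPU load) climbs so quickly.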

Ray-tracing mirrors how we actually see things: photons bounce off and pass through objects, which absorb some wavelengths along the way, and eventually reach our eyes. As we explained earlier this year, dedicated ray-tracers render VFX scenes realistically enough to be inserted into live-action movies. However, all those bouncing rays saturate a GPU pretty quickly. Ray-tracing is fine for movie post-production facilities, which have hundreds of servers to render things. It's not ideal for games, though, where limited hardware must render each frame in 1/60th of a second (about 16.7 milliseconds). That's where NVIDIA's RTX comes in, as it can execute ray-tracing computations far faster than previous GPUs.

Ray-tracing won't take over how your games render graphics anytime soon. Full ray-traced rendering is still well beyond any graphics card, even NVIDIA's $10,000 Quadro RTX 8000. Mostly, ray-tracing will be used alongside existing raster engines to enhance certain effects, like reflections and shadows.

To put a number to these new specialized cores, NVIDIA is now emphasizing giga rays and RTX-OPS. While the GeForce GTX 1080 Ti puts out a mere 1.21 giga rays per second, Turing GPUs can power through 10 giga rays per second, roughly eight times as many. Meanwhile, using a hybrid measurement that combines ray-tracing and floating-point operations, the Turing-powered RTX 2080 Ti can handle 78 RTX-OPS, compared to 12 RTX-OPS for the 1000-series Pascal generation. That's the six-fold increase we've seen trumpeted around the internet. Jensen Huang said that such progress "has simply never happened before; a supercomputer replaced by one GPU in one generation."

The demos he showed off at Gamescom, especially Project Sol (above), which featured robots and an Iron Man-like suit, were the most impressive real-time ray-tracing videos we've ever seen, considering they ran on a single GPU (the $6,300 Quadro RTX 6000). However, don't expect games to look instantly better with RTX cards.
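Why hybrid rendering rather than full ray tracing? A rough budget helps. The Python sketch below spreads NVIDIA's quoted peak ray rates evenly over every pixel of a frame, assuming a 4K UHD (3840x2160) target at 60 fps and a GPU that does nothing but trace rays; both are simplifying assumptions, and the function name is our own. It also checks the giga rays and RTX-OPS ratios NVIDIA quoted.

```python
# Peak ray-tracing rates quoted by NVIDIA, in giga rays per second.
TURING_GIGA_RAYS = 10.0
GTX_1080_TI_GIGA_RAYS = 1.21


def rays_per_pixel_per_frame(giga_rays_per_s: float, width: int, height: int, fps: float) -> float:
    """Average rays per pixel per frame if the whole ray budget were spread evenly."""
    rays_per_frame = giga_rays_per_s * 1e9 / fps
    return rays_per_frame / (width * height)


# Assumed target: 4K UHD at 60 fps (a ~16.7 ms frame budget).
WIDTH, HEIGHT, FPS = 3840, 2160, 60

for name, rate in [("Turing", TURING_GIGA_RAYS), ("GTX 1080 Ti", GTX_1080_TI_GIGA_RAYS)]:
    budget = rays_per_pixel_per_frame(rate, WIDTH, HEIGHT, FPS)
    print(f"{name}: {budget:.1f} rays per pixel per frame")  # Turing: 20.1, GTX 1080 Ti: 2.4

# The headline ratios: giga rays (~8.3x) and RTX-OPS (78 vs 12, i.e. 6.5x).
print(f"Giga rays ratio: {TURING_GIGA_RAYS / GTX_1080_TI_GIGA_RAYS:.1f}x")
print(f"RTX-OPS ratio:   {78 / 12:.1f}x")
```

About 20 rays per pixel per frame, at best, sounds generous, but each pixel can need a primary ray plus shadow, reflection and bounce rays, and film renderers use far more samples per pixel. That's why ray-traced effects have to be rationed alongside rasterization.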

To see any benefit beyond the theoretical 30 to 40 percent boost, games will need to be designed specifically to take advantage of ray-tracing. None are available at the moment, and just two that we know of are coming down the pipe: Battlefield V and Shadow of the Tomb Raider. NVIDIA's RTX uses DXR (DirectX Raytracing), a new Microsoft DirectX feature that AMD also supports via its Radeon Rays feature. Microsoft has said that DXR will work just fine on current-generation and older graphics cards, so it's not a feature exclusive to RTX. However, NVIDIA has strongly implied that ray-tracing will run much better on its next-gen RTX hardware than on current models like the GTX 1080 Ti.

All that said, NVIDIA is promising that with the new card, many games will hit the long-sought 4K 60 fps benchmark, including Resident Evil 7 (66 fps), Call of Duty: WWII (93 fps) and Far Cry 5 (71 fps). For comparison's sake, Far Cry 5 can hit around 54 fps max at 4K on the GeForce GTX 1080 Ti, while Call of Duty: WWII currently maxes out at 70-80 fps at 4K on the 1080 Ti.

NVIDIA also said that its DLSS (Deep Learning Super Sampling) AI feature will boost game speeds by up to two times. To do that, the RTX Tensor cores will take over anti-aliasing (AA), which normally consumes a lot of GPU cycles and memory resources. DLSS is an NVIDIA-specific feature that will have to be implemented game by game, but NVIDIA will do it for free if developers send it their code.

There has been a lot of hype over GPU tech that never panned out as it was supposed to. Take AMD's Mantle and, most recently, GameWorks. Much of this tech didn't bring the promised gaming speed or quality improvements, yet the cards were sold in part on those features. Game-improvement features are especially suspect if they're limited to one brand or another, as appears to be the case with NVIDIA's DLSS and some RTX functions. The reason is obvious.
Game developers don't want to favor one GPU platform over another, as it stretches their already-thin resources and often results in bad press and unhappy buyers if it doesn't deliver. For instance, NVIDIA introduced GameWorks middleware to help developers create better hair, fur, physics and other effects. However, the features were optimized for NVIDIA cards, and running them on an AMD GPU often led to poor or unpredictable performance. GameWorks proved problematic even on NVIDIA's own cards. NVIDIA brought it up during the RTX launch earlier this year, but we haven't heard much about GameWorks for the RTX 2000-series cards.

Buying into a new graphics system based on unproven tech, especially something as complex as ray-tracing, is a pretty big risk. It doesn't help that the new RTX 2080 Ti is expensive and power-hungry, and doesn't offer amazing speed improvements on paper. It might indeed have a superior architecture that overcomes the specs, but it's worrying that NVIDIA spent so much time describing the ray-tracing tech and so little showing how it improves games you can play right now. It'll be great if RTX ray-tracing and AI improve future games as promised, but given the two-year-or-so hardware upgrade cycle, NVIDIA might have moved on to other things by the time those games come along.

All we've got to go on right now are the specs and NVIDIA's word about performance. Until testing and reviews make the picture clearer, you might want to wait and see how it all shakes out.

Update 8/27/18 10:16AM: Following the publication of this article, NVIDIA sent us charts showing the performance gains of the RTX 2080 over the current GTX 1080, which we've added. As we noted, the improvements are in the 30-40 percent range on average (up to 50 percent) without DLSS, and more than double with DLSS turned on. We've also included a chart showing 4K frame rates of popular games on the RTX 2080.
NVIDIA also emphasized that ray-traced rendering will be powered by Microsoft's DXR and is not an exclusive NVIDIA function.
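As a rough cross-check of the 30 to 40 percent figure, the Python sketch below works out the percentage gains implied by the 4K frame rates quoted earlier in this article: NVIDIA's numbers for the new card versus the GTX 1080 Ti figures we cited. These mix vendor claims with numbers from different test setups, so treat the results as loose estimates; the helper name is our own.

```python
def pct_gain(new_fps: float, old_fps: float) -> float:
    """Percentage frame-rate increase of `new_fps` over `old_fps`."""
    return (new_fps / old_fps - 1) * 100


# NVIDIA's quoted 4K figure for the new card vs. the GTX 1080 Ti figure cited above.
far_cry_5 = pct_gain(71, 54)

# The GTX 1080 Ti manages 70-80 fps in Call of Duty: WWII at 4K, so compute a range.
cod_low, cod_high = pct_gain(93, 80), pct_gain(93, 70)

print(f"Far Cry 5:          +{far_cry_5:.0f}%")                    # +31%
print(f"Call of Duty: WWII: +{cod_low:.0f}% to +{cod_high:.0f}%")  # +16% to +33%
```

Those gains land at or below the low end of NVIDIA's claimed 30 to 40 percent range without DLSS.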
