How HoloLens is helping advance the science of spaceflight

Microsoft's AR headset is making an impact both on and off the planet.

AR headsets haven't exactly caught on with the general public -- especially after the Google Glass debacle. Mixed reality technology has garnered a sizable amount of interest in a variety of professional industries, though, from medicine and education to design and engineering. Since 2015, the technology has even made its way into aerospace, where NASA and its partners have leveraged Microsoft's HoloLens platform to revolutionize how spacecraft are constructed and how astronauts perform their duties while in orbit.

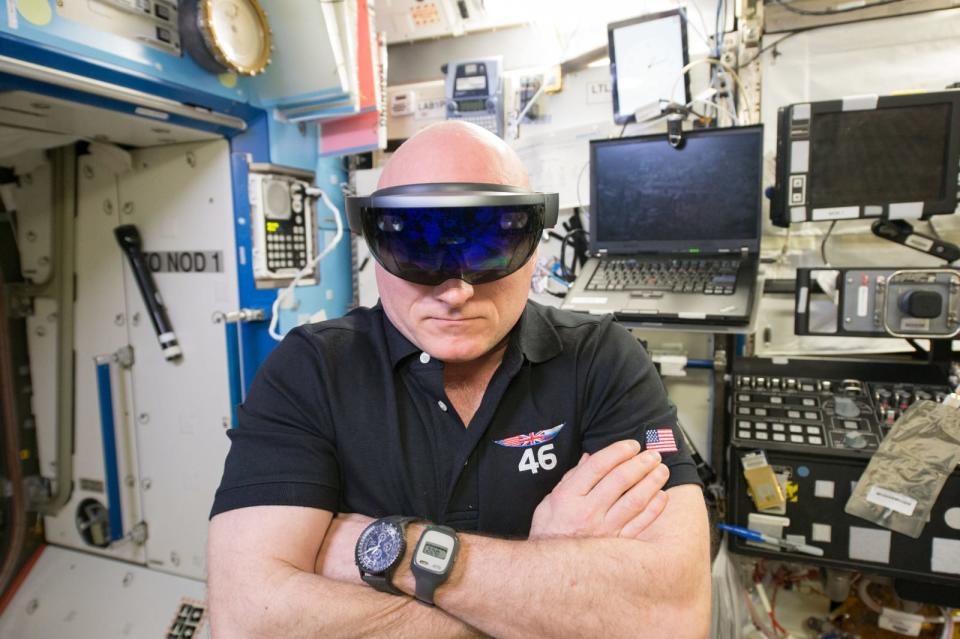

Microsoft and NASA's partnership began on June 28th, 2015, as part of Project Sidekick when a SpaceX supply rocket docked with the ISS and delivered the headsets to the waiting astronauts. "HoloLens and other virtual and mixed reality devices are cutting edge technologies that could help drive future exploration and provide new capabilities to the men and women conducting critical science on the International Space Station," Sam Scimemi, director of the ISS program at NASA, said in a 2015 press release. "This new technology could also empower future explorers requiring greater autonomy on the journey to Mars."

Aboard the ISS, crews used the HoloLens' "Remote Expert Mode" in many of their tasks. Remote Expert connects the wearer with an Earth-based technician from the flight control team via Skype, allowing the technician to see what the astronaut is seeing and advise accordingly. The headsets could also be deployed in Procedure Mode, which played locally stored animated holographic illustrations for times when an expert wasn't available.

Project Sidekick was short-lived, running only until the following March. However, a few months later in the summer of 2016, NASA's Kennedy Space Center Visitor Complex in Florida launched "Destination: Mars," a mixed reality guided tour of the Red Planet narrated by none other than Buzz Aldrin. Visitors were taken on a walking tour of several Martian sites using images captured by the Curiosity Mars Rover.

"This experience lets the public explore Mars in an entirely new way. To walk through the exact landscape that Curiosity is roving across puts its achievements and discoveries into beautiful context," Doug Ellison, visualization producer at JPL, said in a press release at the time. The OnSight application, which actually stitched those captured images together, went on to win NASA's 2018 Software of the Year award.

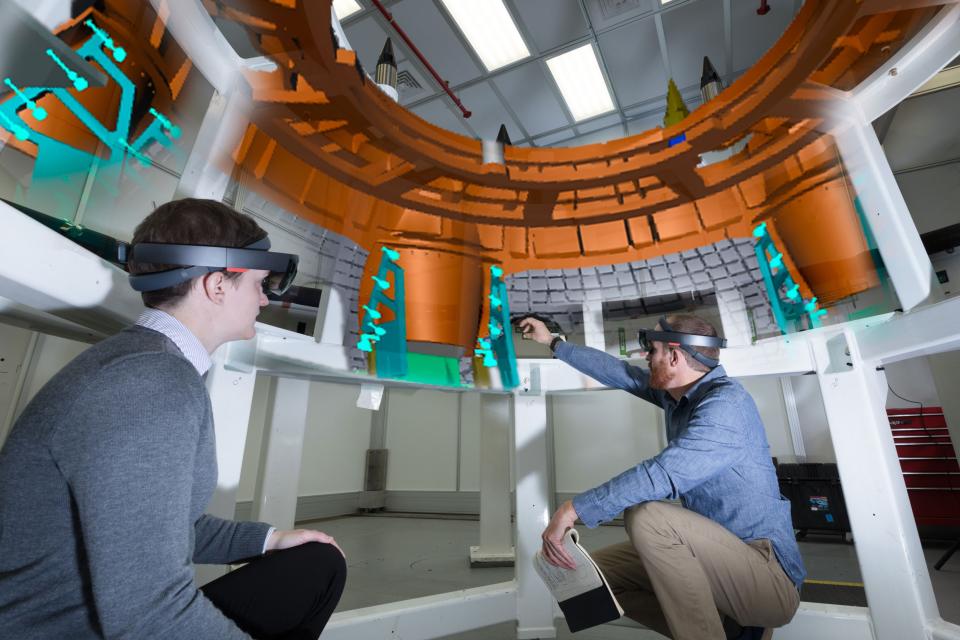

Augmented reality has also found its way into spacecraft design and production with incredible results. Take the Orion Multi-Purpose Crew Vehicle, for example. It's currently being developed by NASA and the ESA and built by Lockheed Martin. The four-person crew capsule is designed to ride atop the Space Launch System during the Artemis lunar exploration missions as well as on missions to Mars.

It's also a fantastically complex piece of engineering. The Orion's assembly manual alone is a 1,500-page behemoth, requiring technicians to constantly flip back and forth between the instructions and the task at hand. But that's where the HoloLens comes in.

"Manufacturing was a good place to start because it's easier to quantify what we're seeing in terms of a comparison between traditional methods and what AR helping would take," Shelley Peterson, the principal engineer for Augmented & Mixed Reality at Lockheed Martin Space, told Engadget.

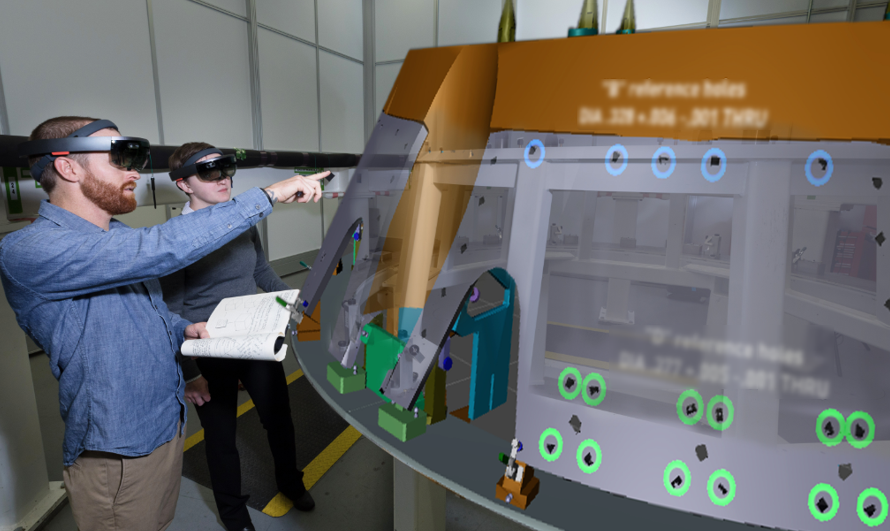

Using the same Procedure Mode as aboard the ISS, Lockheed's teams were able to drastically reduce the amount of time needed to assemble the spacecraft's various systems, cutting the time spent joining components and torquing bolts to precise specifications by 30 to 50 percent. Rather than having to thumb through the instructions to know how much torque a specific bolt requires, that information is displayed directly atop the bolt by the HoloLens, Peterson explained.

"More recently, we've been working with position alignment of objects," she continued. "It really just changes things when you can see within your environment where you're needing to place an object, instead of having to measure or use other methods. It's a fantastic way to represent the data."

What used to take a technician a full 8-hour shift to complete can now be done in 15 minutes, Peterson said. What would take a pair of technicians three days to do can now be done by a single technician in two and a half hours. "At Kennedy Space Center, we had an activity that normally takes eight shifts," Peterson said. "They completed it in six hours."

The HoloLens doesn't just reduce the amount of time (and money) spent putting the Orion together, it also helps to mitigate uncertainty in the manufacturing process and prevent costly mistakes. "If [the technicians are] trying to interpret a 2D drawing or 3D model on a 2D screen, and make that mental translation to what it means to the object in the room, there's still some questions," Peterson explained, "and they'd like to be absolutely certain when they're working on the spacecraft."

Peterson also points to the headsets' ease of use. A technician typically needs less than half an hour to orient themselves with the system before jumping into their tasks. "They're able to put it on and just start working," she continued. The current iteration of the HoloLens is still a bit heavy to be worn all day, though technicians can wear it for up to three hours before tiring, or simply pop the headset on and off as needed throughout the day.

The only major sticking point that Peterson notes is the difficulty in entering data. "We need a better way to type or to take the place of typing -- voice doesn't quite do it just yet," Peterson said. "There's times where we need to enter data, or capture data as we're working and they have to move across to a Bluetooth keyboard." That takes the technician out of their workflow, which is what the HoloLens was designed to minimize in the first place.

Lockheed isn't the only organization leveraging AR technology in its manufacturing process. Rival aerospace company BAE has also paired with Microsoft, using HoloLens to eliminate the need for paper assembly manuals in its electric bus division, while a team of researchers from Johns Hopkins University Applied Physics Laboratory (APL) has used the tech to design its Dragonfly rotorcraft lander.

The Dragonfly will be heading deep into our solar system when it launches towards Saturn's moon, Titan, in 2025. It'll take a whopping nine years to get to the moon's surface but once there, the Dragonfly's exploration will help unlock the mysteries of our home system and maybe even -- fingers crossed -- give us our first glimpse at extraterrestrial life.
