The robots of war: AI and the future of combat

Before we start fighting with robots, we have to learn to trust them.

The 1983 film WarGames portrayed a young hacker tapping into NORAD's artificial-intelligence-driven nuclear weapons system. When the hit movie was screened for President Reagan, it prompted the commander in chief to ask if it were possible for the country's defense system network to be compromised. Turns out it was. What they didn't talk about was the science fiction of using AI to control the nation's nuclear arsenal. It was too far-fetched to even be considered. Until now.

At Def Con, seven AI bots were pitted against one another in a game of capture the flag. The DARPA-sponsored event, the Cyber Grand Challenge, was more than just a fun exercise for hackers. It was meant to get more researchers and companies to focus on autonomous artificial intelligence. As part of the Department of Defense (DoD), DARPA is tasked with making sure the United States is at the forefront of this emerging field.

While the country may currently be mired in ground wars against insurgents and extremist groups, the DoD is looking at future skirmishes. The department's long-term artificial intelligence plans are focused more on conflicts with countries like Russia, China and North Korea than on terrorism. "Across the military services and the leadership we see people really starting to focus on the next generation of capabilities if we are to deter -- and defeat if necessary -- not the kind of terrorist organizations we've been dealing with but the peer adversaries," DARPA Director Arati Prabhakar said.

With that in mind, the Department of Defense has introduced its third offset strategy. When faced with new tactical issues, the United States comes up with plans to stay ahead of its adversaries. The military's first offset strategy was the buildup of nuclear arms. The second was the creation of smarter missiles and more reliance on reconnaissance and espionage. The third is a long-term plan that focuses on cyberwarfare, autonomy and how humans and machines will work together on the battlefield. We've entered into an AI arms race. "Our intelligence suggests that our adversaries are already contemplating this move. We know that China is investing heavily in robotics and autonomy. The Russian Chief of the General Staff (Valery) Gerasimov recently said that the Russian military is preparing to fight on a roboticized battlefield," Deputy Defense Secretary Bob Work said during a National Security Forum talk.

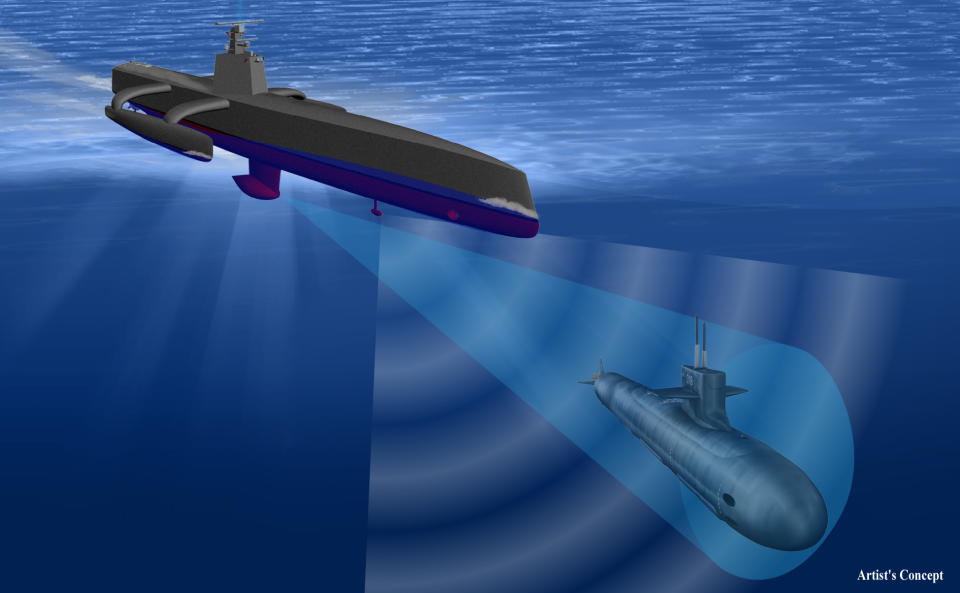

Although drone strikes are currently piloted by humans thousands of miles away from a target, in the future, these unmanned craft (airborne, undersea and ground-based) will be largely autonomous and probably part of a swarm that's simply overseen by humans. DARPA is already researching two self-flying devices. One is the CODE (Collaborative Operations in Denied Environment) program, which aims to create an autonomous aircraft that can be used in hostile airspace not just for reconnaissance but also for airstrikes. The other is the Gremlins fleet of small aircraft that can be deployed and retrieved mid-air.

But to keep that horde of flying robots in the air, the DoD will need to work on making sure they can consistently talk to each other. To that end, DARPA has introduced the Spectrum Collaboration Challenge. As in the Cyber Grand Challenge, multiple teams will compete, this time to build a machine-learning solution for radio-frequency scarcity by predicting what other RF devices and potential enemies are doing and figuring out how best to use the available spectrum.

While the government agency is offering cash prizes for the teams that help come up with a solution, DARPA is also working on the more impressive-sounding Behavioral Learning for Adaptive Electronic Warfare (BLADE) program. That research will be "developing the capability to counter new and dynamic wireless communication threats in tactical environments."

It's a two-pronged approach to solving something that's going to be key to keeping communications open between everything on the battlefield. But neither will be ready for battle anytime soon.

Prabhakar said that while AI is extremely powerful, it's also limited in some very important ways. Even though statistical systems like image recognition are currently better than humans at some tasks, they're far from perfect and "when they make mistakes, they make mistakes that no human would make." If a system goes awry, there's no underlying theory to explain why. "You don't understand what's happening," Prabhakar told Engadget.

There's also the issue of faith. At what point does the military decide to deploy something that it might not fully understand? DARPA and the DoD are trying to figure this out. "Knowing how much and in what circumstances to trust a system are some really big questions," Prabhakar said.

Even if you trust the AI infrastructure that's been created and believe it'll do everything it's supposed to, it could still be hacked. "I don't think people fully understand yet what it means to deceive these systems," Prabhakar said. In addition to trust, DARPA thinks a lot about the potential for artificial intelligence to be hacked or tricked into veering from its mission.

A rogue drone or smart gun that's been hacked could be devastating. It could alter the course of a mission or, worse, turn on its human counterparts. Which brings us back to the DARPA Cyber Grand Challenge and its bots that find and fix vulnerabilities. They're an important part of a giant puzzle of technology that'll pit robot against robot with human helpers.

The future of warfare will be filled with AI and robots, but it'll be more than just autonomous drones clashing on the battlefield. It'll include humans and computers working together to attack and defend military systems. More importantly, it'll be a world where whoever builds the best artificial intelligence will emerge the victor.