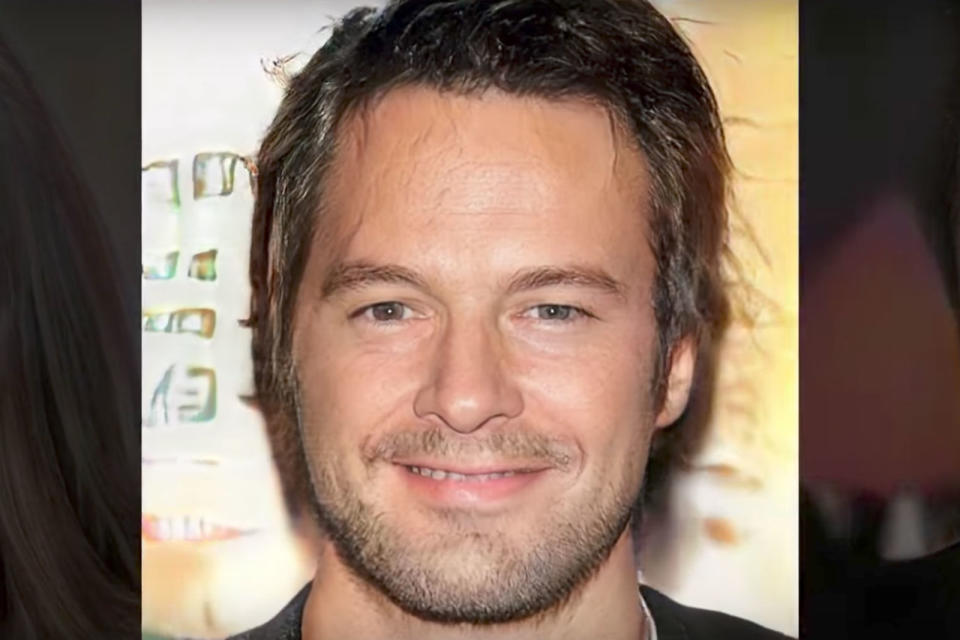

Neural network creates photo-realistic images of fake celebs

The results can be a bit nightmarish at times.

While Facebook and Prisma tap AI to transform everyday images and video into flowing artworks, NVIDIA is aiming for all-out realism. The graphics card maker just released a paper detailing its use of a generative adversarial network (GAN) to create high-definition photos of fake humans. The results, as illustrated in an accompanying video, are impressive and creepy in equal measure.

GANs have been used by Google (as part of its DeepDream experiment) and artist Mike Tyka in the past, but never like this. The tech pits two neural networks against each other, which in this case saw one algorithm act as the image generator and the other as the discriminator (whose job it is to compare those images to real-world samples). Think of it as an artist-critic collab that works in tandem to modify and improve the results.
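For the curious, the artist-critic loop can be sketched in a few lines of Python. This is a toy under loud assumptions, not NVIDIA's code: the "images" are single numbers, the generator and discriminator are each a two-parameter formula, and every name here (`gan_step`, `train`, the learning rate, the target mean of 4.0) is made up for illustration.

```python
import math
import random


def sigmoid(t):
    """Numerically stable logistic function, returns a value in (0, 1)."""
    if t >= 0:
        return 1.0 / (1.0 + math.exp(-t))
    e = math.exp(t)
    return e / (1.0 + e)


def gan_step(params, real, z, lr=0.01):
    """One critic update followed by one artist update.

    params = (a, b, w, c) where (hypothetical toy models):
      generator:      fake = a * z + b
      discriminator:  D(x) = sigmoid(w * x + c), the "looks real" score
    """
    a, b, w, c = params
    fake = a * z + b

    # Critic: minimise -log D(real) - log(1 - D(fake)).
    d_real = sigmoid(w * real + c)
    d_fake = sigmoid(w * fake + c)
    w -= lr * (-(1.0 - d_real) * real + d_fake * fake)
    c -= lr * (-(1.0 - d_real) + d_fake)

    # Artist: minimise -log D(fake), i.e. try to fool the updated critic.
    d_fake = sigmoid(w * fake + c)
    grad_fake = -(1.0 - d_fake) * w
    a -= lr * grad_fake * z
    b -= lr * grad_fake
    return (a, b, w, c)


def train(steps=2000, seed=0):
    """Run the loop on made-up 'real' samples drawn around 4.0."""
    rng = random.Random(seed)
    params = (1.0, 0.0, 0.1, 0.0)
    for _ in range(steps):
        real = rng.gauss(4.0, 0.5)   # stand-in for a real photo
        z = rng.gauss(0.0, 1.0)      # random noise fed to the generator
        params = gan_step(params, real, z)
    return params
```

Each call to `gan_step` first nudges the critic toward telling real from fake, then nudges the artist toward whatever the critic currently rates as real. Real GANs swap these two-parameter formulas for deep networks, and they can be finicky to train, so this toy may well oscillate rather than settle.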

NVIDIA took things a step further by using a "progressive" method that began the GAN's tuition on low-res images, and then worked upwards to HD. "This both speeds the training up and greatly stabilizes it, allowing us to produce images of unprecedented quality," wrote the company of its approach. But instead of using regular people, the firm sourced its images from the CelebA-HQ database of famous faces. The results look like a creepy meld of A-list mugs that will haunt your dreams. Yet, there's no denying that many of them also look photo-realistic. Mission accomplished, then. The method also threw up some solid generation of objects and scenery, as displayed in the clip above.
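The low-res-to-HD idea can also be sketched in Python. The specifics are assumptions that go beyond what's described above: a 4x4 starting size that doubles up to 1024x1024, and a simple blend that eases each new, sharper layer in rather than switching it on abruptly. The function names are hypothetical, and images are plain nested lists rather than real tensors.

```python
def resolution_schedule(start=4, final=1024):
    """List the square image sizes visited, doubling at each stage."""
    sizes = []
    res = start
    while res <= final:
        sizes.append(res)
        res *= 2
    return sizes


def upsample2x(img):
    """Nearest-neighbour 2x upsampling of a 2D list of pixel values."""
    out = []
    for row in img:
        wide = [v for v in row for _ in range(2)]  # repeat each pixel twice
        out.append(wide)
        out.append(list(wide))                     # repeat each row twice
    return out


def fade_in(old_low_res, new_high_res, alpha):
    """Blend the upsampled old output with the new layer's output.

    alpha = 0.0 keeps only the old (blurry) image; alpha = 1.0 keeps only
    the new layer. Ramping alpha from 0 to 1 eases the new layer in.
    """
    up = upsample2x(old_low_res)
    return [
        [(1 - alpha) * u + alpha * n for u, n in zip(up_row, new_row)]
        for up_row, new_row in zip(up, new_high_res)
    ]
```

Under these assumptions the training loop would walk through `resolution_schedule()`, raising `alpha` gradually at each new size so the network never faces a sudden jump in detail.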