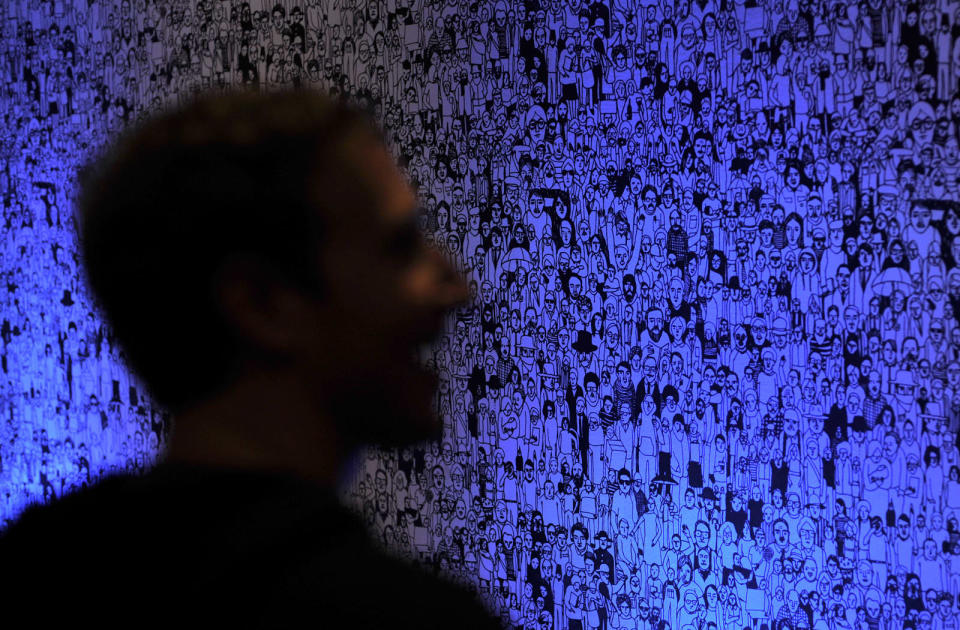

Facebook feigns accountability with ‘trusted’ news survey

A simple survey with useless answers is apparently the answer to fake news.

When Facebook announced earlier this month that it was rolling out a major overhaul to its News Feed, it did so with the intention of prioritizing interactions between people over content from publishers. It was a notable shift in strategy for the company, which for the past couple of years had been working closely with news outlets to heavily promote their articles and videos. But Facebook discovered that people just weren't happy on the site, likely due to the vast amount of political flame-throwing they've been exposed to since the 2016 US Presidential election. So to alleviate the problem, it decided it was best if users saw more posts from friends and family, instead of news that could have a negative effect on their emotions. Because keeping people both happy and informed is, apparently, hard.

Unfortunately, Facebook's solution to this problem doesn't seem to be the best one. Last week, it said it plans to put front and center only links from outlets that users deem "trustworthy." That just proves Facebook would rather put the responsibility for policing misinformation on the community instead of on itself. This is concerning because Facebook is, essentially, letting people's biases dictate how its algorithms perceive outlets.

The full Facebook news trustworthiness survey. It its entirety. https://t.co/bd0qkkXGgN pic.twitter.com/oUvTZLNiyB (Alex Kantrowitz, @Kantrowitz, January 23, 2018)

As reported by BuzzFeed News, Facebook has a "trusted" news source survey that consists of two simple questions: "Do you recognize the following websites?" and "How much do you trust each of these domains?" The first question accepts only "yes" or "no," while the second lets you answer "entirely," "a lot," "somewhat," "barely" or "not at all." That hardly seems like the best or most thorough way to judge editorial integrity. On top of that, the survey doesn't account for personal biases, which leaves the system wide open to abuse. This will only encourage people to keep living in a bubble of their own creation, where there's no room for information or opinions that challenge their worldview.

Facebook seems to think its survey's strength is that it's simple and straightforward, but that's exactly why it's so misguided. There's no room for nuance or additional context. How many people will say they don't trust the New York Times or CNN simply because President Donald Trump calls them "Fake News" any chance he gets? Sure, those particular outlets shouldn't have any problem being recognized as legit, but even labeling them as such doesn't seem to be a responsibility Facebook is willing to take on. A Facebook spokesperson told Engadget that Facebook is a platform for people to "gain access to an ideologically diverse set of views," adding the following:

We surveyed a vast, broadly representative range of people (which helps -- among other measures -- to prevent the gaming-of-the-system or abuse issue you noted) within our Facebook community to develop the roadmap to these changes -- changes that are not intended to directly impact any specific groups of publishers based on their size or ideological leanings.

Instead, we are making a change so that people can have more from their favorite sources and more from trusted sources. I'd also add that this is one of many signals that go into News Feed ranking. We do not plan to release individual publishers' trust scores because they represent an incomplete picture of how each story's position in each person's feed is determined.

"There's too much sensationalism, misinformation and polarization in the world today," Zuckerberg said in a Facebook post announcing the News Feed changes. "Social media enables people to spread information faster than ever before, and if we don't specifically tackle these problems, then we end up amplifying them. That's why it's important that News Feed promotes high quality news that helps build a sense of common ground."

The problem is that, by letting its users control how the system works, Facebook may actually end up amplifying the fake news bubble it helped create. Facebook users were instrumental in spreading misinformation and Russian propaganda during the 2016 US Presidential election. According to the company's own data, more than 125 million Americans were exposed to Kremlin-sponsored pages on Facebook.

"The hard question we've struggled with is how to decide what news sources are broadly trusted in a world with so much division," Zuckerberg added. He said that Facebook could try to make that decision itself, but that "that's not something we're comfortable with."

Zuckerberg said Facebook also considered asking outside experts for help, but apparently he wasn't okay with that either, because it would take the decisions out of Facebook's hands and "would likely not solve the objectivity problem." Instead, Facebook chose to rely on the community's feedback to rank publishers. You know, the same community that was responsible for sharing phony headlines like "FBI Agent Suspected in Hillary Email Leaks Found Dead in Apparent Murder-Suicide."

Facebook told Engadget that this isn't designed to be a voting system, and that people can't volunteer to weigh in on how trustworthy a news outlet is. Instead, Facebook will survey a random sample of people and ensure there's diversity among those who participate, meaning the answers will come from Democrats, Republicans and users without a party affiliation. And even if some people respond based on their political ideology, Facebook said this won't skew any given publisher's ranking, because no single group can sway the results on its own.

The company suggested that labeling publications appropriately won't be an issue, because it only assigns a publication a trust value if many different groups of people agree that it is trusted or distrusted. Still, it's hard to imagine exactly how this will work as Facebook hopes, especially in a country as obviously divided as the US.

Either way, Facebook clearly seems to be having an identity crisis. And it raises the question: Why can't a company worth billions of dollars, and with so much influence, come up with a better solution? Yes, Zuckerberg said that Facebook didn't feel comfortable leaving the objectivity decisions up to outside experts, but how is it any better to let users be the judge? Surely there's a third option in which Facebook takes some responsibility (perhaps with third-party help) and builds something more robust than a useless survey.