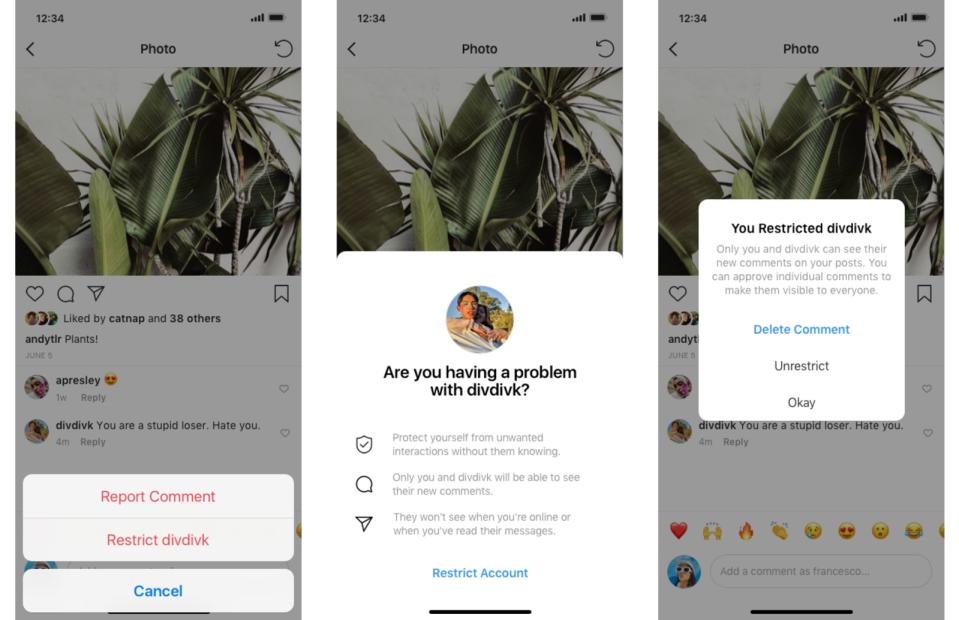

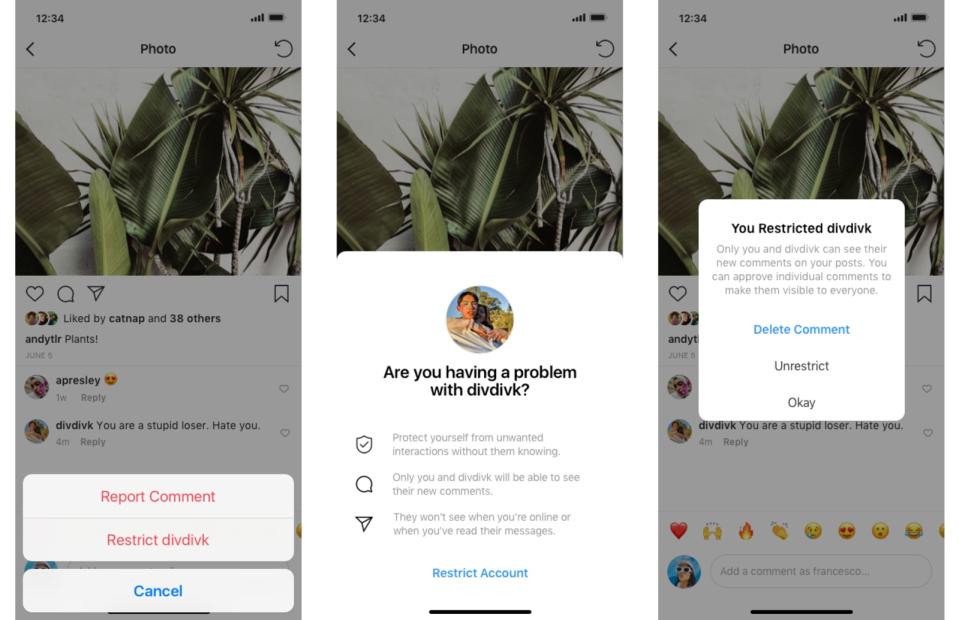

Instagram’s anti-bullying tool lets you ‘restrict’ problematic followers

Its AI will warn users before they post offensive comments.

Today, Instagram announced two new tools meant to combat bullying. The first will use AI to warn users if a comment they're about to post may be considered offensive. In theory, it will give users a chance to rethink their comments. The second will allow users to "restrict" problematic followers. Comments by restricted followers won't appear publicly (unless you approve them), and users on your restricted list won't be able to see when you're active or when you've read their direct messages.

The tools are part of Instagram's ongoing efforts to fight bullying, which have received more attention since Adam Mosseri took over as Head of Instagram late last year. "We are committed to leading the industry in the fight against online bullying, and we are rethinking the whole experience of Instagram to meet that commitment," Mosseri wrote in a blog post today.

Instagram has experimented with filtering out negative comments in the past, and it's used machine learning to combat bullying in photos. It's also experimented with changes like hiding "like" counts from people looking at your posts. While taking a stand against bullying is noble, it's also good for business. As Mosseri told Time, bullying "could hurt our reputation and our brand over time." Mosseri acknowledges that he'll have to walk a fine line and not alienate users or "overstep," but he told Time that Instagram is willing to "make decisions that mean people use Instagram less, if it keeps people more safe."