SIGGRAPH


NVIDIA's Turing-powered GPUs are the first ever built for ray tracing

Earlier this year NVIDIA announced a new set of "RTX" features that included support for advanced ray tracing, a graphics technique that simulates the way light behaves in the real world. It's expected to usher in a new generation of hyper-realistic graphics, but there was one small problem: no one had built hardware to support it yet. Now, at the SIGGRAPH conference, NVIDIA CEO Jen-Hsun Huang has revealed eighth-generation Turing GPU hardware that's actually capable of accelerating both ray tracing and AI. NVIDIA says Turing can render ray-traced scenes 25x faster than the previous Pascal architecture, thanks to dedicated processors that do the math on how light and sound travel through 3D environments. They're also the first graphics cards announced with Samsung's new GDDR6 memory on board, which moves data faster while using less power than ever before.
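For anyone wondering what "doing the math on how light travels" means in practice, the core operation of a ray tracer is testing where a ray hits scene geometry, repeated millions of times per frame. The sketch below is textbook geometry, not NVIDIA's hardware design or any RTX API: `ray_sphere_hit` is a hypothetical helper, and real GPUs mostly test rays against triangles organized in a bounding volume hierarchy rather than against spheres.

```python
import math

def ray_sphere_hit(origin, direction, center, radius):
    """Return the distance t along the ray to the nearest hit, or None on a miss.

    Solves |origin + t*direction - center|^2 = radius^2, which expands to
    the quadratic a*t^2 + b*t + c = 0, and keeps the smallest t >= 0.
    """
    oc = [o - c for o, c in zip(origin, center)]
    a = sum(d * d for d in direction)
    b = 2.0 * sum(d * v for d, v in zip(direction, oc))
    c = sum(v * v for v in oc) - radius * radius
    disc = b * b - 4.0 * a * c
    if disc < 0:
        return None  # the ray misses the sphere entirely
    sqrt_disc = math.sqrt(disc)
    # Try the nearer root first; fall back to the farther one when the
    # ray starts inside the sphere.
    for t in ((-b - sqrt_disc) / (2.0 * a), (-b + sqrt_disc) / (2.0 * a)):
        if t >= 0:
            return t
    return None  # the sphere lies entirely behind the ray's origin

# A ray fired down the z axis at a unit sphere centered 5 units away.
print(ray_sphere_hit((0, 0, 0), (0, 0, 1), (0, 0, 5), 1.0))  # 4.0
```

A full renderer would then shade the hit point and spawn further rays toward lights and reflective surfaces, which is where the per-frame ray counts, and the appeal of dedicated hardware, come from.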

Disney’s first VR short ‘Cycles’ debuts next month

Walt Disney Animation Studios is set to share its first VR short, a film called Cycles that took four months to create. The short will debut at the Association for Computing Machinery's annual SIGGRAPH conference in August, and the team behind it hopes VR will help viewers form a stronger emotional connection with the film. "VR is an amazing technology and a lot of times the technology is what is really celebrated," director Jeff Gipson said in a statement. "We hope more and more people begin to see the emotional weight of VR films, and with Cycles in particular, we hope they will feel the emotions we aimed to convey with our story."

AI is making more realistic CG animal fur

Creating realistic animal fur has always been a vexing problem for 3D animators because of the complex way the fibers interact with light. Now, thanks to our ubiquitous friend artificial intelligence, University of California researchers have found a way to do it better. "Our model generates much more accurate simulations and is 10 times faster than the state of the art," said lead author Ravi Ramamoorthi. The result could be that very soon, you'll see more believable (and no doubt cuter) furry critters in movies, TV and video games.

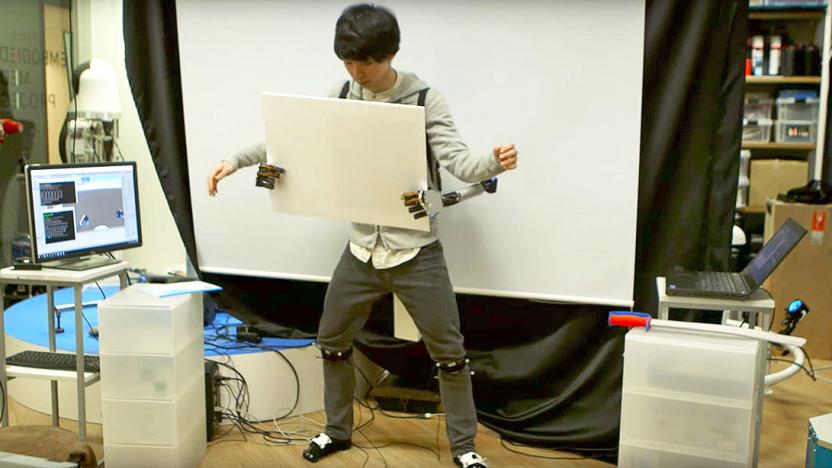

Adding a second pair of arms is as easy as putting on a backpack

There's only so much you can do with two arms and hands. That's basic science. But what if you could add extras without the need for ethically shady surgery or trading your apartment for a hovel in the shadow of a nuclear power plant? That's what researchers from Keio University and the University of Tokyo hope to achieve with their "Metalimbs" project. As the name suggests, Metalimbs are a pair of robotic metal arms, worn with a backpack of sorts, that double your number of upper limbs. And unlike the thought-powered prosthetics we've seen recently, these are controlled not with your brain but with your existing limbs. Specifically, your legs and feet.

Microsoft Research helped 'Gears of War 4' sound so good

Popping in and out of cover has been a hallmark of the Gears of War franchise since the first game came out in 2006. It hasn't changed much because it didn't need to. What's always been an issue, though, is how thin the game sounds -- a shortcoming of Unreal Engine, the underlying tech powering it. But Microsoft owns the series now and has far more money to throw at it than former owner (and Unreal Engine creator) Epic Games did. With help from Microsoft Research, The Coalition, Redmond's Gears of War studio, found a high-tech way to fix that problem. It's called Triton. Two years ago Microsoft Research's Nikunj Raghuvanshi and John Snyder presented a paper titled "Parametric Wave Field Coding for Precomputed Sound Propagation." The long and short of it is that the research detailed how to create realistic reverb effects based on the objects in a video game's map. To hear it in action, pop on a pair of headphones and watch the video below.

Disney Research has a faster way to render realistic fabrics

Computer graphics have come a long way, but there are still a few aspects that are pretty time-consuming to get right. Realistic fabric movement that reacts to gravity and other forces is one of 'em, and the folks at Disney Research have found a way to speed up life-like cloth simulations by six to eight times in certain situations. Walt's science department says that a multigrid technique, specifically smoothed aggregation, allowed it to simulate clothing worn by a main character, or fabrics in the foreground of a scene, at a much faster clip. There's an awful lot of science and equations behind the concept, but the long and short of it is that this should allow for more realistic cloth simulations that stretch and act like fabric does in the real world, and even aid in virtual try-on situations.
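Smoothed aggregation is a flavor of algebraic multigrid: it groups fine-level unknowns into aggregates, builds a smaller coarse version of the problem from them, and uses that cheap coarse solve to remove the smooth, slowly converging error that simple iterative solvers struggle with. The following is a minimal two-level sketch using NumPy. It assumes a 1D Laplacian as a stand-in for a real cloth system, aggregates of three consecutive unknowns and damped-Jacobi smoothing; it is not Disney Research's solver, and the function names are hypothetical.

```python
import numpy as np

def poisson_1d(n):
    """Tridiagonal 1D Laplacian, a toy stand-in for a cloth stiffness matrix."""
    return 2 * np.eye(n) - np.eye(n, k=1) - np.eye(n, k=-1)

def sa_prolongator(A, agg_size=3, omega=None):
    """Smoothed-aggregation prolongator P = (I - omega * D^-1 A) T.

    T is the 'tentative' prolongator: each coarse unknown owns a block of
    agg_size consecutive fine unknowns (piecewise-constant interpolation).
    One damped-Jacobi step then smooths T into better interpolation.
    """
    n = A.shape[0]
    n_coarse = -(-n // agg_size)  # ceiling division
    T = np.zeros((n, n_coarse))
    for i in range(n):
        T[i, i // agg_size] = 1.0
    Dinv_A = A / np.diag(A)[:, None]  # D^-1 A: each row scaled by its diagonal
    if omega is None:
        rho = np.max(np.abs(np.linalg.eigvals(Dinv_A)))  # spectral radius
        omega = 4.0 / (3.0 * rho)  # a common damping choice for SA
    return T - omega * Dinv_A @ T

def two_level_solve(A, b, iters=50, tol=1e-10):
    """Solve A x = b with a two-level smoothed-aggregation cycle."""
    P = sa_prolongator(A)
    A_c = P.T @ A @ P  # Galerkin coarse-grid operator
    D = np.diag(A)
    x = np.zeros(b.shape)
    for _ in range(iters):
        x += (2.0 / 3.0) * (b - A @ x) / D    # damped-Jacobi pre-smoothing
        r_c = P.T @ (b - A @ x)               # restrict residual to coarse level
        x += P @ np.linalg.solve(A_c, r_c)    # coarse-grid correction
        x += (2.0 / 3.0) * (b - A @ x) / D    # damped-Jacobi post-smoothing
        if np.linalg.norm(b - A @ x) < tol * np.linalg.norm(b):
            break
    return x

A = poisson_1d(27)
b = np.ones(27)
x = two_level_solve(A, b)
print(np.linalg.norm(b - A @ x))  # should be a tiny residual
```

Production cloth solvers recurse over many levels and work on 3D meshes, which this toy omits; the point is only that the coarse correction lets a handful of cheap smoothing sweeps do the work of many.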

New tech uses ultrasound to create haptics you can 'see' and touch

We've seen haptic feedback in mid-air before, but not quite like this. The folks from Bristol University are using focused ultrasound in a way that creates a 3D shape out of air that you can see and feel. We know what you're probably thinking: How do you see something made of air? By directing the apparatus generating it at oil. As you do. According to the school, the tech could see use in letting surgeons feel a tumor while exploring a CT scan. Or, on the consumer side of things, to create virtual knobs you could turn to adjust your car's infotainment system without taking your eyes off the road. The tech can also apparently be added to 3D displays to make something that's both visible and touchable. If you're curious about what it looks like in action, we've embedded a video just below.

Microsoft Research project turns a smartphone camera into a cheap Kinect

Microsoft's been awfully busy at this year's SIGGRAPH conference: members of the company's research division have already illustrated how they can interpret speech based on the vibrations of a potato chip bag and turn shaky camera footage into an experience that feels like flying. Look at the list of projects Microsofties have been working on long enough, though, and something of a theme appears: these folks are really into capturing motion, depth and object deformation with the help of some slightly specialized hardware.

Experimental display lets glasses wearers ditch the specs

Engadgeteers spend a lot of their day staring at a screen, so it's no surprise that nearly all of us are blind without glasses or contact lenses. But wouldn't it be great if we could give our eyes a break and just stare at the screen without the aid of corrective lenses? That's the idea behind an experimental display that automatically adjusts itself to compensate for your lack of ocular prowess, enabling you to sit back and relax without eyewear. It works by placing a light-filtering screen in front of a regular LCD display that breaks down the picture in such a way that, when it reaches your eye, the light rays are reconstructed as a sharp image. The prototype and lots more details about the method will be shown off at SIGGRAPH next month, after which its creators, a team from Berkeley, MIT and Microsoft, plan to develop a version that'll work in the home and, further down the line, with more than one person at a time.

Glasses-free 3D projector offers a cheap alternative to holograms

Holograms are undoubtedly spiffy-looking, but they're not exactly cheap; even a basic holographic projector made from off-the-shelf parts can cost thousands of dollars. MIT researchers may have a budget-friendly alternative in the future, though. They've built a glasses-free 3D projector that uses two liquid crystal modulators to angle outgoing light and present different images (eight in the prototype) depending on your point of view. And unlike some 3D systems, the picture should remain relatively vivid -- the technology uses a graphics card's computational power to preserve as much of an image's original information (and therefore its brightness) as possible.

The Daily Roundup for 07.25.2013

You might say the day is never really done in consumer technology news. Your workday, however, hopefully draws to a close at some point. This is the Daily Roundup on Engadget, a quick peek back at the top headlines for the past 24 hours -- all handpicked by the editors here at the site. Click on through the break, and enjoy.

Disney Research's AIREAL creates haptic feedback out of thin air

Disney Research is at it again. The arm of Walt's empire responsible for interactive house plants wants to add haptic feedback not to a seat cushion, but to thin air. Using a combination of 3D-printed components -- thank the MakerBots for those -- with five actuators and a gaggle of sensors, AIREAL pumps out tight vortices of air to simulate tactility in three-dimensional space. The idea is to give touchless experiences like motion control a form of physical interaction, offering the end user a more natural response through, well, touch. Like most of the lab's experiments, this has been in the works for a while, and the chances of it being used outside of Disney World anytime soon are probably slim. AIREAL will be on display at SIGGRAPH in Anaheim from Sunday to Wednesday this week. Didn't register? Check out the video after the break.

Fabricated: Scientists develop method to synthesize the sound of clothing for animations (video)

Developments in CGI and animatronics might be getting alarmingly realistic, but the audio that goes with them often still relies on manual recordings. A pair of associate professors and a graduate student from Cornell University, however, have developed a method for synthesizing the sound of moving fabrics, such as rustling clothes, for use in animations and thus, potentially, film. The process, presented at SIGGRAPH but only now reported to the public, looks at two components of the natural sound of fabric: cloth moving against cloth, and crumpling. After modeling the energy and pattern of these two components, the system creates an approximation of the sound, which acts as a kind of "road map" for the final audio. The end result is produced by breaking that map down into much smaller fragments, which are then matched against a database of similar snippets of real field-recorded audio. The team even included binaural recordings to give a first-person perspective for headphone wearers. The process is still overseen by a human sound engineer, who selects the appropriate type of fabric and oversees the way sounds are matched, meaning it's not quite ready for prime time. That's understandable, really, as this is still a proof of concept, with real-time operation and other improvements penciled in for future iterations. What does a virtual sheet being pulled over an imaginary sofa sound like? Head past the break to hear it in action, along with a presentation of the process.
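The fragment-matching step resembles what audio engineers call concatenative synthesis. As a rough sketch under our own assumptions (energy-envelope features, greedy nearest-neighbour search, a fixed fragment length and linear crossfades are all simplifications, not necessarily the Cornell team's choices), it might look like this with NumPy; `match_fragments` and `assemble` are hypothetical names.

```python
import numpy as np

def match_fragments(target_env, database, frag_len):
    """Greedy nearest-neighbour matching of 'road map' fragments.

    target_env: 1D array, the synthesized energy envelope (the road map).
    database:   2D array, one row per recorded snippet's energy envelope,
                each row frag_len samples long.
    Returns, for each fragment of the road map, the index of the closest
    recorded snippet by squared Euclidean distance.
    """
    n_frags = len(target_env) // frag_len
    chunks = target_env[: n_frags * frag_len].reshape(n_frags, frag_len)
    dists = ((chunks[:, None, :] - database[None, :, :]) ** 2).sum(axis=2)
    return dists.argmin(axis=1)

def assemble(indices, clips, fade=32):
    """Concatenate the chosen recordings, crossfading linearly at each seam."""
    out = clips[indices[0]].astype(float).copy()
    ramp = np.linspace(0.0, 1.0, fade)
    for i in indices[1:]:
        clip = clips[i].astype(float)
        # Blend the tail of what we have with the head of the next clip.
        out[-fade:] = out[-fade:] * (1 - ramp) + clip[:fade] * ramp
        out = np.concatenate([out, clip[fade:]])
    return out
```

In use, `match_fragments` picks which field recordings to play for each stretch of the road map, and `assemble` stitches the corresponding audio clips together; in the real system a sound engineer still supervises which fabric recordings are eligible.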

Watch Square Enix's next-gen engine get a real-time demo at SIGGRAPH

The three-plus minutes of Square Enix's next-gen Luminous Engine that debuted during E3 may not have been enough for your hungry eyes. Well, good thing for you, Eric Carmen, that Square showed an even nerdier walkthrough of its engine demo during a presentation at SIGGRAPH 2012. 4Gamer captured the dazzling video above, in which ridiculously detailed changes are made to the engine's effects on the fly. Now all Square Enix needs to do is build a game in the engine and we're all set.

SIGGRAPH 2012 wrap-up

Considering that SIGGRAPH focuses on visual content creation and display, there was no shortage of interesting elements to gawk at on the show floor. From motion capture demos to 3D objects printed for Hollywood productions, there was plenty of entertainment at the Los Angeles Convention Center this year. Major product introductions included ARM's Mali-T604 GPU and a handful of high-end graphics cards from AMD, but the highlight of the show was the Emerging Technologies wing, which played host to a variety of concept demonstrations, gathering top researchers from institutions like the University of Electro-Communications in Tokyo and MIT. The exhibition has come to a close for the year, but you can catch up with the show floor action in the gallery below, then click on past the break for links to all of our hands-on coverage, direct from LA.

Colloidal Display uses soap bubbles, ultrasonic waves to form a projection screen (hands-on video)

If you've ever been to an amusement park, you may have noticed ride designers using some non-traditional surfaces as projection screens -- the most common example being a steady stream of artificial fog. Projecting onto transparent substances is a different story, however, which made this latest technique a bit baffling, to say the least. Colloidal Display, developed by Yoichi Ochiai, Alexis Oyama and Keisuke Toyoshima, uses bubbles as an incredibly thin projection "screen," regulating the film's properties, such as reflectance, with ultrasonic sound waves from a nearby speaker. The bubble liquid is made from a mixture of sugar, glycerin, soap, surfactant, water and milk, which the designers say produces bubbles that don't pop easily. Still, during their SIGGRAPH demo, a motor dunked the wands in the solution and replaced the bubble every few seconds. A standard projector aimed at the bubble creates an image that appears to float in the air. And because the bubbles are transparent, they can be stacked to simulate a 3D image. You can also use the same display to project completely different images that fade in and out of view depending on your angle relative to the bubble. There is a tremendous amount of distortion, however, because the screen is a liquid that remains in a fluid state. Because the bubbles must be constantly refreshed and the screen itself is unstable, the project, which is merely a proof of concept, couldn't be deployed without significant modification. Ultimately, the designers hope to create a film that offers similar transparent properties but with a more solid, permanent composition. For now, you can sneak a peek at the first iteration in our hands-on video after the break.

Stuffed Toys Alive! replaces mechanical limbs with strings for a much softer feel (hands-on)

It worked just fine for Pinocchio, so why not animatronic stuffed bears? A group of researchers from the Tokyo University of Technology are on hand at SIGGRAPH's Emerging Technologies section this week to demonstrate "Stuffed Toys Alive!," a new type of interactive toy that replaces the rigid plastic infrastructure used today with a seemingly simple string pulley-based solution. Several strings are installed at different points within each of the cuddly gadget's limbs, then attached to a motor that pulls the strings to move the fuzzy guy's arms while also registering feedback, letting it respond to touch as well. There's not much more to it than that -- the project is ingenious but also quite simple, and it's certain to be a hit amongst youngsters. The obligatory creepy hands-on video is waiting just past the break.

Chilly Chair uses static electricity to raise your arm hair, force an 'emotional reaction' (hands-on video)

Hiding in the back of the SIGGRAPH Emerging Technologies demo area -- exactly where such a project might belong -- is a dark wood chair that looks anything but innocent. Created by a team at the University of Electro-Communications in Tokyo, Chilly Chair, as it's called, may be named for the chilling feeling the device is tasked with evoking. After signing a liability waiver, attendees are invited to pop a squat and rest their arms atop a cool, flat metal platform hidden beneath a curved sheath that looks like something you might expect to see in Dr. Frankenstein's lab, not a crowded corridor of the Los Angeles Convention Center. Once powered up, the ominous-looking contraption serves to "enrich" the experience as you consume different forms of media, be it watching a movie or listening to some tunes. It works by using a power source to pump 10 kV of juice to an electrode, which then polarizes a dielectric plate, causing it to attract your body hair. After signing our life away with the requisite waiver, we sat down and strapped in for the ride. Despite several minutes of build-up, the entire experience concluded in what seemed like only a few seconds. A projection screen in front of the chair lit up to present a warning just as we felt the hairs jet directly towards the sheath above. By the time we rose, there was no visual evidence of the previous state, though we have no doubt that the Chilly Chair succeeded in raising hair (note: the experience didn't come close to justifying the exaggerated reaction you may have noticed above). It's difficult to see how this could be implemented in future home theater setups, especially considering all the extra hardware currently required, but it could potentially add another layer of immersion to those novelty 4D attractions we can't seem to avoid during visits to the amusement park. You can witness our Chilly Chair experience in the hands-on video after the break.

MIT Media Lab's Tensor Displays stack LCDs for low-cost glasses-free 3D (hands-on video)

Glasses-free 3D may be the next logical step in TV's evolution, but we have yet to see a convincing device make it to market that doesn't come with a five-figure price tag. The sets that do come within range of tickling our home theater budgets won't blow you away, and it's not unreasonable to expect that trend to continue through the next few product cycles. A dramatic adjustment in our approach to glasses-free 3D may be just what the industry needs, so you'll want to pay close attention to the MIT Media Lab's latest brew. Tensor Displays combine layered low-cost panels with some clever software that assigns and alternates the image at a rapid pace, creating depth that actually looks fairly realistic. Gordon Wetzstein, one of the project's creators, explained that the solution essentially "(takes) the complexity away from the optics and (puts) it in the computation," and since software solutions scale far more easily than their hardware equivalents, the Tensor Display concept could result in less expensive, yet superior, 3D products. We caught up with the project at SIGGRAPH, where the first demonstration used four fixed images that employed a similar concept to the LCD version, but with backlit inkjet prints instead of motion-capable panels. With each displaying a slightly different static image, the transparencies were stacked to give the appearance of depth without the typical cost. The version that shows the most potential, however, consists of three stacked LCD panels, each displaying a slightly different pattern that flashes back and forth four times per frame of video, creating a three-dimensional effect that appears smooth and natural. The result was certainly more tolerable than the glasses-free 3D we're used to seeing, though it's surely a long way from being a viable replacement for active-glasses sets -- Wetzstein said that the solution could make its way to consumers within the next five years.
Currently, the technology works best in a dark room, where it's able to present a consistent image. Unfortunately, this meant the light levels around the booth were a bit dimmer than what our camera required, resulting in the underexposed, yet very informative hands-on video you'll see after the break.
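The core idea behind stacked-LCD displays like this one is multiplicative: light from the backlight passes through each panel in turn, so a ray's brightness is the product of the transmittances it crosses, and because the panels flicker through several patterns per video frame, your eye averages those products over time. This sketch of that forward model rests on simplifications we're assuming (1D panels, integer pixel shifts standing in for viewing angles, a hypothetical `perceived_view` function). It does not cover the hard part, choosing the layer patterns, which the MIT team reportedly tackles with nonnegative tensor factorization.

```python
import numpy as np

def perceived_view(layers, slope):
    """Brightness seen along rays of one viewing angle through stacked panels.

    layers: array shaped (frames, n_layers, n_pixels) of transmittances in [0, 1].
    slope:  integer pixel shift per layer, standing in for the viewing angle.
    Each ray's brightness is the product of the transmittances it passes
    through; the eye then averages that product over the time frames.
    """
    frames, n_layers, n_pixels = layers.shape
    mid = n_layers // 2
    out = np.zeros(n_pixels)
    for x in range(n_pixels):
        total = 0.0
        for f in range(frames):
            t = 1.0
            for k in range(n_layers):
                px = x + slope * (k - mid)
                # Rays that exit past the panel edges are treated as blocked.
                t *= layers[f, k, px] if 0 <= px < n_pixels else 0.0
            total += t
        out[x] = total / frames
    return out

# Three panels, four time-multiplexed patterns per video frame, 8 pixels wide.
rng = np.random.default_rng(0)
layers = rng.uniform(size=(4, 3, 8))
left, center = perceived_view(layers, -1), perceived_view(layers, 0)
```

Because each viewing angle threads through a different combination of pixels, `left` and `center` generally differ, which is what lets one set of panels present a different image to each eye.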

Gocen optical music recognition can read a printed score, play notes in real-time (hands-on video)

It's not often that we stumble upon classical music on the floor at SIGGRAPH, so the tune of Bach's Cantata 147 was reason enough to stop by Gocen's small table in the annual graphics trade show's Emerging Technologies hall. At first glance, the four Japanese men at the booth could have been doing anything on their MacBook Pros -- there wasn't a musical instrument in sight -- but upon closer inspection, they each appeared to be holding identical loupe-like devices, connected to each laptop via USB. Below each self-lit handheld reader was a small stack of sheet music, and it soon became clear that each of the men was very slowly moving his device from side to side, playing a seemingly perfect rendition of "Jesu, Joy of Man's Desiring." The project, called Gocen, is described by its creators as a "handwritten notation interface for musical performance and learning music." Developed at Tokyo Metropolitan University, the device can read a printed (or even handwritten) music score in real time using optical music recognition (OMR); the output is sent through each computer to an audio mixer, and then to a set of speakers. The interface is entirely text and music-based -- musicians, if you can call them that, scan an instrument name on the page before sliding over to the notes, which can be played back at different pitches by moving the reader vertically along the line. It certainly won't replace an orchestra anytime soon -- it takes an incredible amount of care to play in a group without falling out of sync -- but Gocen is designed more as a learning tool than a practical device for coordinated performances. Hearing exactly how each note is meant to sound makes it easier for students to master musical basics during the beginning stages of their education, providing instant feedback for those who depend on self-teaching. You can take a closer look in our hands-on video after the break, in a real-time performance demo with the Japan-based team.
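Gocen's actual note-mapping code isn't described here, but the basic idea of turning a note's vertical position on the staff into a pitch can be sketched in a few lines. This assumes a treble clef, no key signature, standard MIDI numbering (middle C = 60) and equal temperament with A4 = 440 Hz; `staff_step_to_midi` and `midi_to_hz` are hypothetical helpers, not part of Gocen.

```python
# Semitone offsets of the C major scale degrees above C.
C_MAJOR = [0, 2, 4, 5, 7, 9, 11]

def staff_step_to_midi(step):
    """MIDI note number for a treble-clef staff position (no sharps or flats).

    step 0 is the bottom line (E4); each step up moves one line or space,
    i.e. one letter name. Negative steps go below the staff.
    """
    degree = 2 + step  # E is scale degree 2 of C major
    octave, idx = divmod(degree, 7)
    return 60 + 12 * octave + C_MAJOR[idx]  # 60 = middle C (C4)

def midi_to_hz(note):
    """Equal-tempered frequency, with A4 (MIDI 69) tuned to 440 Hz."""
    return 440.0 * 2 ** ((note - 69) / 12)

# Bottom line, middle line and top line of the treble staff.
for step in (0, 4, 8):
    note = staff_step_to_midi(step)
    print(step, note, round(midi_to_hz(note), 2))
```

Python's `divmod` floors toward negative infinity, so ledger-line notes below the staff wrap correctly into the octave beneath.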