AI stuntpeople could lead to more realistic video games

Why use motion capture when characters can learn for themselves?

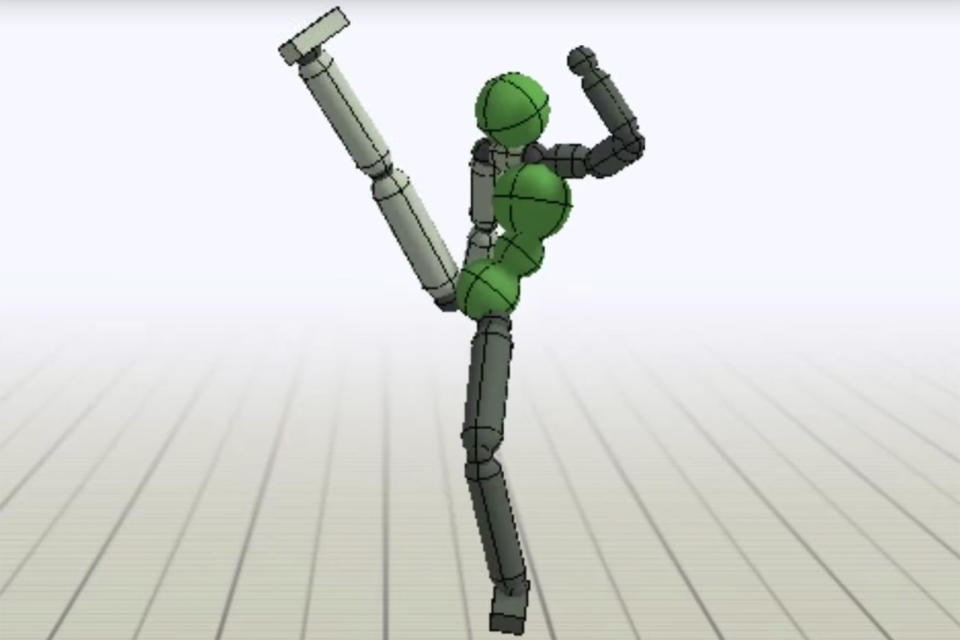

Video game developers often turn to motion capture when they want realistic character animations. Mocap isn't very flexible, though: it's hard to adapt a canned animation to different body shapes, unusual terrain or an interruption from another character. Researchers might have a better solution, which is to teach the characters to fend for themselves. They've developed a deep learning system, DeepMimic, that trains characters to imitate reference mocap clips or even hand-animated keyframes, effectively turning them into virtual stunt actors. The AI promises realistic motion with a kind of flexibility that's difficult to achieve even with methods that blend scripted animations together.

At its heart, DeepMimic revolves around reinforcement learning: the closer the character's motion gets to the reference material, the more positive reinforcement it receives. It's a bit more complex than that, mind you. By randomizing the character's initial body state during training, so that each attempt starts from a different point in the reference motion, the system teaches the character to perform the intended action rather than whatever motion reaches the goal quickly (say, a backflip instead of hopping backwards).
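For the curious, here's a minimal Python sketch of those two ideas. It assumes a pose is just a flat list of joint angles and uses an exponential imitation reward that equals 1.0 on a perfect match and shrinks as the error grows; the function names, the `scale` value and the toy clip are hypothetical illustrations, not DeepMimic's actual code or parameters.

```python
import math
import random

# Assumption: a "pose" is a flat list of joint angles in radians.
# A real system would compare full 3D joint rotations, velocities, etc.

def pose_reward(sim_pose, ref_pose, scale=2.0):
    """Imitation reward in (0, 1]: exactly 1.0 when the simulated pose matches
    the reference frame, decaying exponentially with squared joint-angle error.
    `scale` is an illustrative value, not a published parameter."""
    err = sum((s - r) ** 2 for s, r in zip(sim_pose, ref_pose))
    return math.exp(-scale * err)

def reference_state_init(ref_clip, rng):
    """Start a training episode at a random frame of the reference clip
    instead of always at frame 0. Returns the frame index and a copy of
    that frame's pose (so the clip itself is never mutated)."""
    start = rng.randrange(len(ref_clip))
    return start, list(ref_clip[start])

# Usage with a hypothetical three-frame, two-joint clip.
clip = [[0.0, 0.1], [0.2, 0.3], [0.4, 0.5]]
start, pose = reference_state_init(clip, random.Random(0))
print(start, pose_reward(pose, clip[start]))
```

Starting episodes mid-motion means the character sees the rewarding middle of a backflip early in training, instead of having to stumble onto it from the first frame.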

And importantly, the technique generalizes well. You can teach a character to kick or punch a specific target even if the original motion didn't account for one, for example. DeepMimic is physics-based by its very nature, too, so it can adapt to different body shapes and to interruptions like projectiles. As long as a movement is physically possible for the character in the first place, it can learn to perform it. The reference animations are merely the starting point.
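One common way to add a goal like "hit that target" is to blend the imitation reward with a separate task reward. The Python sketch below assumes that approach: `target_reward` scores how close a hand or foot gets to a target, and `combined_reward` mixes it with an imitation score. The names, the 0.7/0.3 weights and the `scale` value are illustrative assumptions rather than DeepMimic's published implementation.

```python
import math

def target_reward(effector_pos, target_pos, scale=4.0):
    """Task reward in (0, 1]: 1.0 when the end effector (hand or foot) is exactly
    on the target, decaying exponentially with squared distance.
    `scale` is an illustrative value."""
    dist_sq = sum((e - t) ** 2 for e, t in zip(effector_pos, target_pos))
    return math.exp(-scale * dist_sq)

def combined_reward(imitation_r, task_r, w_imitation=0.7, w_task=0.3):
    """Weighted blend: the imitation term keeps the motion looking like the
    reference clip, while the task term rewards reaching the goal.
    The weights are hypothetical and sum to 1, so the result stays in [0, 1]."""
    return w_imitation * imitation_r + w_task * task_r

# Usage: same imitation quality, but a kick that lands closer to the target scores higher.
target = (1.0, 1.0, 1.0)
miss = combined_reward(0.9, target_reward((0.0, 0.0, 0.0), target))
near_hit = combined_reward(0.9, target_reward((0.9, 1.0, 1.0), target))
print(miss < near_hit)
```

Tuning the weights trades off style against accuracy: lean too hard on the task term and the character may reach the target with a motion that no longer resembles the reference.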

The uses for video games are fairly self-evident. You could have characters that move and fight in convincing ways without having to either capture animations for every possible scenario or design the game world around the limited animations you can provide. There are possibilities beyond pure entertainment, to boot. You could use the same basic methodology to train robots to climb and jump over obstacles before they're placed in real-world situations, where the consequences of failure could be very costly.