Object recognition


AI can identify objects based on verbal descriptions

Modern speech recognition is clunky and often requires massive amounts of annotations and transcriptions to help understand what you're referencing. There might, however, be a more natural way: teaching the algorithms to recognize things much like you would a child. Scientists have devised a machine learning system that can identify objects in a scene based on their description. Point out a blue shirt in an image, for example, and it can highlight the clothing without any transcriptions involved.

MIT machine vision system figures out what it's looking at by itself

Robotic vision is already pretty good, assuming that it's being used within the narrow bounds of the application for which it's been designed. That's fine for machines that perform a specific movement over and over, such as picking an object off of an assembly line and placing it into a bin. However, for robots to become useful enough to not just pack boxes in warehouses but actually help out around our own homes, they'll have to stop being so myopic. And that's where MIT's "DON" system comes in.

Snapchat's camera may help you shop at Amazon

Snap may rely on more than gaming to help turn around its ailing fortunes -- it might soon offer camera-assisted shopping. App researcher Ishan Agarwal has found hidden Snapchat code for a Camera Search feature (initially called Visual Search) that would use the app to identify objects and barcodes, pointing you to Amazon if it found a match. While it's not exactly certain how this would work, TechCrunch theorized that it might tie into an existing context cards system that pulls up relevant info.

AI-powered instant camera turns photos into crude cartoons

Most cameras are designed to capture scenes as faithfully as possible, but don't tell that to Dan Macnish. He recently built an instant camera, Draw This, that uses a neural network to translate photos into the sort of crude cartoons you would put on your school notebooks. Macnish mapped the millions of doodles from Google's Quick, Draw! game data set to the categories the image processor can recognize. After that, it was largely a matter of assembling a Raspberry Pi-powered camera that used this know-how to produce its 'hand-drawn' pictures with a thermal printer.
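Macnish's exact pipeline isn't spelled out here, but the mapping step can be sketched roughly as follows. The sketch assumes the image processor returns (label, box) pairs. It also assumes the doodles use the Quick, Draw! "simplified" stroke format, in which each stroke is a pair of x and y lists on a 0 to 255 canvas. The LABEL_TO_DOODLE table, the tiny DOODLES library and the function names are hypothetical stand-ins, not Draw This code.

```python
import random

# Hypothetical mapping from detector labels to Quick, Draw! category names.
LABEL_TO_DOODLE = {
    "person": "face",
    "dog": "dog",
    "cup": "coffee cup",
}

# Hypothetical stand-in for the data set: category -> list of drawings,
# drawing -> list of strokes, stroke -> [xs, ys] on a 0-255 canvas.
DOODLES = {
    "dog": [[[[0, 255], [0, 255]]]],
    "face": [[[[0, 255, 255, 0, 0], [0, 0, 255, 255, 0]]]],
}


def place_doodle(strokes, box):
    """Scale a 0-255 doodle into a detection box given as (left, top, width, height)."""
    left, top, width, height = box
    placed = []
    for xs, ys in strokes:
        placed.append([(left + x * width / 255, top + y * height / 255)
                       for x, y in zip(xs, ys)])
    return placed


def cartoonize(detections, rng=random):
    """Turn (label, box) detections into polylines; labels with no doodle are skipped."""
    canvas = []
    for label, box in detections:
        word = LABEL_TO_DOODLE.get(label)
        if word is None or word not in DOODLES:
            continue
        strokes = rng.choice(DOODLES[word])  # pick one drawing of that category
        canvas.extend(place_doodle(strokes, box))
    return canvas
```

Each returned stroke is a list of (x, y) points in photo coordinates. Sending those lines to a thermal printer is left out of the sketch.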

AI-enabled Guess store helps you create an ensemble

You can try Amazon's Echo Look if you want AI to offer fashion advice at home. But what if you're at the store, and would rather not hem and haw while you decide if that top goes with those jeans? Guess and Alibaba think they can help. They've worked together on an AI system, FashionAI, that uses computer vision to help you create an entire outfit while you're shopping. A smart mirror can recognize the color, style and traits of what you're holding (such as the neckline) and suggest other items that would be a good complement, including clothes you've already bought online. Can't style your way out of a wet paper bag? You might only have to pick one piece that strikes your fancy to create a full ensemble.

Bing can use your phone camera to search the web

Microsoft isn't about to let Google's visual search features go uncontested. The tech giant has introduced a Visual Search feature to Bing that uses your phone's camera (either a fresh shot or from your camera roll) to identify objects and serve up links related to what you see. Snap a picture of a landmark and you may get travel info, for instance. Logically, Microsoft is also playing up the shopping angle: search for an outfit or home furniture and you'll get prices and shopping locations.

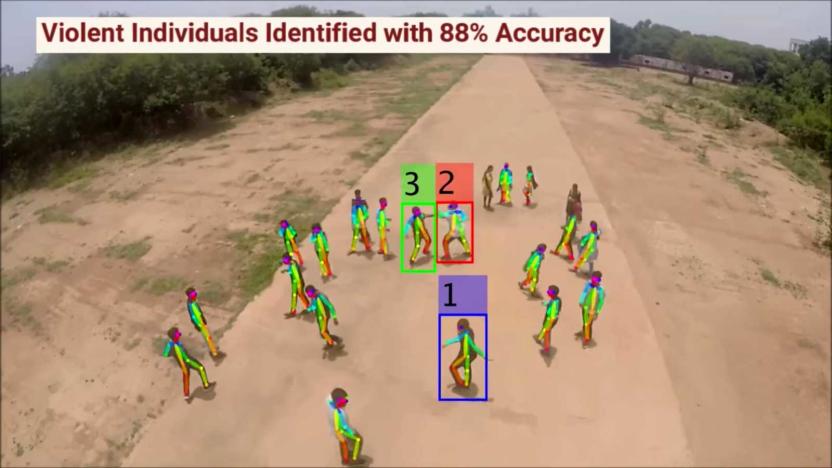

Experimental drone uses AI to spot violence in crowds

Drone-based surveillance still makes many people uncomfortable, but that isn't stopping research into more effective airborne watchdogs. Scientists have developed an experimental drone system that uses AI to detect violent actions in crowds. The team trained their machine learning algorithm to recognize a handful of typical violent motions (punching, kicking, shooting and stabbing) and flag them when they appear in a drone's camera view. The technology could theoretically detect a brawl that on-the-ground officers might miss, or pinpoint the source of a gunshot.

ARM's latest processors are designed for mobile AI

ARM isn't content to offer processor designs that are kinda-sorta ready for AI. The company has unveiled Project Trillium, a combination of hardware and software ingredients designed explicitly to speed up AI-related technologies like machine learning and neural networks. The highlights, as usual, are the chips: ARM ML promises to be far more efficient for machine learning than a regular CPU or graphics chip, with two to four times the real-world throughput. ARM OD, meanwhile, is all about object detection. It can spot "virtually unlimited" subjects in real time at 1080p and 60 frames per second, and focuses on people in particular -- on top of recognizing faces, it can detect which way people are facing, as well as their poses and gestures.

Mitsubishi's mirrorless car cameras highlight distant traffic

Mirrorless cars, terrifying as they may sound, are coming. In 2015, the United Nations gave the go-ahead for carmakers to replace mirrors with cameras and display systems, so the race is on to design tech that's fit for the job. Now, Mitsubishi Electric says it's developed the industry's highest-performing vehicle camera, able to detect objects up to 100 meters away.

Autonomous wheelchairs arrive at Japanese airport

Passengers with limited mobility will soon be able to navigate airports more easily thanks to Panasonic's robotic electric wheelchair. Developed as part of a wider program to make Japan's Haneda Airport more accessible to all, the wheelchair utilizes autonomous mobility technology: after users input their destination via smartphone, the wheelchair will identify its position and select the best route to get there. Multiple chairs can move in tandem, which means families or groups can travel together, and after use, the chairs will 'regroup' automatically, reducing the workload for airport staff. The chairs also use sensors to stop automatically if they detect a potential collision.
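Panasonic hasn't said how the chairs pick "the best route," so the snippet below is only an illustration. It treats the terminal as a hypothetical graph of waypoints, with distances in meters, and runs Dijkstra's shortest-path algorithm, which is a common choice for this kind of routing. The AIRPORT map and the best_route name are invented for the example.

```python
import heapq

# Hypothetical terminal map: waypoint -> list of (neighbour, distance in meters).
AIRPORT = {
    "entrance": [("security", 120), ("lounge", 300)],
    "security": [("gate_A", 200), ("lounge", 90)],
    "lounge": [("gate_A", 80)],
    "gate_A": [],
}


def best_route(graph, start, goal):
    """Dijkstra's shortest path: returns (total distance, waypoints) or None if unreachable."""
    queue = [(0, start, [start])]  # (distance so far, current node, path taken)
    visited = set()
    while queue:
        dist, node, path = heapq.heappop(queue)  # always expand the closest node next
        if node == goal:
            return dist, path
        if node in visited:
            continue
        visited.add(node)
        for neighbour, step in graph.get(node, []):
            if neighbour not in visited:
                heapq.heappush(queue, (dist + step, neighbour, path + [neighbour]))
    return None
```

For example, best_route(AIRPORT, "entrance", "gate_A") prefers the 290-meter path through security and the lounge over the 320-meter direct walk from security.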

Google AI experiments help you appreciate neural networks

Sure, you may know that neural networks are spicing up your photos and translating languages, but what if you want a better appreciation of how they function? Google can help. It just launched an AI Experiments site that puts machine learning to work in a direct (and often entertaining) way. The highlight by far is Giorgio Cam -- put an object in front of your phone or PC camera and the AI will rattle off a quick rhyme based on what it thinks it's seeing. It's surprisingly accurate, fast and occasionally chuckle-worthy.

Google machine learning can protect endangered sea cows

It's one thing to track endangered animals on land, but it's another to follow them when they're in the water. How do you spot individual critters when all you have are large-scale aerial photos? Google might just help. Queensland University researchers have used Google's TensorFlow machine learning to create a detector that automatically spots sea cows in ocean images. Instead of making people spend ages combing through tens of thousands of photos, the team just has to feed photos through an image recognition system that knows to look for the cows' telltale body shapes.

Google's mini radar can identify virtually any object

Google's Project Soli radar technology is useful for much more than controlling your smartwatch with gestures. University of St. Andrews scientists have used the Soli developer kit to create RadarCat, a device that identifies many kinds of objects just by getting close enough. Thanks to machine learning, it can not only identify different materials, such as air or steel, but specific items. It'll know if it's touching an apple or an orange, an empty glass versus one full of water, or individual body parts.

Google buys startup that helps your phone identify objects

Google has purchased Moodstocks, a French startup that specializes in speedy object recognition from a smartphone, showing (again) the search giant's intense interest in AI. Unlike other products (including Google's own Goggles object recognition app), Moodstocks does most of the crunching on your smartphone, rather than on a server. While Google seemingly has some pretty good image-spotting tech already, like the canny visual categorization in Photos, it says it's just getting started.

Computers learn to predict high-fives and hugs

Deep learning systems can already detect objects in a given scene, including people, but they can't always make sense of what people are doing in that scene. Are they about to get friendly? MIT CSAIL's researchers might help. They've developed a machine learning algorithm that can predict when two people will high-five, hug, kiss or shake hands. The trick is to have multiple neural networks predict different visual representations of people in a scene and merge those guesses into a broader consensus. If the majority foresees a high-five based on arm motions, for example, that's the final call.
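The consensus step can be illustrated with a simple vote over each network's predicted action. This is a sketch under a few assumptions. Every network is treated as producing a single label, and the label with the most votes wins (a plurality rather than a strict majority). When the top two labels are tied, the function declines to make a call. The consensus name is hypothetical, and the MIT system may combine its predictions differently.

```python
from collections import Counter


def consensus(predictions):
    """Return the action most networks agree on, or None for no votes or a tie for first place."""
    if not predictions:
        return None
    ranked = Counter(predictions).most_common(2)  # top two (label, votes) pairs
    if len(ranked) > 1 and ranked[0][1] == ranked[1][1]:
        return None  # no clear winner, so make no prediction
    return ranked[0][0]
```

With three networks voting "high-five," "hug" and "high-five," the final call is "high-five."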

Apple iOS 10 uses AI to help you find photos and type faster

Apple is making artificial intelligence a big, big cornerstone of iOS 10. To start, the software uses on-device computer vision to detect both faces and objects in photos. It'll recognize a familiar friend, for instance, and can tell that there's a mountain in the background. While this is handy for tagging your shots, the feature really comes into its own when you let the AI do the hard work. There's a new Memories section in the Photos app that automatically organizes pictures based on events, people and places, complete with related memories (such as similar trips) and smart presentations. Think of it as Google Photos without having to go online.

AI-powered cameras make thermal imaging more accessible

As cool as thermal cameras may be, they're not usually very bright -- they may show you something hiding in the dark, but they won't do much with it. FLIR wants to change that with its new Boson thermal camera module. The hardware combines a long-wave infrared camera with a Movidius vision processing unit, giving the camera a dash of programmable artificial intelligence. Device makers can use those smarts not only for visual processing (like reducing noise) but also for some computer vision tasks -- think object detection, depth calculations and other tasks that normally rely on external computing power.

Microsoft's imaging tech is (sometimes) better than you at spotting objects

Many computer vision projects struggle to mimic what people can achieve, but Microsoft Research thinks that its technology might have already trumped humanity... to a degree, that is. The company has published results showing that its neural network technology made fewer mistakes recognizing objects than humans in an ImageNet challenge, slipping up on 4.94 percent of pictures versus 5.1 percent for humans. One of the keys was a "parametric rectified linear unit" function (try saying that three times fast) that improves accuracy without any real hit to processing performance.
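The parametric rectified linear unit itself is easy to write down. It passes positive inputs through unchanged and multiplies the rest by a slope a that the network learns during training: f(x) = x for x > 0, and f(x) = a * x otherwise. With a = 0 it reduces to an ordinary ReLU. The minimal pure-Python sketch below shows the forward pass, the gradient with respect to a, and one plain gradient-descent update of a. The function names and the learning rate are illustrative choices, not Microsoft's code.

```python
def prelu(x, a):
    """Parametric ReLU: identity for positive inputs, learned slope `a` for the rest."""
    return x if x > 0 else a * x


def prelu_grad_a(x):
    """Derivative of prelu(x, a) with respect to the slope `a`."""
    return 0.0 if x > 0 else x


def update_slope(xs, upstream, a, lr=0.01):
    """One gradient-descent step on a slope shared by all inputs in `xs`.

    `upstream` holds dLoss/dOutput for each input, so dLoss/da is their
    weighted sum with the local derivative from prelu_grad_a.
    """
    grad = sum(g * prelu_grad_a(x) for x, g in zip(xs, upstream))
    return a - lr * grad
```

Because the slope only adds one learned number per unit (or per channel), it barely changes the amount of computation, which fits the claim of better accuracy without a real performance hit.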

Google's latest object recognition tech can spot everything in your living room

Automatic object recognition in images is currently tricky. Even if a computer has the help of smart algorithms and human assistants, it may not catch everything in a given scene. Google might change that soon, though; it just detailed a new detection system that can easily spot lots of objects in a scene, even if they're partly obscured. The key is a neural network that can rapidly refine the criteria it's looking for without requiring a lot of extra computing power. The result is a far deeper scanning system that can both identify more objects and make better guesses -- it can spot tons of items in a living room, including (according to Google's odd example) a flying cat. The technology is still young, but the internet giant sees its recognition breakthrough helping everything from image searches through to self-driving cars. Don't be surprised if it gets much easier to look for things online using only the vaguest of terms.

BYU image algorithm can recognize objects without any human help

Even the smartest object recognition systems tend to require at least some human input to be effective, even if it's just to get the ball rolling. Not a new system from Brigham Young University, however. A team led by Dah-Jye Lee has built a genetic algorithm that decides which features are important all on its own. The code doesn't need to reset whenever it looks for a new object, and it's accurate to the point where it can reliably pick out subtle differences -- different varieties of fish, for instance. There's no word on just when we might see this algorithm reach the real world, but Lee believes that it could spot invasive species and manufacturing defects without requiring constant human oversight. Let's just hope it doesn't decide that we're the invasive species.
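Here is a generic toy version that shows how a genetic algorithm can settle on useful features without a person picking them. It is not the BYU team's algorithm. It evolves on/off masks over a handful of hypothetical features. Each mask is scored with an invented fitness function that rewards "useful" features and penalizes irrelevant ones. The population then improves through selection, one-point crossover and random mutation. Every name and parameter below is an assumption for illustration.

```python
import random


def fitness(mask, useful):
    """Hypothetical score: +1 per useful feature kept, -0.5 per irrelevant one."""
    hits = sum(1 for m, u in zip(mask, useful) if m and u)
    noise = sum(1 for m, u in zip(mask, useful) if m and not u)
    return hits - 0.5 * noise


def evolve(useful, pop_size=20, generations=30, mutation_rate=0.05, seed=0):
    """Evolve feature masks; returns (best mask, best score recorded per generation)."""
    rng = random.Random(seed)
    n = len(useful)
    # Start from random masks: each feature is switched on with probability 0.5.
    population = [[rng.random() < 0.5 for _ in range(n)] for _ in range(pop_size)]
    history = []
    for _ in range(generations):
        scored = sorted(population, key=lambda m: fitness(m, useful), reverse=True)
        history.append(fitness(scored[0], useful))
        parents = scored[: pop_size // 2]   # truncation selection: keep the top half
        children = [scored[0][:]]           # elitism: carry the best mask over unchanged
        while len(children) < pop_size:
            a, b = rng.sample(parents, 2)
            cut = rng.randrange(1, n)       # one-point crossover
            child = a[:cut] + b[cut:]
            # Mutation: flip each bit with a small probability.
            child = [(not bit) if rng.random() < mutation_rate else bit for bit in child]
            children.append(child)
        population = children
    best = max(population, key=lambda m: fitness(m, useful))
    history.append(fitness(best, useful))
    return best, history
```

The best mask is copied unchanged into each new generation (elitism), so the best score in history never goes down.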