artificial intelligence

Latest

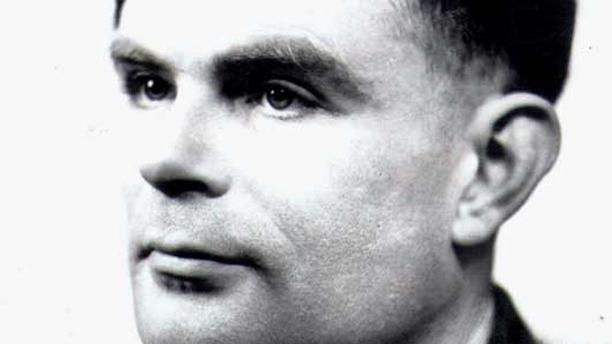

UK pardons computing pioneer Alan Turing

Campaigners have spent years demanding that the UK exonerate computing legend Alan Turing, and they're finally getting their wish. Queen Elizabeth II has just used her royal prerogative to pardon Turing, 61 years after an indecency conviction that many now see as unjust. The criminal charge shouldn't overshadow Turing's vital cryptanalysis work during World War II, Justice Secretary Chris Grayling said when explaining the move. The pardon is a purely symbolic gesture, but an important one all the same -- it acknowledges that the conviction cut short the career of a man who defended his country, broke ground in artificial intelligence and formalized computing concepts like algorithms.

Facebook hires NYU professor to lead its artificial intelligence efforts

Facebook's fledgling artificial intelligence group now has a leader -- the social network has hired New York University professor Yann LeCun to run the research division from New York City. He'll remain at the university part time, but most of his energy will now be spent researching data science, deep learning and other technologies that could refine Facebook's social stream. While LeCun's hire won't pay off for some time, it already suggests that Zuckerberg and crew are serious about competing with Google's Ray Kurzweil in the AI space.

Recommended Reading: AI pioneer Douglas Hofstadter profiled, the NSA files decoded and more

Recommended Reading highlights the best long-form writing on technology in print and on the web. Some weeks, you'll also find short reviews of books dealing with the subject of technology that we think are worth your time. We hope you enjoy the read.

The Man Who Would Teach Machines to Think by James Somers, The Atlantic

Artificial intelligence has been in the public consciousness for decades now, due in no small part to fictional incarnations like 2001's HAL 9000, but it's been getting more attention than ever due to IBM's Watson, Apple's Siri and other recent developments. One constant figure throughout much of that time is AI pioneer Douglas Hofstadter, who's profiled at length in this piece by James Somers for The Atlantic. In it, Somers talks to Hofstadter and other key figures from the likes of IBM and Google, while examining his approach to the field, which is as much about studying the human mind as replicating it. [Image credit: null0/Flickr]

Qualcomm's brain-like processor is almost as smart as your dog

Biological brains are sometimes overrated, but they're still orders of magnitude quicker and more power-efficient than traditional computer chips. That's why Qualcomm has been quietly funneling some of its prodigious income into a project called "Zeroth," which it hopes will one day give it a radical advantage over other mobile chip companies. According to Qualcomm's Matt Grob, Zeroth is a "biologically-inspired" processor that is modeled on real-life neurons and is capable of learning from feedback in much the same way as a human or animal brain does. And unlike some other so-called artificial intelligences we've seen, this one appears to work. The video after the break shows a Zeroth-controlled robot exploring an environment and then naturally adjusting its behavior in response not to lines of code, but to someone telling it whether it's being "good" or "bad." "Everything here is biologically realistic: spiking neurons implemented in hardware on that actual machine." Zeroth is advanced enough that Qualcomm says it's ready to work with other companies that want to develop applications to run on it. One particular focus is on building neural networks that will fit into mobile devices and enable them to learn from users who, unlike coders, aren't able or willing to instruct devices in the usual tedious manner. Grob even claims that, when a general-purpose Zeroth neural network is trained to do something specific, such as recognizing and tracking an object, it can already accomplish that task better than an algorithm designed solely for that function. Check out the source link to see Grob's full talk and more demo videos -- especially if you want to confirm your long-held suspicion that dogs are scarily good at math.

Facebook developing brain-like AI to find deeper meaning in feeds and photos

Facebook's current News Feed ranking isn't all that clever -- it's good at surfacing popular updates, but it can miss lower-profile updates that are personally relevant. The company may soon raise the News Feed's IQ, however, as it recently launched an artificial intelligence research group. The new team hopes to use deep learning AI, which simulates a neural network, to determine which posts are genuinely important. The technology could also sort a user's photos, and it might even select the best shots. While the AI work has only just begun, the company tells MIT Technology Review that it should release some findings to the public; those breakthroughs in social networking could help society as a whole.

Joaquin Phoenix finds real love with artificial intelligence in Spike Jonze's 'Her' (video)

When sci-fi narratives explore artificial intelligence approaching a human level of sentience, they tend to focus on the negative (Skynet, anyone?). Not so with Spike Jonze's new movie Her, a melancholy examination of what it means to be human in an increasingly inhuman world. The film stars Joaquin Phoenix as a social recluse who finds a friend in his smartphone's Siri-inspired assistant, Samantha (voiced by Scarlett Johansson). The relationship blossoms in a way that manages to be both heartfelt and deeply unsettling, and Jonze's take on a sort of technological animism feels pretty culturally resonant. Her is set for a November release, and you can watch the trailer after the break.

Study reveals AI systems are as smart as a 4-year-old, lack common sense

It'll take a long time before we see a J.A.R.V.I.S. in real life -- University of Illinois at Chicago researchers put MIT's ConceptNet 4 AI through the verbal portions of a children's IQ test, and rated its apparent relative intelligence as that of a 4-year-old. Despite an excellent vocabulary and ability to recognize similarities, the lack of basic life experience leaves one of the best AI systems unable to answer even easy "why" questions. Those sound simple, but not even the famed Watson supercomputer is capable of human-like comprehension, and research lead Robert Sloan believes we're far from developing one that is. We hope scientists get cracking and conjure up an AI worthy of our sci-fi dreams... so long as it doesn't pull a Skynet on humanity. [Image credit: Kenny Louie]

Apple announces Anki Drive, an AI robotics app controlled through iOS

Apple is just starting its WWDC keynote this morning, but it's already announcing something quite interesting: a new company called Anki and its inaugural iOS app called Anki Drive, which centers around artificial intelligence and robotics. The game, whose name is Japanese for "memorize," features smart cars that can drive themselves (although you can certainly take over at any time) and communicate with your iPhone using Bluetooth LE. These intelligent vehicles, when placed upon a printed race track, can sense the track up to 500 times a second. The iOS-exclusive game is available as a beta in the App Store today, which you'll need to sign up for -- the full release won't be coming until this fall -- and it's billed as a "video game in the real world." According to the developers, "the real fun is when you take control of these cars yourselves," which we can definitely attest to -- the WWDC demo cars had weapons, after all. Follow all of our WWDC 2013 coverage at our event hub.

Google and NASA team up for D-Wave-powered Quantum Artificial Intelligence Lab

Google. NASA. Quantum computers. Seriously, everything about the new Quantum Artificial Intelligence Lab at the Ames Research Center is exciting. The joint effort between Mountain View and America's space agency will put a 512-qubit machine from D-Wave at the disposal of researchers from around the globe, with the USRA (Universities Space Research Association) inviting teams of scientists and engineers to share time on the unique supercomputer. The goal is to study how quantum computing might be leveraged to advance machine learning, a branch of AI that has proven crucial to Google's success. The internet giant has already done some work with quantum computing; now the goal is to see if its experimentation can translate into real-world results. The idea, for Google at least, is to combine the extreme (but highly-specialized) power of the quantum bit with its oceans of traditional data centers to build more accurate models for everything from speech recognition to web search. And maybe, just maybe, with the help of quantum computers your phone will finally realize you didn't mean to say "duck."

MIT algorithms teach robot arms to think outside of the box (video)

Although robots are getting better at adapting to the real world, they still tend to tackle challenges with a fixed set of alternatives that can quickly become impractical as objects (and more advanced robots) complicate the situation. Two MIT students, Jennifer Barry and Annie Holladay, have developed fresh algorithms that could help robot arms improvise. Barry's method tells the robot about an object's nature, focusing its attention on the most effective interactions -- sliding a plate until it's more easily picked up, for example. Holladay, meanwhile, turns collision detection on its head to funnel an object into place, such as balancing a delicate object with a free arm before setting that object down. Although the existing code for either approach currently requires plugging in existing data, their creators ultimately want more flexible code that determines qualities on the spot and reacts accordingly. Long-term development could nudge us closer to robots with truly general-purpose code -- a welcome relief from the one-track minds the machines often have today.

Kimera Systems wants your smartphone to think for you

When Google took the wraps off Now, we all got pretty excited about the potential of the preemptive virtual assistant. Kimera Systems wants to build a similar system, but one that will make Mountain View's tool look about as advanced as a Commodore 64. The founder of the company, Mounir Shita, envisions a network of connected devices that use so-called smart software agents to track your friends, suggest food at a restaurant and even find someone to paint your house. That explanation is a bit simplistic, but it gets to the heart of what the Artificial General Intelligence network is theoretically capable of. In this world (as you'll see in the video after the break) you don't check Yelp or text your friend to ask if they're running late. Instead, your phone would recognize that you'd walked into a particular restaurant, analyze the menu and suggest a meal based on your tastes. Meanwhile, your friend has just reached the bus stop, but it's running a little behind. Her phone knows she's supposed to meet you so it sends an alert to let you know of the delay. With some spare time on your hands, your phone would suggest making a new social connection or walking to a nearby store to pick up that book sitting in your wishlist. It's creepy, ambitious and perhaps a bit unsettling that we'd be letting our phones run our lives. Kimera is trying to raise money to build a plug-in for Android and an SDK to start testing its vision. You can check out the promotional video after the break and, if you're so inclined, pledge some cash to the cause at the source.

Georgia Tech receives $900,000 grant from Office of Naval Research to develop 'MacGyver' robot

Robots come in many flavors. There's the subservient kind, the virtual representative, the odd one with an artistic bent, and even robo-cattle. But, typically, they all hit the same roadblock: they can only do what they are programmed to do. Of course, there are those that possess some AI smarts, too, but Georgia Tech wants to take this to the next level, and build a 'bot that can interact with its environment on the fly. The project hopes to give machines deployed in disaster situations the ability to find objects in their environment for use as tools, such as placing a chair to reach something high, or building bridges from debris. The idea builds on previous work where robots learned to move objects out of their way, and involves developing an algorithm that allows them to identify items and assess their usefulness as tools. This would be backed up by some programming, to give the droids a basic understanding of rigid body mechanics, and how to construct motion plans. The Office of Naval Research's interest comes from potential future applications, working side-by-side with military personnel out on missions, which, along with iRobot 110, forms the early foundations for the cyber army of our childhood imaginations.

IBM debuts new mainframe computer as it eyes a more mobile Watson

Those looking for a juxtaposition of IBM's past and future needn't look much further than two bits of news out of the company this week. The first comes with IBM's announcement of its new zEnterprise EC12 mainframe server -- a class of computer that may be a thing of the past in some places, but which still serves a fairly broad range of companies. In addition to an appearance that lives up to the "mainframe" moniker, this one promises 25 percent more performance per core than its predecessor and 50 percent more capacity. The second bit of news involves Watson, the company's AI effort that rose to fame on Jeopardy! and has since gone on to find a number of new roles. As Bloomberg reports, one of its next steps may be to take on Siri in the smartphone space. While there's no indication of a broader consumer product, IBM sees a range of possible applications for a mobile Watson in business and enterprise -- even, for instance, giving farmers the ability to ask when they should plant their crops. Before that happens, though, IBM says it needs to give Watson more "senses," such as image recognition, in order to respond to real-world input -- not to mention learn all it can about any given subject.

Scientists investigating AI-based traffic control, so we can only blame the jams on ourselves

Ever found yourself stuck at the lights convinced that whatever is controlling these things is just trying to test your patience, and that you could do a better job? Well, turns out you might -- at least partly -- be right. Researchers at the University of Southampton have just revealed that they are investigating the use of artificial intelligence-based traffic lights, with the hope that it could be used in next-generation road signals. The research uses video games and simulations to assess different traffic control systems, and apparently we humans do a pretty good job. The team at Southampton hope that they will be able to emulate this human-like approach with new "machine learning" software. With cars already being tested out with WiFi, mobile connectivity and GPS on board for accident prevention, a system such as this could certainly have a lot of data to tap into. There's no indication as to when we might see a real-world trial, but at least we're reminded, for once, that as a race we're not quite able to be replaced by robotic overlords entirely.

Robot stock traders lose $440,000,000 in 45 minutes, need someone to spell it out

Humans never learn and apparently neither do robots. Autonomous trading AIs went on a spending spree at Knight Capital Group in New Jersey this week, buying up shares in everything from RadioShack to Ford and American Airlines (ouch) in a 45-minute frenzy of disobedience. The company tried to offload the unwanted stock, but discovered it was already nearly half a billion dollars in the red -- enough to wipe out its entire profit from 2011 and "severely impact" its ability to conduct business. If only it had protected itself with one of these.

IBM celebrates the 15th anniversary of Deep Blue beating Garry Kasparov (video)

It's been 15 years since IBM's Deep Blue recorded its famous May 11th, 1997 victory over world champion chess player Garry Kasparov -- a landmark in artificial intelligence. Designed by Big Blue as a way of understanding high-power parallel processing, the "brute force" system could examine 200 million chess positions every second, beating the grandmaster 3.5-2.5 after losing 4-2 the previous year. It went on to help develop drug treatments, analyze risk and aid data miners before being replaced with Blue Gene and, more recently, Watson -- which recorded a famous series of victories on Jeopardy! in 2011. If you'd like to know more, we've got a video with one of the computer's fathers, Dr. Murray Campbell, and a comparison of how the three supercomputers stack up after the break. As for Garry Kasparov? The loss didn't ruin his career: he went on to win every single chess trophy conceived, retired, wrote some books and went into politics. As you do.

Angelina: the experimental AI that's coming for our game dev jobs

Ok so, maybe Angelina couldn't have created Skyrim all on her own, but the experimental AI from Michael Cook (a computer scientist at Imperial College London) is still quite impressive. The artificial dev is able to program enemy behavior, lay out levels, and distribute power-ups with random attributes. While many elements of a game like Space Station Invaders (which you can play at the more coverage link) are designed by human hands, it's Angelina's ability to act as a composer building something fun from the various ingredients that's so interesting. Before setting a level in stone she plays through the possible combinations, determining which will be most enjoyable for a human player. Hit up the source link for loads more info.

John McCarthy, AI pioneer, dies at 84

It might be a stretch to suggest that there'd be no AI without John McCarthy, but at the very least, we'd likely be discussing the concept much differently. The computer scientist, who died on Sunday at 84, is credited with coining the term "Artificial Intelligence" as part of a proposal for a Dartmouth conference on the subject. The event, held in 1956, is regarded as a watershed moment for the subject. Early the following decade, McCarthy pioneered LISP, a highly popular programming language amongst the AI development community. In 1971, he won a Turing Award from the Association for Computing Machinery and 20 years later was awarded the National Medal of Science. A more complete obituary for McCarthy can be found in the source link below. [Thanks, Jason]

Siri and the possibility of artificial intelligence

Wired does a little speculating over on the Cloudline blog about whether or not Apple's redesigned Siri service actually counts as an AI. Technically, no, Siri's not a real artificial intelligence. When you ask "her" something and she comes back with a witty answer, your iPhone doesn't actually "understand" what you said in any meaningful way -- it's just identifying a set of words that you put together, and then outputting some data based on those words. Sometimes that's movie times or nearby store locations, and sometimes that's just a witty phrase that Apple engineers have programmed into the system. But of course, while programmers have been creating these "chatbots" for years, Siri has an advantage in that it runs on the cloud; Apple is constantly updating Siri's phrases and responses, which means that "her" answers will only get more appropriate over time. And while the system works as is, you have to imagine that Apple is collecting lots of information from it, including both what people are asking of Siri, and how they're asking for it. The more Apple learns about how to deal with that information, the better Siri will get at providing the right answer at the right time. That will make Siri "smarter" than ever. Until Apple hooks it up to an as-yet-uninvented thinking engine, it still won't "understand" your queries in the same way that a real human would -- or even in the way that a hyper-parallel quiz show competitor does. But for a lot of people, that doesn't much matter. As long as Siri responds correctly and helpfully, that's as good as many people need in terms of the payoff from artificial intelligence.
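The keyword matching described above can be sketched roughly as follows. This is a toy illustration only, not how Siri is actually built: the `CANNED_RESPONSES` table, the `respond` function and every phrase in them are hypothetical, made up to show a program that picks an answer from the words it spots without "understanding" anything.

```python
import re

# Hypothetical keyword-to-reply table; none of these entries come from Siri.
CANNED_RESPONSES = {
    frozenset({"movie", "times"}): "Here are tonight's showtimes.",
    frozenset({"nearby", "store"}): "I found three stores near you.",
    frozenset({"meaning", "life"}): "42, or so I'm told.",
}

FALLBACK = "Sorry, I don't understand."


def respond(utterance: str) -> str:
    """Return the first canned reply whose keywords all appear in the utterance."""
    # Lowercase the input and split it into a set of plain words.
    words = set(re.findall(r"[a-z']+", utterance.lower()))
    for keywords, reply in CANNED_RESPONSES.items():
        # A rule fires when every one of its keywords was spoken.
        if keywords <= words:
            return reply
    return FALLBACK


if __name__ == "__main__":
    print(respond("What are the movie times tonight?"))
    print(respond("Is there a store nearby?"))
    print(respond("Why is the sky blue?"))
```

Updating the table on a server, rather than on the phone, is the kind of change that would let the replies improve over time without the program getting any closer to comprehension.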

Switched On: As Siri gets serious

Each week Ross Rubin contributes Switched On, a column about consumer technology. Nearly 15 years passed between Apple's first foray into handheld electronics -- the Newton MessagePad -- and the far more successful iPhone. But while phones have replaced PDAs for all intents and purposes, few if any have tried to be what Newton really aspired to -- an intelligent assistant that would seamlessly blend into your life. That has changed with Siri, the standout feature of iOS 5 on the iPhone 4S, which could aptly be described as a "personal digital assistant" if there weren't so much baggage tied to that term. Siri is far more than parlor entertainment or a simple leapfrogging of the voice control support in Android and Windows Phone. At the other end of the potential spectrum, Siri may not be a new platform in itself (although at this point Apple has somewhat sandboxed the experience). In any case, though, Siri certainly paves the way for voice as an important component of a rich multi-input digital experience. It steps toward the life-management set of functionality that the bow-tied agent immortalized in Apple's 1987 Knowledge Navigator video could achieve.