ImageRecognition

Microsoft's wacky AI app matches you with a dog breed

Artificial intelligence (AI) is getting pretty good at identifying people, but before it starts looking for Sarah Connor, Microsoft's Garage team is having fun with it. Previously, the group released an app that (poorly) guessed your age; its latest app, Fetch, determines what dog breed you'd be based on your photo. It sometimes makes canny matches, but it lacks consistency: three different pictures of the same person yielded three different breeds, an Afghan hound, a Cairn terrier and a beagle.

Smart car algorithm sees pedestrians as well as you can

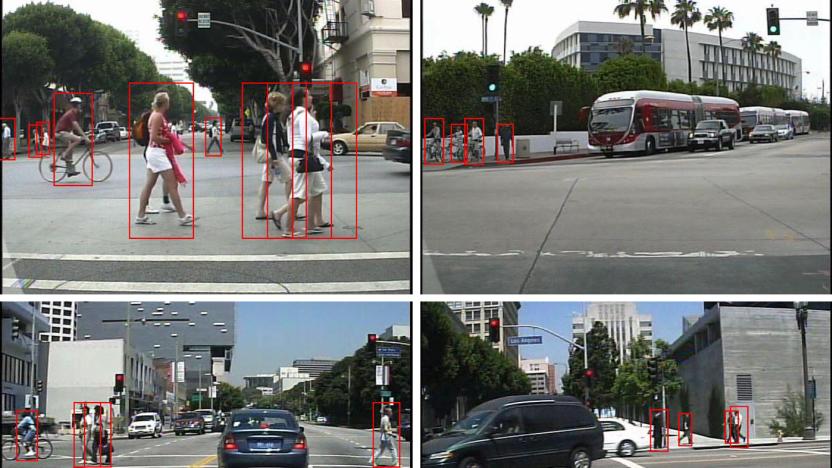

It's one thing for computers to spot people in relatively tame academic situations, but it's another when they're on the road -- you need your car to spot that jaywalker in time to avoid a collision. UC San Diego researchers have brought that goal closer: they've crafted a pedestrian detection algorithm that's much quicker and more accurate than existing systems. It can spot people at 2 to 4 frames per second, roughly as well as humans can, while making half as many mistakes as existing systems. That could make the difference between a graceful stop and sudden, scary braking.
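
The article doesn't explain how the UC San Diego detector gets its speed. One widely used trick in detection is a cascade: cheap tests throw out most candidate image windows early, so only promising ones reach the expensive stages. The sketch below is a generic illustration of that idea, not the researchers' code; the window format, stage functions and thresholds are all hypothetical. For scale, 2 to 4 frames per second leaves roughly 250 to 500 milliseconds to process each frame.

```python
from typing import Callable, List, Sequence, Tuple

Window = Tuple[int, int, int, int]  # (x, y, width, height): hypothetical box format
Stage = Tuple[Callable[[Window], float], float]  # (score function, minimum score to pass)


def cascade_detect(windows: Sequence[Window], stages: Sequence[Stage]) -> Tuple[List[Window], int]:
    """Pass each window through the stages in order, rejecting it at the first failure.

    Returns the surviving windows plus the number of stage evaluations performed,
    which shows how early rejection avoids running the later, costlier stages.
    """
    accepted: List[Window] = []
    evaluations = 0
    for win in windows:
        for score_fn, threshold in stages:
            evaluations += 1
            if score_fn(win) < threshold:
                break  # rejected: skip the remaining stages for this window
        else:
            accepted.append(win)  # survived every stage
    return accepted, evaluations


# Hypothetical stages: a cheap shape test (people are taller than they are wide),
# then a stand-in for an expensive classifier.
def tall_enough(w: Window) -> float:
    return w[3] / w[2]


def fake_classifier(w: Window) -> float:
    return 1.0 if w[0] < 100 else 0.0


windows = [(10, 0, 20, 60), (50, 0, 60, 20), (150, 0, 20, 60)]
hits, cost = cascade_detect(windows, [(tall_enough, 2.0), (fake_classifier, 0.5)])
print(hits, cost)  # [(10, 0, 20, 60)] 5
```

The wide second window is discarded by the cheap shape test alone, so the stand-in classifier runs only twice instead of three times.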

Algorithm turns any picture into the work of a famous artist

A group of German researchers has created an algorithm that amounts to the most amazing Instagram filter ever conceived: a convolutional neural network that can convert any photograph into a work of fine art. The process takes an hour (sorry, it's not coming to a smartphone near you), and the math behind it is horrendously complicated, but the results speak for themselves.
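
The article skips the math, so this is a hedged sketch of one well-known ingredient of neural style transfer rather than the German team's exact method: an image's "style" at a given CNN layer can be summarized by the Gram matrix of that layer's feature maps, and the output image is optimized until its Gram matrices match the painting's. Extracting feature maps with a real network is left out, and the toy numbers are arbitrary.

```python
from typing import List

Matrix = List[List[float]]


def gram_matrix(features: Matrix) -> Matrix:
    """features[c] holds channel c of a CNN layer, flattened over spatial positions.

    G[i][j] is the inner product of channels i and j, i.e. how strongly two
    feature detectors fire together anywhere in the image.
    """
    n = len(features)
    return [[sum(a * b for a, b in zip(features[i], features[j])) for j in range(n)]
            for i in range(n)]


def style_loss(generated: Matrix, artwork: Matrix) -> float:
    """Squared Gram-matrix difference for one layer, scaled by 1 / (4 N^2 M^2),
    where N is the number of channels and M the number of spatial positions."""
    n, m = len(generated), len(generated[0])
    g, a = gram_matrix(generated), gram_matrix(artwork)
    sq = sum((g[i][j] - a[i][j]) ** 2 for i in range(n) for j in range(n))
    return sq / (4 * n ** 2 * m ** 2)


# Toy layer with 2 channels over 2 positions.
print(style_loss([[1.0, 0.0], [0.0, 1.0]], [[1.0, 1.0], [1.0, 1.0]]))  # 0.15625
```

Because the Gram matrix sums over positions, it discards where things are and keeps which textures co-occur, which is one reason this kind of optimization is slow: every step has to push the whole image toward matching statistics.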

Smart camera warns you when guns enter your home

Anxious that you might face an armed home invasion, or that your kids might find the key to the gun cabinet? NanoWatt Design thinks it has a way to give you that crucial early warning. Its crowdfunded GunDetect camera uses computer vision to detect firearms and send a text alert to your phone. If it works as promised, you'll know there's an intruder at the door, or that you need to rush into the den before there's a terrible accident. If you're intrigued, it'll take a minimum pledge of $349 to reserve a Cloud GunDetect (which requires a service subscription to process images) and $549 for a Premium model that does all the image recognition work itself. Provided that NanoWatt reaches its funding goal, both cameras should ship in February.

Military AI interface helps you make sense of thousands of photos

It's easy to find computer vision technology that detects objects in photos, but it's still tough to sift through large photo collections... and that's a big challenge for the military, where finding the right picture could mean taking out a target or spotting a terrorist threat. Thankfully, the US armed forces may soon have a way to not only spot items in large image libraries, but also help human observers find them. DARPA's upcoming, artificial intelligence-backed Visual Media Reasoning system both detects what's in a shot and presents it in a simple interface that bunches photos and videos together based on patterns. If you want to know where a distinctive-looking car has been, for example, you might only need to look in a single group.
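
The article doesn't say how Visual Media Reasoning groups its shots. A simple, classic way to bunch images by pattern is k-means clustering over feature vectors, sketched below as an assumption rather than DARPA's method. The 2-D points are hypothetical stand-ins for whatever features a real detector would output.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, ...]


def kmeans(points: Sequence[Point], k: int, iters: int = 20) -> List[int]:
    """Return a cluster index for each point using Lloyd's k-means algorithm."""
    centroids = [tuple(p) for p in points[:k]]  # deterministic init: the first k points
    labels = [0] * len(points)
    for _ in range(iters):
        # Assignment step: each point joins its nearest centroid (Euclidean distance).
        labels = [min(range(k), key=lambda c: math.dist(p, centroids[c])) for p in points]
        # Update step: move each centroid to the mean of its members.
        new_centroids = []
        for c in range(k):
            members = [p for p, lab in zip(points, labels) if lab == c]
            if members:
                new_centroids.append(tuple(sum(dim) / len(members) for dim in zip(*members)))
            else:
                new_centroids.append(centroids[c])  # leave an empty cluster where it was
        if new_centroids == centroids:
            break  # converged: assignments can no longer change
        centroids = new_centroids
    return labels


# Hypothetical features for five shots: two tight groups.
print(kmeans([(0, 0), (5, 5), (0, 1), (5, 6), (1, 0)], k=2))  # [0, 1, 0, 1, 0]
```

Real systems usually pick the initial centroids more carefully (k-means++ is a common choice); taking the first k points just keeps this example reproducible.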

Facebook and Google get neural networks to create art

For Facebook and Google, it's not enough for computers to recognize images... they should create images, too. Both tech firms have just shown off neural networks that automatically generate pictures based on their understanding of what objects look like. Facebook's approach uses two of these networks to produce tiny thumbnail images. The technique is much like what you'd experience if you learned painting from a harsh (if not especially daring) critic. The first network creates pictures from a random vector, while the second checks them for realistic objects and rejects the fake-looking shots; over time, you're left with the most convincing results. The current output is good enough that 40 percent of the pictures fooled human viewers, and they may become more realistic with further refinements.
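
The two-network setup Facebook describes is a generative adversarial network (GAN): a generator turns random vectors into images, and a discriminator outputs the probability that an image is real. The sketch below computes only the standard adversarial losses from the discriminator's outputs; the networks and their training loop are omitted, and the generator uses the common "non-saturating" form of its loss, which is one of several variants in use.

```python
import math
from typing import Sequence


def bce(p: float, target: float, eps: float = 1e-12) -> float:
    """Binary cross-entropy for one predicted probability p against a 0/1 target."""
    p = min(max(p, eps), 1 - eps)  # clamp to avoid log(0)
    return -(target * math.log(p) + (1 - target) * math.log(1 - p))


def discriminator_loss(d_real: Sequence[float], d_fake: Sequence[float]) -> float:
    """The critic is penalized for calling real images fake or fake images real."""
    real = sum(bce(p, 1.0) for p in d_real) / len(d_real)
    fake = sum(bce(p, 0.0) for p in d_fake) / len(d_fake)
    return real + fake


def generator_loss(d_fake: Sequence[float]) -> float:
    """Non-saturating generator loss: the painter is penalized when its fakes are spotted."""
    return sum(bce(p, 1.0) for p in d_fake) / len(d_fake)


# A generator whose fakes fool the critic (D = 0.9) has a lower loss than one that doesn't.
print(generator_loss([0.9]) < generator_loss([0.1]))  # True
```

Training alternates between lowering the discriminator loss and lowering the generator loss, which is the "harsh critic" loop the article describes.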

Google hopes to count the calories in your food photos

Be careful about snapping pictures of your obscenely tasty meals -- one day, your phone might judge you for them. Google recently took the wraps off Im2Calories, a research project that uses deep learning algorithms to count the calories in food photos. The software spots the individual items on your plate and creates a final tally based on the calorie info available for those dishes. If it doesn't properly guess what you're eating, you can correct it yourself and improve the system over time. Ideally, Google will also draw on the collective wisdom of foodies to create a truly smart dietary tool; with enough experience, it could give you a solid estimate of how much energy you'll have to burn off at the gym.
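
The tallying step the article describes is simple to sketch: recognize each item, look up its calories, add them up, and let the user override a wrong guess. This assumes recognition has already produced text labels; the dish names and values in `CALORIES` are hypothetical placeholders, not nutrition data, and Google's actual pipeline isn't shown.

```python
from typing import Dict, List, Optional

# Hypothetical per-serving calorie table; placeholder numbers for illustration only.
CALORIES: Dict[str, int] = {"burger": 500, "fries": 300, "salad": 150, "soda": 200}


def tally(detected: List[str], corrections: Optional[Dict[int, str]] = None) -> int:
    """Sum calories for the detected items.

    `corrections` maps an item's position to the label the user says is right;
    an unknown label raises KeyError rather than silently counting as zero.
    """
    corrections = corrections or {}
    labels = [corrections.get(i, label) for i, label in enumerate(detected)]
    return sum(CALORIES[label] for label in labels)


plate = ["burger", "fries", "soda"]
print(tally(plate))             # 1000
print(tally(plate, {2: "salad"}))  # 950: the user says the "soda" was actually a salad
```

In a system that learns from users, each correction would also be logged as a new training example.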

Brain-like circuit performs human tasks for the first time

There are already computer chips with brain-like functions, but having them perform brain-like tasks? That's another challenge altogether. Researchers at UC Santa Barbara aren't daunted, however -- they've used a basic, 100-synapse neural circuit to perform the typical human task of image classification for the first time. The memristor-based technology (which, by its nature, behaves like an 'analog' brain) managed to identify letters despite visual noise that complicated the task, much as you would spot a friend on a crowded street. Conventional computers can do this, but they'd need a much larger, more power-hungry chip to replicate the same pseudo-organic behavior.
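
In memristor hardware, each device's conductance acts as a synaptic weight, and a crossbar of them computes weighted sums of input voltages directly in the analog domain. The closest software analogue is a single-layer perceptron. This sketch trains one on three hypothetical 3x3 letter patterns and then classifies a copy with a flipped pixel; the patterns, the update rule and the epoch limit are illustrative assumptions, not details of the UC Santa Barbara circuit.

```python
from typing import Dict, List

# Hypothetical 3x3 letter patterns: +1 = dark pixel, -1 = light pixel.
LETTERS: Dict[str, List[int]] = {
    "z": [1, 1, 1, -1, 1, -1, 1, 1, 1],
    "v": [1, -1, 1, 1, -1, 1, -1, 1, -1],
    "n": [1, -1, 1, 1, 1, 1, 1, -1, 1],
}
CLASSES = list(LETTERS)


def scores(weights: List[List[float]], pixels: List[int]) -> List[float]:
    """Weighted sum per output neuron (what a crossbar column computes as a current)."""
    x = pixels + [1]  # constant bias input
    return [sum(w * xi for w, xi in zip(row, x)) for row in weights]


def train(max_epochs: int = 100) -> List[List[float]]:
    """Multi-class perceptron: on a mistake, reinforce the true class and weaken the top rival."""
    weights = [[0.0] * 10 for _ in CLASSES]  # 9 pixels + bias per output neuron
    for _ in range(max_epochs):
        mistakes = 0
        for y, label in enumerate(CLASSES):
            x = LETTERS[label] + [1]
            s = scores(weights, LETTERS[label])
            rival = max((c for c in range(len(CLASSES)) if c != y), key=lambda c: s[c])
            if s[y] <= s[rival]:  # require a strict win, so ties count as mistakes
                mistakes += 1
                weights[y] = [w + xi for w, xi in zip(weights[y], x)]
                weights[rival] = [w - xi for w, xi in zip(weights[rival], x)]
        if mistakes == 0:
            break
    return weights


def classify(weights: List[List[float]], pixels: List[int]) -> str:
    s = scores(weights, pixels)
    return CLASSES[max(range(len(CLASSES)), key=lambda c: s[c])]


weights = train()
noisy_z = list(LETTERS["z"])
noisy_z[4] = -noisy_z[4]  # flip the center pixel to simulate visual noise
print(classify(weights, noisy_z))  # z
```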

Start shopping directly from Bing image search

Microsoft continues to refine Bing in the hopes it can steal some of Google's search-dominance thunder. Today it updated Bing image search results with links to buy the item in the photo you select. The feature is still in beta, but once you select a photo and scroll down, a list of online retailers where the item can be purchased appears. Scrolling down from a selected result also surfaces related images, Pinterest collections, pages containing the image and more sizes of the selected image. If you're a fan of window shopping on your computer, Bing's updated image search might be worth checking out.

Microsoft's imaging tech is (sometimes) better than you at spotting objects

Many computer vision projects struggle to mimic what people can achieve, but Microsoft Research thinks that its technology might have already trumped humanity... to a degree, that is. The company has published results showing that its neural network technology made fewer mistakes recognizing objects than humans in an ImageNet challenge, slipping up on 4.94 percent of pictures versus 5.1 percent for humans. One of the keys was a "parametric rectified linear unit" function (try saying that three times fast) that improves accuracy without any real hit to processing performance.
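
The parametric rectified linear unit (PReLU) is simple to state: it passes positive inputs through unchanged and multiplies the rest by a slope `a` that is learned during training, rather than zeroing them as a standard ReLU does. That adds only one parameter per channel, which is why it costs almost nothing in processing. A minimal sketch with a single shared slope:

```python
from typing import List


def prelu(xs: List[float], a: float) -> List[float]:
    """Parametric ReLU: identity for positive inputs, slope `a` for the rest.

    With a = 0 this reduces to the ordinary ReLU.
    """
    return [x if x > 0 else a * x for x in xs]


def prelu_grad_a(xs: List[float], upstream: List[float]) -> float:
    """Gradient of the loss with respect to the shared slope `a`:
    the upstream gradient times x, summed over the non-positive inputs."""
    return sum(g * x for x, g in zip(xs, upstream) if x <= 0)


print(prelu([-2.0, 0.0, 3.0], 0.25))  # [-0.5, 0.0, 3.0]
```

For reference, the gap between the reported 4.94 percent error and the 5.1 percent human figure is 0.16 percentage points.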

Computers can now describe images using language you'd understand

Software can now easily spot objects in images, but it can't always describe those objects well; "short man with horse" not only sounds awkward, it doesn't reveal what's really going on. That's where a computer vision breakthrough from Google and Stanford University might come into play. Their system combines two neural networks, one for image recognition and another for natural language processing, to describe a whole scene using phrases. The program needs to be trained with captioned images, but it produces much more intelligible output than you'd get by picking out individual items. Instead of simply noting that there's a motorcycle and a person in a photo, the software can tell that this person is riding a motorcycle down a dirt road. The software is also roughly twice as accurate at labeling previously unseen objects when compared to earlier algorithms, since it's better at recognizing patterns.

PhotoMath uses your phone's camera to solve equations

Need a little help getting through your next big math exam? MicroBlink has an app that could help you study more effectively -- perhaps too effectively. Its newly unveiled PhotoMath for iOS and Windows Phone (Android is due in early 2015) uses your smartphone's camera to scan math equations and not only solve them, but show the steps involved. Officially, it's meant to save you time flipping through a textbook to check answers when you're doing homework or cramming for a test. However, there's a concern that this could trivialize learning -- just because it shows you how to solve a problem doesn't mean that the knowledge will actually sink in. And if teachers don't confiscate smartphones at the door, unscrupulous students could cheat when no one is looking. The chances of that happening aren't very high at this stage, but apps like this suggest that schools might have to be vigilant in the future.
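
PhotoMath's recognition and solving engine isn't described in the article. To illustrate just the "show the steps" part, here's a toy solver for linear equations of the form a*x + b = c that records each algebraic move. It assumes the camera has already turned the equation into the three numbers, and `solve_linear` is a hypothetical name.

```python
from fractions import Fraction
from typing import List, Tuple


def solve_linear(a: Fraction, b: Fraction, c: Fraction) -> Tuple[Fraction, List[str]]:
    """Solve a*x + b = c exactly, recording each step as a line of text."""
    if a == 0:
        raise ValueError("not a linear equation in x (a == 0)")
    steps = [f"{a}x + {b} = {c}"]
    rhs = c - b
    steps.append(f"{a}x = {c} - {b} = {rhs}   (subtract {b} from both sides)")
    x = rhs / a
    steps.append(f"x = {rhs} / {a} = {x}   (divide both sides by {a})")
    return x, steps


x, steps = solve_linear(Fraction(3), Fraction(4), Fraction(19))
for line in steps:
    print(line)
print(x)  # 5
```

Using `Fraction` keeps answers such as 1/3 exact instead of printing a rounded decimal, which matters when the point is to show work a student can check by hand.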

Computers are learning to size up neighborhoods using photos

We humans are normally good at making quick judgments about neighborhoods. We can figure out whether we're safe, or if we're likely to find a certain store. Computers haven't had such an easy time of it, but that's changing now that MIT researchers have created a deep learning algorithm that sizes up neighborhoods roughly as well as humans. The code correlates what it sees in millions of Google Street View images with crime rates and points of interest; it can tell what a sketchy part of town looks like, or what you're likely to see near a McDonald's (taxis and police vans, apparently).
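
How the MIT model ties imagery to crime rates isn't spelled out here. The simplest reading of "correlates" is a statistic such as Pearson's r between a score computed for each street image (say, a hypothetical "sketchiness" output) and the measured rate for the same area. A minimal sketch of the statistic alone:

```python
import math
from typing import Sequence


def pearson_r(xs: Sequence[float], ys: Sequence[float]) -> float:
    """Pearson correlation: +1 means the values rise together perfectly,
    -1 means one falls as the other rises, 0 means no linear relationship."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two equal-length sequences with at least 2 values")
    mx, my = sum(xs) / len(xs), sum(ys) / len(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    var_x = sum((x - mx) ** 2 for x in xs)
    var_y = sum((y - my) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)


print(pearson_r([1, 2, 3], [2, 4, 6]))  # 1.0
```

A strong correlation shows that the visual score and the rate move together; it doesn't by itself show that what the camera sees causes crime.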

Google's latest object recognition tech can spot everything in your living room

Automatic object recognition in images is currently tricky. Even if a computer has the help of smart algorithms and human assistants, it may not catch everything in a given scene. Google might change that soon, though; it just detailed a new detection system that can easily spot lots of objects in a scene, even if they're partly obscured. The key is a neural network that can rapidly refine the criteria it's looking for without requiring a lot of extra computing power. The result is a far deeper scanning system that can both identify more objects and make better guesses -- it can spot tons of items in a living room, including (according to Google's odd example) a flying cat. The technology is still young, but the internet giant sees its recognition breakthrough helping everything from image searches through to self-driving cars. Don't be surprised if it gets much easier to look for things online using only the vaguest of terms.
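
Google's detector isn't described in enough detail to reproduce, but many detectors that find lots of objects in one scene end with a cleanup step called non-maximum suppression (NMS): overlapping boxes are compared by intersection-over-union (IoU), and only the highest-scoring box in each overlapping cluster survives. A minimal greedy sketch; the box format and the 0.5 threshold are assumptions.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2): assumed corner format


def iou(a: Box, b: Box) -> float:
    """Area of the overlap divided by the area of the union of two boxes."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def nms(boxes: List[Box], scores: List[float], threshold: float = 0.5) -> List[int]:
    """Greedy NMS: visit boxes from highest to lowest score, keeping a box only
    if it doesn't overlap an already-kept box by more than `threshold` IoU."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep: List[int] = []
    for i in order:
        if all(iou(boxes[i], boxes[k]) <= threshold for k in keep):
            keep.append(i)
    return keep


boxes = [(0, 0, 10, 10), (1, 1, 11, 11), (20, 20, 30, 30)]
print(nms(boxes, [0.9, 0.8, 0.7]))  # [0, 2]: box 1 is a near-duplicate of box 0
```

The threshold is a trade-off that matters for partly obscured objects: set it too low and two genuinely different objects that overlap heavily can be merged into one detection.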

Competition coaxes computers into seeing our world more clearly

As surely as the seasons turn and the sun races across the sky, the ImageNet Large Scale Visual Recognition Challenge (or ILSVRC2014, for those in the know) came to a close this week. That might not mean much to you, but it does mean some potentially big things for those trying to teach computers to "see". You see, the competition -- which has been running annually since 2010 -- fields teams from Oxford, the National University of Singapore, the Chinese University of Hong Kong and Google that cook up awfully smart software meant to coax high-end machines into recognizing what's happening in pictures as well as we can.
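
The competition's best-known classification metric is top-5 error: a prediction counts as correct if the true label appears anywhere among the model's five highest-ranked guesses. The 4.94 and 5.1 percent figures in the Microsoft item are top-5 error rates. A minimal sketch of the metric, with hypothetical labels:

```python
from typing import Sequence


def top_k_error(ranked: Sequence[Sequence[str]], labels: Sequence[str], k: int = 5) -> float:
    """Fraction of images whose true label is absent from the model's k best guesses.

    ranked[i] lists the model's guesses for image i, most confident first.
    """
    misses = sum(1 for guesses, label in zip(ranked, labels) if label not in guesses[:k])
    return misses / len(labels)


guesses = [["cat", "dog", "fox", "owl", "cow", "emu"],
           ["car", "bus", "van", "cab", "jeep", "truck"]]
truth = ["cow", "truck"]
print(top_k_error(guesses, truth))       # 0.5 ("truck" is only the 6th guess)
print(top_k_error(guesses, truth, k=1))  # 1.0
```

Allowing five guesses keeps the test fair on photos that plausibly contain several of the challenge's categories.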

Microsoft's image-recognition AI is a stickler for the details

Computer scientists have been modeling networks after the human brain and teaching machines to think independently for years, tackling tasks like reading documents and interpreting speech cues. Image recognition is another useful chore for neural networks, and Microsoft Research has just offered a peek at its recent dive into the matter. Project Adam is a deep-learning system that's been taught to complete image-recognition tasks 50 times faster and twice as accurately as its predecessors. So, what does that mean? Well, instead of just determining the breed in a canine snapshot, the tech can also distinguish between American and English Cocker Spaniels. The team is looking into adding speech and text recognition as well, so your next virtual assistant may not only wrangle your schedule and commute, but also constantly learn from the world you live in.

App lets you attach digital messages to real-world objects... for fun?

For those who don't know, Project Tango is a Google-built prototype smartphone jam-packed with Kinect-like 3D sensors and components. One of Mountain View's software partners on the project, FlyBy Media, has built what it's calling the first consumer app capable of using Tango's image recognition skills to... chat with friends. Dubbed FlyBy, the app lets users share text and video messages by attaching them to a real-world object, like a menu at your favorite restaurant or a collectible from your honeymoon. Recipients are notified once they're in close proximity; then they need only scan said object and voila, message received. While the concept isn't new -- or popular... yet -- the company believes that this time people will catch on. Just think of it as geocaching your conversations. That could be fun, right?

BYU image algorithm can recognize objects without any human help

Even the smartest object recognition systems tend to require at least some human input to be effective, even if it's just to get the ball rolling. Not a new system from Brigham Young University, however. A team led by Dah-Jye Lee has built a genetic algorithm that decides which features are important all on its own. The code doesn't need to reset whenever it looks for a new object, and it's accurate to the point where it can reliably pick out subtle differences -- different varieties of fish, for instance. There's no word on just when we might see this algorithm reach the real world, but Lee believes that it could spot invasive species and manufacturing defects without requiring constant human oversight. Let's just hope it doesn't decide that we're the invasive species.
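
The article doesn't describe BYU's algorithm beyond calling it genetic. The general idea of letting evolution decide which features matter can be sketched with bit-string genomes (1 means "use this feature"), survival of the fittest half, crossover and mutation. The fitness function below is a hypothetical stand-in that rewards a hidden set of useful features and penalizes extras; a real system would instead score how well the chosen features separate, say, fish varieties.

```python
import random
from typing import Callable, List

Genome = List[int]  # genome[i] == 1 means "use feature i"


def evolve(fitness: Callable[[Genome], float], n_features: int, pop_size: int = 30,
           generations: int = 60, mutation_rate: float = 0.05, seed: int = 0) -> Genome:
    """Evolve a feature mask that maximizes `fitness`."""
    rng = random.Random(seed)  # seeded so runs are reproducible
    pop = [[rng.randint(0, 1) for _ in range(n_features)] for _ in range(pop_size)]
    for _ in range(generations):
        pop.sort(key=fitness, reverse=True)
        parents = pop[: pop_size // 2]  # truncation selection: the fitter half survives
        children: List[Genome] = []
        while len(parents) + len(children) < pop_size:
            mom, dad = rng.sample(parents, 2)
            cut = rng.randrange(1, n_features)  # single-point crossover
            child = mom[:cut] + dad[cut:]
            # Mutation: flip each bit with a small probability.
            child = [1 - g if rng.random() < mutation_rate else g for g in child]
            children.append(child)
        pop = parents + children
    return max(pop, key=fitness)


# Hypothetical fitness: features 1, 4 and 7 are the "useful" ones.
USEFUL = {1, 4, 7}


def toy_fitness(genome: Genome) -> int:
    hits = sum(genome[i] for i in USEFUL)
    extras = sum(genome) - hits
    return 2 * hits - extras


best = evolve(toy_fitness, n_features=10)
print(best, toy_fitness(best))
```

Because the fitter half is always carried forward unchanged, the best score in the population never gets worse from one generation to the next.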

Carnegie Mellon computer learns common sense through pictures, shows what it's thinking

Humans have a knack for making visual associations, but computers don't have it so easy; we often have to tell them what they see. Carnegie Mellon's recently launched Never Ending Image Learner (NEIL) supercomputer bucks that trend by forming those connections itself. Building on the university's earlier NELL research, the 200-core cluster scours the internet for images and defines objects based on the common attributes that it finds. It knows that buildings are frequently tall, for example, and that ducks look like geese. While NEIL is occasionally prone to making mistakes, it's also transparent -- a public page lets you see what it's learning, and you can suggest queries if you think there's a gap in the system's logic. The project could eventually lead to computers and robots with a much better understanding of the world around them, even if they never quite gain human-like perception.

Mhoto analyzes any image, gives it an appropriate, customized soundtrack

When we see a picture of the Notorious B.I.G., the hook from "Hypnotize" starts streaming in our heads. Imagine, if you will, an app that analyzes your picture and creates a soundtrack suited to it. Mhoto does just that: it can synthesize an appropriate tune for any digital photograph. Mhoto's magic comes courtesy of patent-pending technology that analyzes a picture's saturation, brightness and contrast levels and uses that information to create music tailored to fit the feel of the photo -- and the company's working on a way to integrate facial recognition into the mix to make mood-based music, too. Users can also choose the musical genre for the generated tunes (Hip Hop, Rock, Pop, etc.). The best part is that the heavy lifting is done in Amazon's cloud, so Mhoto can work on any device with a data connection, even a feature phone.
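
Mhoto's patent-pending method isn't public, but the three inputs the article names are easy to compute. This sketch measures mean brightness (Rec. 601 luma), mean HSV saturation and contrast (the standard deviation of brightness) for a list of RGB pixels, then maps them to tempo, key mode and intensity with an invented rule. The mapping in `pick_music` is purely hypothetical and only shows the shape of such a pipeline.

```python
import colorsys
import statistics
from typing import Dict, List, Tuple, Union

Pixel = Tuple[int, int, int]  # 8-bit RGB


def image_features(pixels: List[Pixel]) -> Dict[str, float]:
    """Brightness, saturation and contrast, each on a 0 to 1 scale."""
    luma = [(0.299 * r + 0.587 * g + 0.114 * b) / 255 for r, g, b in pixels]
    sat = [colorsys.rgb_to_hsv(r / 255, g / 255, b / 255)[1] for r, g, b in pixels]
    return {
        "brightness": statistics.mean(luma),
        "saturation": statistics.mean(sat),
        "contrast": statistics.pstdev(luma),  # spread of brightness values
    }


def pick_music(features: Dict[str, float]) -> Dict[str, Union[int, str]]:
    """Hypothetical mapping: brighter, more colorful photos get faster, major-key tunes."""
    tempo = round(70 + 80 * (features["brightness"] + features["saturation"]) / 2)  # 70 to 150 BPM
    mode = "major" if features["brightness"] >= 0.5 else "minor"
    intensity = "punchy" if features["contrast"] > 0.25 else "mellow"
    return {"tempo_bpm": tempo, "mode": mode, "intensity": intensity}


print(pick_music(image_features([(0, 0, 0)] * 4)))
# {'tempo_bpm': 70, 'mode': 'minor', 'intensity': 'mellow'}
```

These statistics are cheap enough to run on a phone; the expensive part in a product like Mhoto would be synthesizing the music itself, which fits with the article's note that the heavy lifting happens in the cloud.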