neural network

Latest

Here's how Alexa learned to speak Spanish without your help

Now that Alexa knows how to speak Spanish in the US, there's a common question: how did it learn the language when it didn't have the benefit of legions of users issuing commands? Through new tools, it seems. Amazon has revealed a pair of systems that helped Alexa hone its español (and Hindi, and Brazilian Portuguese) using just a tiny amount of reference material. Effectively, they gave the natural language machine learning model a jumpstart.

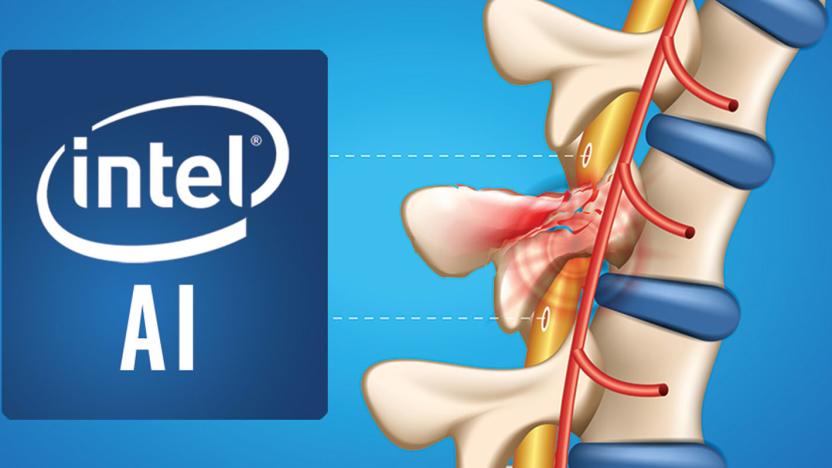

Intel wants to use AI to reconnect damaged spinal nerves

AI's use in medicine could soon extend to one of the medical world's toughest challenges: helping the paralyzed regain movement. Intel and Brown University have started work on a DARPA-backed Intelligent Spine Interface project that would use AI to restore movement and bladder control for those with serious spinal cord injuries. The two-year effort will have scientists capture motor and sensory signals from the spinal cord, while surgeons will implant electrodes on both ends of an injury to create an "intelligent bypass." From there, neural networks running on Intel tools will (hopefully) learn how to communicate motor commands through the bypass and restore functions lost to severed nerves.

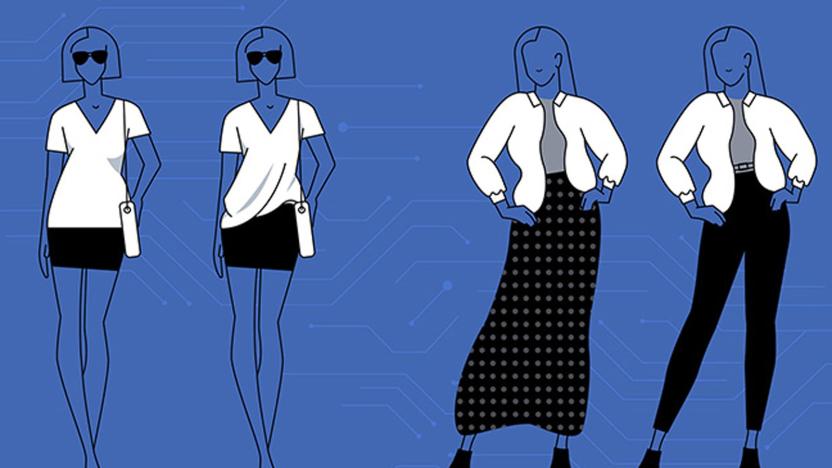

Facebook’s latest AI experiment helps you pick what to wear

Just when you think researchers have found the wackiest possible use for a neural net yet, another team finds an even more novel use for artificial intelligence. Take Facebook's new Fashion++ AI. It's a program that will help you become a fashionista.

Things get weird when a neural net is trained on text adventure games

We've seen people turn neural networks to almost everything from drafting pickup lines to a new Harry Potter chapter, but it turns out classic text adventure games may be one of the best fits for AI yet. This latest glimpse into what artificial intelligence can do was created by a neuroscience student named Nathan. Nathan trained GPT-2, a neural net designed to create predictive text, on classic PC text adventure games. Inspired by the Mind Game in Ender's Game, his goal was to create a game that would react to the player. Since he uploaded the resulting game to a Google Colab notebook, people like research scientist Janelle Shane have had fun seeing what a text adventure created by an AI looks like.

AI learns to solve a Rubik's Cube in 1.2 seconds

Researchers at the University of California, Irvine have created an artificial intelligence system that can solve a Rubik's Cube in an average of 1.2 seconds, using about 20 moves. That's more than two seconds faster than the current human world record of 3.47 seconds, and people who can finish the puzzle quickly usually need about 50 moves.

Steam's new experiment hub includes AI-based game recommendations

Valve is tinkering with the way Steam works, and it wants you to try those experiments for yourself. It's launching a Steam Labs section with usable "works-in-progress" that might make it to the regular game portal if there's enough positive feedback. Only three projects are available to start, but at least one of them could be genuinely useful if you're scrounging for new games to play.

AI can simulate quantum systems without massive computing power

It's difficult to simulate quantum physics, as the computing demand grows exponentially the more complex the quantum system gets -- even a supercomputer might not be enough. AI might come to the rescue, though. Researchers have developed a computational method that uses neural networks to simulate quantum systems of "considerable" size, no matter what the geometry. To put it relatively simply, the team combines familiar methods of studying quantum systems (such as Monte Carlo random sampling) with a neural network that can simultaneously represent many quantum states.
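As a rough illustration of the Monte Carlo random sampling mentioned above, here is a minimal sketch. It assumes a toy one-dimensional wavefunction, psi(x) = exp(-x²/2), in place of the researchers' neural network, and the function names are hypothetical. The sampler draws positions with probability proportional to |psi|² and averages an observable over them, so this is not the team's actual method.

```python
import math
import random


def psi(x):
    """Toy wavefunction (assumption): an unnormalized Gaussian."""
    return math.exp(-0.5 * x * x)


def metropolis_mean(observable, n_samples, step=1.0, seed=0):
    """Estimate the average of `observable` under |psi|^2 by Metropolis sampling."""
    rng = random.Random(seed)
    x = 0.0
    total = 0.0
    for _ in range(n_samples):
        candidate = x + rng.uniform(-step, step)
        # Accept the move with probability min(1, |psi(candidate)|^2 / |psi(x)|^2).
        if rng.random() < (psi(candidate) / psi(x)) ** 2:
            x = candidate
        total += observable(x)
    return total / n_samples


# For this toy wavefunction the exact average of x^2 is 0.5.
estimate = metropolis_mean(lambda x: x * x, n_samples=50_000)
print(f"Estimated <x^2>: {estimate:.3f}")
```

In a neural-network approach, the hand-written `psi` would presumably be replaced by a trained model, while the sampling loop would keep the same basic shape.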

Google researchers trained AI with your Mannequin Challenge videos

Way back in 2016, thousands of people participated in the Mannequin Challenge. As you might remember, it was an internet phenomenon in which people held random poses while someone with a camera walked around them. Those videos were shared on YouTube and many earned millions of views. Now, a team from Google AI is using the videos to train neural networks. The goal is to help AI better predict depth in videos where the camera is moving.

MIT is turning AI into a pizza chef

Never mind having robots deliver pizza -- if MIT and QCRI researchers have their way, the automatons will make your pizza as well. They've developed a neural network, PizzaGAN (Generative Adversarial Network), that learns how to make pizza using pictures. After training on thousands of synthetic and real pizza pictures, the AI knows not only how to identify individual toppings, but how to distinguish their layers and the order in which they need to appear. From there, the system can create step-by-step guides for making pizza using only one example photo as the starting point.

MIT’s sensor-packed glove helps AI identify objects by touch

Researchers have spent years trying to teach robots how to grip different objects without crushing or dropping them. They could be one step closer, thanks to this low-cost, sensor-packed glove. In a paper published in Nature, a team of MIT scientists share how they used the glove to help AI recognize objects through touch alone. That information could help robots better manipulate objects, and it may aid in prosthetics design.

Microsoft AI creates realistic speech with little training

Text-to-speech conversion is becoming increasingly clever, but there's a problem: it can still take plenty of training time and resources to produce natural-sounding output. Microsoft and Chinese researchers might have a more effective way. They've crafted a text-to-speech AI that can generate realistic speech using just 200 voice samples (about 20 minutes' worth) and matching transcriptions.

I listened to a Massive Attack record remixed by a neural network

Drums. Synth. The faintest hint of a vocal track. For a moment the song is familiar and I'm teleported back to the late '90s, listening to worn-out cassettes in the backseat of my parents' saloon. But then the track shifts in a way that I didn't expect, introducing samples that, while appropriate in tone and feeling, don't match up with my brain's subconscious. It's an odd sensation that I haven't felt before; as if someone has snuck into my flat and rearranged the furniture in small, barely-perceptible ways. I can't help but stand rooted to the spot, waiting to see how the song shifts next.

MIT finds smaller neural networks that are easier to train

Despite all the advancements in artificial intelligence, most AI-based products still rely on "deep neural networks," which are often extremely large and prohibitively expensive to train. Researchers at MIT are hoping to change that. In a paper presented today, the researchers reveal that neural networks contain "subnetworks" that are up to 10 times smaller and could be cheaper and faster to teach.
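The teaser does not say how the subnetworks are found. One common way to carve a smaller network out of a larger one is magnitude pruning, which is assumed here purely for illustration. The sketch below keeps the 10 percent of weights with the largest absolute value and zeroes out the rest, giving a "subnetwork" ten times smaller. The function names and the random weights are hypothetical.

```python
import random


def magnitude_prune(weights, keep_fraction):
    """Return a 0/1 mask that keeps the largest-magnitude weights."""
    if not 0 < keep_fraction <= 1:
        raise ValueError("keep_fraction must be in (0, 1]")
    n_keep = max(1, round(len(weights) * keep_fraction))
    # Rank weight indices from largest to smallest absolute value.
    ranked = sorted(range(len(weights)), key=lambda i: abs(weights[i]), reverse=True)
    keep = set(ranked[:n_keep])
    return [1 if i in keep else 0 for i in range(len(weights))]


def apply_mask(weights, mask):
    """Zero out every weight whose mask entry is 0."""
    return [w * m for w, m in zip(weights, mask)]


random.seed(0)
weights = [random.gauss(0, 1) for _ in range(1000)]  # stand-in for trained weights
mask = magnitude_prune(weights, keep_fraction=0.1)
subnetwork = apply_mask(weights, mask)
print(f"Kept {sum(mask)} of {len(weights)} weights")
```

In practice the surviving weights would then be retrained; this sketch only shows the masking step.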

AI generates non-stop stream of death metal

There's a limit to the volume of death metal humans can reproduce -- their fingers and vocal cords can only handle so much. Thanks to technology, however, you'll never have to go short. CJ Carr and Zack Zukowski recently launched a YouTube channel that streams a never-ending barrage of death metal generated by AI. Their Dadabots project uses a recurrent neural network to identify patterns in the music, predict the most common elements and reproduce them.

Turing Award winners include AI giants from Facebook and Google

The Turing Award has recognized some of the biggest names in AI and computing over the years, and the latest winners are particularly heavy hitters. The three prize recipients for 2018 are Google VP Geoffrey Hinton, Facebook's Yann LeCun and Yoshua Bengio, the Scientific Director of the giant AI research center Mila. All three helped "develop conceptual foundations" for deep neural networks, according to the Association for Computing Machinery, and created breakthroughs that showed the "practical advantages" of the technology.

MIT’s AI can train neural networks faster than ever before

In an effort "to democratize AI," researchers at MIT have found a way to use artificial intelligence to train machine-learning systems much more efficiently. Their hope is that the new time- and cost-saving algorithm will allow resource-strapped researchers and companies to automate neural network design. In other words, by bringing the time and cost down, they could make this AI technique more accessible.

How Apple reinvigorated its AI aspirations in under a year

At its WWDC 2017 keynote on Monday, Apple showed off the fruits of its AI research labors. We saw a Siri assistant that's smart enough to interpret your intentions, an updated Metal 2 graphics suite designed for machine learning and a Photos app that can do everything its Google rival does without an internet connection. Being at the front of the AI pack is a new position for Apple to find itself in. Despite setting off the AI arms race when it introduced Siri in 2011, Apple has long lagged behind its competitors in this field. It's amazing what a year of intense R&D can do.

Google's neural network is now writing sappy poetry

After binge reading romance novels for the past few months, Google's neural network is suddenly turning into the kid from English Lit 101 class. The reading assignment was part of Google's plan to help the app sound more conversational, but the follow-up assignment to take what it learned and write some poetry turned out a little more sophomoric.

Artificial intelligence learns Mario level in just 34 attempts

Perhaps it's that all the levels have simple, left-to-right objectives, or maybe it's just that they're so iconic, but for some reason older Mario games have long been a target for those interested in AI and machine learning. The latest effort is called MarI/O (get it?), and it learned an entire level of Super Mario World in 34 tries.

Qualcomm's brain-like processor is almost as smart as your dog

Biological brains are sometimes overrated, but they're still orders of magnitude quicker and more power-efficient than traditional computer chips. That's why Qualcomm has been quietly funneling some of its prodigious income into a project called "Zeroth," which it hopes will one day give it a radical advantage over other mobile chip companies. According to Qualcomm's Matt Grob, Zeroth is a "biologically-inspired" processor that is modeled on real-life neurons and is capable of learning from feedback in much the same way as a human or animal brain does. And unlike some other so-called artificial intelligences we've seen, this one appears to work. The video after the break shows a Zeroth-controlled robot exploring an environment and then naturally adjusting its behavior in response not to lines of code, but to someone telling it whether it's being "good" or "bad." "Everything here is biologically realistic: spiking neurons implemented in hardware on that actual machine." Zeroth is advanced enough that Qualcomm says it's ready to work with other companies that want to develop applications to run on it. One particular focus is on building neural networks that will fit into mobile devices and enable them to learn from users who, unlike coders, aren't able or willing to instruct devices in the usual tedious manner. Grob even claims that, when a general-purpose Zeroth neural network is trained to do something specific, such as recognizing and tracking an object, it can already accomplish that task better than an algorithm designed solely for that function. Check out the source link to see Grob's full talk and more demo videos -- especially if you want to confirm your long-held suspicion that dogs are scarily good at math.