terrorism

Latest

Google manually reviewed a million suspected terrorist videos on YouTube

In the first three months of 2019, Google manually reviewed more than a million suspected "terrorist videos" on YouTube, Reuters reports. Of those reviewed, it deemed 90,000 violated its terrorism policy. That means somewhere around nine percent of the million videos were removed, suggesting that either the videos must be rather extreme to get cut, or the process that flags them for review is a bit of a catchall.

The NSA says it's time to drop its massive phone-surveillance program

The National Security Agency (NSA) has formally recommended that the White House drop the phone surveillance program that collects information about millions of US phone calls and text messages. The Wall Street Journal reports that people familiar with the matter say the logistical and legal burdens of maintaining the program outweigh any intelligence benefits it brings.

Sri Lanka temporarily bans social media after terrorist bombings

Extremist violence has once again prompted Sri Lanka to put a halt to social media in the country. The government has instituted a "temporary" ban on social networks, including Facebook, WhatsApp and Viber, after a string of apparently coordinated bombings that targeted churches and hotels on April 21st, killing over 200 people. Udaya Seneviratne, secretary to Sri Lanka's president, described the ban as an attempt to "prevent incorrect and wrong information" from spreading in the wake of the terrorist attacks.

Christchurch shooting videos are still on Facebook over a month later

Current methods for filtering out terrorist content are still quite limited, and a recent discovery makes that all too clear. Motherboard and the Global Intellectual Property Enforcement Center's Eric Feinberg have discovered that variants of the Christchurch mass shooter's video were available on Facebook 36 days after the incident despite Facebook's efforts to wipe them from the social network. Some of them were trimmed to roughly a minute, but they were all open to the public -- you just had to click a "violent or graphic content" confirmation to see them. Others appeared to dodge filtering attempts by using screen captures instead of the raw video.

EU law could fine sites for not removing terrorist content within an hour

The European Union has been clear on its stance that terrorist content is most harmful in the first hour it appears online. Yesterday, the European Parliament voted in favor of a new rule that could require internet companies to remove terrorist content within one hour after receiving an order from authorities. Companies that repeatedly fail to abide by the law could be fined up to four percent of their global revenue.

New York fails in its first attempt at face recognition for drivers

New York's bid to identify road-going terrorists with facial recognition isn't going very smoothly so far. The Wall Street Journal has obtained a Metropolitan Transportation Authority email showing that a 2018 technology test on New York City's Robert F. Kennedy Bridge not only failed, but failed spectacularly -- it couldn't detect a single face "within acceptable parameters." An MTA spokesperson said the pilot program would continue at RFK as well as other bridges and tunnels, but it's not an auspicious start.

Australian bill could imprison social network execs over violent content

Australia may take a stricter approach to violent online material than Europe in light of the mass shooting in Christchurch, New Zealand. The government is introducing legislation that would punish social networks that don't "expeditiously" remove "abhorrent" violent content produced by perpetrators of acts such as terrorism, kidnapping and rape. If found guilty, a company could not only face fines of up to 10 percent of its annual turnover, but see its executives imprisoned for up to three years. The country's Safety Commissioner would have the power to issue formal notices, giving companies a deadline to remove offending material.

House chair asks tech CEOs to speak about New Zealand shooting response (updated)

Internet companies say they've been scrambling to remove video of the mass shooting in Christchurch, New Zealand, but US politicians are concerned they haven't been doing enough. The Chairman of the House Committee on Homeland Security, Bennie Thompson, has sent letters to the CEOs of Facebook, Microsoft, Twitter and YouTube asking them to brief the committee on March 27th about their responses to the video. Thompson was concerned the footage was still "widely available" on the internet giants' platforms, and said that they "must do better."

A New Zealand shooting video hit YouTube every second this weekend

In the 24 hours after the mass shooting in New Zealand on Friday, YouTube raced to remove videos that were uploaded as fast as one per second, reports The Washington Post. While the company will not say how many videos it removed, it joined Facebook, Twitter and Reddit in a desperate attempt to remove graphic footage from the shooter's head-mounted camera.

Facebook pulled over 1.5 million videos of New Zealand shooting

Internet giants have been racing to pull copies of the New Zealand mass shooter's video from their sites, and Facebook is illustrating just how difficult that task has been. Facebook New Zealand's Mia Garlick has revealed that the social network removed 1.5 million attack videos worldwide in the first 24 hours, 1.2 million of which were stopped at the upload stage. This includes versions edited to remove the graphic footage of the shootings, Garlick said, as the company wants to respect both the people affected by the murders and the "concerns of local authorities."

Facebook and YouTube rush to remove New Zealand shooting footage

Facebook and YouTube are working to remove "violent footage" of the New Zealand mass shootings. The gunman -- who killed 49 people and injured 20 in shootings at two mosques in the city of Christchurch -- appeared to livestream his attack to Facebook using a head-mounted GoPro camera. New Zealand Police said they were moving to have the "extremely distressing footage" removed.

US bans cargo shipments of lithium-ion batteries on passenger planes

The US government just added a new wrinkle to receiving lithium-ion batteries. The Department of Transportation and the FAA have issued an interim rule banning the transport of lithium-ion batteries and cells as cargo aboard passenger flights. It also demands that batteries aboard cargo aircraft carry no more than a 30 percent charge. You can still carry devices (including spare batteries) on your trips in most cases, but companies can't just stuff a passel of battery packs into an airliner's hold.

Hackers seize dormant Twitter accounts to push terrorist propaganda

As much progress as Twitter has made kicking terrorists off its platform, it still has a long way to go. TechCrunch has learned that ISIS supporters are hijacking long-dormant Twitter accounts to promote their ideology. Security researcher WauchulaGhost found that the extremists were using a years-old trick to get in. Many of these idle accounts used email addresses that either expired or never existed, often with names identical to their Twitter handles -- the social site didn't confirm email addresses for roughly a decade, making it possible to use the service without a valid inbox. As Twitter only partly masks those addresses, it's easy to create those missing addresses and reset those passwords.

EU draft law would force sites to remove extremist content

The European Union is no longer convinced that self-policing is enough to purge online extremist content. The Financial Times has learned that the EU is drafting legislation to force Facebook, Twitter, YouTube and other internet companies to delete material when law enforcement flags it as terrorist-related. While EU security commissioner Julian King didn't provide details of how the measure would work, a source said it would "likely" mandate removing that content within an hour of receiving notice, turning the existing voluntary guidelines into an absolute requirement.

Facebook has already removed 583 million fake accounts this year

Last month, Facebook published its internal community enforcement guidelines for the first time and today, the company has provided some numbers to show what that enforcement really looks like. In a new report that will be published quarterly, Facebook breaks down its enforcement efforts across six main areas -- graphic violence, adult nudity and sexual activity, terrorist propaganda, hate speech, spam and fake accounts. The report details how much of that content was seen by Facebook users, how much of it was removed and how much of it was taken down before any Facebook users reported it.

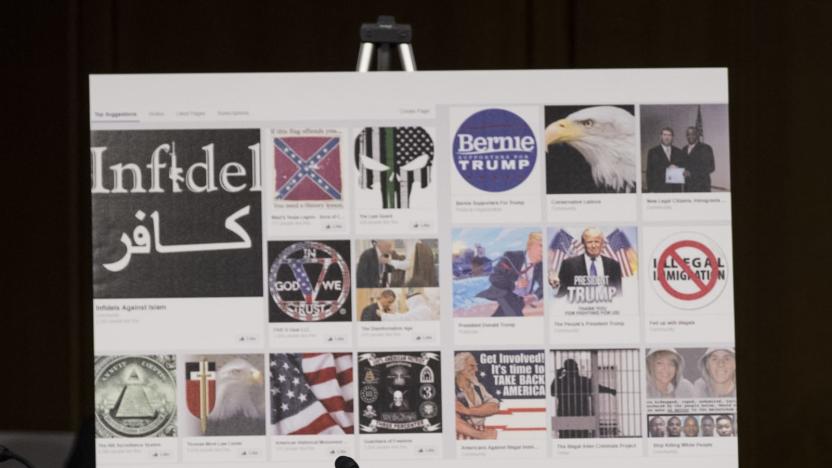

Facebook's friend suggestions helped connect extremists

When you think of internet giants fighting terrorism online, there's a good chance you think of them banning accounts and deleting posts. However, their friend suggestions may prove to be just as problematic. Researchers have shared a report to the Telegraph revealing that Facebook's "suggested friends" feature has been connecting Islamic extremists on a routine basis. While some instances are almost expected (contacting one extremist brings up connections to others), some of the suggestions surface purely by accident: reading an article about an extremist uprising in the Philippines led to recommendations for "dozens" of local extremists.

Facebook: AI will protect you

Artificial intelligence is a key part of everything Facebook does, from chatbots in Messenger to the personalized recommendations you get on apps like Instagram. But, as great as the technology is for creating new and deeper experiences for users, Facebook says the most important role of AI lies in keeping its community safe. Today at F8, the company's chief technology officer, Mike Schroepfer, highlighted how valuable the tech has become to combating abuse on its platform, including hate speech, bullying and terrorist content. Schroepfer pointed to stats Facebook revealed last month that showed that its AI tools removed almost two million pieces of terrorist propaganda, with 99 percent of those being spotted before a human even reported them.

Facebook details its fight to stop terrorist content

Last June, Facebook described how it uses AI to help find and take down terrorist content on its platform and in November, the company said that its AI tools had allowed it to remove nearly all ISIS- and Al Qaeda-related content before it was flagged by a user. Its efforts to remove terrorist content with artificial intelligence came up frequently during Mark Zuckerberg's Congressional hearings earlier this month and the company's lead policy manager of counterterrorism spoke about the work during SXSW in March. Today, Facebook gave an update of that work in an installment of its Hard Questions series.

Twitter has removed over 1.2 million accounts for promoting terrorism

Twitter released its biannual Transparency Report today and it shared stats on how it continues to handle terrorist content. Overall, since August of 2015, the company has removed over 1.2 million accounts that promoted terrorism. During the second half of last year, it permanently suspended 274,460 accounts for this reason, which is slightly fewer than it removed during the first half of 2017. Twitter notes that it has now seen a decline in these sorts of removals across three reporting periods and it attributes that pattern to "years of hard work making our site an undesirable place for those seeking to promote terrorism."

Facebook knows it must do more to fight bad actors

Not everything at SXSW 2018 was about films or gadgets. A few blocks away from the Austin Convention Center, where the event is being held, the Consumer Technology Association (CTA) hosted a number of panels for its Innovation Policy Day. In a session dubbed "Fighting Terror with Tech," Facebook's Lead Policy Manager of Counterterrorism, Brian Fishman, spoke at great length about what the company is doing to keep bad actors away from its platform. That doesn't only include terrorists who may be using the site to communicate, or to try to radicalize others, but also trolls, bots and the spreading of hate speech and fake news.