Google’s AI advances are equal parts worry and wonder

We cannot put off finding an answer to AI ethics any longer.

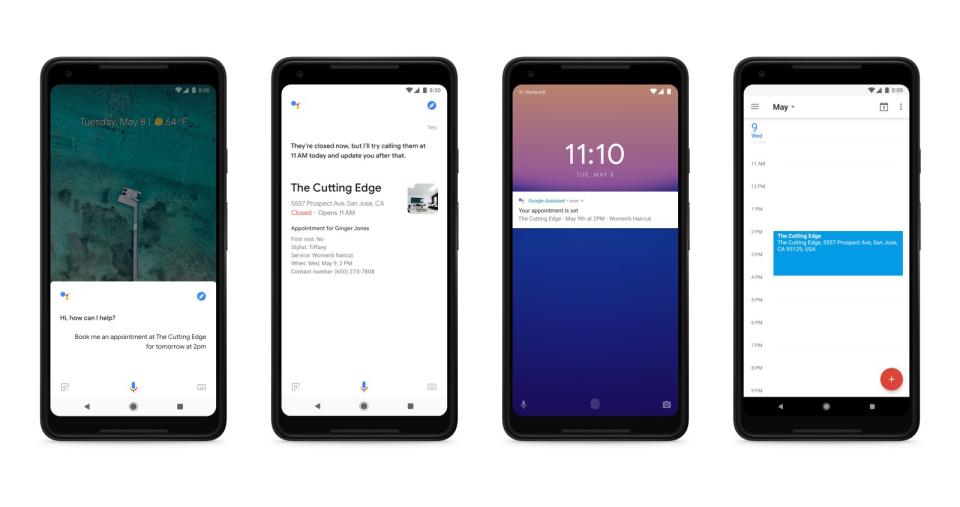

I laughed along with most of the audience at I/O 2018 when, in response to a restaurant rep asking it to hold on, Google Assistant said "Mmhmm". But beneath our mirth lay a sense of wonder. The demo of Google Duplex, "an AI system for accomplishing real-world tasks over the phone," was almost unbelievable. The artificially intelligent Assistant successfully made a reservation with a human being over the phone without the person realizing they were talking to a machine. It even used sounds like "umm" and "uhh," along with tonal inflections, to create a more convincing, realistic cadence. It was like a scene straight out of a science fiction movie or Black Mirror.

When I played that clip again on Google's blog post later, my wonder turned to unease. I dissected that conversation, analyzing all the ways that the AI mimicked human behavior. Its methods, I discovered, were incredibly sophisticated. It didn't just insert random pauses or nonverbal sounds, but did so in places that made sense. And even the way it said things like "Ohh, I gotcha" was so lifelike that it was laden with meaning and subtext. Assistant conveyed rich, high-context information in simple sentences, just as we humans do -- with sarcasm and ambiguity. How did it get so creepily real?

It's been a year since CEO Sundar Pichai declared the intention to shift focus from "mobile first" to "AI first." Since then, Google has invested heavily in machine learning research, developing frameworks to create more sophisticated applications that can basically think for themselves.

There's no denying Google's pivot to AI has brought some truly useful new features. Photos, for one, can identify the exact outline of a subject like your adorable toddler, and turn everything else in the picture grayscale to make a stylized picture. Or it can take an old black-and-white photo, identify the trees or the grass and colorize them appropriately. Meanwhile, the revamped News app will use AI to pick a variety of sources to deliver full, rounded perspectives on news stories. Smart Replies, which debuted in Inbox as early as 2015, and its logical extension Smart Compose, can save you the trouble of coming up with answers to your friends' inane emails. In every one of Google's vast array of products, AI has been inserted to improve performance and utility. They're getting smarter and faster at understanding not just context but also our preferences and behaviors, meaning we can think less, and let the computers do that for us.

With Duplex, Assistant will be able to book restaurants and services for you via a phone call. Simply ask it to make you a haircut appointment on Tuesday between 10am and noon, for example, and Assistant will call your designated salon and sort out your reservation. It's like having a real-life personal assistant, and if Google pulls this off, the convenience it offers would be immense.

But Duplex doesn't simply think for us; it emotes for us as well. In an effort to prevent the person on the other end from catching on or feeling uncomfortable, the system injects human imperfections into its speech to reproduce natural conversations.

How far are we (and Google and its peers) going to let AI go? How many of our tasks are we going to relegate to a disembodied voice? These aren't new questions -- the industry has been debating such ethical and philosophical issues for years. But before the Duplex demo, the idea of AI that can trick you into thinking it's an actual human seemed unrealistic and far away. Suddenly, though, answering those questions seems quite urgent.

The AI revolution had seemingly harmless beginnings. Neural networks applied to text recognition and translation brought great results, offering more accurate interpretations of languages as complex as Mandarin. Then they learned to identify faces the way we humans do, even from just a profile, beat us at complex games, and began to surpass real doctors in accurately predicting heart attacks. There seems to be no limit to what AI can do with enough training and models.

It's hard to imagine the goal isn't to remove as much thought and effort on the user's part as possible, even if the trade-off for all that convenience is blurring the line between human and robot. AI's already taken over as photographer in Google's smart camera Clips, and to a lesser extent in phones like the Huawei P20 Pro and LG G7 ThinQ. The extension of that to the rest of our lives seems nigh.

With Duplex, Google currently leads its peers in the race to develop natural-sounding AI, and may even be close to creating a robot that can pass the Turing test. Other companies like Apple, Amazon and Facebook will surely redouble their own AI efforts, collectively pushing the limits of what machines can achieve.

I/O 2018 shows we've made significant progress in realizing something that has long existed mostly as a science fiction concept, but we've still barely scratched the surface of what's possible. Maybe, though, it's time that we pump the brakes, if ever so slightly. We're not past the point of no return yet, and we should figure out, as a society, what our endgame is with AI. Do we want it to be a soulless helper? Or are we willing to wrestle with computers that behave in ways that are increasingly indistinguishable from a person?
