ethics

Facebook backs an independent AI ethics research center

Facebook is just as interested as its peers in fostering ethical AI. The social network has teamed up with the Technical University of Munich to back the formation of an independent AI ethics research center. The plainly titled Institute for Ethics in Artificial Intelligence will wield the university's academic resources to explore issues that "industry alone cannot answer," including those in areas like fairness, privacy, safety and transparency.

China forms video game ethics committee as part of crackdown

China's freeze on game approvals is winding down, although gamers and developers might not like what the thaw entails. The country has revealed the existence of an Online Game Ethics Committee that will screen games to ensure they're "healthy and beneficial" and address "social concerns," among other issues. To put it another way, the panel will clamp down on game addiction, sex, violence and short-sightedness. That's on top of the country's crackdowns on political dissent, of course.

Canada and France will explore AI ethics with an international panel

The AI revolution is coming, and both Canada and France want to make sure we're approaching it responsibly. Today, the countries announced plans for the International Panel on Artificial Intelligence (IPAI), a platform to discuss "responsible adoption of AI that is human-centric and grounded in human rights, inclusion, diversity, innovation and economic growth," according to a mandate from the office of Canadian Prime Minister Justin Trudeau. It's still unclear which other countries will be participating, but Mounir Mahjoubi, France's secretary of state for digital affairs, says it'll include both G7 and EU countries, Technology Review reports. It won't just be politicians joining the conversation. France and Canada plan to get the scientific community involved, as well as industry and civil society experts. While it's easy to jump to doomsday scenarios when talking about AI, that loses sight of the other ways the technology will eventually impact humanity. How do we build AI that takes human rights and the public good into account? What does the rise of AI and automation mean for human workers? And how do we develop AI we can actually trust? Those are some topics the panel could end up considering, according to the Canadian government, but they're also questions for every country on Earth to ask as we barrel towards true AI. If anything, the panel could help to normalize discussions around artificial intelligence. While luminaries like Elon Musk and Stephen Hawking haven't been shy about discussing the dangers of the technology and the dramatic impact it could have on humanity, their warnings have leaned towards the extreme. By having more countries thinking hard about the ethical considerations of AI, there's a better chance we'll actually be able to preempt potential issues.

China halts scientist's gene-edited baby research

The scientific community and the world at large were rocked this week when researcher He Jiankui claimed he had created the world's first genetically edited babies. Using CRISPR/Cas9, He says he edited the genes of twin girls, Lulu and Nana, in order to make them more resistant to HIV. But He's work has been met with severe backlash as scientists around the world have called it irresponsible and unethical while emphasizing that CRISPR technology is not yet ready for human embryos since associated risks are not fully understood. Now, the Associated Press reports that China's government has put a hold on He's work.

Google employees push back on censored China search engine (update)

Employees at Google are protesting the company's work on a censored search engine for China, the New York Times reports, signing a letter that calls for more transparency and questions the move's ethics. Reports of the search engine surfaced earlier this month, leaving many to wonder how the company could justify it after publicly pulling its Chinese search engine in 2010 due to the country's censorship practices. The letter, which is circulating on Google's internal communications system, has been signed by approximately 1,000 employees, according to the New York Times' sources.

DeepMind, Elon Musk and others pledge not to make autonomous AI weapons

Today during the International Joint Conference on Artificial Intelligence, the Future of Life Institute announced that more than 2,400 individuals and 160 companies and organizations have signed a pledge, declaring that they will "neither participate in nor support the development, manufacture, trade or use of lethal autonomous weapons." The signatories, representing 90 countries, also call on governments to pass laws against such weapons. Google DeepMind and the Xprize Foundation are among the groups that have signed on, while Elon Musk and DeepMind co-founders Demis Hassabis, Shane Legg and Mustafa Suleyman have made the pledge as well.

Microsoft employees criticize ICE contract amid recent reports

Add Microsoft to the list of companies whose government deals are provoking outrage both inside and outside their offices. The Redmond firm sparked a wave of criticism on social networks after users discovered a January blog post noting that the company's Azure cloud service team was "proud to support" US Immigration and Customs Enforcement, which has come under fire for policies that include separating children from parents. Microsoft briefly took down the post on June 18th in response, but the company has since described it as "a mistake" and replaced the content.

Google Assistant fired a gun: We need to talk

For better or worse, Google Assistant can do it all. From mundane tasks like turning on your lights and setting reminders to convincingly mimicking human speech patterns, the AI helper is so capable it's scary. Its latest (unofficial) ability, though, is a bit more sinister. Artist Alexander Reben recently taught Assistant to fire a gun. Fortunately, the victim was an apple, not a living being. The 30-second video, simply titled "Google Shoots," shows Reben saying, "OK Google, activate gun." Barely a second later, a buzzer goes off, the gun fires and Assistant responds, "Sure, turning on the gun." On the surface, the footage is underwhelming -- nothing visually arresting is happening. But peel back the layers even a little and it's obvious that this project is meant to provoke a conversation on the boundaries of what AI should be allowed to do.

Google says its military AI work will be guided by ethical principles

Google's Pentagon contract and its involvement with the military's Project Maven have stirred controversy both outside of and within the company. Its plan to provide AI technology that can help flag drone images for human review has led to an internal petition signed by thousands of employees who oppose the decision, as well as a number of resignations. Now, the New York Times reports that Google is working on a set of guidelines aimed at steering the company's decisions regarding defense and intelligence contracts.

Google will always do evil

One day in late April or early May, Google removed the phrase "don't be evil" from its code of conduct. After 18 years as the company's motto, those three words and chunks of their accompanying corporate clauses were unceremoniously deleted from the record, save for a solitary, uncontextualized mention in the document's final sentence. Google didn't advertise this change. In fact, the code of conduct states it was last updated April 5th. The "don't be evil" exorcism clearly took place well after that date. Google has chosen to actively distance itself from the uncontroversial, totally accepted tenet of not being evil, and it's doing so in a shady (and therefore completely fitting) way. After nearly two decades of trying to live up to its motto, it looks like Google is ready to face reality. In order for Google to be Google, it has to do evil.

Google’s AI advances are equal parts worry and wonder

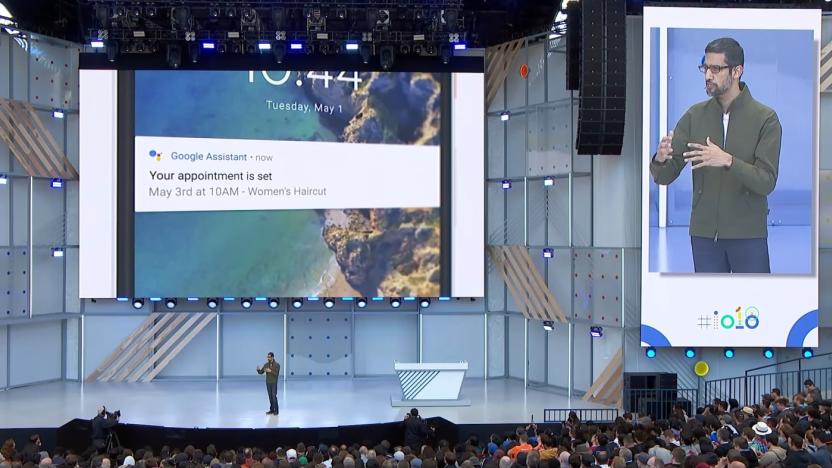

I laughed along with most of the audience at I/O 2018 when, in response to a restaurant rep asking it to hold on, Google Assistant said "Mmhmm". But beneath our mirth lay a sense of wonder. The demo of Google Duplex, "an AI system for accomplishing real-world tasks over the phone," was almost unbelievable. The artificially intelligent Assistant successfully made a reservation with a human being over the phone without the person knowing it wasn't real. It even used sounds like "umm," "uhh" and tonal inflections to create a more convincing, realistic cadence. It was like a scene straight out of a science fiction movie or Black Mirror.

Google: Duplex phone calling AI will identify itself

After an impressive Google I/O demo where its Duplex AI system called a restaurant and salon to set up appointments, one of the big questions concerned the ethics of the technology. The Google Assistant voice took steps to pretend to be human, inserting "umms" and "ahs" into the conversation while a person on the other end appeared to be unaware that they were talking to a program. As a Turing Test candidate, it was impressive, but we'd like to know if the "person" who just called us has a heartbeat and a favorite One Direction member.

Axon opens ethics board to guide its use of AI in body cameras

Axon (formerly Taser) is keenly aware of the potential for Orwellian abuses of facial recognition, and it's taking an unusual step to avoid creating that drama with its body cameras and other image recognition systems. The police- and military-focused company has created an AI ethics board that will convene twice per year (on top of regular interactions) to discuss the ramifications of upcoming products. As spokesperson Steve Tuttle explained to The Verge, this will ideally establish a set of "AI ethics principles" within police work where certain uses are off-limits.

Apple may secure its own battery materials to avoid shortages

Cobalt is an essential component in lithium ion batteries, making it a crucial material in the production of smart devices and electric vehicles. But, as battery-hungry cars go mainstream, there's a risk that the world's supply will be eaten up by cars, which poses a problem for all of the other things we use. It's why Apple has reportedly entered into direct talks with cobalt miners in the hope of securing a supply of the material itself. Bloomberg reports that the company, which has previously left the effort to its battery manufacturers, has now taken a more active role.

'The Red Strings Club' explores the morality of transhumanism

If you had the ability to turn off all the negative emotions in your mind -- depression, anxiety, rage -- would you do it? Would you eagerly implant a device in your body that eliminates those feelings, or would you pause and consider the consequences? Without anxiety, would your drive to succeed stagnate? Without rage, would your body be primed to fight or flee in a sticky situation? Without depression, would you appreciate joy? Think about it for a moment. We'll wait.

You can’t buy an ethical smartphone today

Any ethical, non-🍏 📱 recommendations? It all started with a WhatsApp message from my friend, an environmental campaigner who runs a large government sustainability project. She's the most ethical person I know and has always worked hard to push me, and others, into making a more positive impact on the world. Always ahead of the curve, she steered me clear of products containing palm oil, as well as carbon-intensive manufacturing and sweatshop labor. That day, she wanted my opinion on what smartphone she should buy, but this time requested an ethical device. Until now, she's been an HTC loyalist, but wanted to explore the options for something better and more respectable. My default response was the Fairphone 2, which is produced in small quantities by a Dutch startup, but I began to wonder -- that can't be the only phone you can buy with a clear conscience, can it?

You don’t need a PhD to grasp the anxieties around sex robots

NSFW Warning: This story may contain links to and descriptions or images of explicit sexual acts. If you want to understand the myriad issues concerning sex robots that humanity needs to grapple with, you have two options. You can either spend several years studying for a PhD in either of those fields, or you can sit down in front of your TV.

This year we took small, important steps toward the Singularity

We won't have to wait until 2019 for our Blade Runner future, mostly because artificially intelligent robots already walk, roll and occasionally backflip among us. They're on our streets and in our stores. Some have wagged their way into our hearts while others have taken a more literal route. Both in civilian life and the military battlespace, AI is adopting physical form to multiply the capabilities of the humans it serves. As robots gain ubiquity, friction between these bolt buckets and us meat sacks is sure to cause issues. So how do we ensure that the increasingly intelligent machines we design share our ethical values while minimizing human-robot conflict? Sit down, Mr. Asimov.

Navajo Nation may undo genetic research ban in hopes of better care

The Navajo Nation banned genetic studies in 2002 due to concerns over how its members' genetic material would be used, but, as Nature News reports, the Navajo are considering a reversal of that policy. An oncology center is set to open next year on Navajo lands and the tribe's research-ethics board is looking into allowing some genetic research to take place at the facility.

DeepMind forms an ethics group to explore the impact of AI

Google's AI-research arm DeepMind has announced the creation of DeepMind Ethics & Society (DMES), a new unit dedicated to exploring the impact and morality of the way AI shapes the world around us. Along with external advisors from academia and the charitable sector, the team aims to "help technologists put ethics into practice, and to help society anticipate and direct the impact of AI so that it works for the benefit of all".