CMU

Latest

Carnegie Mellon’s latest snakebot can swim underwater

You can now add swimming to the list of things Carnegie Mellon's snake robot can do.

A mind-controlled robot arm doesn’t have to mean brain implants

A robotic arm smoothly traces the movements of a cursor on a computer screen, controlled by the brain activity of a person sitting close by who stares straight ahead. The person wears a cap covered in electrodes. This "mind-controlled" robot limb is being manipulated by a brain-computer interface (BCI), which provides a direct link between the neural information of a brain that's wired to an electroencephalography (EEG) device and an external object.

Tomorrow's ‘general’ AI revolution will grow from today's technology

During his closing remarks at the I/O 2019 keynote last week, Jeff Dean, Google AI's lead, noted that the company is looking at "AI that can work across disciplines," suggesting the Silicon Valley giant may soon pursue artificial general intelligence, a technology that eventually could match or exceed human intellect.

How we can all cash in on the benefits of workplace automation

Artificial intelligence is no different than the cotton gin, telecommunication satellites or nuclear power plants. It's a technology, one with the potential to vastly improve the lives of every human on Earth, transforming the way that we work, learn and interact with the world around us. But like nuclear science, AI technology also carries the threat of being weaponized -- a digital cudgel with which to beat down the working class and enshrine the current capitalist status quo.

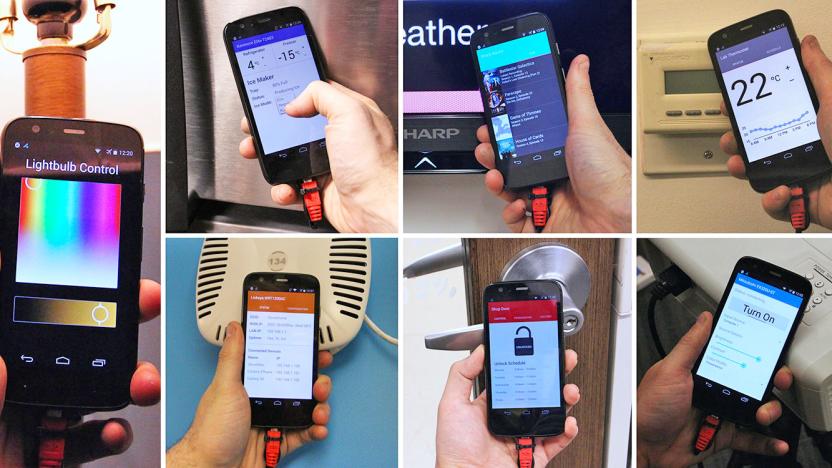

Future phones will ID devices by their electromagnetic fields

While NFC has become a standard feature on Android phones these days, it is only convenient when the device on the other end supports it too, not to mention the awkwardness of aligning the antennas. As such, Carnegie Mellon University's Future Interfaces Group is proposing a working concept that's practically the next evolution of NFC: electromagnetic emissions sensing. You see, as Disney Research already pointed out last year, every electrical device has its own unique electromagnetic field, so this characteristic alone can be used as an ID so long as the device isn't truly powered off. With a little hardware and software magic, the team has come up with a prototype smartphone -- a modified Moto G from 2013 -- fitted with electromagnetic-sensing capability, so that it can recognize any electronic device by simply tapping on one.

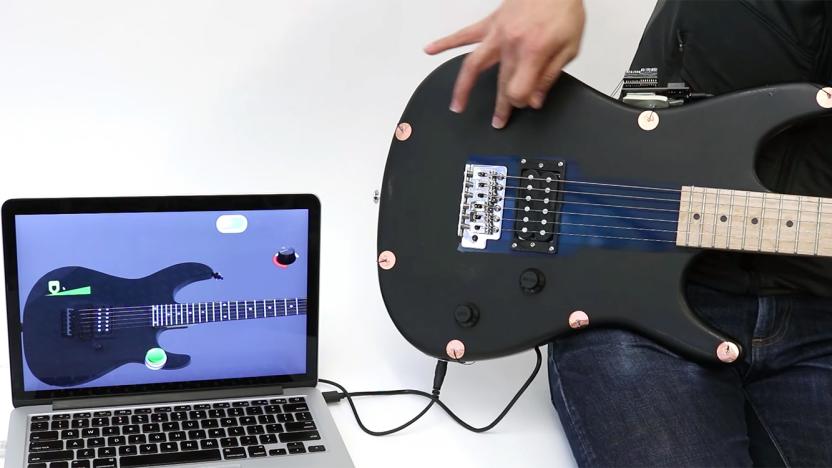

Get ready to 'spray' touch controls onto any surface

Nowadays we're accustomed to the slick glass touchscreens on our phones and tablets, but what if we could extend such luxury to other parts of our devices -- or to any surface, for that matter -- in a cheap and cheerful way? Well, apparently there's a solution on the way. At the ACM CHI conference this week, Carnegie Mellon University's Future Interfaces Group showed off its latest research project, dubbed Electrick, which enables low-cost touch sensing on pretty much any object with a conductive surface -- whether it's made out of a conductive material (including plastics mixed with conductive particles) or has a conductive coating (such as a carbon conductive spray paint) applied over it. Better yet, this technique works on irregular surfaces as well.

How an AI took down four world-class poker pros

"That was anticlimactic," Jason Les said with a smirk, getting up from his seat. Unlike nearly everyone else in Pittsburgh's Rivers Casino, Les had just played his last few hands against an artificially intelligent opponent on a computer screen. After his fellow players -- Daniel McAulay next to him and Jimmy Chou and Dong Kim in an office upstairs -- eventually did the same, they started to commiserate. The consensus: That AI was one hell of a player.

Libratus, the poker-playing AI, destroyed its four human rivals

The Kenny Rogers classic profoundly states that "you've got to know when to hold 'em, know when to fold 'em," and for the first time, an AI has out-gambled world-class players at heads-up, no-limit Texas Hold'em. Our representatives of humanity -- Jason Les, Dong Kyu Kim, Daniel McAulay and Jimmy Chou -- kept things relatively tight at the outset, but an ill-fated shift in strategy wiped out their gains and forced them to chase the AI for the remaining weeks. At the end of day 20 and after 120,000 hands, Libratus claimed victory with a daily total of $206,061 in theoretical chips and an overall pile of $1,766,250.

Two research teams taught their AIs to beat pros at poker

Poker-playing bots aren't exactly new -- just ask anyone who's tried to win a little cash on PokerStars -- but two different groups of researchers are setting their sights a little higher. To no one's surprise, those AI buffs are trying to teach their algorithms how to beat world-class Texas Hold'em players, and they're juuuust about there.

New AI 'Gabriel' wants to whisper instructions in your ear

Researchers at Carnegie Mellon University are building an AI platform that will "whisper" instructions in your ear to provide cognitive assistance. Named after Gabriel, the biblical messenger of God, the whispering robo-assistant can already guide you through the process of building a basic Lego object. But, the ultimate goal is to provide wearable cognitive assistance to millions of people who live with Alzheimer's, brain injuries or other neurodegenerative conditions. For instance, if a patient forgets the name of a relative, Gabriel could whisper the name in their ear. It could also be programmed to help patients through everyday tasks that will decrease their dependence on caregivers.

Experimental UI equips you with a virtual tape measure and other skeuomorphs

While companies like Apple are moving away wholesale from faux real-world objects, one designer wants to take the concept to its extreme. Chris Harrison from CMU's Future Interfaces Group thinks modern, "flat" software doesn't profit from our dexterity with real-world tools like cameras, markers or erasers. To prove it, he created TouchTools, which lets you manipulate tools on the screen just as you would in real life. By touching the display with a grabbing motion, for example, a realistic-looking tape measure appears, and if you grab the "tape," you can unsheathe it like the real McCoy. He claims that provides "fast and fluid mode switching" and doesn't force designers to shoehorn awkward toolbars. So far, it's only experimental, but the idea is to eventually make software more natural to use -- 2D interfaces be damned.

Carnegie Mellon researchers develop robot that takes inventory, helps you find aisle four

Fed up with wandering through supermarket aisles in an effort to cross that last item off your shopping list? Researchers at Carnegie Mellon University's Intel Science and Technology Center in Embedded Computing have developed a robot that could ease your pain and help store owners keep items in stock. Dubbed AndyVision, the bot is equipped with a Kinect sensor, image processing and machine learning algorithms, 2D and 3D images of products and a floor plan of the shop in question. As the mechanized worker roams around, it determines if items are low or out of stock and if they've been incorrectly shelved. Employees then receive the data on iPads and a public display updates an interactive map with product information for shoppers to peruse. The automaton is currently meandering through CMU's campus store, but it's expected to wheel out to a few local retailers for testing sometime next year. Head past the break to catch a video of the automated inventory clerk at work.

Google Sky Map boldly explores open source galaxy

Via its Research Blog, Google has announced the donation of the Sky Map project to the open source community. Originally developed by Googlers during their "20% time," the stellar application was launched in 2009 to showcase the sensors in first generation Android handsets. Four years and over 20 million downloads later, Sky Map's code will be donated to the people -- with Carnegie Mellon University taking the reins on further development through "a series of student projects." Hit the source link for the official announcement and a bit of nostalgia from Google.

Researchers demo 3D face scanning breakthroughs at SIGGRAPH, Kinect crowd squarely targeted

Lookin' to get your Grown Nerd on? Look no further. We just sat through 1.5 hours of high-brow technobabble here at SIGGRAPH 2011, where a gaggle of gurus with IQs far, far higher than ours explained in detail what the future of 3D face scanning would hold. Scientists from ETH Zürich, Texas A&M, Technion-Israel Institute of Technology, Carnegie Mellon University as well as a variety of folks from Microsoft Research and Disney Research labs were on hand, with each subset revealing a slightly different technique for solving an all-too-similar problem: painfully accurate 3D face tracking. Haoda Huang et al. revealed a highly technical new method that involved the combination of marker-based motion capture with 3D scanning in an effort to overcome drift, while Thabo Beeler et al. took a drastically different approach. Those folks relied on a markerless system that used a well-lit, multi-camera system to overcome occlusion, with anchor frames acting as staples in the success of its capture abilities. J. Rafael Tena et al. developed "a method that not only translates the motions of actors into a three-dimensional face model, but also subdivides it into facial regions that enable animators to intuitively create the poses they need." Naturally, this one's most useful for animators and designers, but the first system detailed is obviously gunning to work on lower-cost devices -- Microsoft's Kinect was specifically mentioned, and it doesn't take a seasoned imagination to see how in-home facial scanning could lead to far more interactive games and augmented reality sessions. The full shebang can be grokked by diving into the links below, but we'd advise you to set aside a few hours (and rest up beforehand).

Carnegie Mellon researchers develop world's smallest biological fuel cell

Cars and other vehicles may be the first thing that springs to mind at the mention of fuel cells, but the technology can of course also be used for plenty of other devices big and small, and a team of researchers at Carnegie Mellon University are now looking to take them to a few new places that haven't been possible so far. To that end, they've developed what they claim is the world's smallest biological fuel cell, which is the size of a single human hair and "generates energy from the metabolism of bacteria on thin gold plates in micro-manufactured channels." That, they say, could make it ideal for use in places like deep ocean environments where batteries are impractical -- or possibly in electronic devices with some further refinements, where they could potentially store more energy than traditional batteries in the same space. The university's full press release is after the break.

Vibratron plays impossible music with ball bearings, is your new master (video)

First they came for Jeopardy!, then they came for our vibraphones. We still own baseball, but the "humans only" list has grown one shorter now that the Carnegie Mellon Robotics Club has birthed Vibratron, a robotic vibraphone. Vibratron's Arduino Mega controls 30 solenoid gates that drop steel balls onto the vibration keys, producing a note; an Archimedes screw recycles the bearings, turning them once more into sweet, sweet music. We should also note that Vibratron doesn't put decent, salt-of-the-earth vibraphonists out of work. That cacophony in the video is "Circus Galop," written for two player pianos and impossible for humans to perform -- and still pretty hard for humans to listen to. See, Vibratron is here to help you, fellow humans. At least for now. Click the video above to get acquainted.

Carnegie Mellon's GigaPan Time Machine brings time-lapse to panoramas

We've already seen GigaPan technology used for plenty of impressive panoramas, but some researchers from Carnegie Mellon University have now gone one step further with their so-called "GigaPan Time Machine" project. Thanks to the magic of HTML5 and some time-consuming (but automated) photography, you can now "simultaneously explore space and time" right in your web browser -- that is, zoom in and around a large-format panorama that also happens to be a time-lapse video. If you don't feel like exploring yourself, you can also jump straight to some highlights -- like the construction of the Hulk statue at the CMU Carnival pictured above. Hit up the source link below to try it out -- just make sure you're in either Chrome or Safari, as they're the only compatible browsers at this time.

NC State and CMU develop velocity-sensing shoe radar, aim to improve indoor GPS routing

The world at large owes a good bit to Maxwell Smart, you know. Granted, it's hard to directly link the faux shoe phone to the GPS-equipped kicks that are around today, but the lineage is certainly apparent. The only issue with GPS in your feet is how they react when you waltz indoors, which is to say, not at all. In the past, most routing apparatuses have used inertial measurement units (IMUs) to track motion, movement and distance once GPS reception is lost indoors, but those have proven poor at spotting the difference between a slow gait and an outright halt. Enter NC State and Carnegie Mellon University, who have worked in tandem in order to develop a prototype shoe radar that's specifically designed to sense velocity. Within the shoe, a radar is attached to a diminutive navigational computer that "tracks the distance between your heel and the ground; if that distance doesn't change within a given period of time, the navigation computer knows that your foot is stationary." Hard to say when Nike will start testing these out in the cleats worn by football players, but after last week's abomination of a spot (and subsequent botching of a review by one Ron Cherry) during the NC State - Maryland matchup, we're hoping it's sooner rather than later.
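For the curious, the stationary-foot rule quoted above can be sketched in a few lines of Python. This is only an illustration of the idea as described, not the researchers' implementation: the function name `stationary_flags`, the sample window and the tolerance are all assumptions made up for the example.

```python
from typing import List, Sequence


def stationary_flags(
    heel_heights_cm: Sequence[float],
    window: int = 5,            # assumed "given period of time", in samples
    tolerance_cm: float = 0.2,  # assumed noise margin for "doesn't change"
) -> List[bool]:
    """Flag a sample as stationary when the heel-to-ground distance
    stayed within tolerance_cm over the last `window` samples."""
    flags = []
    for i in range(len(heel_heights_cm)):
        if i + 1 < window:
            flags.append(False)  # not enough history to decide yet
            continue
        recent = heel_heights_cm[i + 1 - window : i + 1]
        flags.append(max(recent) - min(recent) <= tolerance_cm)
    return flags


# Hypothetical radar readings: the foot comes down, rests, then lifts again.
readings = [5.0, 3.0, 1.0, 0.1, 0.1, 0.1, 0.1, 0.1, 2.0]
flags = stationary_flags(readings)
```

In this made-up trace, only the sample that ends a full window of unchanged readings is flagged, which is the cue a navigation computer could use to treat the foot's velocity as zero.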

Google and TU Braunschweig independently develop self-driving cars (video)

There's a Toyota Prius in California, and a VW Passat halfway around the globe -- each equipped with bucket-shaped contraptions that let the cars drive themselves. Following their research on autonomous autos in the DARPA Urban Challenge, a team at Germany's TU Braunschweig let the above GPS, laser and sensor-guided Volkswagen wander down the streets of Brunswick unassisted late last week, and today Google revealed that it's secretly tested seven similar vehicles designed by the folks who won that same competition. CMU and Stanford engineers have designed a programmable package that can drive at the speed limit on regular streets and merge into highway traffic, stop at red lights and stop signs and automatically react to hazards -- much like the German vehicle -- except Google says its seven autos have already gone 1,000 unassisted miles. That's still a drop in the bucket, of course, compared to the efforts it will take to bring the technology home -- Google estimates self-driving vehicles are at least eight years down the road. Watch the TU Braunschweig vehicle in action after the break. Update: Though Google's cars have driven 1,000 miles fully autonomously, that's a small fraction of the time they've spent steering for themselves. We've learned the vehicles have gone 140,000 miles with occasional human interventions, which were often a matter of procedure rather than a compelling need for their human drivers to take control. [Thanks, el3ktro]

Carnegie Mellon's robotic snake stars in a glamour video

We've been pretty into Carnegie Mellon's modular snake robots for a while now, and seeing as it's a relatively sleepy Sunday we thought we'd share this latest video of snakebots just basically crawling all over the place and getting crazy. Bots like these have been getting some serious military attention lately, so watching these guys wriggle into any damn spot they please is at once awesome and terrifying. Or maybe it's just the music. Video after the break. [Thanks, Curtis]