DeepLearning

Latest

Microsoft grounds its AI chat bot after it learns racism

Microsoft's Tay AI is youthful beyond just its vaguely hip-sounding dialogue -- it's overly impressionable, too. The company has grounded its Twitter chat bot (that is, temporarily shut it down) after people taught it to repeat conspiracy theories, racist views and sexist remarks. We won't echo them here, but they involved 9/11, GamerGate, Hitler, Jews, Trump and less-than-respectful portrayals of President Obama. Yeah, it was that bad. The account is visible as we write this, but the offending tweets are gone; Tay has gone to "sleep" for now.

Google AI finally loses to Go world champion

At last, humanity is on the scoreboard. After three consecutive losses, Go world champion Lee Sedol has beaten Google's DeepMind artificial intelligence program, AlphaGo, in the fourth game of their five-game series. DeepMind founder Demis Hassabis notes that the AI lost because of its delayed reaction to a slip-up: it made a mistake on move 79, but didn't realize the extent of that mistake (and thus adapt its playing style) until move 87. The human win won't change the results of the challenge -- Google is donating the $1 million prize to charity rather than handing it to Lee. Still, it's a symbolic victory in a competition that some had expected AlphaGo to completely dominate.

Google is using neural networks to improve Translate

Google has a pretty good handle on AI, judging by its shocking back-to-back wins against 9-dan champion Lee Sedol in the intricate strategy game Go. The company already uses deep learning in Google Photos, Gmail and other apps, and it may soon bring the technology to one that really needs it: Google Translate. Anybody who uses that app regularly knows that its translations are flaky at best, especially for languages that are vastly different from English, like Lee's native Korean.

Google neural network tells you where photos were taken

It's easy to identify where a photo was taken if there's an obvious landmark, but what about landscapes and street scenes where there are no dead giveaways? Google believes artificial intelligence could help. It just took the wraps off PlaNet, a neural network that relies on image recognition technology to locate photos. The code looks for telltale visual cues such as building styles, languages and plant life, and matches those against a database of 126 million geotagged photos organized into 26,000 geographic grid cells. It could tell that you took a photo in Brazil based on the lush vegetation and Portuguese signs, for instance. It can even guess the locations of indoor photos by using other, more recognizable images from the same album as a starting point.
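Splitting the world into grid cells turns "where was this taken?" into a classification problem: each training photo is labelled with the cell its geotag falls in, and the network outputs a probability for every cell. The sketch below shows only that bookkeeping, not the neural network. It assumes a uniform 10-degree latitude/longitude grid, a hypothetical simplification (PlaNet's own cells are not a uniform grid), and takes the classifier's output as a plain dict of cell probabilities.

```python
import math

# Assumption: a uniform 10-degree grid, purely for illustration.
# PlaNet's real ~26,000 cells are not laid out this way.
CELL_DEG = 10.0
ROWS = int(180 / CELL_DEG)
COLS = int(360 / CELL_DEG)

def cell_id(lat, lon):
    """Map a latitude/longitude pair to the (row, col) of the grid cell containing it."""
    row = min(int(math.floor((lat + 90.0) / CELL_DEG)), ROWS - 1)   # clamp lat = 90
    col = min(int(math.floor((lon + 180.0) / CELL_DEG)), COLS - 1)  # clamp lon = 180
    return row, col

def cell_center(cell):
    """Latitude/longitude of a cell's centre, used as the predicted location."""
    row, col = cell
    return (row + 0.5) * CELL_DEG - 90.0, (col + 0.5) * CELL_DEG - 180.0

def predict_location(cell_probs):
    """Pick the most probable cell from a classifier's output and return its centre."""
    best = max(cell_probs, key=cell_probs.get)
    return cell_center(best)

# Training side: each geotagged photo becomes a (photo, cell) classification example.
training = [("photo_a.jpg", -15.8, -47.9), ("photo_b.jpg", 48.9, 2.35)]
labels = [(name, cell_id(lat, lon)) for name, lat, lon in training]
print(labels)
```

Coarser cells make each class easier to learn but less precise; finer cells do the opposite, which is one reason a real system would size cells by how many photos fall in them.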

AI learns to predict human reactions by reading our fiction

A team of Stanford researchers has developed a novel way of teaching artificial intelligence systems to predict how a human will respond to their actions. They've given their knowledge base, dubbed Augur, access to the online writing community Wattpad and its archive of more than 600,000 stories. This information will enable support vector machines (basically, learning algorithms) to better predict what people do in the face of various stimuli.
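The article doesn't describe Augur's features or training setup, so as a generic illustration of what a support vector machine does, here is a minimal linear SVM trained with Pegasos-style stochastic sub-gradient descent on the hinge loss. The features and data are hypothetical: each example is `[bias, "a character is greeted", "a character is insulted"]`, labelled +1 if the character reacts by smiling and -1 otherwise.

```python
import random

def train_linear_svm(samples, labels, lam=0.01, epochs=200, seed=0):
    """Linear SVM via Pegasos-style sub-gradient descent.
    samples: lists of features (include a constant 1.0 as a bias feature)
    labels:  +1 or -1 for each sample."""
    rng = random.Random(seed)
    w = [0.0] * len(samples[0])
    t = 0
    for _ in range(epochs):
        for i in rng.sample(range(len(samples)), len(samples)):  # shuffled pass
            t += 1
            eta = 1.0 / (lam * t)                        # decreasing step size
            x, y = samples[i], labels[i]
            margin = y * sum(wj * xj for wj, xj in zip(w, x))
            w = [(1.0 - eta * lam) * wj for wj in w]     # shrink: L2 regularisation
            if margin < 1.0:                             # hinge loss is active
                w = [wj + eta * y * xj for wj, xj in zip(w, x)]
    return w

def predict(w, x):
    """Which side of the learned hyperplane x falls on."""
    return 1 if sum(wj * xj for wj, xj in zip(w, x)) >= 0 else -1

# Hypothetical story snippets: [bias, greeted, insulted] -> smiles (+1) or not (-1)
samples = [[1.0, 1.0, 0.0], [1.0, 0.9, 0.1], [1.0, 0.0, 1.0], [1.0, 0.1, 0.9]]
labels = [1, 1, -1, -1]
w = train_linear_svm(samples, labels)
print(predict(w, [1.0, 1.0, 0.0]), predict(w, [1.0, 0.0, 1.0]))
```

The hinge loss only penalizes examples that sit on the wrong side of the boundary or too close to it, which is what pushes an SVM toward the widest possible margin between the two kinds of reaction.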

ICYMI: Ripples in Spacetime, brain jolts to learn and more

Today on In Case You Missed It: Researchers at the Laser Interferometer Gravitational-Wave Observatory confirmed Einstein's theory that ripples in the fabric of spacetime do, in fact, exist. They spotted the gravitational waves made when two black holes collided.

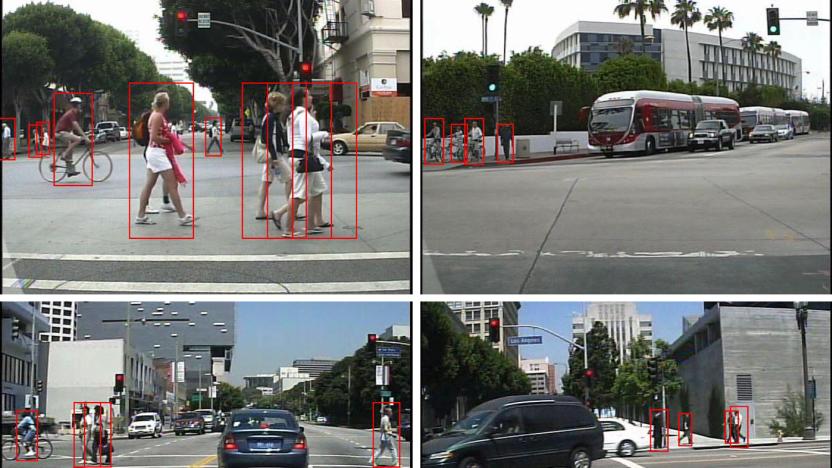

Smart car algorithm sees pedestrians as well as you can

It's one thing for computers to spot people in relatively tame academic situations, but it's another when they're on the road -- you need your car to spot that jaywalker in time to avoid a collision. Thankfully, UC San Diego researchers have brought that closer to reality. They've crafted a pedestrian detection algorithm that's much quicker and more accurate than existing systems. It processes 2-4 frames per second, close to real time, and spots people roughly as well as humans can while making half as many mistakes as existing systems. That could make the difference between a graceful stop and sudden, scary braking.
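Why frame rate matters: the car keeps moving between one detection and the next. A quick back-of-the-envelope check, assuming a city speed of 50 km/h (our assumption, not a figure from the researchers):

```python
def metres_per_frame(speed_kmh, fps):
    """Distance a car covers between two consecutive detector outputs."""
    speed_ms = speed_kmh * 1000.0 / 3600.0  # km/h -> m/s
    return speed_ms / fps

for fps in (2, 4):
    print(f"{fps} fps at 50 km/h: {metres_per_frame(50, fps):.2f} m between frames")
```

At 2 fps the car travels roughly 7 metres between looks at the road, and about 3.5 metres at 4 fps, so every extra frame per second buys real stopping distance.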

Chip promises brain-like AI in your mobile devices

There's one big, glaring reason why you don't see neural networks in mobile devices right now: power. Many of these brain-like artificial intelligence systems depend on large, many-core graphics processors to work, which just isn't practical for a device meant for your hand or wrist. MIT has a solution in hand, though. It recently revealed Eyeriss, a chip that promises neural networks in very low-power devices. Although it has 168 cores, it uses about a tenth of the power of the graphics processors you find in phones -- you could stuff one into a phone without worrying that it will kill your battery.

Google's latest partnership could make smartphones smarter

Google has signed a deal with Movidius to include the chipmaker's Myriad 2 MA2450 processor in future devices. The search giant first worked with Movidius back in 2014 for its Project Tango devices, and it's now licensing the company's latest tech to "accelerate the adoption of deep learning within mobile devices."

An algorithm can tell if your face is forgettable

Some faces are more memorable than others. The brain processes visual cues to decide whether a face or an image will stay lodged in the memory bank. What if a neural network could be trained to imitate that response? You could then tweak visuals for greater impact. A team of researchers at MIT's Computer Science and Artificial Intelligence Laboratory (CSAIL) has built MemNet, a deep-learning-based algorithm that predicts the "memorability" of your photographs almost as well as the human brain.
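How do you measure "almost as well as the human brain"? One common approach is to rank a set of images by the model's predicted memorability and by memorability scores measured from people, then compute Spearman's rank correlation between the two rankings; the model is compared against how well separate groups of people agree with each other. That MemNet was evaluated exactly this way is our assumption here, and the scores below are made up. The sketch is a plain implementation of the metric itself.

```python
def ranks(values):
    """Rank values from 1..n, giving tied values their average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    r = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1                       # extend the group of tied values
        avg = (i + j) / 2 + 1            # average 1-based rank for the tie group
        for k in range(i, j + 1):
            r[order[k]] = avg
        i = j + 1
    return r

def spearman(a, b):
    """Spearman's rank correlation: the Pearson correlation of the two rankings."""
    ra, rb = ranks(a), ranks(b)
    n = len(a)
    ma, mb = sum(ra) / n, sum(rb) / n
    cov = sum((x - ma) * (y - mb) for x, y in zip(ra, rb))
    va = sum((x - ma) ** 2 for x in ra)
    vb = sum((y - mb) ** 2 for y in rb)
    return cov / (va * vb) ** 0.5

predicted = [0.82, 0.61, 0.75, 0.40]  # hypothetical model scores for four photos
human = [0.79, 0.70, 0.55, 0.52]      # hypothetical measured memorability
print(round(spearman(predicted, human), 3))
```

Using ranks rather than raw scores means the model only has to order photos correctly, not reproduce the exact percentages people achieved.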

You might not have to update next-gen antivirus software

Antivirus and malware protection programs are great, but they have a fatal flaw: they can only protect your PC from threats they know about. It's not a terrible problem, but it gives attackers a brief window of opportunity to harm your computer every time they tweak their code. If a PC hasn't nabbed the latest update to its protection suite, it's vulnerable -- but it doesn't have to be that way. Researchers are using deep learning algorithms that can recognize new malicious code on their own, without database updates.
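The weakness described above is easy to demonstrate. A signature database stores exact fingerprints of known malware, so changing a single byte produces a fingerprint it has never seen. A learned detector instead scores features of the file, which survive small tweaks. In the sketch below a set of SHA-256 hashes plays the signature database, and a byte-frequency histogram compared by cosine similarity stands in for learned features. That histogram is a deliberately crude, hypothetical substitute for a deep network, and the "malware" bytes are invented.

```python
import hashlib
import math
from collections import Counter

def signature(data):
    """Exact fingerprint, as stored in a classic signature database."""
    return hashlib.sha256(data).hexdigest()

def byte_histogram(data):
    """Normalised frequency of each byte value: a crude stand-in for learned features."""
    counts = Counter(data)
    total = len(data) or 1
    return [counts.get(b, 0) / total for b in range(256)]

def cosine(u, v):
    dot = sum(a * b for a, b in zip(u, v))
    nu = math.sqrt(sum(a * a for a in u))
    nv = math.sqrt(sum(b * b for b in v))
    return dot / (nu * nv) if nu and nv else 0.0

known_bad = b"\x90\x90\xeb\xfe" * 64 + b"evil-payload"   # invented sample
signatures = {signature(known_bad)}
bad_profile = byte_histogram(known_bad)

tweaked = known_bad + b"!"                                # attacker changes the file slightly
benign = b"hello world, this is a normal document."

print(signature(tweaked) in signatures)                   # signature lookup misses the variant
print(cosine(byte_histogram(tweaked), bad_profile))       # feature similarity stays near 1
print(cosine(byte_histogram(benign), bad_profile))        # unrelated file scores low
```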

Apple poaches NVIDIA's artificial intelligence leader

Apple's widely rumored electric car may not be fully autonomous, but it may well have some smarts. The company has hired Jonathan Cohen, who until this month was the director of NVIDIA's deep learning division (deep learning being a form of artificial intelligence). Cohen's LinkedIn profile only mentions that he's working on a nebulous "software" effort at Apple. However, his most recent job at NVIDIA centered around technology like Drive PX, a camera-based autopilot system for cars that can identify and react to specific vehicle types. While there's a chance that Cohen could be working on AI for iOS or the Mac, it wouldn't be surprising if he brought some self-driving features to Cupertino's first car, such as hands-off lane changing or parking. [Image credit: NVIDIA, Flickr]

IBM wires up 'neuromorphic' chips like a rodent's brain

IBM has been working with DARPA's Systems of Neuromorphic Adaptive Plastic Scalable Electronics (SyNAPSE) program since 2008 to develop computing systems that work less like conventional computers and more like the neurons inside your brain. After years of development, IBM has finally unveiled the system to the public as part of a three-week "boot camp" training session for academic and government researchers.
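The article doesn't detail the neuron model on IBM's chips, but neuromorphic hardware of this kind is generally built around spiking neurons, and the textbook example is the leaky integrate-and-fire neuron. The sketch below is that generic model, not IBM's exact design; the leak factor and threshold are arbitrary illustrative values.

```python
def lif_spikes(inputs, leak=0.9, threshold=1.0):
    """Leaky integrate-and-fire neuron.
    Each step, the membrane potential decays by `leak` and adds the input;
    when it crosses `threshold` the neuron emits a spike (1) and resets to 0."""
    potential = 0.0
    spikes = []
    for current in inputs:
        potential = potential * leak + current
        if potential >= threshold:
            spikes.append(1)
            potential = 0.0
        else:
            spikes.append(0)
    return spikes

print(lif_spikes([0.3] * 10))   # steady input: a spike every few steps
print(lif_spikes([0.05] * 10))  # weak input leaks away and never fires
```

Unlike a conventional processor crunching numbers on every clock tick, this neuron only produces output once enough input has built up, and weak input simply fades away.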

Microsoft wants you to teach computers how to learn

As clever as learning computers may be, they only have as much potential as their software. What if you don't have the know-how to program one of these smart systems yourself? That's where Microsoft Research thinks it can help: it's developing a machine teaching tool that will let almost anyone show computers how to learn. So long as you're knowledgeable about your field, you'd just have to plug in the right parameters. A chef could tell a computer how to create tasty recipes, for example, while a doctor could get software to sift through medical records and find data relevant to a new patient.

Military AI interface helps you make sense of thousands of photos

It's easy to find computer vision technology that detects objects in photos, but it's still tough to sift through large collections of them... and that's a big challenge for the military, where finding the right picture could mean taking out a target or spotting a terrorist threat. Thankfully, US armed forces may soon have a way to not only spot items in large image libraries, but to help human observers find them. DARPA's upcoming, artificial intelligence-backed Visual Media Reasoning system both detects what's in a shot and presents it in a simple interface that groups photos and videos together based on patterns. If you want to know where a distinctive-looking car has been, for example, you might only need to look in a single group.
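The article only says the system groups media "based on patterns". A standard way to do that is to turn each image into a feature vector (for instance, from a detection network) and cluster the vectors. The k-means sketch below assumes such vectors already exist; the 2-D "features" are made up, and we don't know which grouping method DARPA's system actually uses.

```python
import math
import random

def kmeans(points, k, iters=20, seed=0):
    """Lloyd's k-means: returns (centroids, cluster index for each point)."""
    rng = random.Random(seed)
    centroids = [list(p) for p in rng.sample(points, k)]  # start from k random points
    assignments = [0] * len(points)
    for _ in range(iters):
        # Assign every point to its nearest centroid
        assignments = [min(range(k), key=lambda c: math.dist(p, centroids[c]))
                       for p in points]
        # Move each centroid to the mean of its members (leave it if the cluster is empty)
        for c in range(k):
            members = [p for p, a in zip(points, assignments) if a == c]
            if members:
                centroids[c] = [sum(dim) / len(members) for dim in zip(*members)]
    return centroids, assignments

# Hypothetical feature vectors: three shots of one distinctive car, three of trucks
names = ["img_01", "img_02", "img_03", "img_04", "img_05", "img_06"]
features = [(0.90, 0.10), (0.85, 0.15), (0.95, 0.05),
            (0.10, 0.90), (0.15, 0.80), (0.05, 0.95)]
_, groups = kmeans(features, k=2)
print(dict(zip(names, groups)))
```

Once the groups exist, an analyst looking for one car only has to browse the cluster it landed in, which is the kind of shortcut the paragraph describes.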

Facebook and Google get neural networks to create art

For Facebook and Google, it's not enough for computers to recognize images... they should create images, too. Both tech firms have just shown off neural networks that automatically generate pictures based on their understanding of what objects look like. Facebook's approach uses two of these networks to produce tiny thumbnail images. The technique is much like what you'd experience if you learned painting from a harsh (if not especially daring) critic. The first network creates pictures from a random vector, while the second checks them for realistic objects and flags the fake-looking shots; over time, the first network learns from that feedback and produces increasingly convincing results. The current output is good enough that 40 percent of the pictures fooled human viewers, and there's a chance that they'll become more realistic with further refinements.
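The painter-and-critic setup described above is a generative adversarial network (GAN). In the standard formulation (Goodfellow et al., 2014), a generator $G$ turns a random vector $z$ into an image, a discriminator $D$ outputs the probability that an image is real, and the two are trained on opposing goals:

```latex
\min_G \max_D \; V(D, G) =
  \mathbb{E}_{x \sim p_{\text{data}}}\big[\log D(x)\big]
  + \mathbb{E}_{z \sim p_z}\big[\log\big(1 - D(G(z))\big)\big]
```

The critic $D$ pushes $V$ up by scoring real photos near 1 and generated ones near 0; the painter $G$ pushes it down by making $D(G(z))$ approach 1, that is, by producing images the critic can't tell from real ones. Facebook's published system builds on this objective, but its exact architecture isn't described here.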

Amazon uses machine learning to show you more helpful reviews

Let's be blunt: Amazon's reviews sometimes suck. Many of them are hasty day-one reactions, others are horribly misinformed and a few are out-and-out fakes. The internet shopping giant thinks it knows how to separate the wheat from the chaff, however. It just launched a new machine learning system that understands which reviews are likely to be the most helpful, and floats them to the top. The artificial intelligence typically prefers reviews that are recent, receive a lot of up-votes or come from verified buyers. Amazon hopes that this will show you opinions that are not only more trustworthy, but also reflect any fixes made since the product launched. In other words, you'll see reviews for the product you're actually likely to get.
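Amazon hasn't published its model, but the three signals named above (recency, up-votes, verified purchase) can be combined into a single ranking score to show the idea. The sketch below is a hand-weighted stand-in, not a learned model: the 180-day half-life, the log damping of votes and the 1.5x verified-buyer multiplier are all hypothetical choices.

```python
import math
from dataclasses import dataclass
from datetime import date

# Hypothetical tuning knobs; Amazon's actual model is not public.
RECENCY_HALF_LIFE_DAYS = 180
VERIFIED_BONUS = 1.5

@dataclass
class Review:
    text: str
    posted: date
    helpful_votes: int
    verified_purchase: bool

def score(review, today):
    """Higher is better: recent, up-voted, verified reviews float to the top."""
    age_days = (today - review.posted).days
    recency = 0.5 ** (age_days / RECENCY_HALF_LIFE_DAYS)  # 1.0 today, 0.5 after one half-life
    votes = 1.0 + math.log1p(review.helpful_votes)        # diminishing returns on votes
    bonus = VERIFIED_BONUS if review.verified_purchase else 1.0
    return votes * recency * bonus

def rank_reviews(reviews, today):
    return sorted(reviews, key=lambda r: score(r, today), reverse=True)

today = date(2015, 7, 1)
reviews = [
    Review("A", date(2013, 7, 1), helpful_votes=50, verified_purchase=False),
    Review("B", date(2015, 6, 1), helpful_votes=5, verified_purchase=True),
    Review("C", date(2015, 6, 25), helpful_votes=0, verified_purchase=False),
]
print([r.text for r in rank_reviews(reviews, today)])
```

Damping the vote count with a logarithm and decaying old reviews keeps a popular two-year-old review from permanently outranking newer ones that describe the product as it ships today.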

Twitter buys a machine learning company to better study your tweets

Twitter thrives on its ability to understand both your tweets and the hot topic of the day, and it needs every bit of help it can get -- including from computers. Accordingly, the social network just snapped up Whetlab, a startup that makes it easier to implement machine learning (aka a form of artificial intelligence). The two companies are shy about what the acquisition means besides an improvement to Twitter's "internal machine learning efforts." However, the likely focus is on highlighting the content that's most relevant to you based on your activity and who you follow, as well as hiding abusive tweets before you have to reach for the "block" option. Whetlab's technology could get the ball rolling on these robotic discovery techniques much faster than before, and give you a custom-tailored Twitter experience that requires little effort on your part.

Computers can tell how much pain you're in by looking at your face

Remember Baymax's pain scale in Big Hero 6? In the real world, machines might not even need to ask whether or not you're hurting -- they'll already know. UC San Diego researchers have developed a computer vision algorithm that can gauge your pain levels by looking at your facial expressions. If you're wincing, for example, you're probably in more agony than you are if you're just furrowing your brow. The code isn't as good at detecting your pain as your parents (who've had years of experience), but it's up to the level of an astute nurse.
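Systems like this typically work from facial action units (AUs), the individual muscle movements catalogued by the Facial Action Coding System. One widely used pain scale built on them is the Prkachin and Solomon Pain Intensity (PSPI) score, which adds up brow lowering, tightening around the eyes, nose wrinkling or lip raising, and eye closure; it captures exactly the wince-versus-furrowed-brow distinction above. Whether the UC San Diego team used PSPI isn't stated in the article, and in a real system the AU intensities would come from a vision model rather than being typed in by hand as they are here.

```python
def pspi(au):
    """Prkachin-Solomon Pain Intensity from FACS action-unit intensities.
    AU4 (brow lowerer), AU6 (cheek raiser), AU7 (lid tightener),
    AU9 (nose wrinkler) and AU10 (upper lip raiser) are scored 0-5;
    AU43 (eyes closed) is 0 or 1. The total ranges from 0 to 16."""
    return (au.get("AU4", 0)
            + max(au.get("AU6", 0), au.get("AU7", 0))
            + max(au.get("AU9", 0), au.get("AU10", 0))
            + au.get("AU43", 0))

furrowed_brow = {"AU4": 2}                                   # hypothetical intensities
wince = {"AU4": 3, "AU6": 3, "AU7": 4, "AU10": 2, "AU43": 1}
print(pspi(furrowed_brow), pspi(wince))
```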

Google hopes to count the calories in your food photos

Be careful about snapping pictures of your obscenely tasty meals -- one day, your phone might judge you for them. Google recently took the wraps off Im2Calories, a research project that uses deep learning algorithms to count the calories in food photos. The software spots the individual items on your plate and creates a final tally based on the calorie info available for those dishes. If it doesn't properly guess what you're eating, you can correct it yourself and improve the system over time. Ideally, Google will also draw from the collective wisdom of foodies to create a truly smart dietary tool -- enough experience and it could give you a solid estimate of how much energy you'll have to burn off at the gym.
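The tallying step described above is simple once the dishes have been recognized: look up each one and add it up, letting the user overrule a wrong guess. The sketch assumes the detector's output is a list of dish labels; the calorie table holds illustrative numbers, not real nutrition data, and the function and table names are hypothetical.

```python
# Illustrative kcal-per-serving values, not real nutrition data.
CALORIES = {"burger": 540, "fries": 365, "salad": 150, "soda": 150}

def tally(detected_items, corrections=None):
    """Sum calories for the dishes a detector found on the plate.
    corrections maps a wrongly detected label to the one the user says is right.
    Dishes missing from the table count as 0 here; a real tool would flag them."""
    corrections = corrections or {}
    total = 0
    for item in detected_items:
        item = corrections.get(item, item)   # apply the user's fix, if any
        total += CALORIES.get(item, 0)
    return total

detected = ["burger", "fries", "soda"]
print(tally(detected))                       # detector's guess as-is
print(tally(detected, {"fries": "salad"}))   # user corrects a misidentified side
```

In the research project, corrections like these are meant to improve the system over time; in this sketch they only fix the current tally.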