neuralnetwork

Latest

The Canadian AI that writes holiday chiptunes

Is there no industry safe from economic encroachment by automation and machine learning? A team from the University of Toronto has built a digital Irving Berlin that can generate Christmas carols from a single image.

MIT's AI figured out how humans recognize faces

It appears machines may already be catching up to humans, at least in the world of computational biology. A team of researchers at the MIT-based Center for Brains, Minds and Machines (CBMM) found that the system they designed to recognize faces had spontaneously come up with a step that can identify portraits regardless of the rotation of the face. This adds credence to a previous theory about how humans recognize faces that was based on studies of MRIs of primate brains.

Light-based neural network could lead to super-fast AI

It's one thing to create computers that behave like brains, but it's something else to make them perform as well as brains. Conventional circuitry can only operate so quickly as part of a neural network, even if it's sometimes much more powerful than standard computers. However, Princeton researchers might have smashed that barrier: they've built what they say is the first photonic neural network. The system mimics the brain with "neurons" that are really light waveguides cut into silicon substrates. As each of those nodes operates in a specific wavelength, you can make calculations by summing up the total power of the light as it's fed into a laser -- and the laser completes the circuit by sending light back to the nodes. The result is a machine that can solve a mathematical differential equation 1,960 times faster than a typical processor.

Google AI experiments help you appreciate neural networks

Sure, you may know that neural networks are spicing up your photos and translating languages, but what if you want a better appreciation of how they function? Google can help. It just launched an AI Experiments site that puts machine learning to work in a direct (and often entertaining) way. The highlight by far is Giorgio Cam -- put an object in front of your phone or PC camera and the AI will rattle off a quick rhyme based on what it thinks it's seeing. It's surprisingly accurate, fast and occasionally chuckle-worthy.

Google expands mission to make automated translations suck less

What started with Mandarin Chinese is expanding to English, French, German, Japanese, Korean, Portuguese and Turkish, as Google has increased the number of languages its Neural Machine Translation (NMT) system handles. "These represent the native languages of around one-third of the world's population, covering more than 35 percent of all Google Translate queries," according to The Keyword blog. The promise here is that because NMT uses the context of the entire sentence, rather than translating individual words on their own, the results will be more accurate, especially as time goes on, thanks to machine learning. For a comparison of the two methods, check out the GIF embedded below.

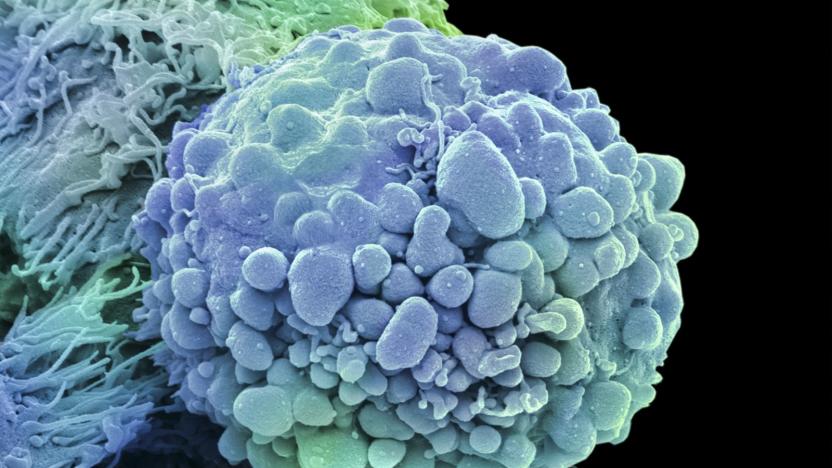

NVIDIA helps the US build an AI for cancer research

Microsoft isn't the only big-name tech company using AI to fight cancer. NVIDIA is partnering with the US Department of Energy and the National Cancer Institute to develop CANDLE (Cancer Distributed Learning Environment), an AI-based "common discovery platform" that aims for 10 times faster cancer research on modern supercomputers with graphics processors. The hardware promises to rapidly accelerate neural networks that can both spot crucial data and speed up simulations.

MIT makes neural nets show their work

Turns out, the inner workings of neural networks really aren't any easier to understand than those of the human brain. But thanks to research coming out of MIT's Computer Science and Artificial Intelligence Laboratory (CSAIL), that could soon change. Researchers there have devised a means of making these digital minds not just provide the correct answer, classification or prediction, but also explain the rationale behind their choices. And with this ability, researchers hope to bring a new weapon to bear in the fight against breast cancer.

Google's AI created its own form of encryption

Researchers from the Google Brain deep learning project have already taught AI systems to make trippy works of art, but now they're moving on to something potentially darker: AI-generated, human-independent encryption. According to a new research paper, Googlers Martín Abadi and David G. Andersen have willingly allowed three test subjects -- neural networks named Alice, Bob and Eve -- to pass each other notes using an encryption method they created themselves.

Google's arty filters one-up Prisma by mixing various styles

Basic filters are soooo last year, and Google knows it. It's all about turning your mundane pet photos into works of art now, spearheaded by popular mobile app Prisma. Since it launched earlier this year, Prisma's added an offline mode and video support (albeit after a me-too competitor), but just a few days ago Facebook revealed it's also working on style transfer tech for live video -- though Prisma says it's going to beat the social network to the punch in a matter of days. Now, Google has revealed it's going one better, detailing a system that can mix and match multiple art styles to create photo and video filters that are altogether unique.

AI-powered security cameras recognize small details faster

San Mateo-based Movidius may still be in the process of getting bought up by Intel, but the company's latest deal will put its low-power AI and computer vision platform into more than just DJI drones and Google VR headsets. The company announced today that the Movidius Myriad 2 Vision Processing Unit (VPU) will soon power a new generation of Hikvision smart surveillance cameras capable of recognizing everything from suspicious packages to distracted drivers.

Google's memory-boosted AI could help you navigate the subway

Modern neural networks are good at making quick, reactive decisions and recognizing patterns, but they're not very skilled at the careful, deliberate thought that you need for complex choices. Google's DeepMind team may have licked that problem, however. Its researchers have developed a memory-boosted neural network (a "differentiable neural computer") that can create and work with sophisticated data structures. If it has a map of the London Underground, for example, it could figure out the quickest path from stop to stop or tell you where you'd end up after following a route sequence.
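To make the route-finding task concrete, here is a minimal sketch of how a conventional program would answer the "path from stop to stop" question. It uses an ordinary breadth-first search, not a differentiable neural computer, and it finds the route with the fewest stops rather than the quickest one. The station names and connections in `TOY_NETWORK` are hypothetical and do not describe the real London Underground.

```python
from collections import deque

# Hypothetical toy network (not real transit data):
# each station maps to the stations directly connected to it.
TOY_NETWORK = {
    "A": ["B", "C"],
    "B": ["A", "D"],
    "C": ["A", "D"],
    "D": ["B", "C", "E"],
    "E": ["D"],
}


def fewest_stops(network, start, goal):
    """Breadth-first search: return a path with the fewest stops, or None."""
    queue = deque([[start]])  # each queue entry is a full path so far
    visited = {start}
    while queue:
        path = queue.popleft()
        station = path[-1]
        if station == goal:
            return path
        for neighbor in network.get(station, []):
            if neighbor not in visited:
                visited.add(neighbor)
                queue.append(path + [neighbor])
    return None  # goal is unreachable from start


print(fewest_stops(TOY_NETWORK, "A", "E"))  # ['A', 'B', 'D', 'E']
```

The point of the research, as described above, is that the network learns to produce answers like this from examples instead of being handed an explicit search procedure.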

Brain-like memory gets an AI test drive

Humanity just took one step closer to computers that mimic the brain. University of Southampton researchers have demonstrated that memristors, or resistors that remember their previous resistance, can power a neural network. The team's array of metal-oxide memristors served as artificial synapses to learn (and re-learn) from "noisy" input without intervention, much like you would. And since the memristors will remember previous states when turned off, they should use much less power than conventional circuitry -- ideal for Internet of Things devices that can't afford to pack big batteries.

AI can help you find a programming job

Artificial intelligence isn't just helping you work more effectively... it can help you find work, too. Source{d} is running a job service that matches programmers with employers by using a "deep neural network" to scan open source code for relevant qualities. And it's not just about understanding whether or not you can write well in a given language, either. The AI can even look for coding styles that match the methods of a given company, so you may land a position simply by fitting in more gracefully than anyone else.

Google releases massive visual databases for machine learning

It seems like we hear about a new breakthrough using machine learning nearly every day, but the work behind it isn't easy. In order to fine-tune algorithms that recognize and predict patterns in data, you need to feed them massive amounts of already-tagged information to test and learn from. For researchers, that's where two recently released archives from Google will come in. Joining other high-quality datasets, Open Images and YouTube-8M provide millions of annotated links for researchers to train their processes on.

Photo editor uses neural networks to airbrush like a pro

Most people think Photoshop is a magical tool that can change reality, but it does require a skilled artist for decent results. Using neural networks, however, University of Edinburgh researcher Andrew Brock has built an uncanny image editing app that can transform someone's entire hairstyle with just the stroke of a brush.

Google's Chinese-to-English translations might now suck less

Mandarin Chinese is a notoriously difficult language to translate to English, and for those who rely on Google Translate to decipher important information, machine-based tools simply aren't good enough. All that is about to change, as Google today announced it has implemented a new learning system in its web and mobile translation apps that will bring significantly better results.

SwiftKey for Android is now powered by a neural network

From today, the popular keyboard app SwiftKey will be powered by a neural network. The latest version of the app combines the features of its Neural Alpha, released last October, and its regular app in order to serve better predictions. It's the first major change to the main SwiftKey app since Microsoft acquired the London-based company earlier this year.

Understanding why the new SwiftKey is going to be better than what came before it requires a little effort, but the real-world benefits are definitely tangible. See, the regular SwiftKey app has, since its inception, used a probability-based language algorithm based on the "n-gram" model for predictions. At its core, the system read the last two words you've written, checked them against a large database and picked three words it thought might come next, in order of probability.

That two-word constraint is a serious problem for predicting what a user is trying to say. If I were to ask you to guess the word that comes after the fragment "It might take a," the first suggestion you come up with is unlikely to be "look." But with a two-word prediction engine, it's only looking at "take a," and "look" is the first suggestion. There had to be a better solution. Simply upping the number of words it looks at is impractical -- the database grows exponentially with every word you add -- so SwiftKey's initial solution was to boost its n-gram engine with less fallible, personalized data. If you regularly use phrases, SwiftKey uses that data to improve predictions. And you could also link social media and Gmail accounts for better predictions.
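The two-word lookup described above can be sketched in a few lines. This is only an illustration of the general n-gram idea, not SwiftKey's actual engine: the function names `train_trigram_model` and `predict_next` and the tiny `TOY_CORPUS` are invented for the example, and a real keyboard would draw on a far larger database.

```python
from collections import Counter, defaultdict

# Hypothetical training text, invented purely for illustration.
TOY_CORPUS = [
    "take a look at this",
    "take a look at that",
    "please take a look",
    "take a break now",
    "take a break soon",
    "it might take a while",
]


def train_trigram_model(corpus):
    """Count which word follows each pair of consecutive words."""
    model = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for first, second, third in zip(words, words[1:], words[2:]):
            model[(first, second)][third] += 1
    return model


def predict_next(model, text, k=3):
    """Return up to k candidate next words, most frequent first.

    Only the last two words of `text` are consulted, which is the
    constraint the article describes.
    """
    words = text.lower().split()
    if len(words) < 2:
        return []
    context = tuple(words[-2:])
    return [word for word, _ in model.get(context, Counter()).most_common(k)]


model = train_trigram_model(TOY_CORPUS)
print(predict_next(model, "It might take a"))  # ['look', 'break', 'while']
```

Because the lookup sees only "take a," the word "look" comes out on top even though "It might" makes "while" the more natural continuation. Widening the context to three or four words would help, but the table of possible contexts grows with every word added, which is the scaling problem the article mentions.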

Neural networks are powerful thanks to physics, not math

When you think about how a neural network can beat a Go champion or otherwise accomplish tasks that would be impractical for most computers, it's tempting to attribute the success to math. Surely it's those algorithms that help them solve certain problems so quickly, right? Not so fast. Researchers from Harvard and MIT have determined that the nature of physics gives neural networks their edge.

Google AI builds a better cucumber farm

Artificial intelligence technology doesn't just have to solve grand challenges. Sometimes, it can tackle decidedly everyday problems -- like, say, improving a cucumber farm. Makoto Koike has built a cucumber sorter that uses Google's TensorFlow machine learning technology to save his farmer parents a lot of work. The system uses a camera-equipped Raspberry Pi 3 to snap photos of the veggies and send the shots to a small TensorFlow neural network, where they're identified as cucumbers. After that, it sends images to a larger network on a Linux server to classify the cucumbers by attributes like color, shape and size. An Arduino Micro uses that info to control the actual sorting, while a Windows PC trains the neural network with images.

Google's using neural networks to make image files smaller

Somewhere at Google, researchers are blurring the line between reality and fiction. Tell me if you've heard this one, Silicon Valley fans -- a small team builds a neural network for the sole purpose of making media files teeny-tiny. Google's latest experiment isn't exactly the HBO hit's Pied Piper come to life, but it's a step in that direction: using trained computer intelligence to make images smaller than current JPEG compression allows.