neuralnetwork


Prisma's arty photo filters now work offline

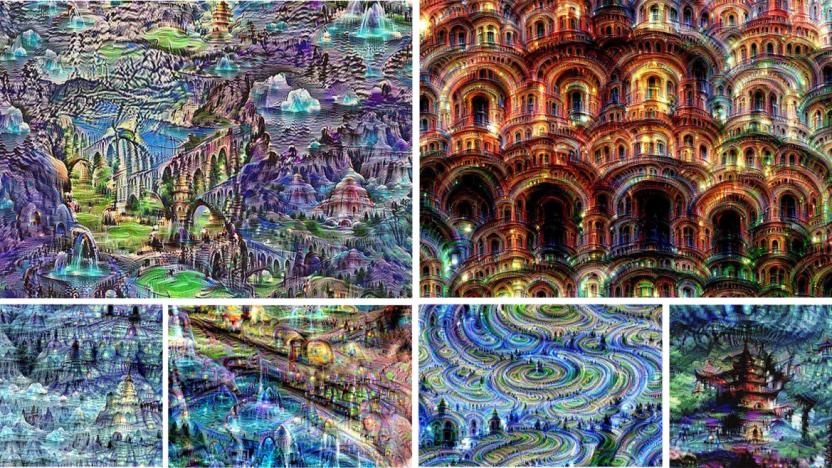

There's a lot going on behind the curtain with Prisma, the app that turns your banal photos into Lichtenstein- or Van Gogh-esque artworks. The app actually sends your cat photo to its servers, where a neural network does the complex transformation. Soon, though, that will no longer be necessary. "We have managed to implement neural networks to smartphones, which means users will no longer need an internet connection to turn their photos into art pieces," the company says. Only half of Prisma's styles will be available offline at first (16 total), but others will be added in the "near future."

Prisma's neural net-powered photo app arrives on Android

When Prisma Labs said you wouldn't have to wait long to use its Android app outside of the beta test, it wasn't joking around. The finished Prisma app is now readily available on Google Play, giving anyone a chance to see what iOS users were excited about a month ago. Again, the big deal is the use of cloud-based machine learning to turn humdrum photos into hyper-stylized pieces of art -- vivid brush strokes and pencil lines appear out of nowhere. Give it a shot if you don't think your smartphone's usual photo filters are enough.

Twitter-bot plasters creepy smiles on celebrities' faces

Not all Twitter bots are racist -- some are genuinely creepy (but in the best possible way). Take @smilevector, an algorithm created by New Zealand neural network researcher Tom White. If you submit a photo of your favorite celebrity in a glum or neutral pose, it'll plaster a bizarre, "I just ate a child" kind of grin on their face. According to its creator, the bot "uses a generative neural network to add or remove smiles from images it finds in the wild," as well as from photos submitted to it.

Computers learn to predict high-fives and hugs

Deep learning systems can already detect objects in a given scene, including people, but they can't always make sense of what people are doing in that scene. Are they about to get friendly? MIT CSAIL's researchers might help. They've developed a machine learning algorithm that can predict when two people will high-five, hug, kiss or shake hands. The trick is to have multiple neural networks predict different visual representations of people in a scene and merge those guesses into a broader consensus. If the majority foresees a high-five based on arm motions, for example, that's the final call.
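The "majority rules" step described above can be sketched as a simple plurality vote. This is a minimal illustration, not MIT CSAIL's code: it assumes each network has already produced one action label, and the function name `consensus` and the label strings are hypothetical.

```python
from collections import Counter

# Hypothetical labels for the four interactions mentioned in the story.
ACTIONS = {"high-five", "hug", "kiss", "handshake"}

def consensus(predictions):
    """Return the action that the most networks predicted (plurality vote).

    `predictions` holds one label per network. Ties go to the label that
    appears first, because Counter.most_common orders equal counts by
    first occurrence.
    """
    if not predictions:
        raise ValueError("need at least one prediction")
    unknown = set(predictions) - ACTIONS
    if unknown:
        raise ValueError(f"unknown labels: {unknown}")
    label, _count = Counter(predictions).most_common(1)[0]
    return label

# Five hypothetical networks, three of which foresee a high-five.
votes = ["high-five", "hug", "high-five", "handshake", "high-five"]
print(consensus(votes))  # high-five
```

A real system would more likely combine scores or probabilities rather than hard labels, but the hard-label vote shows the idea of merging several guesses into one final call.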

Machine-vision algorithms help craft realistic portraits from sketches

Sketching a person's face is difficult even for the most talented of artists. But while it's an arduous task for humans, computers haven't perfected it yet, either. That's where machine-vision algorithms come in.

This short film was written by a sci-fi-hungry neural network

Remember when we played with Google's DeepDream neural network to create trippy visuals that featured a whole lot of dogs? The creators behind the short film Sunspring do. Instead of Google's tool, however, they turned to a neural network named "Jetson" to do all the heavy lifting. The result? A bizarre nine minutes that you'll remember for quite some time.

Google's new tools let anyone create art using AI

Google doesn't just want to dabble in using AI to create art -- it wants you to make that art yourself. As promised, the search giant has launched its Magenta project to give artists tools for bringing machine learning to their creations. The initial effort focuses on open-source infrastructure for producing audio and video that, ideally, heads off in unexpected directions while keeping the better traits of human-made art.

Google's 'Magenta' project will see if AIs can truly make art

Google's next foray into the burgeoning world of artificial intelligence will be a creative one. The company has previewed Magenta, a new effort to teach AI systems to generate music and art. It'll launch officially on June 1st, but Google gave attendees at the annual Moogfest music and tech festival a preview of what's in store. As Quartz reports, Magenta comes from Google's Brain AI group, which is responsible for many uses of AI in Google products like Translate, Photos and Inbox. It builds on previous efforts in the space, using TensorFlow (Google's open-source library for machine learning) to train computers to create art. The goal is to answer the questions: "Can machines make music and art? If so, how? If not, why not?"

Google's neural network is now writing sappy poetry

After binge-reading romance novels for the past few months, Google's neural network is suddenly turning into the kid from English Lit 101. The reading assignment was part of Google's plan to make its app sound more conversational, but the follow-up assignment, taking what it learned and writing some poetry, turned out a little more sophomoric.

Computer vision is key to Amazon Prime Air drone deliveries

For all of Amazon's grand plans for delivery drones, it still needs to solve problems we take for granted with traditional couriers: namely, how to drop off your latest order without destroying anything (including the UAV itself) during transit and landing. That's where advanced computer vision from Jeff Bezos' new team of Austria-based engineers comes in, according to The Verge. The group invented methods for reconstructing geometry from images and recognizing environmental objects in context, giving the drones the ability to differentiate between, say, a swimming pool and your back patio. Both are flat surfaces, but only one will leave your PlayStation VR headset waterlogged after drop-off.

Google's neural network is binge-reading romance novels

The Big G wants its app to be more conversational, so it's feeding a neural network with steamy sex scenes and hot encounters. According to Buzzfeed News, the network has been devouring a collection of 2,865 romance novels over the past few months, with saucy titles like Fatal Desire and Jacked Up. It seems to be working, too: it was able to write sentences resembling passages in the books during the researchers' tests. While the AI now has what it takes to become an erotic novelist, the team's real goal is to use its newly acquired conversational tone in the Google app.

Artificial intelligence now fits inside a USB stick

Movidius chips have been showing up in quite a few products recently. Movidius is the company that helps DJI's latest drone avoid obstacles and FLIR's new thermal camera automatically spot people trapped in a fire, all through deep learning via neural networks. It also signed a deal with Google to integrate its chips into as-yet-unannounced products. Now, the chip designer has a product it says will bring the capacity for powerful deep learning to everyone: a USB accessory called the Fathom Neural Compute Stick.

Microsoft beats Google to offline translation on iOS

Microsoft updated its Translator app to support offline translation on Android back in February, and it's just added the same feature to the iOS version. Like the Android app, the translation works by way of deep learning. Behind the scenes, a neural network trained on millions of phrases does the heavy lifting, and Microsoft claims the offline translations are of "comparable" quality to the online ones. Your mileage will apparently "vary by language and topic," but even an adequate translation is probably worth it when you're saving on data costs abroad.

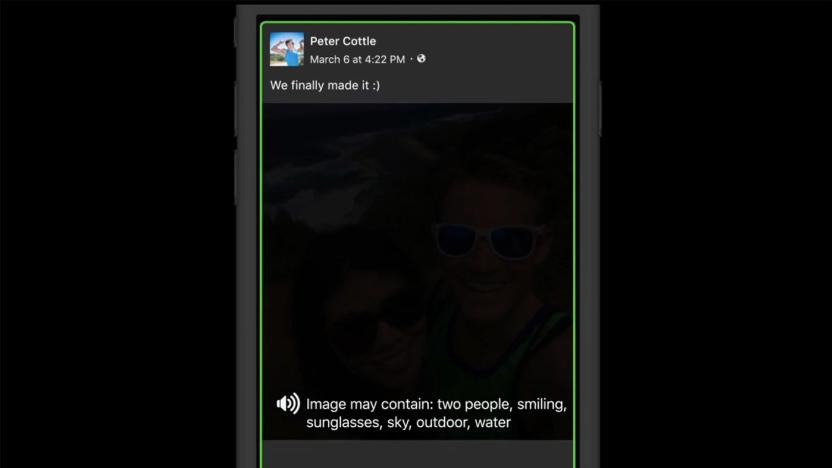

Facebook's tool for blind users can describe News Feed photos

Facebook has launched a new tool for iOS that can help blind, English-speaking users make sense of all the photos people post on the social network. It's called automatic alternative text, and it can give a basic description of a photo's contents for anyone who's using a screen reader. In the past, screen readers could only tell visually impaired users that there was a photo in the status update they were viewing. With this new tool in place, they can rattle off the elements that the company's object recognition technology detects in the images. For instance, they can now tell users that they're looking at a friend's photo that "may contain: tree, sky, sea."
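Turning object-recognition output into the "may contain" phrase a screen reader speaks could look roughly like the sketch below. It assumes the recognizer returns `(label, confidence)` pairs; the function name `alt_text`, the 0.8 threshold and the `"photo"` fallback are all hypothetical, not Facebook's actual values or code.

```python
def alt_text(detections, threshold=0.8):
    """Build a screen-reader description from (label, confidence) pairs.

    Only labels at or above the (hypothetical) confidence threshold are
    kept, so uncertain guesses aren't read aloud. If nothing qualifies,
    fall back to a generic (hypothetical) "photo" description.
    """
    labels = [label for label, score in detections if score >= threshold]
    if not labels:
        return "photo"
    return "may contain: " + ", ".join(labels)

# Hypothetical recognizer output for a beach snapshot.
detections = [("tree", 0.95), ("sky", 0.90), ("sea", 0.85), ("dog", 0.30)]
print(alt_text(detections))  # may contain: tree, sky, sea
```

The "may contain" wording hedges the description, which suits a classifier that can be wrong. The threshold trades completeness against the risk of reading out objects that aren't there.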

This is what it looks like when a neural net colorizes photos

We've seen the horrific results of Google's servers taking acid and interpreting photos with DeepDream, but what happens when a neural network does something altogether less terrifying with snapshots? It'll go all Ted Turner and colorize black-and-white images with what it thinks are the right chroma values, based on analyzing countless similar photos. At least, that's what a team of University of California, Berkeley researchers experimented with in their paper Colorful Image Colorization.

Google AI finally loses to Go world champion

At last, humanity is on the scoreboard. After three consecutive losses, Go world champion Lee Sedol has beaten Google's DeepMind artificial intelligence program, AlphaGo, in the fourth game of their five-game series. DeepMind founder Demis Hassabis notes that the AI lost thanks to its delayed reaction to a slip-up: it slipped on the 79th move, but didn't realize the extent of its mistake (and thus adapt its playing style) until the 87th move. The human win won't change the results of the challenge -- Google is donating the $1 million prize to charity rather than handing it to Lee. Still, it's a symbolic victory in a competition that some had expected AlphaGo to completely dominate.

Google's DeepMind AI beats Go world champion in first match

Google's DeepMind artificial intelligence has done what many thought it couldn't: beat a grandmaster at the ancient Chinese strategy game Go. The "AlphaGo" program forced its opponent, 33-year-old 9-dan professional Lee Sedol, to resign three and a half hours into the first game of their five-game match. While DeepMind has defeated a Go champion before, this is the first time a machine has beaten a world champion.

Google neural network tells you where photos were taken

It's easy to identify where a photo was taken if there's an obvious landmark, but what about landscapes and street scenes where there are no dead giveaways? Google believes artificial intelligence could help. It just took the wraps off of PlaNet, a neural network that relies on image recognition technology to locate photos. The code looks for telltale visual cues such as building styles, languages and plant life, and matches those against a database of 126 million geotagged photos organized into 26,000 grid cells. It could tell that you took a photo in Brazil based on the lush vegetation and Portuguese signs, for instance. It can even guess the locations of indoor photos by using other, more recognizable images from the album as a starting point.
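Organizing geotagged photos into grid cells turns "where was this taken?" into a classification problem: each training photo's coordinates become a cell label, and the network's most probable cell becomes the guess. The sketch below is a simplified assumption. It uses a uniform 10-degree latitude/longitude grid (PlaNet's real cells are adaptive and far finer), and the names `cell_id`, `cell_center` and `predict_location` are hypothetical.

```python
CELL_DEG = 10.0                  # hypothetical uniform cell size, in degrees
ROWS = int(180 / CELL_DEG)       # latitude bands (18)
COLS = int(360 / CELL_DEG)       # longitude bands (36)

def cell_id(lat, lon):
    """Map a geotag to an integer cell index, used as the training label."""
    row = min(int((lat + 90.0) // CELL_DEG), ROWS - 1)   # clamp lat = 90
    col = min(int((lon + 180.0) // CELL_DEG), COLS - 1)  # clamp lon = 180
    return row * COLS + col

def cell_center(cid):
    """Return the (lat, lon) center of a cell."""
    row, col = divmod(cid, COLS)
    return (-90.0 + (row + 0.5) * CELL_DEG, -180.0 + (col + 0.5) * CELL_DEG)

def predict_location(cell_probs):
    """Pick the most probable cell from a classifier's output and return its center."""
    best = max(range(len(cell_probs)), key=cell_probs.__getitem__)
    return cell_center(best)

# A geotag near Rio de Janeiro becomes label 229 for training...
print(cell_id(-22.9, -43.2))          # 229
# ...and a classifier confident in that cell maps back to its center.
probs = [0.0] * (ROWS * COLS)
probs[229] = 0.9
print(predict_location(probs))        # (-25.0, -45.0)
```

Coarser cells are easier to classify but give vaguer answers. That trade-off is why finer, data-dependent cells make sense when there are millions of photos to learn from.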

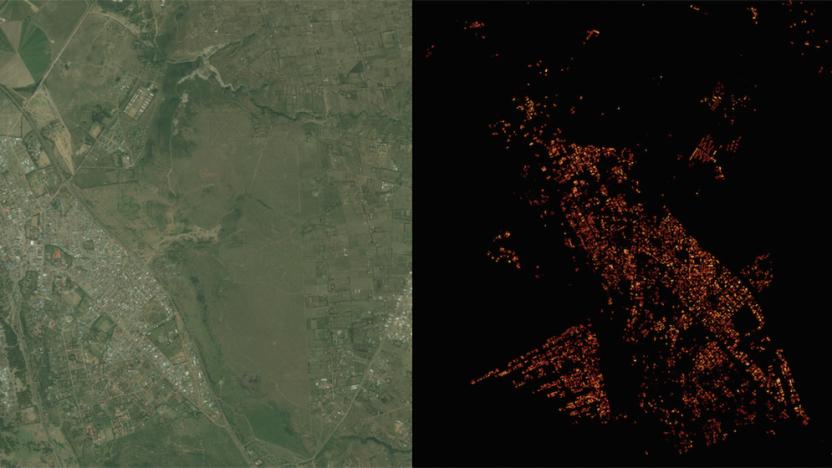

Facebook created a super-detailed population density map

Facebook's quest to get the world online is paying some unexpected dividends. Its Connectivity Lab is using image recognition technology to create population density maps that are much more accurate (to within 10m) than previous data sets -- where earlier examples are little more than blobs, Facebook shows even the finer aspects of individual neighborhoods. The trick was to modify the internet giant's existing neural network so that it could quickly determine whether or not buildings are present in satellite images. Instead of spending ages mapping every last corner of the globe, Facebook only had to train its network on 8,000 images and set it loose.
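One way a per-image "are there buildings here?" classifier can feed a density map is to spread a region's known population over only the tiles that contain buildings. The story doesn't spell out this step, so treat the sketch as an assumption: the census total as input, the 0.5 threshold and the function name `density_map` are all hypothetical.

```python
def density_map(building_probs, census_total, threshold=0.5):
    """Spread a region's population evenly over tiles that contain buildings.

    building_probs: per-tile probability of buildings, as a classifier
                    might output (hypothetical input).
    census_total:   population of the whole region (assumed known).
    Returns a per-tile population estimate; empty tiles get 0.
    """
    has_building = [p >= threshold for p in building_probs]
    n = sum(has_building)
    if n == 0:
        return [0.0] * len(building_probs)
    share = census_total / n
    return [share if b else 0.0 for b in has_building]

# Four tiles, two of which the classifier thinks contain buildings.
print(density_map([0.9, 0.1, 0.7, 0.2], 1000))  # [500.0, 0.0, 500.0, 0.0]
```

Compared with spreading people across an entire region, zeroing out empty tiles is what turns "blobs" into neighborhood-level detail. A finer tile grid gives finer maps.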

Hiker with head-mounted cameras taught drones to fly through forests

Researchers in Switzerland have developed a drone that can navigate forest trails in search of missing hikers. According to EPFL, around 1,000 people get lost in Swiss forests every year and often need to be rescued. Rather than enlisting a search party, which is limited by the number of warm bodies on hand, a fleet of drones could cover the main trails with ease. Unfortunately, while getting a drone to fly through dense forests was reasonable enough, letting it navigate the territory on its own was another thing altogether.