uncanny valley


NVIDIA helps bring more lifelike avatars to chatbots and games

NVIDIA has unveiled tools that could see realistic AI avatars come to more apps and games.

NVIDIA created a toy replica of its CEO to demo its new AI avatars

NVIDIA has advanced its AI voice and avatar technology in uncanny ways. You could talk to assistants that look like real people — or toys.

Disney robot with human-like gaze is equal parts uncanny and horrifying

Disney researchers have built a robot with a highly realistic gaze -- and a horrifying look that could haunt your dreams.

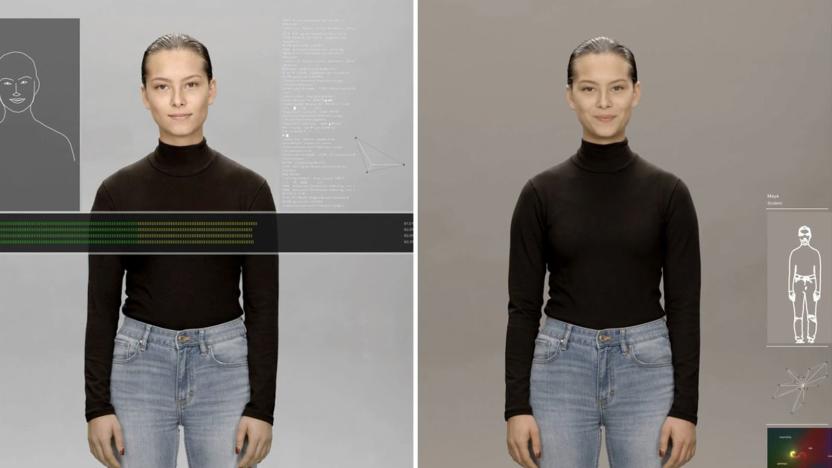

Samsung sheds light on its 'artificial human' project

Samsung has been drumming up hype for its Neon 'artificial human' project, and now it's clearer what that initiative entails. Project lead Pranav Mistry has posted a teaser effectively confirming that Neon is nothing less than an effort to create lifelike avatars. The digital beings are based on "captured data," but can generate their own expressions, movements and sayings in multiple languages. While the static image doesn't reveal much more than that, some recent discoveries help fill in the gaps.

AI avatars of Chinese authors could soon narrate audiobooks

The Chinese search engine Sogou isn't stopping at AI news anchors. The company has created "lifelike" avatars of two Chinese authors, and it plans to have them narrate audiobooks in video recordings. According to the BBC, Sogou used AI, text-to-speech technology and video clips from the China Online Literature+ conference to create avatars of authors Yue Guan and Bu Xin Tian Shang Diao Xian Bing.

Why putting googly eyes on robots makes them inherently less threatening

At the start of 2019, supermarket chain Giant Food Stores announced it would begin operating customer-assisting robots -- collectively dubbed Marty -- in 172 East Coast locations. These autonomous machines may navigate their respective stores using a laser-based detection system, but they're also outfitted with a pair of oversize googly eyes. This is to "[make] it a bit more fun," Giant President Nick Bertram told Adweek in January, and to "celebrate the fact that there's a robot."

Five questions for the man who created a robot documentarian

We've spilled buckets of digital ink on headless horse bots, uncanny humanoids and the coming of the robot apocalypse, but there's a softer, more emotional side to these machines. Social robots, as they're called, are less mechanized overlords and more emotional-support automatons, providing companionship as well as utility. Robots like these are forcing us to consider how we interact with the technology we've created. Under the direction of artist/roboticist Alexander Reben and filmmaker Brent Hoff, a fleet of precious cardboard BlabDroids set out to explore the shifting boundaries of human-robot interaction. These tiny, wheeled machines aren't automated playthings, but serious documentarians seeking an answer to a deceptively simple question: "Can you have a meaningful interaction with a machine?" We'll dive deeper into the topic at Expand this weekend, but in the meantime, here's a short Q&A with Reben on an incredibly complex topic.

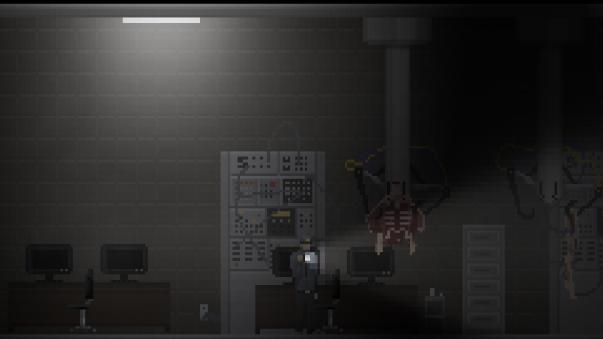

Horror game Uncanny Valley can't hold onto its flashlight

You're Tom, a security guard at a corporate facility deep in the mountains, and you have the night shift. To help pass the time, you grab a flashlight and explore the cavernous building. You walk down dark hallways, through meat-packing rooms and offices with anatomical diagrams on the walls – and eventually, you end up in places you shouldn't be, with things you wish you'd never seen. Uncanny Valley is a survival horror, exploration and adventure game from Slovenian studio Cowardly Creations, due out around Halloween for PC and Mac. Survival is key in Uncanny Valley, but it isn't the main mechanic – there aren't many things in the game that kill you. Instead, there's a consequence system that directly impacts the gameplay and storyline. For example, if you fail to escape a group of attackers, Tom will move more slowly throughout the rest of the game, Cowardly Creations says: "Why? Because dying and repeating the same section over and over is tedious and leads to frustration. The game stops being scary if you're angry and just want to rush through it, so we think that adding such a system will still keep the tension while adding a new layer to scariness." Uncanny Valley is in the midst of an Indiegogo campaign seeking €5,000. It just got started this week, and it ends on June 15. [Images: Cowardly Creations]

Sony takes SOEmote live for EverQuest II, lets gamers show their true CG selves (video)

We had a fun time trying Sony's SOEmote expression capture tech at E3; now everyone can try it. As of today, most EverQuest II players with a webcam can map their facial behavior to their virtual personas while they play, whether it's to catch the nuances of conversation or drive home an exaggerated game face. Voice masking also lets RPG fans stay as much in (or out of) character as they'd like. About the only question left for those willing to brave the uncanny valley is when other games will get the SOEmote treatment. Catch our video look after the break if you need a refresher.

Baby robot Affetto gets a torso, still gives us the creeps (video)

It's taken a year to get the sinister tics and motions of Osaka University's Affetto baby head out of our nightmares -- and now it's grown a torso. Walking that still-precarious line between robots and humans, the animated robot baby now has a pair of arms to call its own. The prototype upper body has a babyish looseness to it -- accidentally hitting itself in the face during the demo video -- with around 20 pneumatic actuators providing the movement. The face remains curiously still, although we'd assume the body prototype hasn't been paired with facial motions just yet, which just about keeps it on the right side of adorable. However, the demonstration does include some sinister faceless dance motions. It's right after the break -- you've been warned.

Samsung files patents for robot that mimics human walking and breathing, ratchets up the creepy factor

As much as Samsung is big on robots, it hadn't gone all-out on the idea until a just-published quartet of patent applications. The filings have a robot more directly mimicking a human walk, adjusting the scale to get the appropriate speed without the unnatural, perpetually bent gait of certain peers. To safely get from point A to point B, any path is chopped up into a series of walking motions, and the robot constantly checks its center of gravity to stay upright as it walks uphill or down. All very clever, but we'd say Samsung is almost too fond of the uncanny valley: one patent has rotating joints coordinate to simulate the chest heaves of human breathing. We don't know if the company will ever put the patents to use; these could be just feverish dreams of one-upping Honda's ASIMO at its own game. But if it does, we could be looking at Samsung-made androids designed like humans rather than for them.

MIT unveils computer chip that thinks like the human brain, Skynet just around the corner

It may be a bit on the uncanny valley side of things to have a computer chip that can mimic the human brain's activity, but it's still undeniably cool. Over at MIT, researchers have unveiled a chip that mimics how the brain's neurons adapt to new information (a process known as plasticity), which could help in understanding assorted brain functions, including learning and memory. The silicon chip contains about 400 transistors and can simulate the activity of a single brain synapse -- the space between two neurons that allows information to flow from one to the other. Researchers anticipate this chip will help neuroscientists learn much more about how the brain works, and it could also be used in neural prosthetic devices such as artificial retinas. Moving into the realm of "super cool things we could do with the chip," MIT's researchers have outlined plans to model specific neural functions, such as the visual processing system. Such systems could be much faster than digital computers: where a digital computer might take hours or days to simulate a simple brain circuit, the chip, which works on an analog method, could be even faster than the biological system itself. In other news, the chip will gladly handle next week's grocery run, since it knows which foods are good for you better than you ever could.

PIGORASS quadruped robot baby steps past AIBO's grave (video)

Does the uncanny valley extend to re-creations of our four-legged friends? We'll find out soon enough if Yasunori Yamada and his University of Tokyo engineering team manage to get their PIGORASS quadruped bot beyond its first unsteady hops and into a full-on gallop. Developed as a means of analyzing animals' musculoskeletal systems for use in biologically inspired robots, the team's cyborg critter gets its locomotion on via a combo of CPU-controlled pressure sensors and potentiometers. It may move like a bunny (for now), but each limb's been designed to function independently in an attempt to simulate a simplified neural system. Given a bit more time and tweaking (not to mention a fine, faux fur coating), we're pretty sure this wee bitty beastie'll scamper its way into the homes of tomorrow. Check out the lil' fella in the video after the break.

Japan creates Frankenstein pop idol, sells candy

Sure, Japan's had its fair share of holographic and robotic pop idols, but they always seem to wander a bit too far into the uncanny valley. Might a composite pop star fare better? Nope, still creepy -- but at least it's a new kind of creepy. Eguchi Aimi, a fictional idol created for a Glico candy ad, is composed of the eyes, ears, nose, and other facial features of girls from AKB48, a massive (over 50 members) all-female pop group from Tokyo. Aimi herself looks pretty convincing, but the way she never looks away from the camera makes our skin crawl ever so slightly. Check out the Telegraph link below to see her pitch Japanese sweets while staring through your soul.

Emoti-bots turn household objects into mopey machines (video)

Some emotional robots dip deep into the dark recesses of the uncanny valley, where our threshold for human mimicry resides. Emoti-bots, on the other hand, manage to skip the creepy human-like pitfalls of other emo-machines, instead employing household objects to ape the most pathetic of human emotions -- specifically dejection and insecurity. Sure, it sounds sad, but the mechanized furniture designed by a pair of MFA students is actually quite clever. Using a hacked Roomba and an Arduino, the duo created a chair that reacts to your touch and wanders aimlessly once your rump has disembarked. They've also employed Nitinol wires, a DC motor, and a proximity sensor to make a lamp that seems to tire with use. We prefer our lamps to look on the sunny side of life, but for those of you who like your fixtures forlorn, the Emoti-bots are now on display at Parsons in New York and can be found moping about in the video after the break.

Microsoft shows off prototype avatar that will haunt your dreams

Microsoft's chief research and strategy officer Craig Mundie wants to show you the haunting bridge his team has built over the uncanny valley. Employing Kinect hardware and custom PC software, the research team at Microsoft has created an unnervingly realistic new avatar that can handle text-to-speech when combined with a script and can recognize the words in any order. "This is a way to create a synthetic model of people that will be acceptable to them when they would look at them on a television or in an Avatar Kinect kind of scenario," Mundie told USA Today in a video interview. "There's no reason that we couldn't do that in real time by feeding the information that we get from a Kinect sensor, including its audio input and its 3D modeling, spacial representation, and couple that to the body and the gesture recognition in order to create a full body avatar, that has photo realistic features and full facial animation," he added. This impressive (if somewhat terrifying) demo is still very much in the prototype phase, however, and Mundie said it would be "some time before we see it show up in products." We're just hoping those first "products" aren't T-1000s.

The Daily Grind: How realistic do you like your avatars?

From the highly detailed characters in EVE Online to the beautifully impressionistic avatars in LOVE, there's a wide variety of avatar types available in MMO games. Whether you use your avatar purely for humorous results as the above EVE Online pilot did, attempt to create a character that looks at least somewhat like you, or are out to create a completely foreign fantasy being to role-play, choice abounds these days. Character creators range from automatically generated with no choice to insanely complex and detailed -- and everywhere in between. So, with the sheer number of options out there, this morning we thought we'd ask: which do you prefer? Do you like your avatar so realistic that it's bordering on the uncanny valley? Perhaps you prefer more middle-of-the-road options like Guild Wars or other games in that general neighborhood -- not too realistic, not too cartoony? Or do you prefer to go as far into your imagination as the character creator will let you with avatars such as the ones in LOVE or World of Warcraft's stylistic, non-human offerings? Every morning, the Massively bloggers probe the minds of their readers with deep, thought-provoking questions about that most serious of topics: massively online gaming. We crave your opinions, so grab your caffeinated beverage of choice and chime in on today's Daily Grind!

Fake robot baby provokes real screams (video)

Uncanny valley, heard of it? No worries, you're knee-deep in it right now. It's the revulsion you feel toward robots, prostheses, or zombies that try, but don't quite manage, to duplicate their human models. As a robot becomes more humanlike, our emotional response generally grows more positive and empathetic, until the likeness gets close but not quite right and that warmth plunges into unease. That's the valley Osaka University's AFFETTO wades into: the goal was to create a robot modeled after a young child that could produce realistic facial expressions, endearing it to a human caregiver in a more natural way. Impressive, sure, but we're not ready to let it suckle from our teat just yet.

Cambridge developing 'mind reading' computer interface with the countenance of Charles Babbage (video)

For years now, researchers have been exploring ways to create devices that understand the nonverbal cues that we take for granted in human-human interaction. One of the more interesting projects we've seen of late is led by Professor Peter Robinson at the Computer Laboratory at the University of Cambridge, who is working on what he calls "mind-reading machines," which can infer mental states of people from their body language. By analyzing faces, gestures, and tone of voice, it is hoped that machines could be made to be more helpful (hell, we'd settle for "less frustrating"). Peep the video after the break to see Robinson using a traditional (and annoying) satnav device, versus one that features both the Cambridge "mind-reading" interface and a humanoid head modeled on that of Charles Babbage. "The way that Charles and I can communicate," Robinson says, "shows us the future of how people will interact with machines." Next stop: uncanny valley!

President Obama takes a minute to chat with our future robot overlords (video)

President Obama recently took some time out of the APEC Summit in Yokohama to meet with a few of Japan's finest automatons, and as always he was one cool cat. Our man didn't even blink when confronted with this happy-go-lucky HRP-4C fashion robot, was somewhat charmed by the Paro robotic seal, and more than eager to take a seat in one of Yamaha's personal transport robots. But who wouldn't be, right? See him in action after the break.